The Unjournal: reporting some progress

(Link: our main page; see also our post "Unjournal: Call for participants and research".)

- Our group (curating articles and evaluations) is now live on Sciety HERE.

- The first evaluated research project (paper) has now been posted HERE.

First evaluation: Denkenberger et al.

Our first evaluation is of "Long Term Cost-Effectiveness of Resilient Foods for Global Catastrophes Compared to Artificial General Intelligence Safety" by David Denkenberger, Anders Sandberg, Ross Tieman, and Joshua M. Pearce, published in the International Journal of Disaster Risk Reduction.

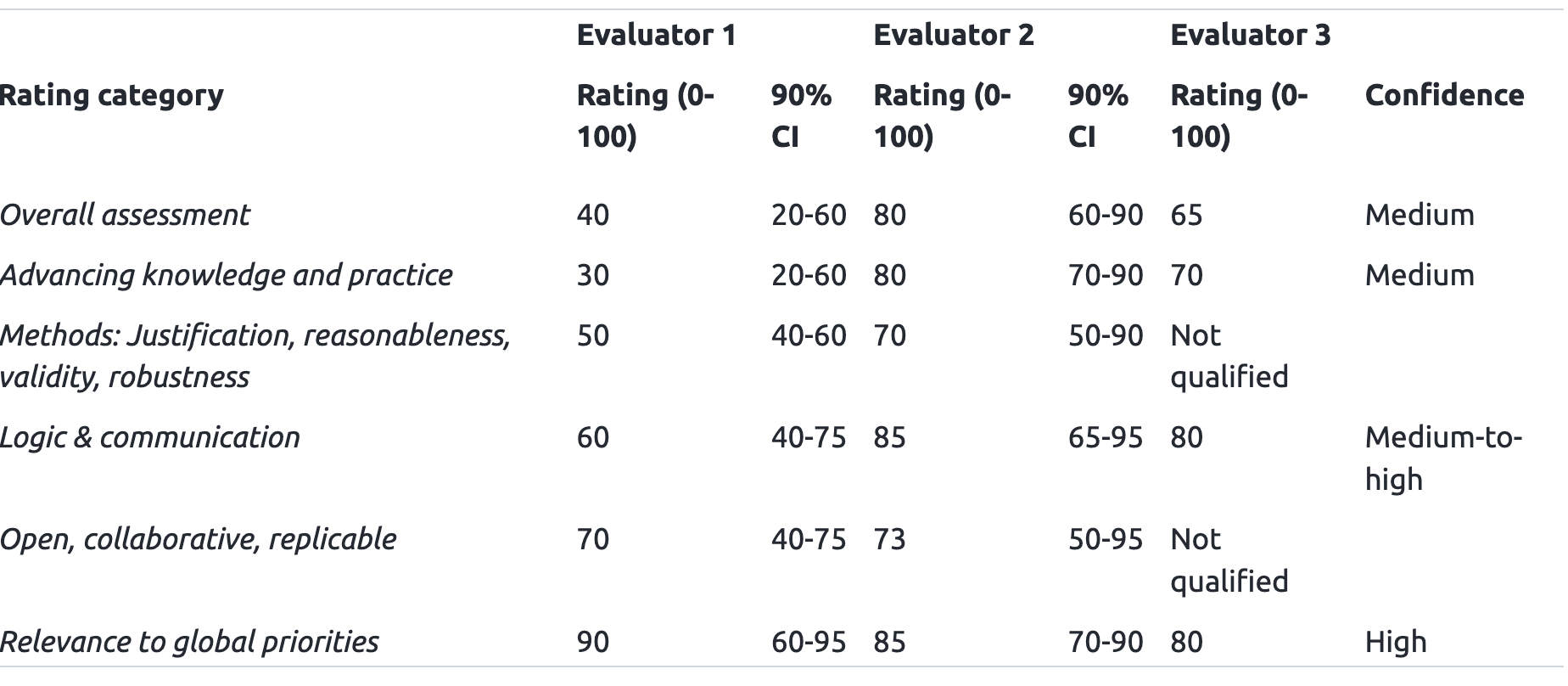

These three reports and ratings (see a sample below) come from three experts with what we believe to be complementary backgrounds (note: these evaluators agreed to be identified rather than remain anonymous):

- Alex Bates: An award-winning cost-effectiveness analyst with some background in considering long-term and existential risks

- Scott Janzwood: A political scientist and Research Director at the Cascade Institute [1]

- Anca Hanea: A senior researcher and applied probabilist based at the Centre of Excellence for Biosecurity Risk Analysis (CEBRA) at the University of Melbourne. She has done prominent research into eliciting and aggregating (expert) judgments, working with the RepliCATS project.

Overall, these evaluations were fairly involved. They engaged with specific details of the paper as well as its overall themes, directions, and implications. While largely positive about the paper, the evaluators did not seem to pull punches. Below are some examples of their feedback and evaluations (direct quotes).

Extract of evaluation content

Bates:

I’d be surprised if I ever again read a paper with such potential importance to global priorities.

My view is that it would be premature to reallocate funding from AI Risk reduction to resilient food on the basis of this paper alone. I think the paper would have benefitted from more attention being paid to the underlying theory of cost-effectiveness motivating the investigation. Decisions made in places seem to have multiplied uncertainty which could have been resolved with a more consistent approach to analysis.

The most serious conceptual issue which I think needs to be resolved before this can happen is to demonstrate that ‘do nothing’ would be less cost-effective than investing $86m in resilient foods, given that the ‘do nothing’ approach would potentially include strong market dynamics leaning towards resilient foods.

Janzwood:

[T]he authors’ cost-effectiveness model, which attempts to decrease uncertainty about the potential uncertainty-reducing and harm/likelihood-reducing 'power' of resilient food R&D and compare it to R&D on AGI safety, is an important contribution.

It would have been useful to see a brief discussion of some of these acknowledged epistemic uncertainties (e.g., the impact of resilient foods on public health, immunology, and disease resistance) to emphasize that some epistemic uncertainty could be reduced by exactly the kind of resilient food R&D they are advocating for.

Hanea:

The structure of the models is not discussed. How did [they] decide that this is a robust structure (no sensitivity to structure performed as far as I understood)?

It is unclear if the compiled data sets are compatible. I think the quantification of the model should be documented better or in a more compact way.

The authors also responded in detail. Some excerpts:

The evaluations provided well thought out and constructively critical analysis of the work, pointing out several assumptions which could impact findings of the paper while also recognizing the value of the work in spite of some of these assumptions.

Thank you for highlighting this point [relating to discount rate], this is an important consideration that would make valuable future work.

They also respond to many specific points, e.g.:

In reality we anticipate that there are a myriad of ways in which nuclear risk and AGI would interact with one another. …

I do not attempt to summarize the overall evaluations and responses here. If you are interested in helping with this, or in communicating the synthesis, please DM me.

Follow-up and AMA

We have roughly ten further papers in the evaluation pipeline, with an emphasis on potentially high-impact work published in the NBER working paper series. We expect two more to be released soon.

AMA: I'll take this milestone as an opportunity to answer any questions you may have about The Unjournal, and I'll try to respond within 48 hours.

(Again, see https://bit.ly/eaunjournal for our Gitbook project space, which should answer some questions.)

[1] His research "focuses on how scientists and policymakers collaborate to address global catastrophic risks such as climate change, pandemics, and other emerging threats ... strategies and tools that we can use to make better decisions under deep uncertainty". He was previously a Visiting Researcher at the Future of Humanity Institute (FHI).

I'm one of the evaluators involved in the project (Alex Bates). I wanted to mention that it was an absolute pleasure to work with Unjournal, and a qualitative step above any other journal I've ever reviewed for. I'd definitely encourage people to get involved if they are on the fence about it!

Hi David, I'm excited about this! It certainly seems like a step in the right direction. A few vague questions that I'm hoping you'll divine my meaning from:

In the first stage, that is the idea. In the second stage, I propose to expand into other tracks.

Background: The Unjournal is trying to do a few things, and I think there are synergies between them (see the Theory of Change sketch here):

1. Make academic research evaluation better, more efficient, and more informative (while focusing on the 'impactful' part of academic research)

2. Bring more academic attention and rigor to impactful research

3. Have academics focus on more impactful topics, and report their work in a way that makes it more impactful

For this to have the biggest impact (changing the systems and leveraging this), we need academics and academic reward systems to buy into it. It needs to be seen as rigorous, serious, ambitious, and practical. We need powerful academics and institutions to back it. But even for the more modest goal of getting academic experts to (publicly) evaluate niche EA-relevant work, it's still important to be seen as serious, rigorous, credible, etc. That's why we're aiming for the 'rigor stuff' for now, and will probably want to continue this, at least as a flagship tier/stream, into the future.

But it seems like a great amount of EA work is shallower, or more weirdly formatted, than a working paper. For example, Happier Lives Institute reports are probably a bit below that level of depth (and we spend a lot more time than many others), and GiveWell's CEAs have no dedicated write-ups. Would either of these research projects be suitable for the Unjournal?

I would need to look into specific cases. My guess is that your work largely would be suitable, at least (1) if and when we launch the second stream, and (2) for the more in-depth work where you might think "I could submit this to a conventional journal, but it's too much hassle".

GiveWell's CEAs have no dedicated write-ups.

I think they should have more dedicated write-ups (or other presentation formats), and perhaps more transparent formats, with clear, reasoning-transparent justifications for their choices, robustness calculations, etc. Their recent contest goes in the right direction, though.

In terms of 'weird formats', it depends what you mean by weird. We are eager to evaluate work that is not the typical 'frozen pdf prison' but is presented (e.g.) as a website offering foldable explanations for reasoning transparency. In particular, we welcome open-science-friendly dynamic documents where the code (or calculations) producing each result can be clearly unfolded, with a clear data pipeline, and where all results can be replicated. This would be an improvement over current journal formats: less prone to error, easier to check, easier to follow, easier to re-use, etc.

I agree that it's a challenge, but I think that this is a change whose time has come. Most academics I've talked to individually think public evaluation/rating would be better than the dominant (and inefficient) traditional journal system, but everyone thinks "I can't go outside of this system on my own". I outline how I think we might be able to crack this (collective action problem and inertia) HERE. (I should probably expand on this.) And I think that we (non-university-linked EA researchers and funders) might be in a unique position to help solve this problem. I also think there would be considerable rewards and influence in 'being the ones who changed this'. But still ...

I agree this would be valuable, and might be an easier path to pursue. And there may indeed be some tradeoffs (time/attention). I want to continue to consider, discuss, and respond to this in more detail.

For now, some off-the-cuff justifications for the current path:

(I'll respond to your final point in another comment, as it's fairly distinct)

Reporting the right outcomes and CEAs, more reasoning-transparency, offering tools/sharing data and models, etc.

Thanks, great reply!

I'm hoping these will be very useful if we scale up enough. I also want to work to make these scores more concretely grounded and tied to specific benchmarks and comparison groups. And I hope to do a better job of operationalizing specific predictions[1] and of using well-justified tools for aggregating individual evaluation ratings into reliable metrics (e.g., potentially partnering with initiatives like RepliCATS).

I'm not sure precisely what you mean by 'control for the depth'. The ratings we currently elicit are multidimensional, and depth should be captured in some of these. But these were basically a considered first pass; I suspect we can do better at coming up with a well-grounded and meaningful set of categories to rate. (And I hope to discuss this further and incorporate the ideas of particular meta-science researchers and initiatives.)

[1] For things like citations, measures of impact, replicability, votes X years on ('how impactful was this paper?'), etc., perhaps leveraging prediction markets.

This is an absolutely amazing project, thanks so much! Do you intend to evaluate 'canonical' non-peer-reviewed EA work?

Thanks and good question.

Short answer: probably a little way down the road, after our pilot phase ends.

We're currently mainly focused on getting academic and academic-linked researchers involved. Because of this, we are leaning towards targeting conventionally-prestigious and rigorous academic and policy work that also has the potential to be highly impactful.

In a sense, the Denkenberger paper is an exception to this, in that it is somewhat niche work that is particularly of interest to EAs and longtermists.

Most of the rest of our 'current batch' of priority papers to evaluate are NBER working papers or something of this nature. That aligns with the "to make rigorous work more impactful" part of our mission.

But going forward, we would indeed like to do more of exactly what you are suggesting: bringing academic (and non-EA policy) expertise to EA-driven work. This is the ~"to make impactful work more rigorous" part of our mission. This might be done as part of a separate stream of work; we are still working out the formula.

Thanks again for this fantastic initiative. Here's the official link to the final Denkenberger publication for those interested.

Is there a way to get email alerts whenever a new UnJournal evaluation gets published?

Not at the moment, but we're working on something like an email newsletter. In the meantime, you can follow Unjournal's Sciety Group by clicking the 'follow' button. I think that gives you updates, but you need to have a Twitter account.

By the way, I added an ‘updates’ page to the Gitbook HERE.

I plan to update this every ~fortnight and to integrate it with an email subscription list, RSS, and social media. I'm not sure whether the MailChimp signup is working yet.