The views expressed here are my own, not those of my employers.

Summary

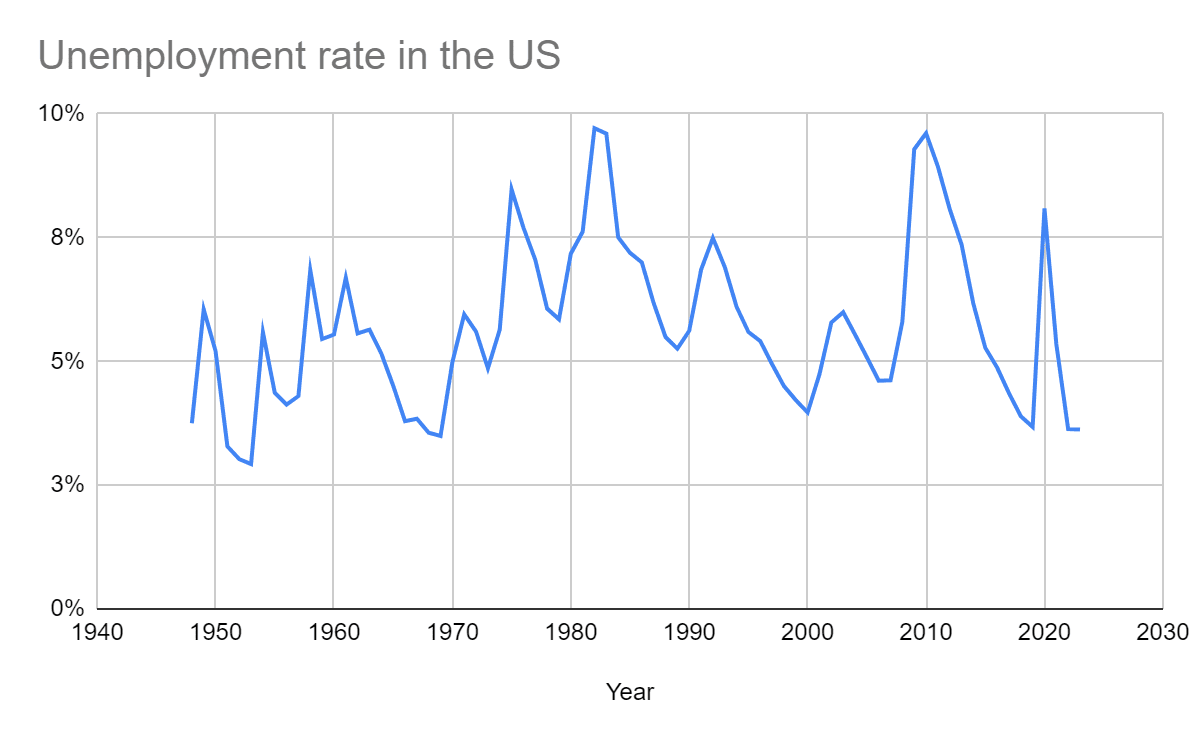

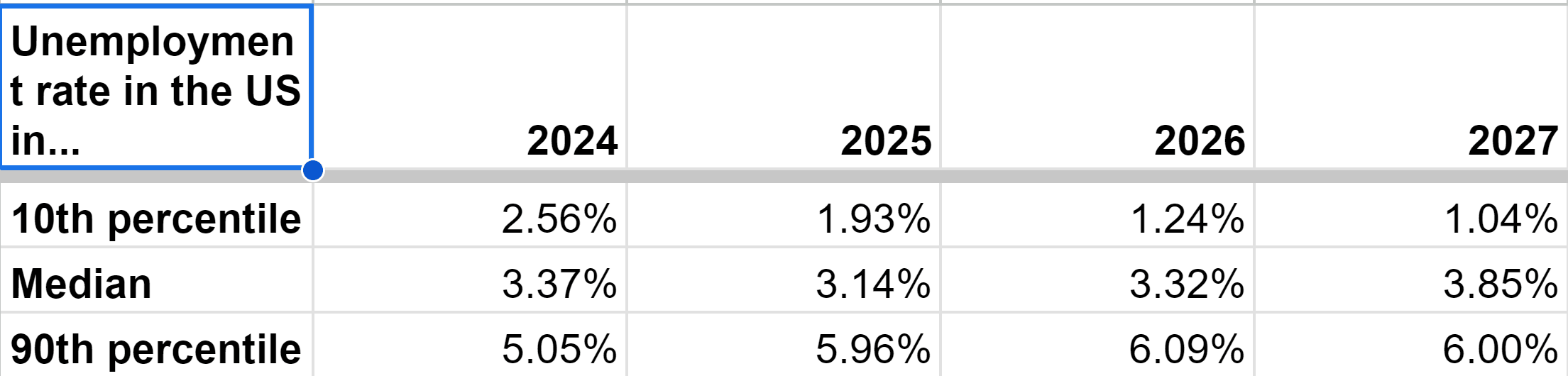

- The 5th percentile, median and 95th percentile annual unemployment rate in the United States (US) from 1948 to 2023 were 3.54 %, 5.55 % and 9.02 %, respectively.

- There has been a very weak upward trend in the annual unemployment rate in the US: the coefficient of determination (R^2) of its linear regression on the year is 3.54 %.

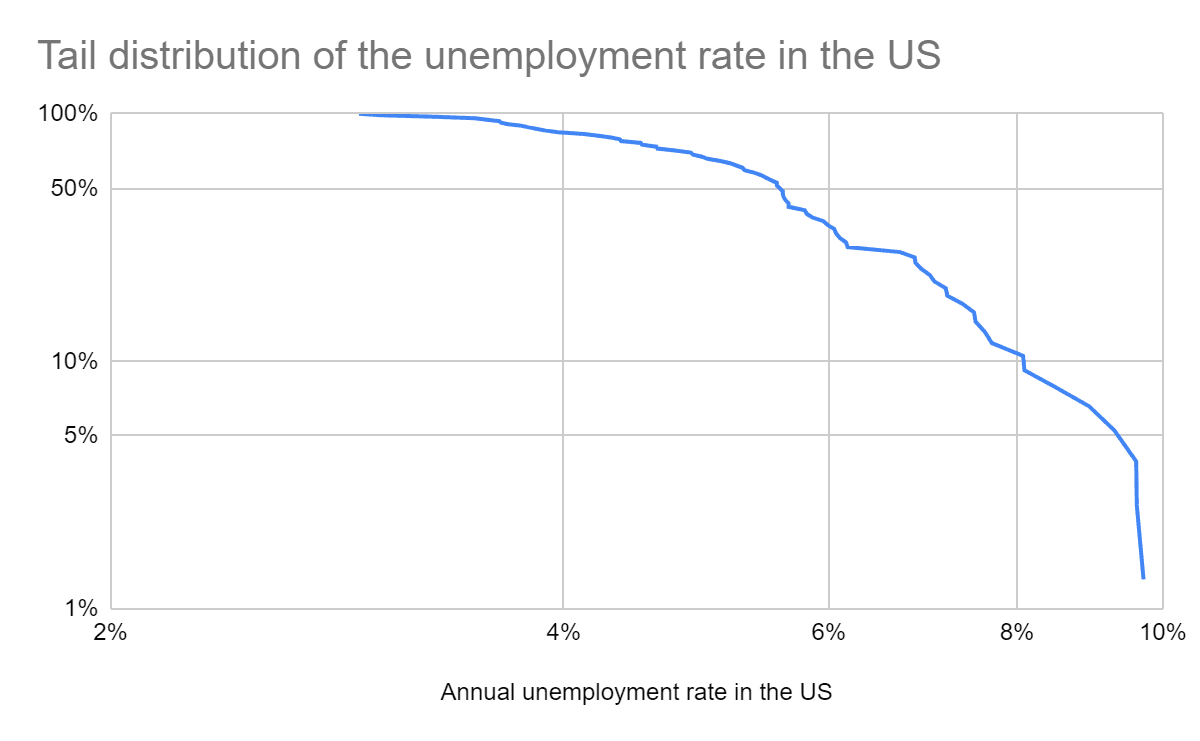

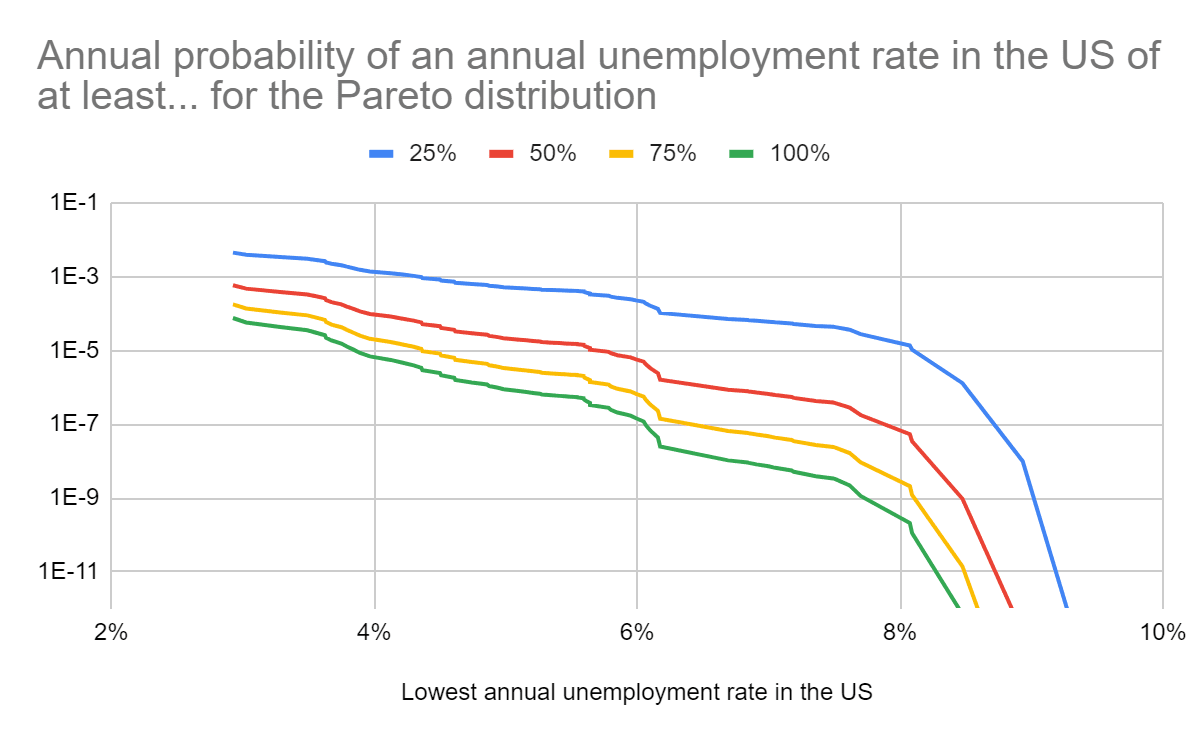

- The tail distribution of the annual unemployment rate in the US bends downwards in a log-log plot. I got a similar pattern for the annual conflict and epidemic/pandemic deaths as a fraction of the global population.

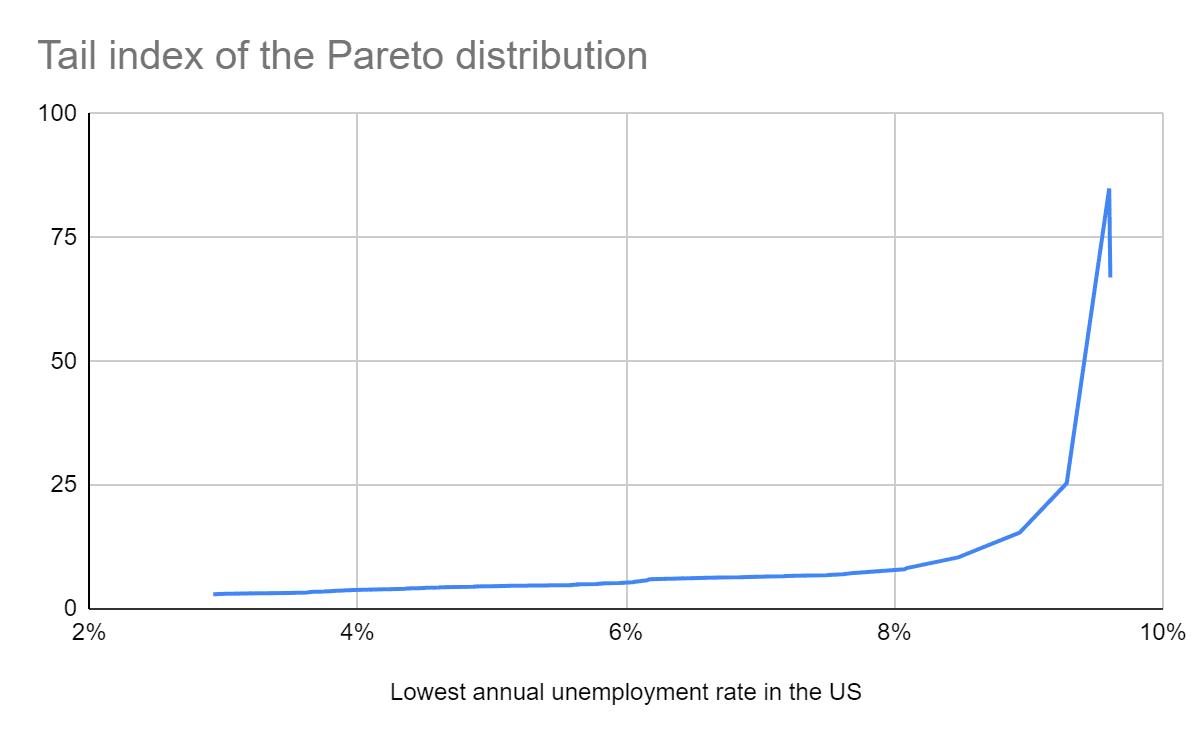

- For the Pareto distribution fitted to the 10.5 % (8) rightmost points of the tail distribution in a log-log plot, the annual probability of the annual unemployment rate in the US being:

- At least 10 % is 2.04 %.

- 100 % is 2.11*10^-10.

- I suspect many in the global catastrophic risk community underweight the outside view outlined in this post, and therefore overestimate the probability of massive unemployment.

Methods

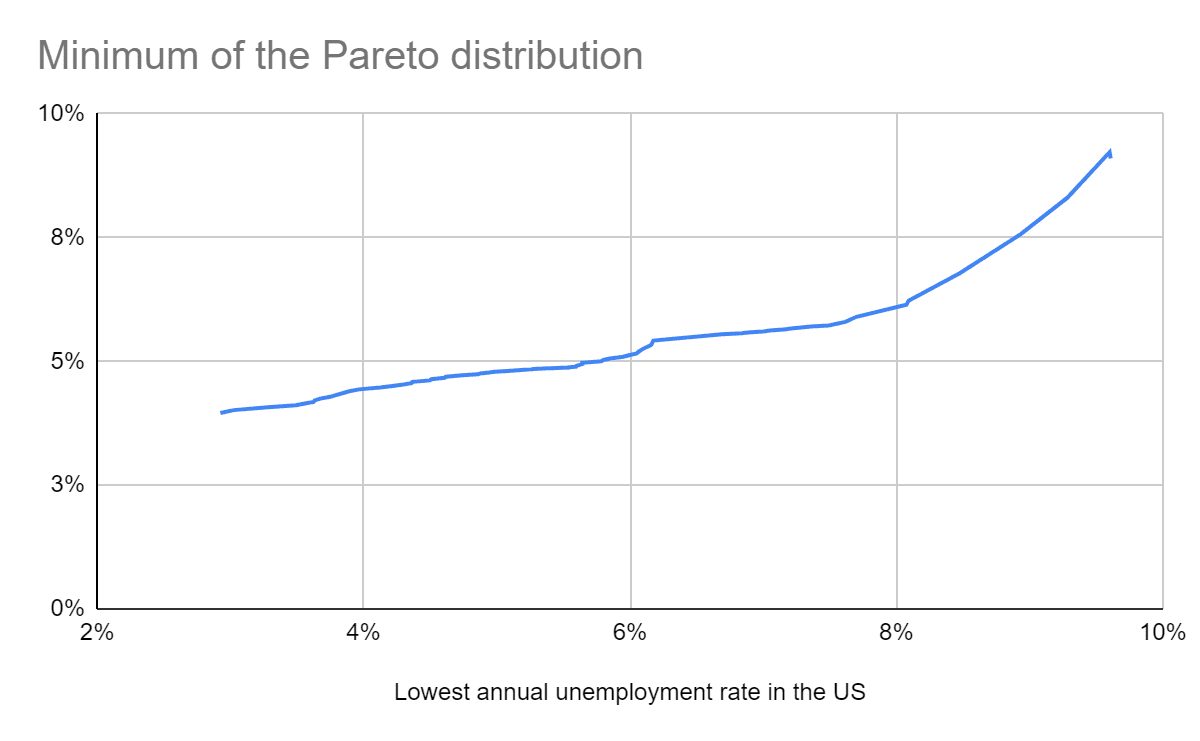

I fit Pareto distributions (power laws) to the 2, 3, … and 76 (= 2023 - 1948 + 1) highest values of the annual unemployment rate in the US from 1948 to 2023. To do this:

- I get the slope and intercept of linear regressions of the logarithm of the above sections of the tail distribution on the logarithm of the annual unemployment rate in the US.

- Since the tail distribution of a Pareto distribution is P(X > x) = (“minimum”/x)^“tail index” for x ≥ “minimum”, ln(P(X > x)) = “tail index”*ln(“minimum”) - “tail index”*ln(x) = “intercept” + “slope”*ln(x). So I determine the parameters of the Pareto distributions from:

- “Tail index” = -“slope”.

- “Minimum” = e^(“intercept”/“tail index”).

Then I obtain the annual probability of an annual unemployment rate in the US of at least 10 %, 25 %, 50 %, 75 % and 100 %. Note that unemployment requires not only not having a job, but also actively looking for one:

Someone in the labor force is defined as unemployed if they were not employed during the survey reference week, were available for work, and made at least one active effort to find a job during the 4-week survey period.

The calculations are in this Sheet.
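The fitting steps above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the code behind the Sheet. It assumes the empirical tail distribution assigns P(X > x) = i/N to the i-th highest of the N annual values, since the exact plotting position is not stated in the post. The names `ols`, `fit_pareto_to_top`, `fit_all` and `exceedance_probability` are hypothetical.

```python
import math


def ols(xs, ys):
    """Ordinary least squares fit of ys = intercept + slope * xs; returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def fit_pareto_to_top(values, k):
    """Fit a Pareto distribution to the k highest values with a log-log linear regression.

    Assumption (hypothetical): the empirical tail probability of the i-th highest
    of N values is i / N.
    """
    n = len(values)
    top = sorted(values, reverse=True)[:k]
    log_x = [math.log(x) for x in top]
    log_p = [math.log((i + 1) / n) for i in range(k)]
    intercept, slope = ols(log_x, log_p)
    tail_index = -slope  # "Tail index" = -"slope"
    minimum = math.exp(intercept / tail_index)  # "Minimum" = e^("intercept"/"tail index")
    return tail_index, minimum


def fit_all(values):
    """Fits to the 2, 3, ..., N highest values, keyed by the number of points used."""
    return {k: fit_pareto_to_top(values, k) for k in range(2, len(values) + 1)}


def exceedance_probability(x, tail_index, minimum):
    """P(X > x) = (minimum / x)^tail_index for x >= minimum, and 1 below the minimum."""
    if x <= minimum:
        return 1.0
    return (minimum / x) ** tail_index
```

Suppose the annual series (in %) is in a hypothetical list `rates`. Then `fit_all(rates)[8]` would give the fit to the 8 rightmost points, and `exceedance_probability(10, *fit_all(rates)[8])` would give that fit's probability of a rate of at least 10 %. Ties among the top values would need extra handling for k = 2, because two identical x values make the regression denominator zero.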

Results

Historical data

Time series

Basic stats

| Statistic | Annual unemployment rate in the US |
|---|---|
| Mean | 5.70 % |
| Minimum | 2.93 % |
| 5th percentile | 3.54 % |
| 10th percentile | 3.71 % |
| Median | 5.55 % |
| 90th percentile | 7.89 % |
| 95th percentile | 9.02 % |
| Maximum | 9.71 % |
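The statistics in the table can be computed from the annual series along these lines. This is a sketch only. It assumes percentiles use linear interpolation between sorted values, as spreadsheet PERCENTILE functions typically do, which may not match the Sheet exactly. The `percentile` and `basic_stats` helpers are hypothetical, and the example input is made up rather than the actual data.

```python
import statistics


def percentile(values, q):
    """q-th percentile (0-100), linearly interpolating between sorted values (assumed method)."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def basic_stats(values):
    """Rows of the basic stats table for a list of annual rates (in %)."""
    return {
        "Mean": statistics.mean(values),
        "Minimum": min(values),
        "5th percentile": percentile(values, 5),
        "10th percentile": percentile(values, 10),
        "Median": statistics.median(values),
        "90th percentile": percentile(values, 90),
        "95th percentile": percentile(values, 95),
        "Maximum": max(values),
    }


print(basic_stats([1, 2, 3, 4, 5]))  # made-up input, not unemployment data
```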

Linear regression on the year

Linear regression of the annual unemployment rate in the US on the year:

| Slope (pp/century) | Intercept (pp) | Coefficient of determination |
|---|---|---|
| 1.39 | -21.9 | 3.54 % |
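As a quick check on the table, the fitted line is rate ≈ -21.9 pp + (1.39 pp/century)*year. An ordinary least squares line with an intercept passes through the point (mean year, mean rate). Its value at the mid-year of 1948 to 2023, 1985.5, should therefore be close to the 5.70 % mean, up to rounding of the reported coefficients. The function name below is hypothetical.

```python
SLOPE_PP_PER_CENTURY = 1.39  # from the table
INTERCEPT_PP = -21.9  # from the table (value of the line at year 0)


def fitted_rate(year):
    """Annual unemployment rate (%) predicted by the linear regression on the year."""
    return INTERCEPT_PP + SLOPE_PP_PER_CENTURY / 100 * year


for year in (1948, 1985.5, 2023):
    print(year, round(fitted_rate(year), 2))
```

The line goes from about 5.18 % in 1948 to about 6.22 % in 2023, a rise of roughly 1 pp over the period. This is consistent with the very weak trend.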

Tail distribution

Tail risk based on Pareto distributions

Discussion

Historical data

The 5th percentile, median and 95th percentile annual unemployment rate in the US from 1948 to 2023 were 3.54 %, 5.55 % and 9.02 %, respectively. The maximum was 9.71 % in 1982.

There has been a very weak upward trend in the annual unemployment rate in the US: the R^2 of its linear regression on the year is 3.54 %.

The tail distribution of the annual unemployment rate in the US bends downwards in a log-log plot. I got a similar pattern for the annual conflict and epidemic/pandemic deaths as a fraction of the global population.

Tail risk

My estimates for the annual probability of an annual unemployment rate in the US of:

- At least 10 % range from 0.105 % to 6.54 %.

- 100 % range from 1.32*10^-88 to 0.00749 %.

For the Pareto distribution fitted to the 10.5 % (8) rightmost points of the tail distribution in a log-log plot, the annual probability of the annual unemployment rate in the US being:

- At least 10 % is 2.04 %.

- 100 % is 2.11*10^-10.
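Both probabilities come from the same Pareto distribution, P(X > x) = (“minimum”/x)^“tail index”, so together they determine its parameters. The sketch below backs them out as a consistency check. It uses only the rounded figures above, so the implied values are approximate, and the function name is hypothetical.

```python
import math


def implied_pareto_parameters(x1, p1, x2, p2):
    """Recover (tail_index, minimum) of P(X > x) = (minimum / x)^tail_index
    from two exceedance probabilities p1 = P(X > x1) and p2 = P(X > x2)."""
    # ln p = tail_index * ln(minimum) - tail_index * ln(x), so the log-log slope is -tail_index.
    tail_index = -math.log(p2 / p1) / math.log(x2 / x1)
    minimum = x1 * p1 ** (1 / tail_index)
    return tail_index, minimum


tail_index, minimum = implied_pareto_parameters(10, 0.0204, 100, 2.11e-10)
print(f"tail index about {tail_index:.2f}, minimum about {minimum:.2f} %")
```

With these inputs, the tail index comes out just under 8 and the minimum at about 6.1 %. A tail index of about 8 means each tenfold increase in the rate cuts the exceedance probability by a factor of about 10^8.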

I believe the rightmost points of the tail should get the most weight when predicting tail risk, which implies trusting lower estimates more. On the other hand, I expect inside view factors related to transformative AI (TAI) to push estimates upwards. All in all, I suspect many in the global catastrophic risk community underweight the outside view outlined in this post, and therefore overestimate the probability of massive unemployment.

Thank you for your reply!

Summary: My main intention in my previous comment was to share my perspective on why relying too much on the outside view is problematic (and, to be fair, that wasn’t clear because I addressed multiple points). While I think your calculations and explanation are solid, the general intuition I want to share is that people should place less weight on the outside view than this article seems to suggest.

I wrote this fairly quickly, so I apologize if my response is not entirely coherent.

Emphasizing the definition of unemployment you use is helpful, and I mostly agree with your model of total AI automation, where no one is necessarily looking for a job.

Regarding your question about my estimate of the median annual unemployment rate: I haven’t thought deeply enough about unemployment to place a bet or form a strong opinion on the exact percentage points. Thanks for the offer, though.

To illustrate the main point in my summary, I want to share a basic reasoning process I'm using.

Assumptions:

Worldview implications of my assumptions:

To articulate my intuition as clearly as possible: the lack of action we’re currently seeing from various stakeholders in addressing the advancement of frontier AI systems seems to be, in part, because they rely too heavily on the outside view for decision-making. While this doesn’t address the crux of your post (but it is what prompted me to write my comment initially), I believe it’s dangerous to place significant weight on an approach that attempts to make sense of developments we have no clear reference classes for. AGI hasn’t happened yet, so I don’t understand why we should lean heavily on historical data to assess such a novel development.

What’s currently happening is that people are essentially throwing their arms up and saying, “Uh, the probabilities are so low for X or Y impact of AGI, so let’s just trust the process.” If people placed more weight on assumptions like those above, or reasoned more from first principles, the situation might look very different. Do you see? My issue is with putting too much weight on the outside view, not with your object-level claims.

I am open to changing my mind on this.