The development of AGI could be the most important event of our lifetimes. Ensuring that AI is developed and deployed safely could be the most impactful thing many of us can work on. However, the development of Frontier AI systems is happening in only a handful of companies. This leaves the rest of us to wonder: How can we influence Frontier AI Companies?

In this post, I will answer this question by describing 15 levers we can use to influence these AI companies. I will only cover levers that directly influence a company and the people within it, and leave aside intermediate variables (e.g. shaping public opinion). For each lever, I’ll add examples, opinions on its impact potential and actors that are suited to pull it.

I think identifying these levers is important for building a strong Theory of Change. In most cases, an intervention to make AI safer needs to influence the developers of these systems. Thus, your pathway to impact should pull on at least one of the 15 levers I describe below.

Exception: There are legitimate interventions for reducing risks from AI that don’t go through AI companies. Some are targeted at making society more resilient to the transformation caused by AI (e.g. work on Gradual Disempowerment). Others could focus on getting other actors to deploy AI systems more responsibly. Furthermore, I’m assuming that AI companies are the leading actors developing powerful AGI. While this is currently the case and I find it likely that it will remain this way, there are scenarios where government-controlled efforts are the leading developers of AI (e.g. a CERN for AI structure).

Limitations: This analysis doesn’t go in depth on any vector and doesn’t analyse their effectiveness. I’ve thought about this for 1 day. I’m confident each of the vectors is a real lever for influence (although they differ in effectiveness), but I might have missed some. The analyses in the “Governance” section are especially likely to have important gaps, as governance is not my area of expertise.

| Lever | Description |
| --- | --- |
| **Direct Work** | Work inside or make direct contributions from the outside |
| Join them | Build and influence things inside the company |
| Build something for them | Create things they can use |
| Collaborate with them | Partner with them |
| **Governance** | Legal and regulatory power |
| Regulate them | Pass laws that force compliance |
| Sue them | Litigation for transparency and behaviour change |
| Set standards for them | Industry norms that become requirements |
| **Improve Decisions** | Shape information and culture |
| Convince them | Provide evidence that changes minds |
| Spread memes to them | Viral ideas that reshape thinking |
| Set social expectations for them | Make safety prestigious, recklessness shameful |
| **Economic** | Control resources and market dynamics |
| Invest in them | Make investment conditional on safety |
| Sell to them | Supplier leverage (chips, compute, data) |
| Buy from them | Customer demands and procurement requirements |
| Shape their market | Competitive pressure on capabilities or safety |
| Change their talent pipeline | Influence who they can hire |
| Insure them | Make insurance cost dependent on safety requirements |

Direct Work

1. Join them

Who? (Potential) employees

Working at the AI companies means you can be in the room where decisions happen. You can play internal politics, make new projects happen or just be in a decision-making position. This gives you sway over crucial decisions about compute allocation, release strategies or research priorities. You can also contribute by implementing solutions and carrying out projects that have a positive impact, for example, by being an individual contributor in a safety team. Lastly, insiders have access to sensitive information that they can leak to governments or the public if necessary. These positions are clearly very impactful as they are close to the action, but also bring risks like accelerating AI development or being corrupted by power/money/social influence (more discussion).

But not everybody can (or should) work in an AI Company. As of 2025, DeepMind, Anthropic and OpenAI have a combined 6600 highly competitive positions. Furthermore, it is important that others put pressure on the companies from the outside, which gives safety-conscious employees leverage to push for change.

2. Build something for them

Who? Researchers, Entrepreneurs, Engineers

AI companies have limited capacity, so they won’t have time for a lot of important things. You can help them by building a tool they can use, inventing a method they can implement or curating a dataset. In this way, you can differentially accelerate good developments of AI.

- Build open-source tools or paid products that the companies can use. For example, you could build a tool for monitoring agent actions that companies can easily deploy.

- Invent new methods that can be implemented inside companies. For example, more robust safeguards or alignment algorithms.

- Build datasets they can use for training or evaluation. AI companies are hungry for data and thus likely to use your curated, high-quality dataset. So this can be a great way to differentially accelerate or measure capabilities you care about.

This lever is open to anyone with the ability to build the appropriate technology. Note that your goal is usage by the companies, so you should also spend significant effort on making sure they are aware of your work and consider implementing it.

3. Collaborate with them

Who? Researchers, Entrepreneurs, Engineers

This is essentially a more direct version of ‘building something they can use’. Collaboration has the advantage that the company is more likely to actually use what you built and that you get some inside information about where you can meaningfully help. On the other hand, you might have to balance honest communication against a good relationship with the company, or the collaboration might be used for safety washing. Examples of collaborators are external safety testers (like METR), data collectors (like Scale AI) or academic researchers. While some research is better done independently of the companies, many contributions benefit from direct interaction with them.

Governance

4. Regulate them

Who? Policy makers, think tanks, advocates, voters

By passing and enforcing laws about AI development, governments can influence AI companies. Many strategies aim to have their impact through increasing the likelihood and effectiveness of AI regulation being passed. Politicians, staffers, think tankers, civil servants, advocates and some technical researchers all aim to influence AI Companies by getting governments to create, change or remove legislation that determines how AI Companies need to or are incentivised to act. For example, the EU AI Act’s Code of Practice is already demanding a range of risk management steps AI companies need to follow. Notable legislative efforts (e.g. SB 53) are underway and could pass in multiple US states.

The country in which the AI company is based clearly has the most leverage for guiding action. But other governments also have leverage if their country is a large market (e.g. the EU), houses important suppliers (e.g. ASML in the Netherlands) or offers huge funding (e.g., the UAE). Thus, the opportunity for regulation is strongest in the US/China, but other countries have some possibilities for light-touch regulation.

5. Sue them

Who? Anybody who has been harmed, Lawyers

Aside from government action, outside actors can also help to enforce regulations through litigation. The most prominent example is the New York Times suing OpenAI over copyright law, which will likely lead AI Companies to change their ways of accruing data. There are many plausible angles for litigation, from privacy violations to product liabilities. Aside from “winning” in court, such litigation can also force transparency over internal documents (see Musk vs OpenAI emails), stop or slow down releases and impose significant costs on the defendant.

6. Set standards for them

Who? Competitors, Standard Setting Bodies

The US has no comprehensive legislation on AI. In such a vacuum, it is natural to look towards industry best practices and voluntary standards for guidance on how AI companies should act responsibly. Standards and best practices matter as they can be adopted into legislation later, become procurement requirements or limit liability. Furthermore, by following industry standards and best practices, a company can signal that it is a responsible actor.

However, it’s important who sets the standards. While government-led standards like NIST’s AI Risk Management Framework can set important directions, industry-designed self-regulation can just be a way to prevent stronger government action. An example of the latter is the Frontier Model Forum, which facilitates cooperation between AI companies on finding best practices, information sharing and advancing AI Safety. However, it is financed and led by the AI companies themselves and thus unlikely to cause large changes in their behaviour.

Improve Decisions

Employees in AI companies need to constantly make high-stakes and uncertain decisions. Which research direction to pursue? How much compute to allocate to which project? What release strategy to use? While company incentives can be misaligned with broader society, I believe most people inside companies are trying to make decisions that are good for the world. We should help them make better decisions.

7. Convince them

Who? Researchers, Advocates, Advisors, and Anybody with access to employees

We can help people in companies by improving the quality of arguments and evidence available to them, which helps them make better decisions. Examples include researching weaknesses of a safety method, providing forecasts about AI development or introducing new strategic considerations. Sometimes companies even actively solicit such feedback from the public.

Aside from producing new evidence and arguments, the right information also needs to reach the right people. That means there is an important role in filtering and delivering the right information to the relevant people inside labs. Such a role could be filled by advisors, consultants or trusted internet sources (e.g. newsletters).

8. Spread memes to them

Who? Anybody, respected figures, AI "influencers"

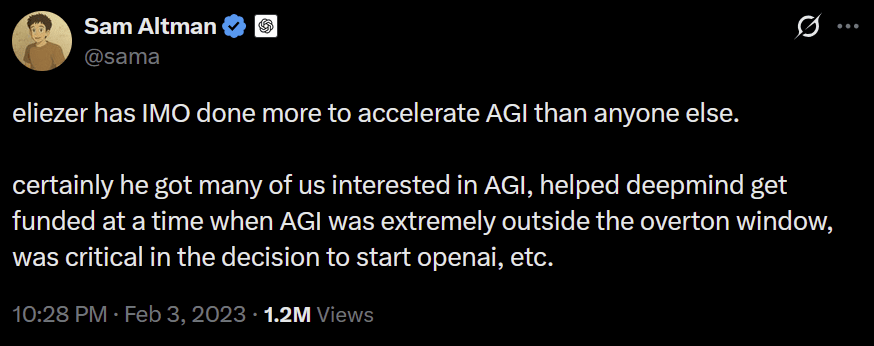

One can attempt to seed and spread viral ideas that change one's way of thinking about the world. Through cultural dissemination, these can reach researchers and decision makers at AI companies and influence their decisions and actions. Eliezer Yudkowsky, chief doomer and elite meme spreader, is a great example of this. His work was mostly impactful by popularising the idea that superintelligent AI could spell doom for humanity. Some actors are trying to create more of this by providing prizes for new memes.

There are, however, serious downside risks: memes can have unintended effects (see below), mutate under memetic pressure until they become toxic, or simply fail to take off.

Once a meme is out, it can have unintended consequences.

9. Set social expectations for them

Who? Anybody, Advocates

Workers at AI Companies are not rational robots, but social animals that are responsive to public opinion and moral judgments. Aside from being convinced by good evidence and arguments, they will also change their judgement based on the expectations and moral judgements of friends, family and the general public. If they are aware that everybody would hugely respect them if they did X and think they are monsters if they did Y, they are more likely to do X. Notably, this needn’t only look like guilt-tripping and criticism, but also include positive encouragement and admiration for doing the right thing.

Economic

10. Invest in them

Who? VCs, Investment Companies, very rich people

AI Companies depend on large investments to continue scaling. Actors who can provide large funding thus gain some leverage to influence the company as a condition of investment. For example, an investment by SoftBank into OpenAI was made conditional on the company changing its governance structure, which did cause OpenAI to attempt a (possibly illegal) for-profit conversion.

Investments can also be tied to voting rights or board seats. An early investment by Open Philanthropy in OpenAI enabled them to nominate a value-aligned board member (Helen Toner). While this didn't turn out great in hindsight (suspending Sam Altman for 4 days wasn't worth $30 million), it looked for a while like an incredible bargain.

Unfortunately, as valuations of AI companies rise (OpenAI reportedly selling at $500 billion), influence is restricted to actors that can move many billions in funding. Furthermore, "non-super-duper-rich" individuals cannot directly invest in AI developers, as these are either not publicly traded (OpenAI, Anthropic) or only a part of a much larger tech company (Google DeepMind, Meta AI). Lastly, investing in AI companies has the negative consequence of enabling scaling and speeding up the arrival of dangerous AI systems.

11. Sell to them

Who? Compute-, data-, infrastructure-providers

AI Companies depend on a range of suppliers and providers of services. Suppliers can use this dependence as leverage to steer the company's actions. NVIDIA and TSMC hold especially large power, as they have almost no competitors (90%+ market share), their products cannot be replaced by others, and their products are essential to the operation of AI Companies.

Similarly, cloud compute providers (AWS, Oracle Cloud, …) hold large leverage, although they are more replaceable. For example, Microsoft's deal to provide cloud compute to OpenAI bought them a (non-voting) board seat and access to all pre-AGI tech, and likely granted them significant input into the development and release of GPT-4.

While GPU/semiconductor companies and compute providers have by far the largest leverage, there are also other suppliers:

- Data providers: Scale AI et al. label and collect high-quality data; Reddit or Publishing houses hold the rights to very useful training data

- Technical Infrastructure: MLOps platforms such as Weights & Biases or ways of disseminating AI models like HuggingFace.

- Integrations: Services that integrate with AI products, like Atlassian, Cloudflare or PayPal, which offer integrations with Claude

- Other Infrastructure: Banking services, …

12. Buy from them

Who? Individual users, business customers, governments

Companies need to earn money and will thus be responsive to customer demands. This aspect is strengthened by the similarity between products of different providers, which makes the cost of switching very low.

Individual customers can set financial incentives for AI companies by voting with their wallets or boycotting products. While these are popular tactics in the animal rights and environmental movements, they see much less use in AI Safety. However, for companies focused on B2C, large changes in customer demands would likely translate into changes in internal priorities.

Large business customers might have higher leverage to make demands. Customers that channel a lot of demand, like API wrapper startups (e.g. Cursor), are especially well placed to make strong requests to AI companies.

Lastly, governments could exert pressure when awarding large contracts, e.g. for using AI in defence. As a start, they could institute basic safety requirements, like not giving huge defence contracts to AI companies whose new model just went on a pro-Nazi spree. More ambitiously, they could attach stringent safety and information security requirements to models used in the military.

13. Shape their market

Who? Competitors

Companies are responsive to market pressures. Competitors have leverage by shaping the market they share. For example, one company might release models with new capabilities, thus putting pressure on other companies to catch up and release similar models. Other market-shaping actions include racing to take a market or reach a capability first, pricing strategies, release strategies (e.g. open-source AI commodifying chatbots), customer acquisition strategies, adding new features or making specific aspects (e.g. safety) more salient to customers.

14. Change their talent pipeline

Who? Potential employees, field builders, educators, competitors

Another critical input into the AI production function is the people developing and deploying AI. They have a rare and highly sought-after skillset without which the companies can’t survive. This was shown by Meta’s recent spending spree to buy top talent. This scarcity gives candidates leverage to make demands of their future employers. Furthermore, it’s a vector for competitors to influence each other. For example, Anthropic poached large parts of OpenAI’s alignment team. This brain drain hurt OpenAI's public image with respect to safety.

Others can attempt to influence the makeup of the talent pool of AI companies. For example, field-building programs like MATS aim to produce and accelerate people who care about AI Safety and could end up working at AI Companies. This increases the percentage of candidates who care about safety. Top universities are also in a position of power by educating students about the importance of ethical AI development.

15. Insure them

Who? Insurance companies

Companies are often required to be insured, or benefit from it. For example, D&O insurance is required by board members, and professional liability insurance can be required to sell to enterprise customers. Insurance companies are experts in risk assessment and could thus play an important role in pricing risk and requiring certain safety practices. For example, Lloyd's of London could state that large AI companies' products need safety certification to be insured. Additionally, legislation could demand insurance against AI-caused damages (see SB 1047), which would make the risk quantification and management conducted by insurance companies an essential part of the safety process. However, insurance is limited to non-existential safety issues, as an existential catastrophe likely implies that the insurance check won’t be cashed.

Thanks to Charles Whitman and Joep Storm for their feedback and comments.

I made some memes for this post, but decided they didn’t fit the vibe. Click here if you still want to see them.