Highlights

- CFTC asking for public comments about allowing Kalshi to phagocytize PredictIt’s niche

- $25k fellowship, awarded through a forecasting tournament, by Richard Hanania on Manifold Markets.

- pastcasting.com allows users to forecast on already-resolved questions whose resolutions they don't know, which hopefully results in faster feedback loops and faster learning

- Hedgehog Markets now has markets run by an automated market maker

- Jonas Moss looks at updating just on the passage of time

Index

- Prediction Markets & Forecasting Platforms

- Blog Posts and Research

- In The News

You can sign up for this newsletter on Substack, or browse past newsletters here. If you have a content suggestion or want to reach out, you can leave a comment or find me on Twitter.

Prediction Markets & Forecasting Platforms

Manifold Markets

Manifold Markets partnered (a) with Richard Hanania's Center for the Study of Partisanship and Ideology (a) (some updates here (a)):

The Salem Center at the University of Texas is hiring a new research fellow for the 2023-2024 academic year. This position will pay $25,000 and not require teaching or in-person residency. Rather, it will provide an academic job and financial support for a researcher to do whatever they want with their time, in order to advance their career or work on other projects.

Unlike a typical fellowship, you will not apply to this one by sending us letters of recommendation and a CV listing all of your publications and awards, and then relying on our subjective judgements about other people’s subjective judgments about your work. Rather, you will participate in a forecasting tournament on economics and social and political issues. At the end of the process, we will interview the top five finalists and decide among them.

Substack now supports Manifold Markets embeds (a), which look much like the ones on the EA Forum. But now, users who are logged into Manifold Markets in the same browser can bet directly from the embed.

Metaculus

Nikos Bosse summarizes Metaculus’ Keep Virginia Safe Tournament (a). I would have found it interesting to read some speculation about which decisions at the Virginia Department of Health changed as a result of this tournament.

I appreciated the comments on this Metaculus question (a) on China annexing at least half of Taiwan by 2050. Some examples:

- blednotik on how hard Russia is to sanction.

- nextbigfuture: "Apple CEO Tim Cook, other CEOs and the heads of Vanguard etc... would be on the phone to Biden, Pelosi, Schumer telling them... what are we paying you for. The chips must flow".

Metaculus is still hiring.

Polymarket

Polymarket now supports deposits from Coinbase (a), and is trying out an order book (a).

PredictIt

PredictIt's CEO goes on Star Spangled Gamblers (a) to encourage the PredictIt community after the CFTC decided to withdraw its no-action letter. PredictIt veteran Domah is skeptical (a).

Various news media covered the downfall of PredictIt.

In the previous edition of this newsletter, I mentioned that I assigned a 60% chance that Kalshi caused the previous fall from grace of Polymarket, and a 40% chance that they caused PredictIt's demise.

I’ve gotten some pushback on that, and a simple calculation (a) based just on Laplace's law suggests that the probability should be higher.
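For readers unfamiliar with it, Laplace's law (the rule of succession) estimates the probability that the next trial is a "success", after s successes in n trials, as (s + 1)/(n + 2). This is the posterior mean under a uniform prior. Below is a minimal sketch. The counts in it are hypothetical and are not the ones used in the linked calculation.

```python
from fractions import Fraction


def laplace_rule_of_succession(successes: int, trials: int) -> Fraction:
    """P(next trial is a success) = (s + 1) / (n + 2), i.e. the posterior mean
    of a Bernoulli rate under a uniform Beta(1, 1) prior."""
    if not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials")
    return Fraction(successes + 1, trials + 2)


# Hypothetical counts, for illustration only:
print(laplace_rule_of_succession(0, 0))  # 1/2: no data, maximal uncertainty
print(laplace_rule_of_succession(1, 1))  # 2/3: one "success" in one trial
```

With only a handful of observations the rule stays well away from 0 and 1, which is why it is a popular back-of-the-envelope tool for questions with very few precedents.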

Kalshi

The US CFTC is asking for public comments about allowing Kalshi to host political prediction markets (a). I particularly liked this comment (a) by a JP Morgan executive, and this one (a) by a representative of the "Center for Effective Bribery". I drafted my own comment, but the CFTC’s website isn’t playing nice: I’ll report back next month.

Good Judgment Inc and Good Judgment Open

Good Judgment releases a report (a) with Superforecaster™ probabilities on various climate risks.

I appreciated these two comments on Good Judgment Open:

- belikewater (a) considers the chance (a) of an electrical blackout lasting at least one hour and affecting 60 million or more people in the US and/or Canada before April 2023, and pegs it at 2%.

- orchidny (a) considers the chance that Donald Trump will be criminally charged with or indicted for a federal and/or state crime in the US.

Odds and ends

pastcasting.com is a new website that allows users to forecast on already-resolved questions they have no prior knowledge of, so that they get quick feedback. Would recommend!

Hedgehog Markets now has markets run by an automated market maker (a). I've come to know and love this type of prediction market because it shifts the game somewhat from user vs. user to platform vs. user. They also have a neat piece covering the recent history of prediction markets (a). I’d say that Hedgehog Markets has matured a fair bit since launch, and I would encourage readers to explore their markets.
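To make "platform vs. user" concrete, here is a minimal sketch of one common automated market maker, Hanson's logarithmic market scoring rule (LMSR). It is an assumption chosen for illustration. I don't know which pricing rule Hedgehog Markets actually uses, and the liquidity parameter below is arbitrary.

```python
import math


class LMSRMarket:
    """Logarithmic market scoring rule (LMSR) market maker.

    A generic textbook AMM used for illustration; not necessarily the
    mechanism any particular platform uses."""

    def __init__(self, n_outcomes: int, liquidity: float) -> None:
        self.b = liquidity               # larger b: prices move less per share traded
        self.q = [0.0] * n_outcomes      # outstanding shares sold for each outcome

    def _cost(self, q: list[float]) -> float:
        # C(q) = b * log(sum_i exp(q_i / b)), computed stably by factoring out the max
        top = max(x / self.b for x in q)
        return self.b * (top + math.log(sum(math.exp(x / self.b - top) for x in q)))

    def price(self, i: int) -> float:
        # Instantaneous price of outcome i: softmax of q / b, so prices sum to 1
        top = max(x / self.b for x in self.q)
        weights = [math.exp(x / self.b - top) for x in self.q]
        return weights[i] / sum(weights)

    def buy(self, i: int, shares: float) -> float:
        """Buy `shares` of outcome i from the market maker; returns the amount paid."""
        new_q = list(self.q)
        new_q[i] += shares
        cost = self._cost(new_q) - self._cost(self.q)
        self.q = new_q
        return cost


market = LMSRMarket(n_outcomes=2, liquidity=100.0)
print(round(market.price(0), 3))   # 0.5 before any trades
paid = market.buy(0, 50.0)         # a user buys 50 "Yes" shares from the platform
print(round(paid, 2), round(market.price(0), 3))
```

Because each trade is made against the market maker rather than a matched counterparty, a lone trader can always get a price. The cost is a subsidy from the platform. For an LMSR with n outcomes, the market maker's worst-case loss is bounded by b·ln n.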

Hypermind has a small contest (a) on Russian sanctions. In the question creation phase, with a $4k prize pool, participants can propose questions and bet on which questions will be chosen. Then a $10k tournament will take place on the basis of those questions.

Yoloreked (a), from YOLO (a) and wreck (a), is a new crypto prediction market. I’m mentioning it because I find the name funny, but it’s probably on the scammy side.

A consultant reviews some Oracle products related to forecasting in the transportation industry (a). I found this a neat look into that industry, which seems much more professionalized.

Blog Posts and Research

Ajeya Cotra, a researcher known for producing a Biological Anchors estimate (a) for the time until transformative AI, posted a two-year update on her personal AI timelines (a). These have become shorter.

Friend of the newsletter Eli Lifland has been upping his publishing pace, starting with a Personal forecasting retrospective: 2020-2022 (a).

Comparing expert elicitation and model-based probabilistic technology cost forecasts for the energy transition (a), h/t Dan Carey:

We conduct a systematic comparison of technology cost forecasts produced by expert elicitation methods and model-based methods. Our focus is on energy technologies due to their importance for energy and climate policy.

We show that, overall, model-based forecasting methods outperformed elicitation methods.

However, all methods underestimated technological progress in almost all technologies, likely as a result of structural change across the energy sector due to widespread policies and social and market forces.

Stephanie Losi writes The Silence of Risk Management Victory (a), giving past examples of scenarios which might have led to catastrophe if not for preventative measures. See also the preparedness paradox (a).

While people’s forecasts of future outcomes are often guided by their preferences (“desirability bias”), it has not been explored yet whether people infer others’ preferences from their forecasts.

Across 3 experiments and overall 30 judgments, forecasters who thought that a particular future outcome was likely (vs. unlikely) were perceived as having a stronger preference for this outcome.

Holden Karnofsky looks at AI strategy nearcasting (a), defined as "trying to answer key strategic questions about transformative AI, under the assumption that key events (e.g., the development of transformative AI) will happen in a world that is otherwise relatively similar to today's."

The Quantified Uncertainty Research Institute, the NGO for which I work, recently released an "early access" version of Squiggle (a), a language for probabilistic estimation. We are also hiring (a)!

Nathan Barnard looks at how forecasting could have prevented intelligence failures (a), speculating that better forecasting would lead to better outcomes by allowing nations to better know when to hold 'em and when to fold 'em. I am sympathetic to the general argument, but a bit uncertain about the extent to which Tetlock-style forecasting could have provided better guidance in the specific historical case studies mentioned, as opposed to on average, across many such cases.

This blog post (a), via Stat Modeling (a), covers some recent bets on climate change.

Nostalgebraist picks a beef (a) with Metaculus (a).

Nikos Bosse and Sam Abbott argue that one currently neglected strategy for making forecasting more useful is to focus on making domain experts better forecasters (a).

An article in Nature presents a standardized and comparable set of short-term forecasts (a) on COVID-19 in the US.

Issues of the Technological Forecasting and Social Change journal can be seen here (a). I only skimmed it briefly and don't expect it to be particularly good, but some in the community might find it of interest.

Technical content

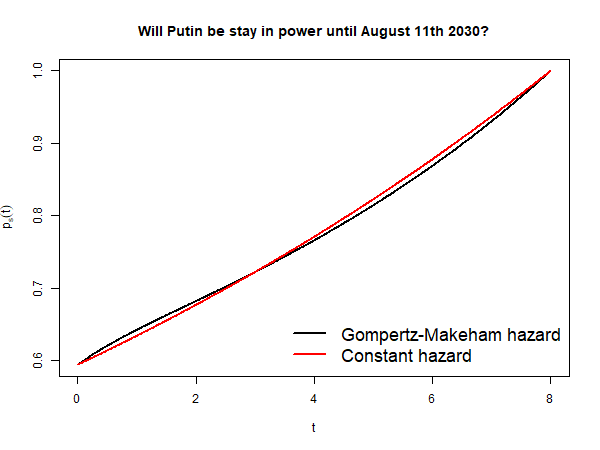

Jonas Moss looks at updating just on the passage of time (a). In particular, he works out the details for questions about hazard rates, like "Will Putin stay in power until August 11th 2030?", under a few possible forms of the hazard rate (constant, Weibull, Gompertz–Makeham (a)).

I found it amusing that his more complicated Gompertz–Makeham model gave essentially the same answer as a much simpler constant hazard rate model.
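Updating on the passage of time amounts to conditioning a survival function S(t) on the event not having happened yet: P(T > t_target | T > t_now) = S(t_target) / S(t_now). Below is a minimal sketch of that update for the three hazard forms mentioned. The parameter values are hypothetical placeholders, not the ones from Jonas Moss's post, and t is simply measured in years from an arbitrary origin.

```python
import math

# Survival functions S(t) = P(event has not happened by time t), t in years.
# All parameter values used below are hypothetical placeholders.


def survival_constant(t: float, lam: float) -> float:
    # Constant hazard lam: S(t) = exp(-lam * t)
    return math.exp(-lam * t)


def survival_weibull(t: float, shape: float, scale: float) -> float:
    # Weibull: S(t) = exp(-(t / scale) ** shape); shape > 1 means a rising hazard
    return math.exp(-((t / scale) ** shape))


def survival_gompertz_makeham(t: float, lam: float, alpha: float, beta: float) -> float:
    # Hazard h(t) = lam + alpha * exp(beta * t), so the cumulative hazard is
    # lam * t + (alpha / beta) * (exp(beta * t) - 1)
    return math.exp(-lam * t - (alpha / beta) * (math.exp(beta * t) - 1.0))


def prob_survives_until(survival, t_now: float, t_target: float) -> float:
    """P(T > t_target | T > t_now) = S(t_target) / S(t_now)."""
    return survival(t_target) / survival(t_now)


# Hypothetical question: the event has not happened after 20 years; will it still
# not have happened 8 years from now?
print(prob_survives_until(lambda t: survival_constant(t, lam=0.05), 20, 28))
print(prob_survives_until(lambda t: survival_weibull(t, shape=1.5, scale=25), 20, 28))
print(prob_survives_until(lambda t: survival_gompertz_makeham(t, 0.03, 0.002, 0.1), 20, 28))
```

Under a constant hazard the answer depends only on the remaining window, exp(-lam * (t_target - t_now)), because the process is memoryless. The other two forms can make the remaining window riskier the longer the event has been survived. That helps explain why it is notable that the two approaches land in the same place.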

Ege Erdil presents Variational Bayesian Methods (a), and interprets naïve k-means clustering as a Bayesian approximation.
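One standard way to see a link between k-means and Bayesian inference, which may differ from the variational framing in the linked post, is this: naive k-means is the small-variance limit of expectation-maximization (EM) on a Gaussian mixture with equal weights and a shared, fixed variance. As sigma shrinks, the posterior "responsibilities" become one-hot, so each point is assigned to its nearest center. The 1-D sketch below uses made-up data to illustrate this.

```python
import math


def responsibilities(x: float, centers: list[float], sigma: float) -> list[float]:
    """E-step for a 1-D Gaussian mixture with equal weights and shared variance sigma**2:
    posterior probability that x was generated by each component."""
    logits = [-((x - c) ** 2) / (2 * sigma ** 2) for c in centers]
    top = max(logits)                       # subtract the max for numerical stability
    weights = [math.exp(z - top) for z in logits]
    total = sum(weights)
    return [w / total for w in weights]


def em_step(xs: list[float], centers: list[float], sigma: float) -> list[float]:
    """One EM iteration: soft assignments, then responsibility-weighted means."""
    resp = [responsibilities(x, centers, sigma) for x in xs]
    new_centers = []
    for k in range(len(centers)):
        weight = sum(r[k] for r in resp)
        if weight > 0:
            new_centers.append(sum(r[k] * x for r, x in zip(resp, xs)) / weight)
        else:
            new_centers.append(centers[k])  # leave an empty component where it was
    return new_centers


def kmeans_step(xs: list[float], centers: list[float]) -> list[float]:
    """One naive k-means (Lloyd) iteration: hard-assign to the nearest center, then average."""
    clusters: list[list[float]] = [[] for _ in centers]
    for x in xs:
        nearest = min(range(len(centers)), key=lambda j: (x - centers[j]) ** 2)
        clusters[nearest].append(x)
    return [sum(c) / len(c) if c else centers[k] for k, c in enumerate(clusters)]


xs = [0.0, 0.5, 1.0, 9.0, 9.5, 10.0]
print(kmeans_step(xs, [1.0, 8.0]))           # [0.5, 9.5]
print(em_step(xs, [1.0, 8.0], sigma=1e-3))   # [0.5, 9.5]: tiny sigma gives hard assignments
print(em_step(xs, [1.0, 8.0], sigma=3.0))    # softer assignments pull the centers together
```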

There was some neat back-and-forth on continuous prediction markets at the Eth Research forum (a). In particular, Bo Waggoner proposes the following scheme (a):

The market maker offers to sell “shares” in any given point on the real line. The payoff of a share drops off with distance between its center point and the actual outcome, in the shape of a Gaussian. E.g. if you bought a share of 500k, and the outcome is 300k, your share pays out something like e^{-(500k - 300k)^2 / (2 sigma^2)} where sigma is a constant chosen ahead of time.

I think this is ingenious because it allows users to bet for or against a distribution without having to specify all of it, while being resilient to small perturbations.
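The payoff rule in the quoted scheme is simple to compute. Below is a minimal sketch of it, together with a hypothetical portfolio of long and short shares that bets on "around 300k rather than around 500k". The value of sigma and the holdings are made up for illustration. The sketch covers only payoffs at resolution, not how the market maker would price shares, which the quote doesn't specify.

```python
import math


def share_payoff(center: float, outcome: float, sigma: float) -> float:
    """Payoff of one share centered at `center` when the outcome is `outcome`:
    exp(-(center - outcome)**2 / (2 * sigma**2))."""
    return math.exp(-((center - outcome) ** 2) / (2 * sigma ** 2))


def portfolio_payoff(holdings: dict[float, float], outcome: float, sigma: float) -> float:
    """Total payoff of a hypothetical portfolio mapping share centers to quantities;
    negative quantities represent short positions."""
    return sum(qty * share_payoff(c, outcome, sigma) for c, qty in holdings.items())


SIGMA = 100_000  # hypothetical; the scheme only says sigma is fixed ahead of time
print(share_payoff(500_000, 300_000, SIGMA))   # exp(-2) ≈ 0.135
# Long shares near 300k, short shares near 500k:
print(portfolio_payoff({300_000: 10, 500_000: -10}, outcome=320_000, sigma=SIGMA))
```

Because each share's payoff varies smoothly with the outcome, nudging the outcome a little only changes the payoff a little. This is one way to read the "resilient against small perturbations" property.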

In the News

Fraser Nelson writes about fallible forecasts in the UK's recent history (a).

Zuckerberg: Company's pandemic-era forecast was too rosy (a). "Zuckerberg told staffers the world's biggest social media company had planned for growth too optimistically, mistakenly expecting that a bump in usage and revenue growth during COVID-19 lockdowns would be sustained."

Hungary's weather chief sacked over the wrong forecast (a).

Note to the future: All links are added automatically to the Internet Archive, using this tool (a). "(a)" for archived links was inspired by Milan Griffes (a), Andrew Zuckerman (a), and Alexey Guzey (a).

When you lose — and you sure can lose, with N large, you can lose real big. Q.E.D.

Paul Samuelson, in Why we should not make mean log of wealth big though years to act are long (a), points out that Kelly betting is not statewise dominant over more risk-averse approaches, so whether it is preferable depends on one's risk preferences.
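Samuelson's point can be checked numerically. Kelly betting maximizes expected log wealth, yet in some outcomes a more cautious bettor ends up richer, so Kelly does not win state by state. Below is a minimal sketch comparing full Kelly with half-Kelly, which stands in for "a more risk-averse approach". The even-money bet won 60% of the time and the 100 rounds are hypothetical choices.

```python
import math


def final_wealth(fraction: float, wins: int, n_bets: int, odds: float = 1.0) -> float:
    """Wealth (starting at 1) after staking `fraction` of current wealth on each of
    n_bets bets paying `odds`-to-1, `wins` of which are won; order does not matter."""
    return (1 + fraction * odds) ** wins * (1 - fraction) ** (n_bets - wins)


def expected_log_growth(fraction: float, p: float, odds: float = 1.0) -> float:
    """Per-bet expected log wealth, the quantity Kelly betting maximizes."""
    return p * math.log(1 + fraction * odds) + (1 - p) * math.log(1 - fraction)


p, odds, n = 0.6, 1.0, 100      # hypothetical: even-money bet, 60% win rate, 100 rounds
kelly = p - (1 - p) / odds      # Kelly fraction f* = p - q / b, about 0.2 here
half_kelly = kelly / 2

# Probability, over the binomial number of wins, that half-Kelly ends up richer than Kelly
p_half_richer = sum(
    math.comb(n, k) * p**k * (1 - p) ** (n - k)
    for k in range(n + 1)
    if final_wealth(half_kelly, k, n, odds) > final_wealth(kelly, k, n, odds)
)
print(expected_log_growth(kelly, p) > expected_log_growth(half_kelly, p))  # True
print(p_half_richer)
```

Full Kelly has the higher expected log growth. However, whenever the number of wins comes in low enough, half-Kelly finishes with more money. That is exactly the lack of statewise dominance Samuelson describes.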

See also the Pastcasting announcement, which has more background on its motivation and on how it works.

Cheers!