Note: This post was crossposted from Planned Obsolescence by the Forum team, with the author's permission. The author may not see or respond to comments on this post.

If you thought we might be able to cure cancer in 2200, then I think you ought to expect there’s a good chance we can do it within years of the advent of AI systems that can do the research work humans can do.

Josh Cason on Twitter raised an objection to recent calls for a moratorium on AI development:

Or raise your hand if you or someone you love has a terminal illness, believes Ai has a chance at accelerating medical work exponentially, and doesn't have til Christmas, to wait on your make believe moratorium. Have a heart man ❤️ https://t.co/wHK86uAYoA

— Josh Cason (@TheGrizztronic) April 2, 2023

I’ve said that I think we should ideally move a lot slower on developing powerful AI systems. I still believe that. But I think Josh’s objection is important and deserves a full airing.

Approximately 150,000 people die worldwide every day. Nearly all of those deaths are, in some sense, preventable, with sufficiently advanced medical technology. Every year, five million families bury a child dead before their fifth birthday. Hundreds of millions of people live in extreme poverty. Billions more have far too little money to achieve their dreams and grow into their full potential. Tens of billions of animals are tortured on factory farms.

Scientific research and economic progress could make an enormous difference to all these problems. Medical research could cure diseases. Economic progress could make food, shelter, medicine, entertainment and luxury goods accessible to people who can't afford them today. Progress in meat alternatives could allow us to shut down factory farms.

There are tens of thousands of scientists, engineers, and policymakers working on fixing these kinds of problems — working on developing vaccines and antivirals, understanding and arresting aging, treating cancer, building cheaper and cleaner energy sources, developing better crops and homes and forms of transportation. But there are only so many people working on each problem. In each field, there are dozens of useful, interesting subproblems that no one is working on, because there aren’t enough people to do the work.

If we could train AI systems powerful enough to automate everything these scientists and engineers do, they could help.

As Tom discussed in a previous post, once we develop AI that does AI research as well as a human expert, it might not be long before we have AI that is way beyond human experts in all domains. That is, AI which is way better than the best humans at all aspects of medical research: thinking of new ideas, designing experiments to test those ideas, building new technologies, and navigating bureaucracies.

This means that rather than tens of thousands of top biomedical researchers, we could have hundreds of millions of significantly superhuman biomedical researchers.[1]

That’s more than a thousand times as much effort going into tackling humanity’s biggest killers. If you thought we might be able to cure cancer in 2200, then I think you ought to expect there’s a good chance we can do it within years of the advent of AI systems that can do the research work humans can do.[2]
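The "more than a thousand times" figure is easy to check. The sketch below assumes "tens of thousands" means 10,000 to 99,999 and "hundreds of millions" means 100 million to 999,999,999. It then applies a deliberately naive rescaling: remaining research time divided by the headcount ratio, starting from 2023 (the year of the tweet above). The rescaling ignores bottlenecks like trials and manufacturing, and it also ignores the superhuman quality of each AI researcher, so treat it as a rough illustration rather than a forecast.

```python
# Rough check of the "more than a thousand times as much effort" claim.
# Assumption: "tens of thousands" = 10,000..99,999 human researchers and
# "hundreds of millions" = 100,000,000..999,999,999 AI researchers.

HUMAN_RESEARCHERS = (10_000, 99_999)          # "tens of thousands" today
AI_RESEARCHERS = (100_000_000, 999_999_999)   # "hundreds of millions" of AI copies


def effort_ratio_bounds(humans, ais):
    """Return (smallest, largest) ratio of AI researchers to human researchers."""
    low = ais[0] / humans[1]    # fewest AIs compared with the most humans
    high = ais[1] / humans[0]   # most AIs compared with the fewest humans
    return low, high


def naive_years_needed(baseline_years, speedup):
    """Years of work left if progress scaled linearly with researcher count.

    Hypothetical simplification: ignores bottlenecks such as trials and
    manufacturing, and ignores the higher quality of each AI researcher.
    """
    return baseline_years / speedup


if __name__ == "__main__":
    low, high = effort_ratio_bounds(HUMAN_RESEARCHERS, AI_RESEARCHERS)
    print(f"effort ratio: {low:,.0f}x to {high:,.0f}x")
    # Hypothetical: a cure that would otherwise arrive in 2200, counting from 2023.
    years = naive_years_needed(2200 - 2023, low)
    print(f"naive estimate at the low ratio: {years:.2f} years of research")
```

Even the lowest ratio in these ranges is just over 1,000, which is why the naive calculation collapses 177 years of research into a fraction of a year. The real answer is slower because not all of the work scales with headcount.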

All this may be a massive underestimate. This envisions a world that’s pretty much like ours except that extraordinary talent is no longer scarce. But that feels, in some senses, like thinking about the advent of electricity purely in terms of ‘torchlight will no longer be scarce’. Electricity did make it very cheap to light our homes at night. But it also enabled vacuum cleaners, washing machines, cars, smartphones, airplanes, video recording, Twitter — entirely new things, not just cheaper access to things we already used.

If it goes well, I think developing AI that obsoletes humans will more or less bring the 24th century crashing down on the 21st. Some of the impacts of that are mostly straightforward to predict. We will almost certainly cure a lot of diseases and make many important goods much cheaper. Some of the impacts are pretty close to unimaginable.

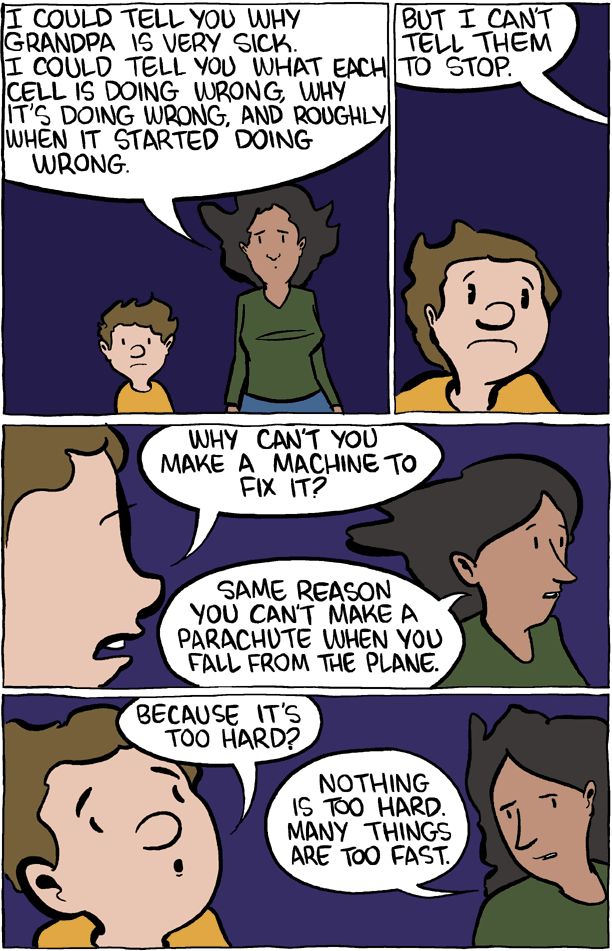

Since I was fifteen years old, I have harbored the hope that scientific and technological progress will come fast enough. I hoped advances in the science of aging would let my grandparents see their great-great-grandchildren get married.

Now my grandparents are in their nineties. I think hastening advanced AI might be their best shot at living longer than a few more years, but I’m still advocating for us to slow down. The risk of a catastrophe there’s no recovering from seems too high.[3] It’s worth going slowly to be more sure of getting this right, to better understand what we’re building and think about its effects.

But I’ve seen some people make the case for caution by asking, basically, ‘why are we risking the world for these trivial toys?’ And I want to make it clear that the assumption behind both AI optimism and AI pessimism is that these are not just goofy chatbots, but an early research stage towards developing a second intelligent species. Both AI fears and AI hopes rest on the belief that it may be possible to build alien minds that can do everything we can do and much more. What’s at stake, if that’s true, isn’t whether we’ll have fun chatbots. It’s the life-and-death consequences of delaying, and the possibility we’ll screw up and kill everyone.

[1] Tom argues that the compute needed to train GPT-6 would be enough to have it perform tens of millions of tasks in parallel. We expect that the training compute for superhuman AI will allow you to run many more copies still.

[2] In fact, I think it might be even more explosive than that: even as these superhuman digital scientists conduct medical research for us, other AIs will be working on rapidly improving the capabilities of these digital biomedical researchers, and other AIs still will be improving hardware efficiency and building more hardware so that we can run increasing numbers of them.

[3] This assumes we don’t make much progress on figuring out how to build such systems safely. Most of my hope is that we will slow down and figure out how to do this right (or be slowed down by external factors like powerful AI being very hard to develop), and if we give ourselves a lot more time, then I’m optimistic.

I thought about this a moderate amount as my wife was dying. This is definitely personal for me. But I still think the potential risks are too great and that the overall balance favors caution, even if people like my wife won't be able to benefit. Cost-benefit analysis is often very sad like that.

I think that just as the risks of AGI are overstated, so too are the potential benefits. Don't get me wrong: I expect it would still be revolutionary and incredible, just not magical.

Going from tens of thousands of biomedical researchers to hundreds of millions would definitely greatly speed up medical research... but I think you would run into diminishing returns, as the limiting bottleneck is often not the number of researchers. For example, coming up with the COVID vaccine took barely any time at all, but it took years to get it out due to the need for human trials and to actually build and distribute the thing.

I still think there would be a massive boost, but perhaps not a "jump forward a century" one. It's hard to predict exactly what the shortcomings of AGI will be, but there has never been a technology that lacked shortcomings, and I don't think AGI will be the exception.

I agree that bottlenecks like the ones you mention will slow things down. I think that's compatible with this being a "jump forward a century" thing, though.

Let's consider the case of a cure for cancer. First of all, even if it takes "years to get it out due to the need for human trials and to actually build and distribute the thing", AGI could still bring the cure forward from 2200 to 2040 (assuming we get AGI in 2035).
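A toy model in the spirit of Amdahl's law shows how both points can hold at once: only the research portion of the work speeds up with more researchers, while steps like human trials, manufacturing and distribution take fixed calendar time. The 2035 and 2200 dates come from the comment above; the five fixed years and the speedup values are hypothetical, chosen only for illustration.

```python
# Hypothetical bottleneck model: only research time shrinks with more (AI)
# researchers; fixed steps such as trials, manufacturing and distribution
# take the same calendar time regardless of how many researchers there are.

def arrival_year(start_year, baseline_arrival, fixed_years, speedup):
    """Estimate when a cure arrives once AI research labour is available.

    start_year: year AGI-level research labour becomes available (assumed).
    baseline_arrival: year the cure would arrive without AGI (assumed).
    fixed_years: calendar years that cannot be sped up (assumed).
    speedup: multiplier on research labour (assumed).
    """
    remaining = baseline_arrival - start_year
    if fixed_years > remaining:
        raise ValueError("fixed_years cannot exceed the remaining baseline time")
    research_years = remaining - fixed_years
    return start_year + research_years / speedup + fixed_years


if __name__ == "__main__":
    for speedup in (10, 100, 1000):
        year = arrival_year(2035, 2200, fixed_years=5, speedup=speedup)
        print(f"speedup {speedup:>5}x -> cure around {year:.0f}")
```

Under these assumptions the fixed years dominate once the speedup is large: raising it from 100x to 1000x saves little time, yet the cure still lands around 2040 instead of 2200. Diminishing returns and a century-scale jump are therefore not in conflict.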

Second, the excess top-quality labour from AGI could help us route around the bottlenecks you mentioned:

Let me make the contrarian point here that you don't have to build AGI to get these benefits eventually. An alternative, much safer approach would be to stop AGI entirely and try to enhance human/biological intelligence with drugs or other biotech. Stopping AGI is unlikely to happen, and this biological route would take a lot longer, but it's worth bringing up in any argument about the risks vs. rewards of AI.

I fully agree; see this post.

In the context of the OP, the thought experiment would need to be extended.

"Would you risk a 10% chance of a deadly crash to go to [random country]" -> ~100% of people reply no.

"Would you risk a 10% chance of a deadly crash to go to a Utopia without material scarcity, conflict, disease?" -> One would expect a much more mixed response.

The main ethical problem is that in the scenario of global AI progress, everyone is forced to board the plane, irrespective of their preferences.

I agree with you more than with Akash/Tristan Harris here, but note that death and Utopia are not the only possible outcomes! It's more like "Would you risk a 10% chance of a deadly crash for a chance to go to a Utopia without material scarcity, conflict, disease?"

Yeah, that awesome stuff.

My impression is that most people who buy "LLMs --> superintelligence" favor caution despite caution slowing awesome stuff.

But this thread seems unproductive.

I agree that if you have to slow down all AI progress or none of it, you should slow it all down. But fortunately, you don't-- you can almost have the best of both worlds.

Insofar as AI x-risk looks like LLMs while awesome stuff like medicine (and robotics and autonomous vehicles and more) doesn't look like LLMs, caution on LLMs doesn't delay other awesome stuff.* So when you talk about slowing AI progress, make it clear that you only mean AI on the path to dangerous capabilities.

*That's not exactly true: e.g. maybe an LLM can automate medical research, or recursively bootstrap itself to godhood and then solve medicine. But "caution with LLMs" doesn't conflict with "progress on medicine now."

AI biologists seem extremely dangerous to me - something "merely" as good at viral genomes as GPT-4 is at language would already be an existential threat to human civilization, if not necessarily homo sapiens.

Sure.

Often when people talk about awesome stuff they're not referring to LLMs. In this case, there's no need to slow down the awesome stuff they're talking about.

One thing I, as a layperson, do not understand about the benefits of AI: why do we think the corporations that would own these systems would care for the poor and sick? We already have cures for many of the worst diseases in the world, but we do not cure them because the people who suffer from them do not have the money to pay for the cure. The same goes for poverty: we already have enough food and wealth for everyone to live dignified and fulfilling lives, but we do not share the resources because some people want more than they need. Why would this distribution problem not continue under AGI? Why would AGI not be focused on marginal life extension for the rich, and bigger houses, space travel, etc. for the middle classes, rather than on getting more resources to the poorest? I would love to be convinced that this is not likely to continue under very capable AI. I hope that I have missed convincing writing on how AGI within the current economic system would differ significantly from how other increases in productivity and technical progress get distributed (or that AGI will radically transform the economic system to cater more to the marginalized).

In other words, it seems to me that to solve poverty and global health, power needs to shift. And power structures do not seem to be something you can fix with science or technology.

I'm confused; we make this kind of trade-off for caution all the time, for example in medical trial ethics. Could we go faster? Sure, but the risks would be higher. Yes, that can mean that some people will not get a treatment because it was developed a few years too late.

A closer example is gain-of-function research. The point is, we could do a lot, but we choose not to; AI should be no different.

Seems to me that this post is a little detached from real world caution considerations, even if it isn't making an incorrect point.

As I have argued here in more detail, we don't need AGI for an amazing future, including curing cancer. We don't have to decide between "all in for AGI" and "full-stop in developing AI". There's a middle ground, and I think it's the best option we have.