The EA Mindset

This is an unfair caricature/ lampoon of parts of the 'EA mindset' or maybe in particular, my mindset towards EA.

Importance: Literally everything is at stake, the whole future lightcone astronomical utility suffering and happiness. Imagine the most important thing you can think of, then times that by a really large number with billions of zeros on the end. That's a fraction of a fraction of what's at stake.

Special: You are in a special time upon which the whole of everything depends. You are also one of the special chosen few who understands how important everything is. Also you understand the importance of rationality and evidence which everyone else fails to get (you even have the suspicion that some of the people within the chosen few don't actually 'really get it').

Heroic responsibility: “You could call it heroic responsibility, maybe,” Harry Potter said. “Not like the usual sort. It means that whatever happens, no matter what, it’s always your fault. Even if you tell Professor McGonagall, she’s not responsible for what happens, you are. Following the school rules isn’t an excuse, someone else being in charge isn’t an excuse, even trying your best isn’t an excuse. There just aren’t any excuses, you’ve got to get the job done no matter what.”

Fortunately, you're in a group of chosen few. Unfortunately, there's actually only one player character, that's you, and everyone else is basically a robot. Relying on a robot is not an excuse for failing to ensure that everything ever goes well (specifically, goes in the best possible way).

Deference: The thing is though, a lot of this business seems really complicated. Like, maximising the whole of the impact universe long term happiness... where do you even start? Luckily some of the chosen few have been thinking about this for a while, and it turns out the answer is AI safety. Obviously you wouldn't trust just anyone on this, everything is at stake after all. But the chosen few have concluded this based on reason and evidence, and they also drink Huel like you. And someone knows someone who knows Elon Musk, and we have $10 trillion now, so we can't be wrong.

(You'd quite like to have some of that $10 trillion so you can stop eating Super Noodles, but probably it's being used on more important stuff...)

(Also remember, there isn't really a 'we': everyone else is an NPC, so if it turns out the answer was actually animals and not AI safety after all, that's your fault for not doing enough independent thinking.)

Position to do good: You still feel kinda confused about what's going on and how to effectively maximise everything. But the people at EA orgs seem to know what's going on, and some of them go to conferences like the leaderscone forum. So if you can just get into an EA org, then probably they'll let you in on all the secrets and give you access to the private Google Docs and stuff.

Also, everyone listens to people at EA orgs, and so you'll be in a much better position to do good afterwards. You might even get to influence some of that $10 trillion that everyone talks about. Maybe Elon Musk will let you have a go on one of his rockets.

Career capital: EA orgs are looking for talented, impressive, ambitious, high-potential, promising people. You think you might be one of those, but sometimes you have your doubts, as you sometimes fail at basic things like having enough clean clothes. If you had enough career capital, you could prove to yourself and others that you did in fact have high potential, and you would get a job at an EA org. You're considering getting enough career capital by starting a gigaproject or independently solving AI safety.

These things seem kind of challenging, but you can just use self-improvement to make yourself the kind of person who could do them.

Inner Rings and EA

C. S. Lewis's 'The Inner Ring' is, IMO, a banger. My rough summary: inner rings are the cool club/ the important people. People spend a lot of energy trying to be part of the inner rings, and sacrifice things that are truly important.

There are lots of passages that jump out at me wrt my experience as an EA. I found it pretty tough reading in a way... in how it makes me reflect on my own motivations and actions.

[of inner rings] There are what correspond to passwords, but they are too spontaneous and informal. A particular slang, the use of particular nicknames, an allusive manner of conversation, are the marks.

There's a perennial discussion of jargon in EA. I've typically thought of jargon as a trade-off between having more efficient discourse on the one hand, and lower barriers for new people to enter the conversation on the other. Reading this makes me think of jargon more as a mechanism to signal in-group membership.

And when you had climbed up to somewhere near it by the end of your second year, perhaps you discovered that within the ring there was a Ring yet more inner, which in its turn was the fringe of the great school Ring to which the house Rings were only satellites.

There was a time when I was working very hard to get hired at an EA org. At the time I had some vague sense of 'I just need to work hard now, and once I get the job I'll have made it, I'll be able to relax, I'll properly be an EA'.

Once I got the job, this... didn't quite happen. The goalposts shifted, and 'get a job at an EA org' was replaced by 'get into this new role', 'perform really well', 'get into this more exclusive group of decision makers'.

People who believe themselves to be free, and indeed are free, from snobbery, and who read satires on snobbery with tranquil superiority, may be devoured by the desire in another form. It may be the very intensity of their desire to enter some quite different Ring which renders them immune from all the allurements of high life. An invitation from a duchess would be very cold comfort to a man smarting under the sense of exclusion from some artistic or communistic côterie. Poor man—it is not large, lighted rooms, or champagne, or even scandals about peers and Cabinet Ministers that he wants: it is the sacred little attic or studio, the heads bent together, the fog of tobacco smoke, and the delicious knowledge that we—we four or five all huddled beside this stove—are the people who know.

I think I've been kind of snobby about non-EAs in the past. Eg. friends who are non-EAs/ are 'normal', and care about things like buying a house, getting a promotion, having nice clothes... I've had an underlying sense of: can't you see that this is all kind of shallow/ meaningless, and you're in a big hamster rat wheel race? And I had a feeling of superiority in being aware of the game, deciding not to play, and dedicating myself to something that actually matters. But poor me, it is not large lighted rooms or champagne or a nice house that I want: it is the heads bent together, the fog of large whiteboards and the delicious knowledge that we are the people who are actually making a difference.

I must not assume that you have ever first neglected, and finally shaken off, friends whom you really loved and who might have lasted you a lifetime, in order to court the friendship of those who appeared to you more important, more esoteric.

This bit was tough to read. I've largely prioritised making friends with EAs, in particular those who seemed important, to the detriment of other relationships.

The quest of the Inner Ring will break your hearts unless you break it. But if you break it, a surprising result will follow. If in your working hours you make the work your end, you will presently find yourself all unawares inside the only circle in your profession that really matters. You will be one of the sound craftsmen, and other sound craftsmen will know it.

Some of the people I most respect have this 'sound craftsmen'ness to them. They are seemingly impervious to who the 'important people' are, and what they think. They just do their thing.

And if in your spare time you consort simply with the people you like, you will again find that you have come unawares to a real inside: that you are indeed snug and safe at the centre of something which, seen from without, would look exactly like an Inner Ring. But the difference is that the secrecy is accidental, and its exclusiveness a by-product, and no one was led thither by the lure of the esoteric: for it is only four or five people who like one another meeting to do things that they like.

This makes me think of EA groups. It seems to me that in the earlier years, EA groups were much more people 'who like one another meeting to do things that they like', and now they are more like recruiting grounds for the inner circle.

I expect the above is too cynical, both about my own motivations, and about the EA community. I and people in the EA community have a lot of genuine, noble motivations. But the 'inner ring' motivations make up a larger proportion than I would have liked, and than I previously thought.

EA and Belonging word splurge

Epistemic Status: Similar to my other rants / posts, I will follow the investigative strategy of identifying my own personal problems and projecting these onto the EA community. I also half-read a chapter of a Brene Brown book which talks about belonging, and I will use the investigative strategy of using that to explain everything.

I've felt a decreased sense of belonging in the EA community, which leads me to the inexorable conclusion that EA is broken or belonging-constrained or something. I'll use that as my starting point and work backwards from there.

Prioritising between people isn't great for belonging

The community is built around things like maximising impact, and prioritisation. Find the best, ignore the rest.

Initially, it seemed like this was more focused on prioritising between opportunities, eg. donation or career opportunities. Though it seems like this has in some sense bled into a culture of prioritising between people, and that doing this has become more explicit and normalised.

Eg. words I see a lot in EA recruitment: talented, promising, high-potential, ambitious. (Sometimes I ask myself, wait a minute... am I talented, promising, high-potential, ambitious?). It seems like EA groups are encouraged to have a focus on the highest potential community members, as that's where they can have the most impact.

But the trouble is, it's not particularly nice to be in a community where you're being assessed and sized up all the time, and different nice things (jobs, respect, people listening to you, money) are given out based on how well you stack up.

Basically, it's pretty hard for a community with a culture of prioritisation to do a good job of providing people with a sense of belonging.

Also, heavy-tailed distributions: EAs love them. Some donation opportunities/ jobs are so much more impactful than the others etc. If the thing you're doing isn't in the good bit of the tail, it basically rounds to zero. This is kind of annoying when, by definition, most of the things in a heavy-tailed distribution aren't in the good bit.

Is belonging effective?

A sense of belonging seems nice, but maybe it's a nice to have, like extra leg room on flights or not working on weekends. Fun, but not necessary if you care about having an impact.

I think my take is that for most people, myself included, it's a necessity. Pursuing world optimisation is only really possible with a basis of belonging.

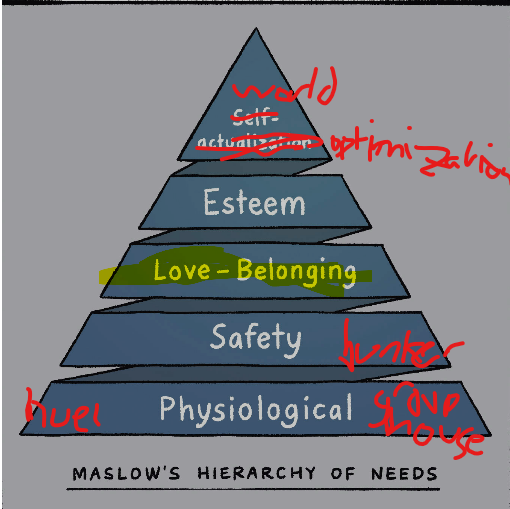

Here's a nice image from Brene Brown's book which I've lightly edited for clarity.

I think the EA community provides some sense of belonging, but probably not enough to properly keep people going. Things can then get a bit complicated, with EA being a community built around world optimisation.

If people have a not-quite-fully-met need to belong, and the EA community is one of their main sources of a sense of belonging, they'll feel more pressure to fit in with the EA community - eg. by drinking the same food, espousing the same beliefs, talking in the same way etc.

I don't understand how to belong to something as massive and distributed as EA. Instead I belong to little pieces: wee communities nested inside the movement. I belong to my org. I belong to EA Bristol. I belong to EA Twitter and DEAM, though I often wish I didn't (which breaches your children's definition). Common themes: co-location or daily group chats, shared memories and individuation, lulz. Which of these are you not getting?

[I see that many people 'belong to' structures as massive and nebulous as EA, e.g. religions. But I don't really get it.

I'm not the ideal person to talk about this because I have an anomalously low need for belonging and don't really get it in general.]

Belonging vs. fitting in

Brene Brown asked some eighth graders to come up with the differences between 'fitting in' and 'belonging'.

Some of their things:

- Belonging is being somewhere where you want to be, and they want you. Fitting in is being somewhere where you want to be, but they don't care one way or the other.

- Belonging is being accepted for being you. Fitting in is being accepted for being like everyone else.

I always find it a bit embarrassing when eighth graders have more emotional insightfulness than I do, which, alongside their poor understanding of Bayesianism, is why I tend to avoid hanging out with them.

I've had experiences of both belonging and fitting in with EA, but I've felt like the fitting in category has become larger over time, or at least I've become more aware of it.

Belonging, fitting in, and why do EAs look the same?

There's this thing where after people have been in EA for a while, they start looking the same. They drink the same Huel, use the same words, have the same partners, read the same econ blogs... so what's up with that?

Let's take Brene Brown's insightful eighth graders as a starting point:

Belonging is being accepted for being you. Fitting in is being accepted for being like everyone else.

- Things are good and nice hypothesis: EAs end up looking the same because they identify and converge on more rational and effective ways of doing things. EA enables people to be their true selves, and EAs' true selves are rational and effective, which is why everyone's true selves drink Huel.

- Cynical hypothesis: EAs end up looking the same because people want to fit in, and they can do that by making themselves more like other people. I drink Huel because it tells other people that I am rational and effective, and I can get over the lack of the experience of being nourished by reminding myself that Huel is scientifically actually more nourishing than a meal which I chew sat round a dinner table with other people.

Fitting in with one group makes it harder to fit in with other groups + me being annoying

One maybe sad thing on the cynical hypothesis is that the strategy for fitting in with one group, eg. adopting all these EA lifestyle things, decreases the fit with other groups, and so increases the dependence on the first group... eg. the more I ask my non-EA friends what their inside views on AI timelines are, the more they're like 'this guy has lost the plot', and the more they stop making eye contact with me.

(In my research for this post I asked a friend 'Did I become more annoying when I got into the whole EA stuff? It would be helpful if you could say yes because it will help me with this point I'm trying to make.' And he said 'Well, there was this thing where you were a bit annoying to have conversations with about the world and politics and stuff, because you had this whole EA thing and so thought that everything else wasn't important and wasn't worth talking about, because the obvious answer was do whatever is most effective... but tbh otherwise not really, you were always kind of annoying.')

I found this post harder to understand than the rest of the series. The thing you're describing makes sense in theory, but I haven't seen it in practice and I'm not sure what it would look like.

What EA-related lifestyle changes would other people find alienating? Veganism? Not participating in especially expensive activities? Talking about EA?

I haven't found "talking about EA" to be a problem, as long as I'm not trying to sell my friends on it without their asking first. I don't think EA is unique in this way — I'd be annoyed if my religious friends tried to proselytize to me or if my activist friends were pressuring me to come and protest with them.

If I talk about my job or what I've been reading lately in the sense of "here's my life update", that goes fine, because we're all sharing those kinds of life updates. I avoid the EA-jargon bits of my job and focus on human stories or funny anecdotes. (Similarly, my programmer friends don't share coding-related stories I won't understand.)

And then, when we're not sharing stories, we're doing things like gaming or hiking or remembering the good times, all of which seem orthogonal to EA. But all friendships are different, and I assume I'm overlooking obstacles that other people have encountered.

(Also, props for doing the research!)

Misc thoughts

Longtermism and feedback loops

A challenge for longtermists is a lack of feedback loops. It's pretty difficult to tell if and how things are going to affect the long-term future, and so pretty difficult to tell what valuable work looks like. In the absence of feedback from the world, people will use feedback from others to determine whether their work is valuable. Plausibly this means that longtermism will be more susceptible to groupthink than neartermism.

Jealousy

Sometimes I feel jealous (or some nearby emotion thing) in the EA community. Times when I feel like this:

- Someone posts an idea on the EA Forum that I had.

- Someone gets a job that I wanted.

- Someone who is younger than me is doing things that are more important than the things I’m doing.

Some thoughts

- My guess is that this is related to an underlying feeling of scarcity, or insecurity. When I feel more secure as an EA, whatever that means, I'm more likely to celebrate others' successes and take joy in them, rather than feel jealous.

- Often the jealousy comes with some sense of ‘that could have been me/ I could have done that, if only I...’. I don’t feel jealous of Nick Bostrom, because I never could have been Nick Bostrom, but I can feel jealous when there's something that feels like I could have done or had, but didn't.

- If this is related to some sense of scarcity, what's the thing that feels scarce? Maybe something like status, or being seen as doing valuable work by others (in particular people that I and others see as doing valuable work).

- Noticing instances of feeling jealous makes me a bit more suspicious of my motivations for doing EA things.

Why do people overwork?

It seems to me that:

EAs will say things like - EA is a marathon not a sprint, it's important to take care of your mental health, you can be more effective if you're happier, working too much is counterproductive...

But also, it seems like a lot of EAs (at least people I know) are workaholics, work on weekends and take few holidays, sometimes feel burnt out...

Overall, it seems like there's a discrepancy between what people say about eg. the importance of not overworking and what people do eg. overwork. Is that the case? Why?

I wouldn't measure overwork in hours (hours worked per week / 40): instead it's measured in spoons (hours worked / capacity for sustainable hours). When I work on things I love and think important, and when I am being suitably rewarded, and when I have the gross fortune to have the rest of my life all be in order, it is possible to work well every day, to "rest in motion".

(Form warning: yes in practice many people use more spoons than they have and they generate social feedback towards more work which needs active effort to stop from spiralling into damage.)

Agree about the first one - see here for one antidote.

Another feature which has so far cut against longtermist groupthink is that you had to be pretty weird to spend your life thinking about these things (unless you were an SF writer). That one is leaving us though.

Maybe people are overoptimistic about independent/ grant-funded work as an option or something?

EA seems unusually big on funding people independently, eg. people working via grants rather than via employment through some sort of organisation or institution.

(Why is that? Well EAs want to do EA work. And there are more EAs that want to do EA work than there are EA jobs in organisations. Also EA has won the lottery again... so EAs get funded outside the scope of organisations).

When I was working at an organisation (or basically any time I've been in an institution) I was like 'I can't wait to get out of this organisation with all its meetings and Slack notifications and meetings... once I'm out I'll be independent and free and I'll finally make my own decisions about how to spend my time and realise my true potential'.

But as inspirational speaker Dylan Moran warns: 'Stay away from your potential. You'll mess it up, it's potential, leave it. Anyway, it's like your bank balance - you always have a lot less than you think'

After leaving an organisation and beginning to work on grant funding I found it a lot more difficult than expected, and missed the structure that came with working in an organisation.

Some more good things about organisations: mentorship, colleagues, training, plausibly free stationery, a clear distinction between work time and not-work time, defined roles and responsibilities, feedback, a sense of identity, something to blame if things don't go to plan.

Speculatively, EA is quite big on self-belief/ believing in one's own potential, and encouraging people to take risks.

And I worry that all this means that more people end up doing independent work than is a good idea.

Thanks, I think this is an interesting take, in particular since much of the commentary is rather the opposite - that EAs should be more inclined not to try to get into an effective altruist organisation.

I think one partial - and maybe charitable - explanation why independent grants are so big in effective altruism is that it scales quite easily - you can just pay out more money, and don't need a managerial structure. By contrast, scaling an organisation takes time and is difficult.

I could also see room for organisational structures that are somewhere in-between full-blown organisations and full independence.

Overall I think this is a topic that merits more attention.

Formal hiring processes as public goods.

Taken in isolation, formal hiring processes (eg. open applications, blinded and standardised assessments...) are often inefficient. It's often easier to source candidates from within one's network, and assess fit on an ad hoc basis.

But there are advantages for a community to collectively use formal hiring processes rather than informal hiring processes.

Formal hiring processes contribute to a meritocratic culture; informal processes contribute to a nepotistic culture.

Some half baked worries about 'EA has loads of money now'

We have loads of money now. But none of it is in my bank account.

- There’s a lot of money in EA right now.

- This money is not evenly distributed. A small number of people have influence over a very large amount of money.

- Everyone else doesn't have this money. But they can apply to get some of this money through eg. grants, prizes or jobs.

Money is nice

- Getting a slice of EA money is good because:

  - Money means you can buy things, and things are nice and even sometimes necessary (like luxuries or necessities, respectively)

  - Getting money enables you to work on things you actually think are meaningful and important (eg. EA stuff)

  - Getting money is validating. It means that sensible, smart, expert people have looked at you and your plans, and think they are valuable.

- This packs a lot of things together, and means that getting prizes, grants, jobs, money can feel very important

Money rich time poor

- The people who do have a lot of money don’t have a lot of time, and will have to do pretty shallow assessments for determining who gets the money (or at least this is my strong suspicion).

- There’s a big difference between whether something is valuable on a quick look, and whether it’s valuable after an in depth look.

- It sucks pretty hard to be rejected for applications for EA money (see getting a slice of EA money is good)

- It sucks really pretty hard if it seems like you haven’t been assessed thoroughly.

This can lead to bad things probably

This could mean:

- People optimise for applications that look good on a shallow assessment (eg. buzzwords, signalling) rather than on a deep assessment (eg. deep knowledge of stuff)

- People apply for funding for things/ do things that they think will get funded (eg. things on a list of things) rather than what they think is actually valuable

- Basically this is a principal agent problem probably, or at least something like that. Alice (funders) wants Bob (EAs) to clean her house (do valuable projects). And Bob (EAs) really wants money (money). But Alice (funders) has poor eyesight (doesn’t have much time) and so can’t tell easily between a clean house (good project) and a dirty house (bad project).

- I think I got a bit carried away with the above example, but the point is that if we’re not careful it’s gonna be messy.

Also

- In general, large inequalities of money, influence, status, access to information seem kinda bad. And it seems like there are some pretty big inequalities in EA rn.

One perhaps opposite concern I have about this: having lots of money dispersed by grantmakers means you're less like a market economy, and more like a communist economy (except rich, and the central planners are just giving you cash). But this is bad, because it's better to live in a market economy: you have a healthier relationship to your money because you earned it (as opposed to "well I guess I just asked for this money and now I have it so I hope I'm not squandering it in someone else's eyes"), and the constraints you feel seem more real, rather than "why aren't the money fairies giving me stuff".

I share your concerns; what would you recommend doing about it though? One initiative that may come in handy here is the increased focus on getting “regular people” to do grantmaking work, which at least helps spread resources around somewhat. Not sure there’s anything we can do to stop the general bad incentives of acting for the sake of money rather than altruism. For that, we just need to hope most members of the community have strong virtues, which tbh I think we’re pretty good about.

Doctor doctor, I can't stop thinking about impact and how I could be saving more lives if I switched career

Other options I considered

- Facelift virtue ems

- Felt emu fricatives

- Lucrative STEM fief

- Fact feel virtueism

- Mi AI cult fever fest

Cities and Ambition and EA

Paul Graham has an essay ‘Cities and Ambition’ where he talks about cities and ambition.

Great cities attract ambitious people. You can sense it when you walk around one. In a hundred subtle ways, the city sends you a message: you could do more; you should try harder.

Cities have messages, things they are saying you should do or ways you should be. Eg. according to Paul Graham, the message in Cambridge/ Boston is you should be smarter, in Silicon Valley it's you should be more powerful, in Paris it's do things with style.

I’ve found this an interesting lens for viewing EA - eg. seeing EA as a ‘city’.

EA Criticism and EA as a City

Quote from the 80,000 Hours newsletter

Enter a new contest, EA Criticism and Red Teaming, where participants critique a theory or empirical work from effective altruism.

This is one kind of EA criticism. There's another, pretty different kind of criticism, which is criticism of EA as a city. Eg. is EA a good place to live? What are the messages that EA sends to its inhabitants? Are they good messages?

Another way of getting at this distinction: critiquing EA ideas vs. EA culture.

I have some criticisms of/ beefs with/ constructive feedback for EA which I've procrastinated writing for a long time/ felt a bit stuck on. One of the (charitable) reasons for my procrastination is, I think, that I've been trying to critique EA as a theory, but actually what I want to critique is more EA as a city. A bad analogy: like someone living in New York, not really liking the vibe, and aiming to understand or communicate this by writing a long Google Doc about New York's policies.

The narratives in EA and the messages are not the same

A key thing from Paul's essay is that some cities have messages - they tell you that you should be a certain way. Eg. Cambridge tells you 'you should be smarter'.

But Cambridge doesn't tell you this explicitly, eg. there is probably no big billboard saying 'you should be smarter, yours sincerely, Cambridge'. As Paul says:

A city speaks to you mostly by accident — in things you see through windows, in conversations you overhear. It's not something you have to seek out, but something you can't turn off. One of the occupational hazards of living in Cambridge is overhearing the conversations of people who use interrogative intonation in declarative sentences.

My claim is something like: EA also 'speaks to you mostly by accident - in things you see through windows, in conversations you overhear'. And importantly, the way EA tells you that you should be a certain way can be different to the consensus narrative of how you should be.

For example, I posted a while back saying:

Why do people overwork?

It seems to me that:

EAs will say things like - EA is a marathon not a sprint, it's important to take care of your mental health, you can be more effective if you're happier, working too much is counterproductive...

But also, it seems like a lot of EAs (at least people I know) are workaholics, work on weekends and take few holidays, sometimes feel burnt out...

Overall, it seems like there's a discrepancy between what people say about eg. the importance of not overworking and what people do eg. overwork. Is that the case? Why?

It seems to me that the consensus narrative is 'you should have a balanced life', but the thing that EA implicitly tells you is 'you should work harder' (and this is the message that drives people's behaviour).

Here's a bunch of other speculative ways in which it seems to me that the consensus narrative and the implicit message come apart.

- You should have a balanced life vs. you should work harder

- You should think independently vs. you should think what we think

- EA is about doing the most good with a portion of your resources vs. EA is about doing the most good with all of your resources

A weird thing: if respected/ important/ impressive people say eg. 'EA is about maximising the good with only a portion of your resources', but then give everything they have to EA, including their soul and weekends, then what other people end up doing is not maximising the good with only a portion of their resources. Instead, they end up saying that EA is about maximising the good with only a portion of your resources, whilst giving everything plus the kitchen sink to EA. (H/T SWIM)

The discrepancy between explicit messages/ narratives vs. implicit messages/ incentives can result in some serious black-belt level mind-judo.

For example, previously if people had asked me about work life balance I would say that this is very important, people should have balance, very important. But then often I felt like I needed to work on evenings and weekends. To resolve this apparent discrepancy, I'd say to others that I didn't really mind working on evenings and weekends, satisfying both the need to be consistent with the 'don't overwork/ take care of yourself' narrative, and the need to get my work done/ keep up with the Joneses.

Good post. I've especially noticed such a discrepancy when it comes to independence vs deference to the EA consensus. It seems to me that many explicitly argue that one should be independent-minded, but that deference to the EA consensus is rewarded more often than those explicit discussions about deference suggest. (However, personally I think deference to EA consensus views is in fact often warranted.) You're probably right that there is a general pattern between stated views and what is in fact rewarded across multiple issues.

One thought I had while reading this was just: you run slower during a marathon, but marathons are still really hard.

Maybe this comment conflates working more than average with giving "everything ... including their soul and weekends"?

It's tricky because different people perhaps need to hear different things here. I'd like to have a culture where it's possible for people to work normal hours in EA jobs. But I also know people who work more than average because they care deeply about their work and are ambitious, without seeming (to me at least) to be on the verge of crisis.

Project Idea: Interviewing people who have left EA

I can remember hearing, a couple of years ago, some discussion of a project interviewing people who have left EA. As far as I know this hasn't happened (though I might just not be aware of it).

I think this is a good idea, and something I'd like to see as part of the Red Teaming contest.

Why I think this is a good idea:

People who leave EA, at least in some cases, will do so because they no longer buy into it, or no longer like it. Sometimes they will just drift away, but other times, they'll have reasons for leaving.

I expect people leaving EA to be a particularly valuable source of criticism, and to highlight things that would otherwise go unnoticed. A couple of different framings:

- EA as a product: If you're building a product, and want to make it better, you probably want to talk to people who didn't like the product, or used the product for a bit and then stopped, and not just get input from people who are still using the product

- EA as a theory: If you're developing a theory, and want to make it better, you probably want to talk to people that disagreed with the theory, and not just...

Receiving criticism from people who were never convinced by EA/ who have never been 'in' EA seems useful, though I think there's additional benefit to criticism from people who were in EA and then left.

- EA looks pretty different from the inside than from the outside. (External criticisms of EA often seem to be focused on an out-of-date version of EA.) Criticisms of a city you have lived in will be pretty different from criticisms of a city you haven't lived in.

- EA is something of a worldview, with a loose shared set of concepts, beliefs, language, norms etc. People who have adopted this worldview will probably be better able to communicate with others who hold it. Eg. it's probably easier for someone who has been a Catholic to present a compelling criticism of Catholicism to Catholics.

I also expect people leaving EA to be much less likely to actually eg. write up and post their criticisms of EA. Some reasons:

- The usual motivations will apply less. Eg. Alice is an EA and writes up an EA criticism piece because she wants to help the EA community become better, and because she wants to make a name for herself in EA (eg. she writes a banging post, everyone thinks it's amazing, she gets a job at an EA org or something). Robert is leaving EA, feels pessimistic about the EA community improving, and is less bothered about making a name for himself within EA, and so doesn't write a post.

- EA is in part a worldview, and leaving EA can mean shifting away from that worldview. Thinking about worldviews is pretty hard - they are nebulous, big and often not made explicit. Also communicating with people who do not share your worldview is hard. Imaginary caricature:

  - Robert: I don't really like the vibe of EA anymore, and think there are systemic problems with the power structure.

  - Alice: Interesting, do you have any reason and evidence for that, or subjective probability estimates? Also would you like some Huel?

- EA can become a big part of people's lives, covering their beliefs, hopes and dreams, ambitions, social group, diet etc. Leaving this all can be hard/ emotionally taxing, and plausibly building a new life seems more important than writing up a list of reasons why you don't endorse the old life.

"70,000 hours back": a monthly podcast interviewing someone who 'left EA' about what they think are some of EA's most pressing problems, and what somebody else should do about them.

Who knew the userid referred not to leftism as in left of centre, but to leftism as in those who left the community. :D

Cities and Ambition and EA

Paul Graham has an essay ‘Cities and Ambition’ where he talks about cities and ambition.

Cities have messages, things they are saying you should do or ways you should be. Eg. according to Paul Graham, the message in Cambridge/ Boston is you should be smarter, in Silicon Valley it's you should be more powerful, in Paris it's do things with style.

I’ve found this an interesting lens for viewing EA, ie. seeing EA as a ‘city’.
