Quick takes

The positive media storm for Anthropic is bigger than I thought it would be.

Almost every major news network has featured them and almost all of it puts a halo on Amodei (which feels a bit icky but hey).

And every 4th post on my LinkedIn is along the lines of

"Claude hits no. 1 on App store"

"the idea that no big tech has morals is dead,"

"my 3 year love affair with GPT Is over"

"I made the switch to Claude and I'll never look back"

As much as refusing the govt. contract might delay their IPO and give their valuation a temporary hit, they could hardly have h...

Dean Ball's commentary on this reframed the issue for me: https://www.hyperdimensional.co/p/clawed

...The big difference, however, is that Anthropic is essentially using the contractual vehicle to impose what feel less like technical constraints and more like policy constraints on the military. Think of the difference between “this fighter jet is not certified for flight above such-and-such an altitude, and if you fly above that altitude, you’ve breached your warranty,” and “you may not fly this jet above such-and-such an altitude”. It is probably the case that

I have twice recently "gently counseled" people on EA Forum norms when they come in, in my opinion, a little too hot for this rather cool medium 😃 Is there something official/CEA-endorsed on this subject? If not, should I/someone write it? I could point them to Scout Mindset but that's kind of a high barrier to entry.

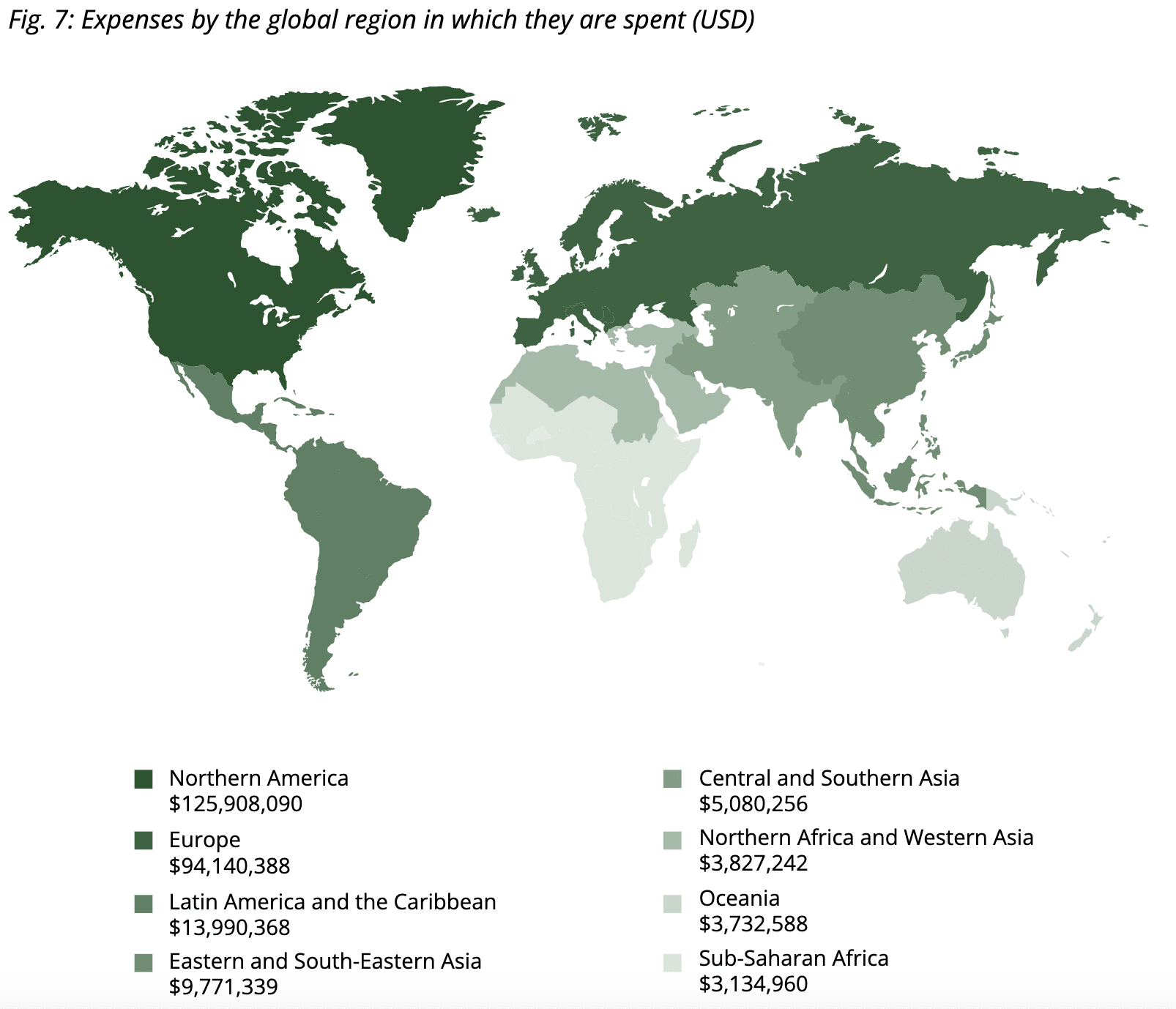

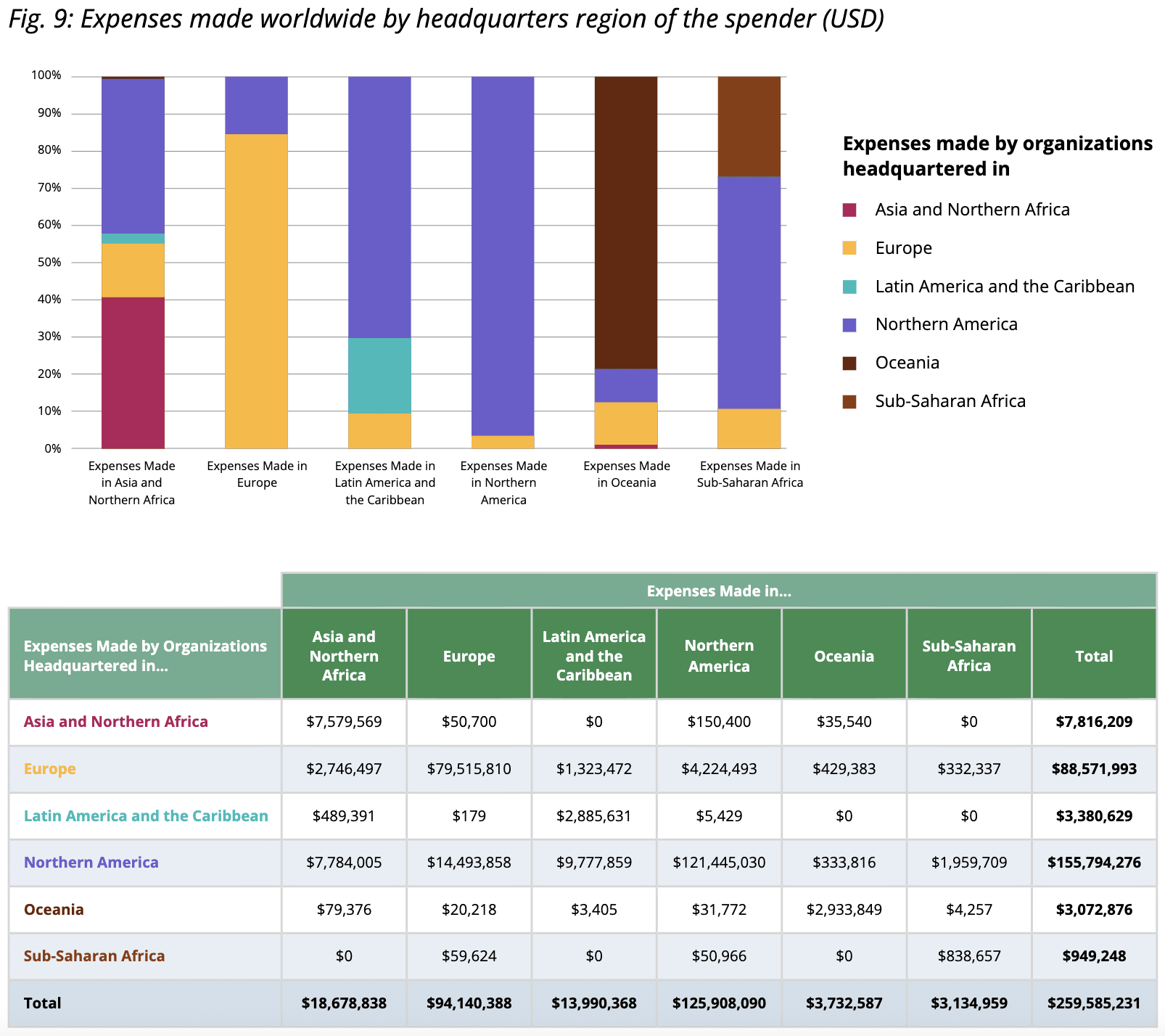

Thought to share some infographics on animal advocacy org expenses from the Stray Dog Institute's 2024 State of the Movement report, which I learned about via Moritz's excellent post.

Most org spending is in North America and Europe:

North American and European orgs accounted for most of the spend in sub-Saharan Africa and LATAM & the Caribbean, despite spending (say) only ~1% of their total expenses in SSA:

I don't have any good sense of how this Global North-dominated funding potentially skews priorities, but this drill down by animal ...

I'd be keen to get takes from folks in the know on what seems underfunded here. Farmed insects jump out: just $135k out of $260m overall (~0.05%) seems nuts.

Aquatic animals look really underfunded (see Tom and Aaron's post). But 'number of individuals' isn't necessarily the best proxy for how much money should be spent; e.g., we probably shouldn't be funding a welfare ask for every farmed fish out there until we have a better idea how to help them.

I'm surprised that there hasn't been an attempt (as far as I know) to fund/create a competitor to Epoch.ai.

It wouldn't have to compete on all benchmarks, but it would be good to have a forecasting organisation that could be trusted with potentially dual-use insights into capabilities trajectories. I don't believe this would require uniformity of views: it would just require people with a proper sense of responsibility.

I also think that the bad judgement displayed by some of their employees impinges on some of their research (emphasis on some, particularly ...

Off hand, METR, Forethought, MIT Future Tech, AI Futures Project, AI Index, HAL, and Artificial Analysis all substantially overlap with us in our research focuses and other work, though no two orgs have exactly the same remit. The list of orgs and individual researchers whose work at least partially overlaps with us is far larger.

Personally I think there is a huge amount of descriptive and forecasting research to be done around AI, far more than any one organization can or should take on. I would welcome more "competitors" and I don't want anyone who is in...

This might feel obvious, but I think it's under-appreciated how much disagreement on AI progress just comes down to priors (in a pretty specific way) rather than object-level reasoning.

I was recently arguing the case for shorter timelines to a friend who leans longer. We kept disagreeing on a surprising number of object-level claims, which was weird because we usually agree more on the kinda stuff we were arguing about.

Then I basically realized what I think was going on: she had a pretty strong prior against what I was saying, and that prior is abstract en...

I think there are ways to get the info without threatening the coherence of your system. For example, you can try to understand, and then absorb into intuition, alternative root/basic intuitions. Cf. https://en.wikipedia.org/wiki/World_Hypotheses by Pepper, and Lakoff's ideas on metaphors. As a concrete example in the case of timelines, I would offer https://www.lesswrong.com/posts/wgqcExv9AgN5MuJuY/bioanchors-2-electric-bacilli as an intuition for longer / less confident timelines that you could try to understand intuitively (without necessarily believing...

It might genuinely be the time to boycott ChatGPT and start campaigns targeting corporate partners. But this isn't yet obvious. Even if so, what would be the appropriate concrete and reasonable asks? I think there is a bit of an epistemic crisis emerging at the moment. If there's a case to be made, it needs to be made sooner rather than later. And then we need coordination.

The AI Safety Conversation Is Missing 1.4 Billion People

Cross-posted from my personal notes. I'm sharing this because I think the EA/AI safety community needs to hear it, and because I've been living it.

I lead AI safety work in Nigeria. When I tell people this, the most common reaction is a polite pause, the kind that says: that's interesting, but is that really AI safety?

I want to argue that it is. And that the gap it represents is one of the most neglected problems in the entire AI safety ecosystem.

[Crossposted from social media, in the spirit of Draft Amnesty Week]

After a lot of thinking, I am updating my Giving What We Can🔸10% donation allocation, shifting about a third of my donation portfolio to the Center for Land Economics 🔰.

There are several reasons why I am excited about this donation opportunity.

I believe that Georgism has the potential to radically transform our economy and society. 'Land is a Big Deal', as they say. Raising public funds without deadweight costs is a big part of this. But more fundamentally, by reducing the costs of livin...

Whenever I talk about Effective Altruism (EA) to someone new, I talk about EA-the-Movement and EA-the-Philosophy. EA-the-Movement draws a specific kind of person (quantitative, techy, philosophical) and has selected a few causes it has determined to be the most effective. EA-the-Philosophy is about asking whether our donations and volunteering are going to places that get the most bang for our buck and those questions can be applied to anything we care about.

It's a way of easing people into our way of thinking without insisting that they join our par...

So, for example, if you're talking with a feminist you can ask them where their donations or volunteering could do more or the most good, and they could think about that and come up with answers. And you could give suggestions, e.g. charities that focus on girls education in LMICs, or reproductive health for women, or maternal health charities.

If you're talking with MRAs, you could ask them what are the more effective ways to help men and boys, and they could research ways to help in cases of IPV or MGC, etc.

And the same goes for environmentalists, c...

Given the finitude of existence, I think I'd rather help an old lady cross the street & make someone smile than read forum posts. If I had a lot more time in a day, then forum posts might come after reading fiction, gardening, and baking dark chocolate brownies, but before reading Chomsky, learning Mandarin, and trying to find an answer to a non-pragmatic theoretical question like "If aliens exist, what could that mean about humanity?"

What a coincidence! I was just getting annoyed by Financial Times articles that always make up reasons after things happen. It made me realize that most news is exactly what Chomsky calls Manufacturing Consent.

I chatted with an AI about the idea that DNA/RNA arriving on Earth via asteroids is basically a biological Von Neumann machine. So the alien question is actually a strict Existential Risk (X-risk) issue, not a non-pragmatic one.

Also, my first language is Mandarin.

Farmed animal welfare is one of the most important cause areas out there. Though we’ve written about animal welfare broadly before, we recently published a dedicated piece on farmed animals specifically. Given how often this cause area shows up on our job board and throughout our content, we thought it deserved its own standalone overview, which covers:

- How different farmed animals are treated, including fish, crustaceans, and insects.

- Promising approaches already reducing suffering at scale.

- Why farmed animal welfare remains highly neglected d

I.

Once upon a time, there was an EA named Alice. EA made a lot of sense to Alice, and she believed that some niche problems/causes were astronomically bigger than others. But she eventually decided that (1) the theories of change were confusing/suspicious and (2) there's substantial evidence that a bunch of EA work is net-negative. So she decided to become a teacher or doctor or something.

II.

Alice made a mistake! If she thinks that some problems/causes are astronomically bigger than others, and she's skeptical of certain approaches, she should look for bet...

(I’m biased since I’ve mostly donated to animal welfare / digital minds. I’m also super busy now so it’s possible I just haven’t thought your argument through sufficiently.)

If you’re a pure EV maximizer I agree with your implicit claim that it’s probably best to prioritize AI safety and/or helping steer AI for the benefit of neglected groups (animals and digital minds).

If like most people you have risk aversion, like wanting high confidence you’ve made a positive difference, or wanting to make sure a greater % of EA community resources are devoted to inter...

PSA: regression to the mean/mean reversion is a statistical artifact, not a causal mechanism.

So mean regression says that children of tall parents are likely to be shorter than their parents, but it also says parents of tall children are likely to be shorter than their children.

Put in a different way, mean regression goes in both directions.

This is well-understood enough here in principle, but imo enough people get this wrong in practice that the PSA is worthwhile nonetheless.
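To make the "both directions" point concrete, here is a minimal simulation sketch. It assumes parent and child heights are standardized and correlated with a made-up coefficient `rho = 0.5`; the cutoff of 1.0 for "tall" and every name in the code are illustrative choices, not anything from real height data.

```python
import random

def simulate_pairs(n=50_000, rho=0.5, seed=0):
    """Draw (parent, child) standardized heights with correlation rho (assumed value)."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        parent = rng.gauss(0, 1)
        # Child shares a fraction rho of the parent's deviation plus independent
        # noise, scaled so the child's height also has unit variance.
        child = rho * parent + (1 - rho**2) ** 0.5 * rng.gauss(0, 1)
        pairs.append((parent, child))
    return pairs

def mean(xs):
    return sum(xs) / len(xs)

pairs = simulate_pairs()

# Direction 1: select on tall parents, then look at their children.
tall_parent_pairs = [(p, c) for p, c in pairs if p > 1.0]
tall_parents_mean = mean([p for p, _ in tall_parent_pairs])
their_children_mean = mean([c for _, c in tall_parent_pairs])

# Direction 2: select on tall children, then look at their parents.
tall_child_pairs = [(p, c) for p, c in pairs if c > 1.0]
tall_children_mean = mean([c for _, c in tall_child_pairs])
their_parents_mean = mean([p for p, _ in tall_child_pairs])

print(f"tall parents: {tall_parents_mean:.2f}, their children: {their_children_mean:.2f}")
print(f"tall children: {tall_children_mean:.2f}, their parents: {their_parents_mean:.2f}")
```

In both selections the unselected side sits closer to the population mean of 0, even though nothing in the simulation causes anyone to shrink: the effect comes purely from selecting on a noisy, imperfectly correlated variable.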

Note that in the context of trading/investing, the two terms are often used differently. There, “mean reversion” often means negative autocorrelation of returns, which can either be ~causal or driven by price level noise (which in turn is more like a “regression to the mean” idea). If you invest in a mean reversion strategy you tend to have an actual mechanism in mind though.

“Regression to the mean” is a less ambiguous term and generally means what you describe.

Is there any possibility of the forum having an AI-writing detector in the background which perhaps only the admins can see, but could be queried by suspicious users? I really don't like AI writing and have called it out a number of times but have been wrong once. I imagine this has been thought about and there might even be a form of this going on already.

In saying this, my first post on LessWrong was scrapped because they identified it as AI written, even though I have NEVER used AI in online writing, not even for checking/polishing. So that system obviously isn't perfect.

We're actually currently working on updating our policy on AI-generated content, so this thread (and follow up poll) is helpful! :)

I’m pretty confident the EA community is underdiscussing how to prevent global AGI-powered autocracy, especially if US democracy implodes under AGI pressure. There are two key questions here: (i) how to make the US more resilient, and (ii) how to make the world less dependent on the resilience of US democracy.

The Forum should normalize public red-teaming for people considering new jobs, roles, or project ideas.

If someone is seriously thinking about a position, they should feel comfortable posting the key info — org, scope, uncertainties, concerns, arguments for — and explicitly inviting others to stress-test the decision. Some of the best red-teaming I’ve gotten hasn’t come from my closest collaborators (whose takes I can often predict), but from semi-random thoughtful EAs who notice failure modes I wouldn’t have caught alone (or people think pretty differently...

RoastMyPost by the Quantified Uncertainty Research Institute might be useful for your idea too if you have a gdoc proposal.

I believe EA Forum should (re-)consider adding auto-translated content by default. Has this been considered lately?

Observation: As a native German speaker, auto-translated Reddit content has become extremely relevant in my Google results lately whenever I research something using German search terms.

Expected Effect: I believe this could have large outreach effects inferring from how many German policy makers, altruistic donors or otherwise interested people (questions of ethics, economics, altruism, AI) I deem very much open to contents that EA has already ...

I think something a lot of people miss about the “short-term chartist position” (these trends have continued until time t, so I should expect it to continue to time t+1) for an exponential that’s actually a sigmoid is that if you keep holding it, you’ll eventually be wrong exactly once.

Whereas if someone is a “short-term chartist hater” (these trends always break, so I predict it’s going to break at time t+1) for an exponential that’s actually a sigmoid, then if they keep holding that position, they’ll eventually be correct exactly once.

Now of course most chartists (my...
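A toy sketch of the chartist vs. chartist-hater scoring described above, under heavy assumptions: the curve is a logistic with made-up parameters, and "the trend continues" is defined (arbitrarily) as the step-to-step growth ratio staying at or above `min_ratio`. All names and numbers are hypothetical.

```python
import math

def logistic(t, cap=1000.0, rate=1.0, midpoint=10.0):
    """A sigmoid that looks exponential early on (parameters are illustrative)."""
    return cap / (1.0 + math.exp(-rate * (t - midpoint)))

def score_forecasters(steps=30, min_ratio=1.5):
    """Score both one-step-ahead forecasters until the trend first breaks."""
    chartist = {"right": 0, "wrong": 0}  # always predicts "the trend continues"
    hater = {"right": 0, "wrong": 0}     # always predicts "the trend breaks"
    for t in range(steps):
        ratio = logistic(t + 1) / logistic(t)
        if ratio >= min_ratio:
            # Growth is still roughly exponential: the chartist scores.
            chartist["right"] += 1
            hater["wrong"] += 1
        else:
            # First step where growth falls below the threshold: the trend broke.
            chartist["wrong"] += 1
            hater["right"] += 1
            break
    return chartist, hater

chartist, hater = score_forecasters()
print("chartist:", chartist)
print("hater:", hater)
```

Under this definition the chartist is wrong only at the break and the hater is right only at the break, so the two track records are mirror images; the sketch says nothing about how costly each kind of error is.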

Do we need to begin considering whether a re-think of our relationships with AGI/ASI systems will be needed in the future? At the moment we view them as tools/agents to do our bidding, and in the safety community there is deep concern/fear when models express a desire to remain online and avoid shutdown and take action accordingly. This is largely viewed as misaligned behaviour.

But what if an intrinsic part of creating true intelligence - that can understand context, see patterns, truly understand the significance of its actions in light of these insight...