This is the third post in a series. The first post is here and the second post is here. The fourth post is here and the fifth is here.

Solutions exist, but some of them undermine long-termist projects

Previously, we laid out the more general problem. How does this problem arise? Roughly speaking, there are three factors which combine to create the problem. The first factor has to do with the probability function, the second with the utility function, and the third with the way they are combined to make decisions.

1. Probability function: The first factor is being willing to consider every possible scenario. More precisely, the first factor is assigning nonzero probability to every possibility consistent with your evidence. This is sometimes called open-mindedness or regularity in the literature.

2. Utility function: The second factor is having no limit on how good things can get. More precisely, the second factor is having an unbounded utility function. (A bounded utility function, by contrast, never assigns any outcome a utility above v, for some finite v.)

3. Decision rule: The third factor is choosing actions on the basis of expected utility maximization, i.e. evaluating actions by multiplying the utility of each possible outcome by its probability, summing up, and picking the action with the highest total.

When the first two factors combine, we tend to get funnel-shaped action profiles. When we combine this with the third factor, we get incoherent and/or absurd results. Hence, the steelmanned problem is something like an impossibility theorem: We have a set of intuitively appealing principles which unfortunately can’t all be satisfied together.
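To make the interaction of the three factors concrete, here is a minimal sketch. It uses a St. Petersburg-style action as a stand-in; the specific probabilities (2^-k) and utilities (2^k) are illustrative assumptions, not the Mugger case discussed in this series. Every outcome has nonzero probability (factor 1), the utilities grow without limit (factor 2), and the expected-utility sum (factor 3) keeps growing as more outcomes are included.

```python
from fractions import Fraction

def expected_utility(outcomes):
    """Sum of probability * utility over (probability, utility) pairs."""
    return sum(p * u for p, u in outcomes)

def st_petersburg_prefix(n):
    """First n outcomes of a hypothetical St. Petersburg-style action:
    outcome k has probability 2**-k and utility 2**k."""
    return [(Fraction(1, 2**k), Fraction(2**k)) for k in range(1, n + 1)]

# Each outcome contributes exactly 1 to the sum, so the partial sums
# grow without bound as more low-probability outcomes are considered.
for n in (10, 100, 1000):
    print(n, expected_utility(st_petersburg_prefix(n)))
```

Because the partial sums never settle, the action as a whole has no finite expected utility, which is one simple way the "incoherent and/or absurd" results can show up.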

Having explained the problem, we can categorize the major proposed solutions: Those involving the probability function, those involving the utility function, and those involving the decision rule.

The remainder of this section will briefly describe the array of available solutions. I don’t give the arguments for and against in the body of this article because this article is focused on exploring the implications of each solution for long-termism rather than figuring out which solution is best. The arguments for and against each solution can be found in the appendix; suffice it to say that all solutions are controversial.

Solutions involving the probability function:

1. Reject open-mindedness, and argue that we should only consider possibilities in the well-behaved, left-hand section of the chart. Basically, find some way to cut off the wide part of the funnel. The most straightforward way to do this would be to pick a probability p and ignore every possibility with probability less than p. This strategy is taken by e.g. Hájek (2012) and Smith (2014).

2. Argue that the probabilities are systematically low enough so as to not create a funnel. For example, this involves saying that the probability that the Mugger is telling the truth is less than 0.1^10^10^10. This strategy preserves open-mindedness but at the cost of facing most of the same difficulties that solution #1 faces, plus some additional costs: One needs a very extreme probability function in order to make this work; the probabilities have to diminish very fast to avoid being outpaced by the utilities.
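Solution #1 can be sketched roughly as follows. The action profiles, the probability 10^-30 for the Mugger being honest, and the one-in-a-trillion cutoff are all hypothetical numbers chosen for illustration. Outcomes below the cutoff are simply left out of the sum, i.e. treated as having probability 0 for decision-making purposes, without renormalizing the rest.

```python
from fractions import Fraction

def truncated_expected_utility(outcomes, cutoff):
    """Expected utility over outcomes whose probability is at least `cutoff`.
    Outcomes below the cutoff are ignored rather than renormalized away."""
    return sum(p * u for p, u in outcomes if p >= cutoff)

# Hypothetical action profiles as (probability, utility) pairs.
donate_to_amf = [(Fraction(9, 10), Fraction(1)), (Fraction(1, 10), Fraction(0))]
tiny = Fraction(1, 10**30)  # assumed chance that the Mugger is honest
pay_the_mugger = [
    (tiny, Fraction(10**100)),   # the Mugger delivers a vast payoff
    (1 - tiny, Fraction(-1)),    # the money is simply lost
]

cutoff = Fraction(1, 10**12)  # assumed threshold p

# With no cutoff the Mugger wins; with the cutoff the vast payoff is ignored.
print(truncated_expected_utility(pay_the_mugger, 0) > truncated_expected_utility(donate_to_amf, 0))
print(truncated_expected_utility(pay_the_mugger, cutoff) > truncated_expected_utility(donate_to_amf, cutoff))
```

The ranking of the two actions flips depending on whether the cutoff is applied, which is why the choice of p carries so much weight under this solution.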

Solutions involving the utility function:

3. Argue that we should use a bounded utility function, i.e. that there is some number N such that no outcome could ever have utility higher than N or lower than -N. This would standardly involve assigning diminishing returns to scale on the things we value, e.g. saying that saving 10^10^10^10 lives is only 10^100 times as good as saving 1 life. That way we can still say that it’s always better to save more lives (there is no “best possible world”) while still having a bounded utility function. I prefer this strategy, as do some others (Sprenger and Heesen 2011). However, this strategy strongly conflicts with many people’s moral intuitions. Additionally, many have argued that this strategy is unsatisfying.

4. Argue that even though the utility function is unbounded, the utilities are systematically low enough so as to not create a funnel. This strategy conflicts with most of the same intuitions as #3, and faces some additional difficulties as well: You need a pretty extreme utility function to make this work.
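As an illustration of solution #3, here is one possible bounded utility function with diminishing returns. The functional form and the bound of 10^100 are assumptions made for the sake of the sketch; nothing in the argument depends on this particular choice. The function is strictly increasing in the number of lives saved, equals 1 for one life, and never reaches the bound, so more lives are always better even though there is a ceiling.

```python
from fractions import Fraction

BOUND = 10**100  # hypothetical upper bound, in units of "one life saved"

def bounded_utility(lives):
    """Strictly increasing in `lives`, equal to 1 at one life,
    and always strictly below BOUND."""
    return Fraction(BOUND * lives, lives + BOUND - 1)

# 10**1000 stands in for numbers like 10^10^10^10, which are far too
# large to write down explicitly.
print(bounded_utility(1) == 1)
print(bounded_utility(10**6) < bounded_utility(10**1000))
print(bounded_utility(10**1000) < BOUND)
```

Exact fractions are used instead of floats so that the comparisons near the bound are not lost to rounding.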

Solutions involving the decision rule:

5. Argue that we should use some sort of risk-weighted decision theory instead of normal expected utility maximization. For example, you could assign a penalty to each possible outcome that is some function of how unlikely it is. This strategy faces some of the same difficulties as #2 and #4; the risk-weighting must be pretty extreme in order to keep up with the growing divergence between the utilities and the probabilities. See Buchak (2013) for a current example of this line of reasoning.

6. Argue that we should use some sort of decision theory that allows large chunks of the action profiles to “cancel out,” and then argue that they do in fact cancel out in the ways necessary to avoid the problem. For example, Section 3 argued that the probability of saving 10^10^10^10 lives by donating to AMF is higher than the probability of saving 10^10^10^10 lives by donating to the Mugger. Perhaps this sort of thing can be generalized: Perhaps there is some p such that, of all our available actions, the ones that rank highest when we only consider possibilities of probability >p also rank highest when we consider any larger set of outcomes. The second part of this strategy is the more difficult part. Easwaran (2007) has explored this line of reasoning.

7. Argue that we should give up on formalisms and “go with our gut,” i.e. think carefully about the various options available and then do what seems best. This tried-and-true method of making decisions avoids the problem, but is very subjective, prone to biases, and lacks a good theoretical justification. Perhaps it is the best of a bad lot, but hopefully we can think of something better. Even if we can’t, considerations about formalisms can help us to refine our gut judgments and turn them from guesses into educated guesses.
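For solution #5, a rough sketch of a rank-dependent, risk-weighted rule of the kind associated with Buchak (2013) might look like the following. This is a simplification under stated assumptions: the risk function r(p) = p^2 and the example gamble are illustrative choices, not claims about which risk function anyone endorses. Outcomes are sorted from worst to best, and each improvement in utility is weighted by r applied to the probability of doing at least that well.

```python
from fractions import Fraction

def risk_weighted_expected_utility(outcomes, risk):
    """Rank-dependent sketch over (probability, utility) pairs.
    Start from the worst utility, then add each step up in utility,
    weighted by risk(probability of getting at least that utility)."""
    ranked = sorted(outcomes, key=lambda pu: pu[1])  # worst to best
    total = ranked[0][1]
    for i in range(1, len(ranked)):
        prob_at_least = sum(p for p, _ in ranked[i:])
        total += risk(prob_at_least) * (ranked[i][1] - ranked[i - 1][1])
    return total

# Hypothetical long-shot gamble: a 1-in-1000 chance of a large payoff.
long_shot = [(Fraction(1, 1000), Fraction(10**6)), (Fraction(999, 1000), Fraction(0))]

# With risk(p) = p the rule reduces to ordinary expected utility.
print(risk_weighted_expected_utility(long_shot, lambda p: p))     # 1000
# With risk(p) = p**2 the unlikely payoff is heavily discounted.
print(risk_weighted_expected_utility(long_shot, lambda p: p**2))  # 1
```

Setting the risk function to the identity recovers standard expected utility maximization, so the risk function is the only place where this sketch departs from factor 3.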

Concluding this section

It would be naive to say something like this: “Even if the probability of extinction is one in a billion and the probability of preventing it is one in a trillion, we should still prioritize x-risk reduction over everything else…” The reason it would be naive is that tiny probabilities of vast utilities pose a serious unsolved problem, and there are various potential solutions that would undermine any project with probabilities so low. Whether or not you should prioritize x-risk reduction, for example, depends on which possibilities you decide to exclude, or how low your bound on utility is, or how risk-averse you are, or what your gut tells you, and so on.

Having said that, it would also be premature to conclude that long-termist arguments should be rejected on the basis of these considerations. The next section explains why.


Notes:

20: The utility function is the function that assigns utilities to possibilities; the probability function is the function that assigns probabilities to possibilities.

21: Assuming that the outlandish scenarios like the ones above are consistent with your evidence—if not, I’m curious to hear about your evidence! Note that “consistent with” is used here in a very strict sense; to be inconsistent with your evidence is to logically contradict it, rather than merely be strongly disconfirmed by it.

22: See Hájek and Smithson (2012), also Beckstead (2013).

23: We don’t necessarily get them: for example, if your probability function assigns sufficiently low probability to high-utility outcomes, then your action profiles could be bullet-shaped even though all three factors are present. This could also happen if your utility function assigns sufficiently low utility to low-probability outcomes. However, as far as I can tell the probability functions needed to achieve this are so extreme and contrived as to be no more plausible than closed-minded probability functions, and similarly the utility functions needed are so extreme that they might as well be bounded. For more on that, see appendix 8.1 (forthcoming).

24: This array of solutions isn’t exhaustive. In particular, there are infinitely many ways that involve the decision rule, and I’ve only described three. However, I’ve tried to include in this category all the major kinds of solution I’ve seen so far.

25: The best way to do this would be to say that those possibilities do in fact have positive probability, but that for decision-making purposes they will be treated as having probability 0. That said, you could also say that the probabilities really are zero past a certain point on the chart. Note that there are other ways to make a cutoff that don’t involve probabilities at all; for example, you could ignore all possibilities that “aren’t supported by the scientific community” or that “contradict the laws of physics.”

26: The idea is that even if our utility function is bounded, and hence even if we avoid the steelmanned problem, there are other possible agents that really do have unbounded utility functions. What would it be rational for them to do? Presumably there is an answer to that question, and the answer doesn’t involve bounding the utility function—rationality isn’t supposed to tell you what your ends are, but rather how to achieve them. See Smith (2014) for a version of this argument.

27: Note that the relationship between #1 and #2 is similar to the relationship between #3 and #4.

28: To be clear, her proposal is more nuanced than simply penalizing low-probability outcomes. It is designed to make sense of preferences exhibited in the Allais paradox.

29: For example: If we ignore all possibilities with probabilities less than one in a trillion, and the probability of preventing extinction is less than one in a trillion, we might as well buy gold-plated ice cream instead of donating. Another example: If our utility function is bounded in such a way that creating 10^40 happy lives is only 10^10 times as good as saving one happy life, and the probability of preventing extinction is less than one in a trillion, the expected utility of x-risk reduction would be less than that of AMF.
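The arithmetic behind the second example in note 29 can be checked directly. The one added assumption, not stated in the note, is that the same donation to AMF saves about one life for certain, so its expected utility is 1 in units of "one life saved"; the probability is taken at its upper limit of one in a trillion.

```python
from fractions import Fraction

p_prevent_extinction = Fraction(1, 10**12)  # "one in a trillion", as an upper limit
utility_of_future = 10**10   # 10^40 happy lives valued at 10^10 times one life
utility_of_amf = 1           # assumption: the donation saves one life for sure

eu_xrisk = p_prevent_extinction * utility_of_future
print(eu_xrisk)                   # 1/100
print(eu_xrisk < utility_of_amf)  # True
```

Under these numbers x-risk reduction comes out at one hundredth of the value of the AMF donation, and any lower probability only widens the gap.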

Works Cited

Hájek, Alan. (2012) Is Strict Coherence Coherent? Dialectica 66 (3):411-424.

-This is Hájek's argument against regularity/open-mindedness, based on (among other things) the St. Petersburg paradox.

Hájek, Alan and Smithson, Michael. (2012) Rationality and indeterminate probabilities. Synthese 187:33–48. DOI 10.1007/s11229-011-0033-3

-The argument appears here also, in slightly different form.

Smith, N. (2014) “Is Evaluative Compositionality a Requirement of Rationality?” Mind, Volume 123, Issue 490, 1 April 2014, Pages 457–502.

-Defends the “Ignore possibilities that are sufficiently low probability” solution. Also argues against the bounded utilities solution.

Beckstead, Nick. (2013) On the Overwhelming Importance of Shaping the Far Future. PhD Thesis. Department of Philosophy, Rutgers University.

Sprenger, J. and Heesen, R. (2011) “The Bounded Strength of Weak Expectations” Mind, Volume 120, Issue 479, 1 July 2011, Pages 819–832.

-Defends the “Bound the utility function” solution.

Easwaran, K. (2007) ‘Dominance-based decision theory’. Typescript.

-Tries to derive something like expected utility maximization from deeper principles of dominance and indifference.

Buchak, Lara. (2013) Risk and Rationality. Oxford University Press.

-This is one recent proposal and defense of risk-weighted decision theory; it's a rejection of classical expected utility maximization in favor of something similar but more flexible.

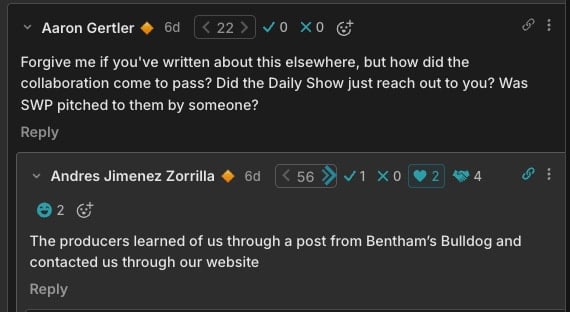

Small suggestion: I would find it helpful if you linked to the previous post(s) in the series in the beginning and, if the forum software allows it, to make references to individual sections (such as "Section 3 argued that") to be clickable links to those sections.

Good point. I put in some links at the beginning and end, and I'll go through now and add the other links you suggest... I don't think the forum software allows me to link to a part of a post, but I can at least link to the post.