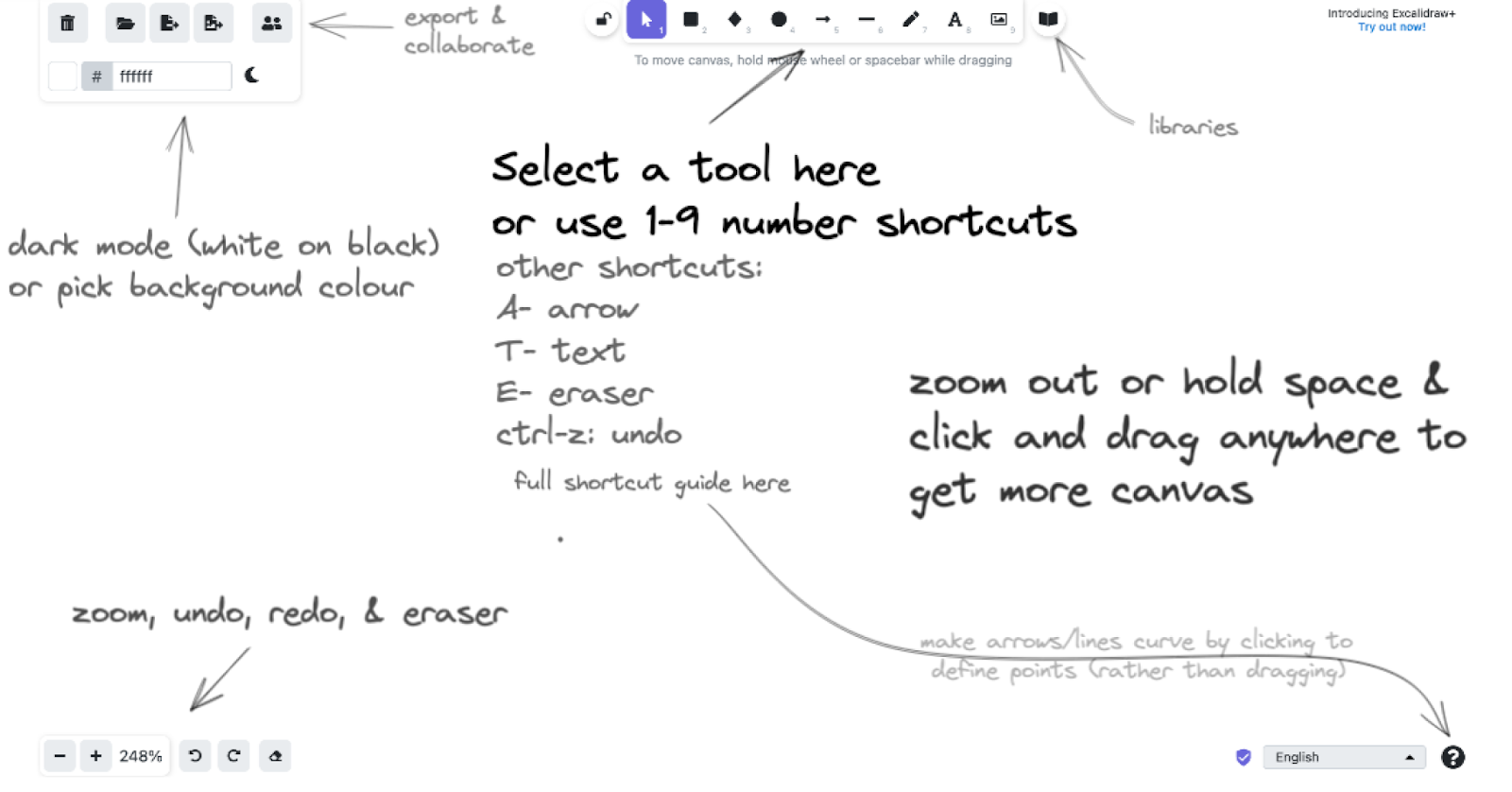

Excalidraw is a collaborative, shared whiteboard for typed text and sketching. It's really useful for summarising notes & making diagrams for forum posts; it's one of the tools I've used a lot to make these tutorials! Its key attractions are simplicity and easy collaboration. It’s designed for quickly creating simple graphics, and has a ‘sloppy/whiteboard’ style.

This post is a simple tutorial on how to use Excalidraw. The landing page also has a pretty good introduction to the basic features.

When can Excalidraw be useful?

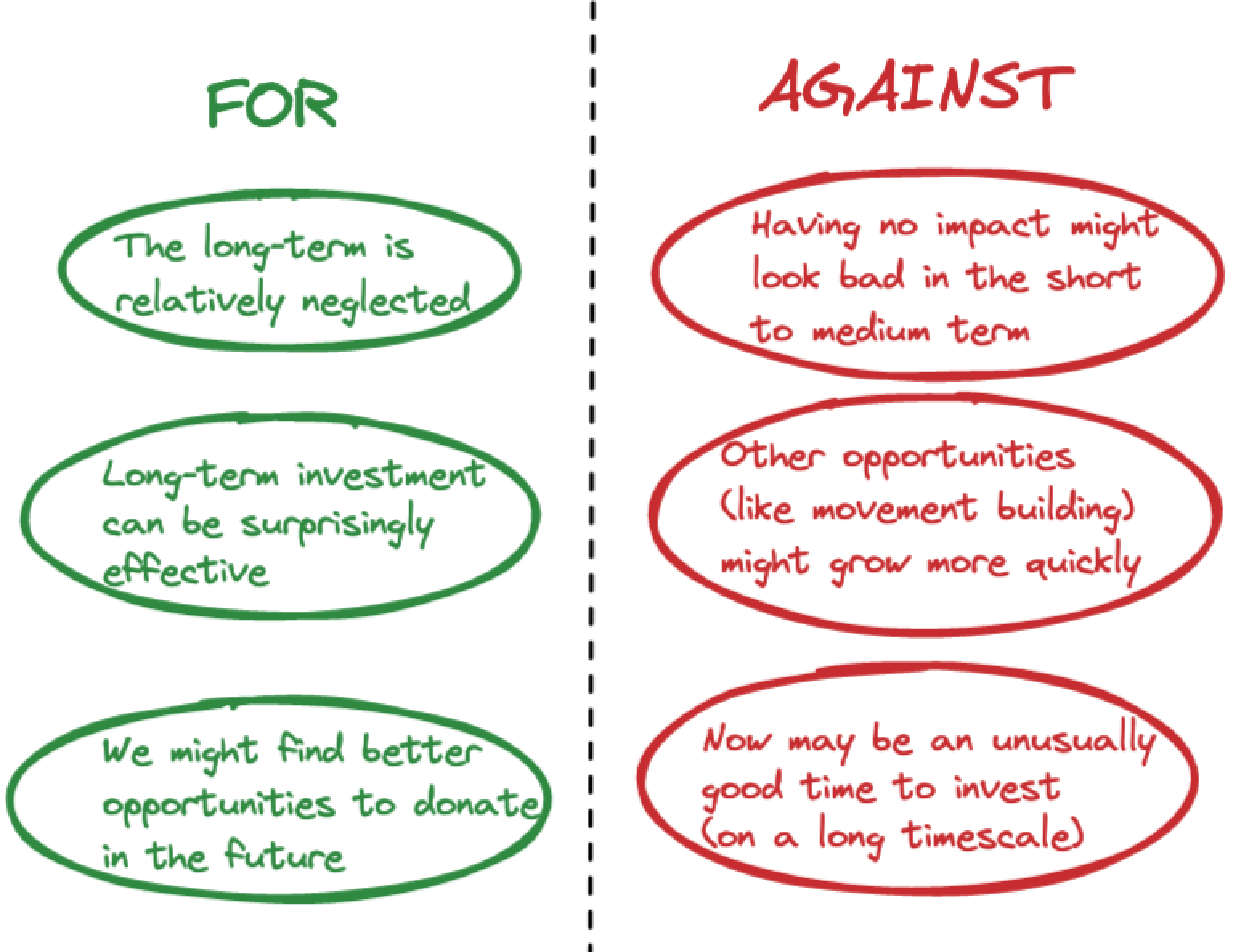

- Visually summarise an argument

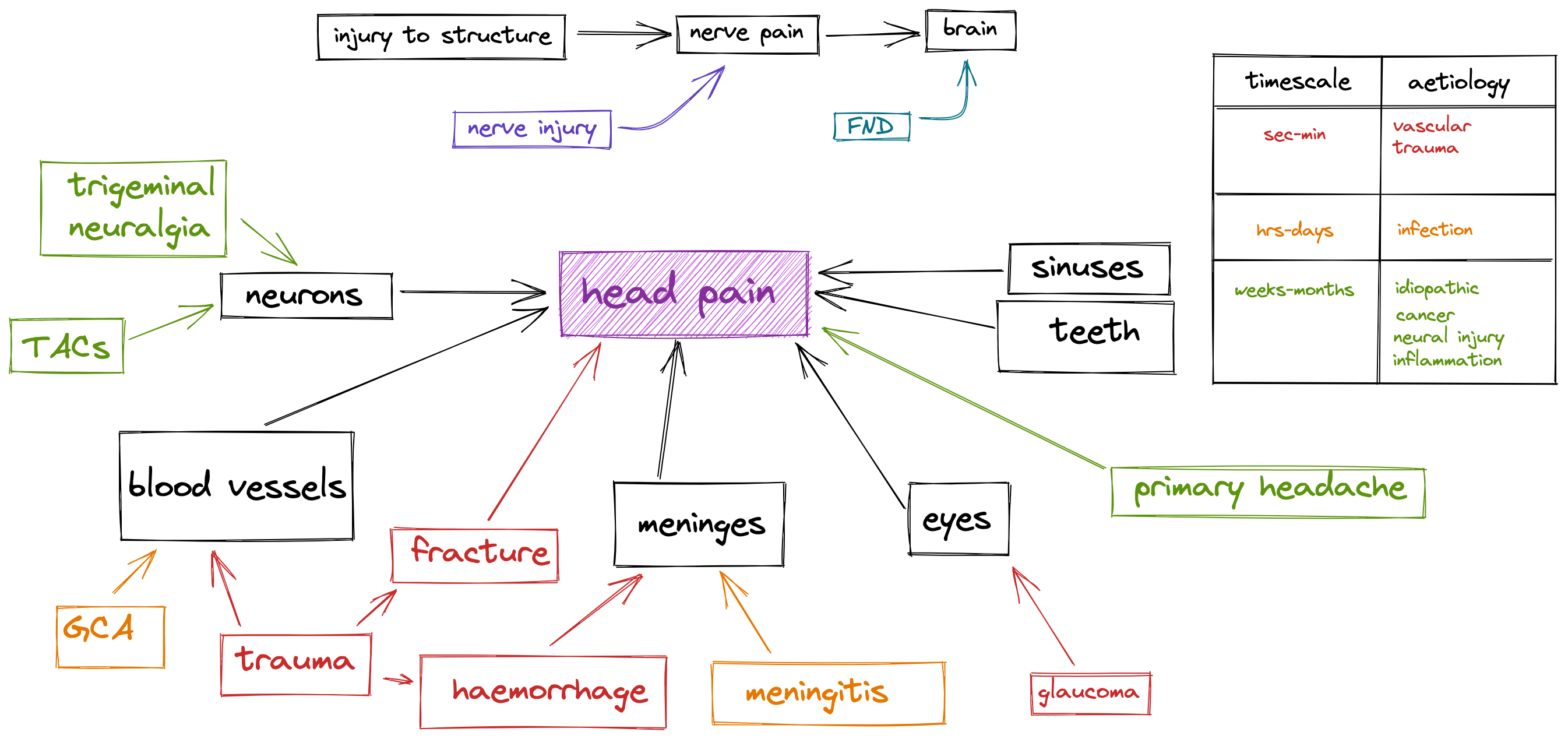

- Summarise notes on a topic

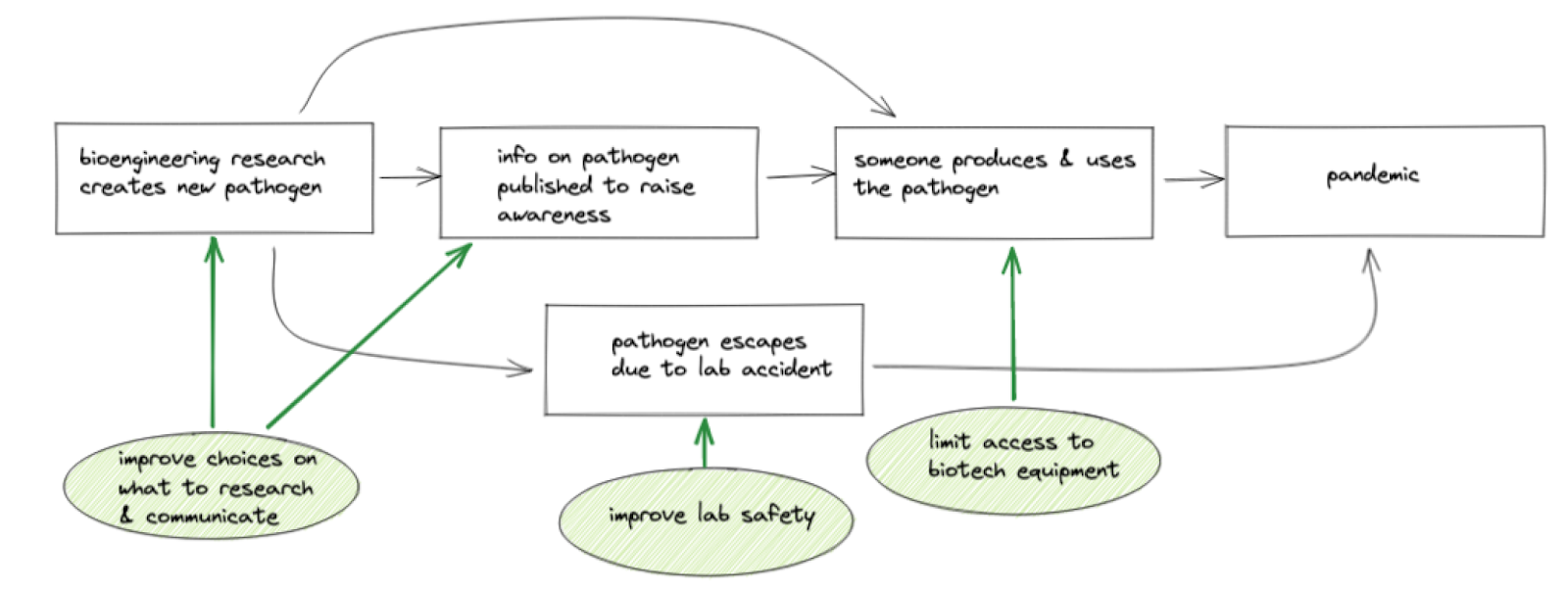

- Build a logic model to help you think through a problem

- Have a debate over Zoom, but draw diagrams for one another in real time to help explain your point

- Represent the AI governance or whole AI safety community diagrammatically

Video Guide

Text Guide

Basic Features

If you’d prefer a worked example, you’re looking at one! The above image was made in Excalidraw. Here’s another:

The point here isn’t that this is a perfect, wonderful diagram that’s much better than the original (it isn’t). The point is that this took 5-10 minutes to make, without having to spend time learning a complex tool, and could have been done collaboratively.

Collaboration & Export

In the top right box, you can export your whole drawing either as a file to load back into Excalidraw or as an image. You can press the ‘Live Collaboration’ button to start a session.

Once you start a session, you can copy a link which gives anybody access to real-time collaboration on your current board.

When any member leaves the session, they ‘take a version with them’, which they can keep editing locally.

If you want to keep your whiteboard and re-use it later, you can save it either as an Excalidraw file (editable later) or an image file.

Libraries

You can also import libraries of icons made in Excalidraw using the book icon at the top of the screen (to the right of the other tools). There are only a few libraries available right now, but they can be useful for common icon sets.

Worked Examples

These are both examples I actually used! I made the first live with somebody while running a tutorial on the topic, and the second as a quick summary for a discussion group. I've linked the source for the second, but the first was off the top of my head.

Summarise a topic (medical causes of headache):

Summarise a topic (patient philanthropy):

Personal Thoughts

I really like Excalidraw! It's a very polished implementation of a quick sketching tool, and the collaborative features, like the rest of Excalidraw, work exactly how I'd want them to without any fiddling.

At the moment, the icon libraries feature is pretty sparse, consisting mostly of UI and software architecture symbols, but there's nothing stopping you publishing EA-related symbol libraries!

I was mildly irritated that there's no option to save canvases in the web app; it turns out this is behind a $7/month paywall. That might be worth it if you're using it a lot, but the offline saving options are pretty comprehensive. Maybe consider it to support the tool's continued existence.

Finally, Excalidraw is a somewhat limited tool that isn't suited to really complex diagrams, but that isn't its intended purpose. If you want to make more precise or detailed diagrams, I can personally recommend Affinity Designer, and GIMP is a reasonable free alternative. This space is pretty saturated, but the alternatives all (to my knowledge) have a much steeper learning curve than Excalidraw, and I'm not aware of any more powerful tool with equivalent live collaboration.

Try it Yourself!

Try making a sketch of how your job leads to impact! Share it in the comments, so people can compare different jobs & different styles of diagram.

We'll also be running a session on Monday the 30th at 6pm GMT in the EA GatherTown to discuss Excalidraw and do a short exercise!

On Monday: a post discussing Squiggle, a coding language for model-building!

Yep, Excalidraw is great! I also used it to make this post:

https://www.lesswrong.com/posts/TvrfY4c9eaGLeyDkE/induction-heads-illustrated