What is this?

- I thought it would be useful to assemble an FAQ on the FTX situation.

- This is not an official FAQ. I'm not writing this in any professional capacity.

- This is definitely not legal or financial advice or anything like that.

- Please let me know if anything is wrong/unclear/misleading.

- Please suggest questions and/or answers in the comments.

What is FTX?

- FTX is a cryptocurrency derivatives exchange. It is now bankrupt.

Who is Sam Bankman-Fried (SBF)?

- The founder of FTX. He was recently a billionaire and the richest person under 30.

How is FTX connected to effective altruism?

- In the last couple of years, effective altruism received millions of dollars of funding from SBF and FTX via the Future Fund.

- SBF was following a strategy of "make tons of money to give it to charity." This is called "earning to give", and it's an idea that was spread by EA in the early-to-mid 2010s. SBF was definitely encouraged onto his current path by engaging with EA.

- SBF was something of a "golden boy" to EA. For example, this.

How did FTX go bankrupt?

- FTX gambled with user deposits rather than keeping them in reserve.

- Binance, a competitor, triggered a run on the bank where depositors attempted to get their money out.

- It looked like Binance was going to acquire FTX at one point, but they pulled out after due diligence.

- Source

How bad is this?

- "It is therefore very likely to lead to the loss of deposits which will hurt the lives of 10,000s of people eg here" (Source)

- Also:

Does EA still have funding?

- Yes.

- Before FTX there was Open Philanthropy (OP), which has billions in funding from Dustin Moskovitz and Cari Tuna. None of this is connected to FTX.

Is Open Philanthropy funding affected?

- Global health and wellbeing funding will continue as normal.

- Because the total pool of funding to longtermism has shrunk, Open Philanthropy will have to raise the bar on longtermist grant making.

- Thus, Open Philanthropy is "pausing most new longtermist funding commitments" (longtermism includes AI, Biosecurity, and Community Growth) for a couple of months to recalibrate.

- Source

How much of EA's money came from FTX Future Fund?

- As per the post Historical EA funding data from August 2022, the estimation for 2022 was:

- Total EA funds: 741 M$

- FTX Future Fund contribution: 262 M$ (35%)

- (This Q&A contributed by Miguel Lima Medín.)

If you got money from FTX, do you have to give it back?

- "If you received money from an FTX entity in the debtor group anytime on or after approximately August 11, 2022, the bankruptcy process will probably ask you, at some point, to pay all or part of that money back."

- "you will receive formal notice and have an opportunity to make your own case"

- "this process is likely to take months to unfold" and is "going to be a multi-year legal process"

- If this affects you, please read this post from Open Philanthropy. They also made an explainer document on clawbacks.

What if you've already spent money from FTX?

- It's still possible that you may have to give it back.

If you got money from FTX, should you give it back?

- You probably shouldn't, at least for the moment. If you return the money outside the proper legal channels, you could end up having to give it back twice.

If you got money from FTX, should you spend it?

- Probably not. At least for the next few days. You may have to give it back.

I feel bad about having FTX money.

- Reading this may help.

- "It's fine to be somebody who sells utilons for money, just like utilities sell electricity for money."

- "You are not obligated to return funding that got to you ultimately by way of FTX; especially if it's been given for a service you already rendered, any more than the electrical utility ought to return FTX's money that's already been spent on electricity"

What if I'm still expecting FTX money?

- The entire FTX Future Fund team has resigned, but "grantees may email grantee-reachout@googlegroups.com."

- "To the extent you have a binding agreement entitling you to receive funds, you may qualify as an unsecured creditor in the bankruptcy action. This means that you could have a claim for payment against the debtor’s estate" (source)

I needed my FTX money to pay the rent!

- Nonlinear has stepped in to help: "If you are a Future Fund grantee and <$10,000 of bridge funding would be of substantial help to you, fill out this short form (<10 mins) and we’ll get back to you ASAP."

I have money and would like to help rescue EA projects that have lost funding.

- You can contribute to the Nonlinear emergency fund mentioned above.

- "If you’re a funder and would like to help, please reach out: katwoods@nonlinear.org"

How can I get support/help (for mental health, advice, etc)?

- "Here’s the best place to reach us if you’d like to talk. I know a form isn’t the warmest, but a real person will get back to you soon." (source)

- Some mental health advice here.

- Someone set up a support network for the FTX situation.

- This table lists people you can contact for free help. It includes experienced mental health supporters and EA-informed coaches and therapists.

- This Slack channel is for discussing your issues, and getting support from the trained helpers as well as peers.

How are people reacting?

- Will MacAskill: "If there was deception and misuse of funds, I am outraged, and I don’t know which emotion is stronger: my utter rage at Sam (and others?) for causing such harm to so many people, or my sadness and self-hatred for falling for this deception."

- Rob Wiblin: "I am ******* appalled. [...] FTX leaders also betrayed investors, staff, collaborators, and the groups working to reduce suffering and the risk of future tragedies that they committed to help."

- Holden Karnofsky: "I dislike “end justify the means”-type reasoning. The version of effective altruism I subscribe to is about being a good citizen, while ambitiously working toward a better world. As I wrote previously, I think effective altruism works best with a strong dose of pluralism and moderation."

- Evan Hubinger: "We must be very clear: fraud in the service of effective altruism is unacceptable"

Was this avoidable?

- Nathan Young notes a few red flags in retrospect.

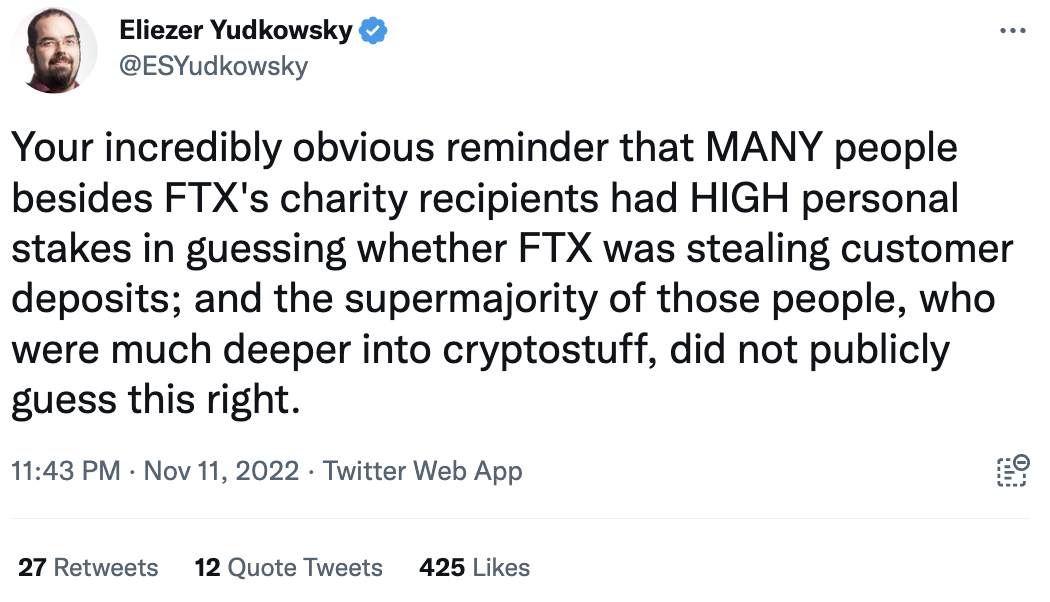

- But on the other hand:

Did leaders in EA know about this?

Will there be an investigation into whether EA leadership knew about this?

- Tyrone-Jay Barugh has suggested this, and Max Dalton (leader of CEA) says "this is something we’re already exploring, but we are not in a position to say anything just yet."

Why didn't the EA criticism competition reveal this?

- (This question was posed by Matthew Yglesias)

- Bruce points out that there were a number of relevant criticisms which questioned the role of FTX in EA (eg). However, there was no good system in place to turn this into meaningful change.

Does the end justify the means?

- Many people are reiterating that EA values go against doing bad stuff for the greater good.

- Will MacAskill compiled a list of times that prominent EAs have emphasised the importance of integrity over the last few years.

- Several people have pointed to this post by Eliezer Yudkowsky, in which he makes a compelling case for the rule "Do not cheat to seize power even when it would provide a net benefit."

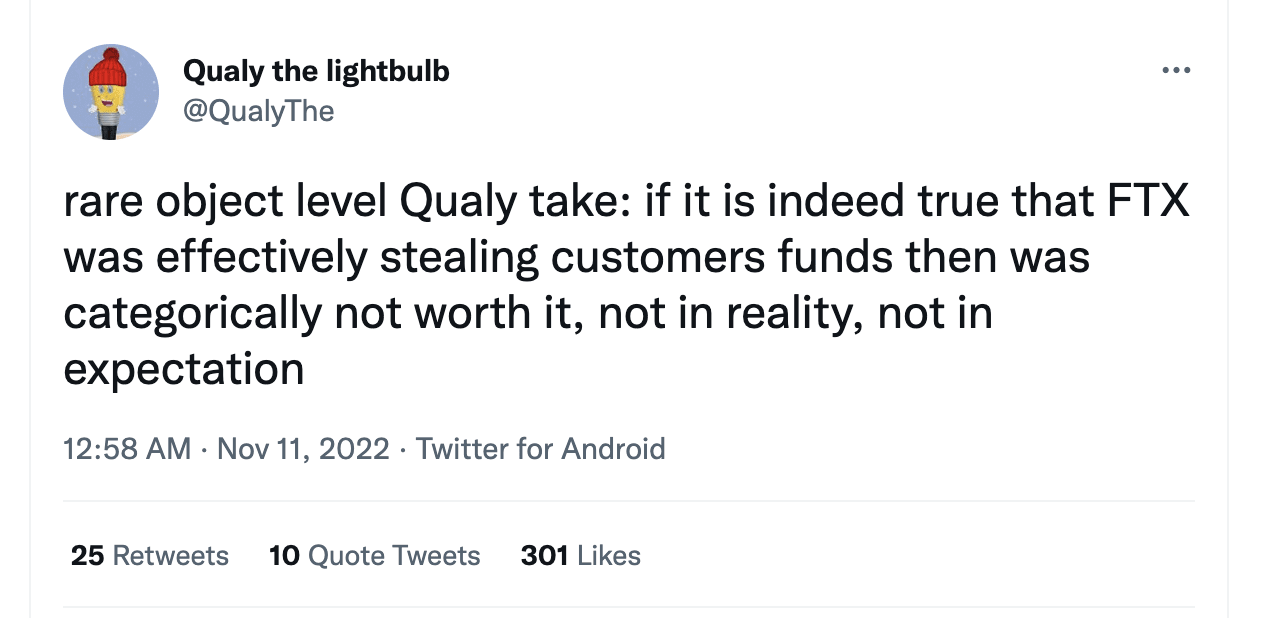

Did Qualy the lightbulb break character?

- Yes.

Nitpick: the sources for "SBF is bankrupt" and for "SBF will likely be convicted of a felony" lead to Nathan's post which I do not believe says either, and which has hundreds of comments so if it's one of them maybe link to that?

For the first of those, while I know FTX filed for bankruptcy (and had their assets frozen by the Bahamas), I haven't heard about SBF personally filing for bankruptcy (which doesn't rule it out, of course).

For the second, it is probably premature to assume he'll be convicted? This depends not only on what he did, but also on the laws and the authorities in several countries. On the other hand, I'm not actually knowledgeable about this, and here is a source claiming that he will probably go to jail.

Thanks for this; it's a nicely compact summary of a really messy situation that I can quickly share if necessary.

FYI: this post has been linked to by this article on Semafor, with the description:

I don't think you're from CEA... Right?

The "correction" is:

Which is of course patently false. What does it mean for a forum to put together an FAQ? Have Semafor ever used a forum before?

I got funding approved from FTX (but they didn't transfer any money to me). Will anyone else look at my funding application and consider funding it instead?

nitpick: It's Cari Tuna, not Kari Tuna

Nitpick: It is actually appropriate to carry tuna, but only if you are carrying distressed tuna to safety

Other possible question for this FAQ:

How much of EA's money came from FTX Future Fund?

As per the post Historical EA funding data from August 2022, the estimation for 2022 was:

* Total EA funds: 741 M$

* FTX Future Fund contribution: 262 M$ (35%)

If anyone has more up to date analysis, or better data, please report it.

And many thanks for the very clear and useful summary. Well done.

Expanding on what Nathan Young said about the dangers of wealthy celebrities mentioning Effective Altruism, I am wondering if it's in EA's best interest to certify donors and spokespeople before they mention EA. The term "effective altruism" itself is ambiguous, and having figures such as Musk or FTX use their own definitions without going through the rigor of studying the established definition only makes the problem worse. Certification (one that needs to be renewed annually, I must add) would ensure that well-known figures and the EA community agree on what EA really means. It would also add accountability to their pledges and donations.

Thank you for providing this FAQ. Maybe you want to add this:

A support network has been set up for people going through a rough patch due to the FTX situation.

In this table, you can find the experienced mental health supporters to talk to.

You can join the new Support Slack here.

People can share and discuss their issues, and get support from the trained helpers as well as peers.

Re: do the ends justify the means?

It is wholly unsurprising that public facing EAs are currently denying that ends justify means. Because they are in damage control mode. They are trying to tame the onslaught of negative PR that EA is now getting. So even if they thought that the ends did justify the means, they would probably lie about it. Because the ends (better PR) would justify the means (lying). So we cannot simply take these people at their word. Because whatever they truly believe, we should expect their answers to be the same.


Risky bets aren't themselves objectionable in the way that fraud is, but to just address this point narrowly: Realistic estimates put risky bets at much worse EV when you control a large fraction of the altruistic pool of money. I think a decent first approximation is that EA's impact scales with the logarithm of its wealth. If you're gambling a small amount of money, that means you should be ~indifferent to 50/50 double or nothing (note that even in this case it doesn't have positive EV). But if you're gambling with the majority of wealth that's predictably committed to EA causes, you should be much more scared about risky bets.

(Also in this case the downside isn't "nothing" — it's much worse.)
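To make the log-wealth point concrete, here is a minimal sketch. It assumes impact is exactly the logarithm of wealth, which is only the first approximation suggested above, and the function name `expected_log_utility_change` is made up for illustration. It computes how expected log-wealth changes when a given fraction of total wealth is staked on a fair 50/50 double-or-nothing bet.

```python
import math


def expected_log_utility_change(fraction: float) -> float:
    """Change in expected log-wealth from staking `fraction` of total
    wealth on a fair 50/50 double-or-nothing bet.

    Win:  wealth becomes W * (1 + fraction)
    Lose: wealth becomes W * (1 - fraction)
    The starting wealth W cancels out, so only the fraction matters.
    """
    if not 0 <= fraction < 1:
        # At fraction == 1 a loss leaves zero wealth, and log(0) is undefined.
        raise ValueError("fraction must be in [0, 1)")
    return 0.5 * math.log(1 + fraction) + 0.5 * math.log(1 - fraction)


if __name__ == "__main__":
    for f in (0.01, 0.5, 0.99):
        print(f"stake {f:.0%} of wealth: {expected_log_utility_change(f):+.5f}")
```

Under this assumption the change is never positive: it is about -0.00005 when staking 1% of wealth (hence "~indifferent"), about -0.14 at 50%, and it falls without bound as the stake approaches everything.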

I think marginal returns probably don't diminish nearly as quickly as the logarithm for neartermist cause areas, but maybe that's true for longtermist ones (where FTX/Alameda and associates were disproportionately donating), although my impression is that there's no consensus on this, e.g. 80,000 Hours has been arguing for donations still being very valuable.

(I agree that the downside (damage to the EA community and trust in EAs) is worse than nothing relative to the funds being gambled, but that doesn't really affect the spirit of the argument. It's very easy to underappreciate the downside in practice, though.)

Caroline Ellison literally says this in a blog post:

"If you abstract away the financial details there’s also a question of like, what your utility function is. Is it infinitely good to do double-or-nothing coin flips forever? Well, sort of, because your upside is unbounded and your downside is bounded at your entire net worth. But most people don’t do this, because their utility is more like a function of their log wealth or something and they really don’t want to lose all of their money. (Of course those people are lame and not EAs; this blog endorses double-or-nothing coin flips and high leverage.)"

So no, I don't think anyone can deny this.

This feels a bit unfair when people (i) have argued that utility and integrity will correlate strongly in practical cases (why use "perfectly" as your bar?), and (ii) that they will do so in ways that will be easy to underestimate if you just "do the math".

You might think they're mistaken, but some of the arguments do specifically talk about why the "assume 0 correlation and do the math"-approach works poorly, so if you disagree it'd be nice if you addressed that directly.

Fair.

TBH, this has put me off of utilitarianism somewhat. Those silly textbook counter-examples to utilitarianism don't look quite so silly now.

Except the textbook literally warns about this sort of thing:

Again, warnings against naive utilitarianism have been central to utilitarian philosophy right from the start. If I could sear just one sentence into the brains of everyone thinking about utilitarianism right now, it would be this: If your conception of utilitarianism renders it *predictably* harmful, then you're thinking about it wrong.

Also note Sam's own blog

Interesting, thanks. This quote from SBF's blog is particularly revealing:

Here SBF seems to be going full throttle on his utilitarianism and EV reasoning. It's worth noting that many prominent leaders in EA also argue for this sort of thing in their academic papers (their public facing work is usually more tame).

For example, here's a quote from Nick Bostrom (head honcho at the Future of Humanity Institute). He writes:

That sentence is in the third paragraph.

Then you have Will MacAskill and Hilary Greaves saying stuff like:

I think the quotes from Sam's blog are very interesting and are pretty strong evidence for the view that Sam's thinking and actions were directly influenced by some EA ideas.

I think the thinking around EA leadership is way too premature and presumptive. There are many years (like a decade?) of EA leadership generally being actually good people and not liars. There are also explicit calls in "official" EA sources that specifically say that the ends do not justify the means in practice, honesty and integrity are important EA values, and pluralism and moral humility are important (which leads to not doing things that would transgress other reasonable moral views).

Most of the relevant documentation is linked in Will's post.

Edit: After reading the full blog post, the quote is actually Sam presenting the argument that one can calculate which cause is highest priority, the rest be damned.

He goes on to say in the very next paragraph:

He concludes the post by stating that the multiplicative model, which he thinks ...

Hi @Hamish Doodle. My post cited here with regards to "If you got money from FTX, do you have to give it back?" and "If you got money from FTX, should you spend it?" was intended to inform people about not spending FTX money (if possible) until Molly's announced EA forum post. She has now posted it here.

Could you please add/link that source? I believe the takeaway is similar but it's a much more informative post. The key section is:

https://www.theguardian.com/us-news/2022/dec/12/former-ftx-ceo-sam-bankman-fried-arrested-in-the-bahamas-local-authorities-say

How much taxpayer money was funnelled through Ukraine to FTX and back to American politicians?