I wrote a reply to the Bentham Bulldog argument that has been going mildly viral. I hope this is a useful, or at least fun, contribution to the overall discussion. Intro/summary below, full post on Substack.

----------------------------------------

“One pump of honey?” the barista asked.

“Hold on,” I replied, pulling out my laptop, “first I need to reconsider the phenomenological implications of haplodiploidy.”

Recently, an article arguing against honey has been making the rounds. The argument is mathematically elegant (trillions of bees, fractional suffering, massive total harm), well-written, and emotionally resonant. Naturally, I think it's completely wrong.

Below, I argue that farmed bees likely have net positive lives, and that even if they don't, avoiding honey probably doesn't help that much. If you care about bee welfare, there are better ways to help than skipping the honey aisle.

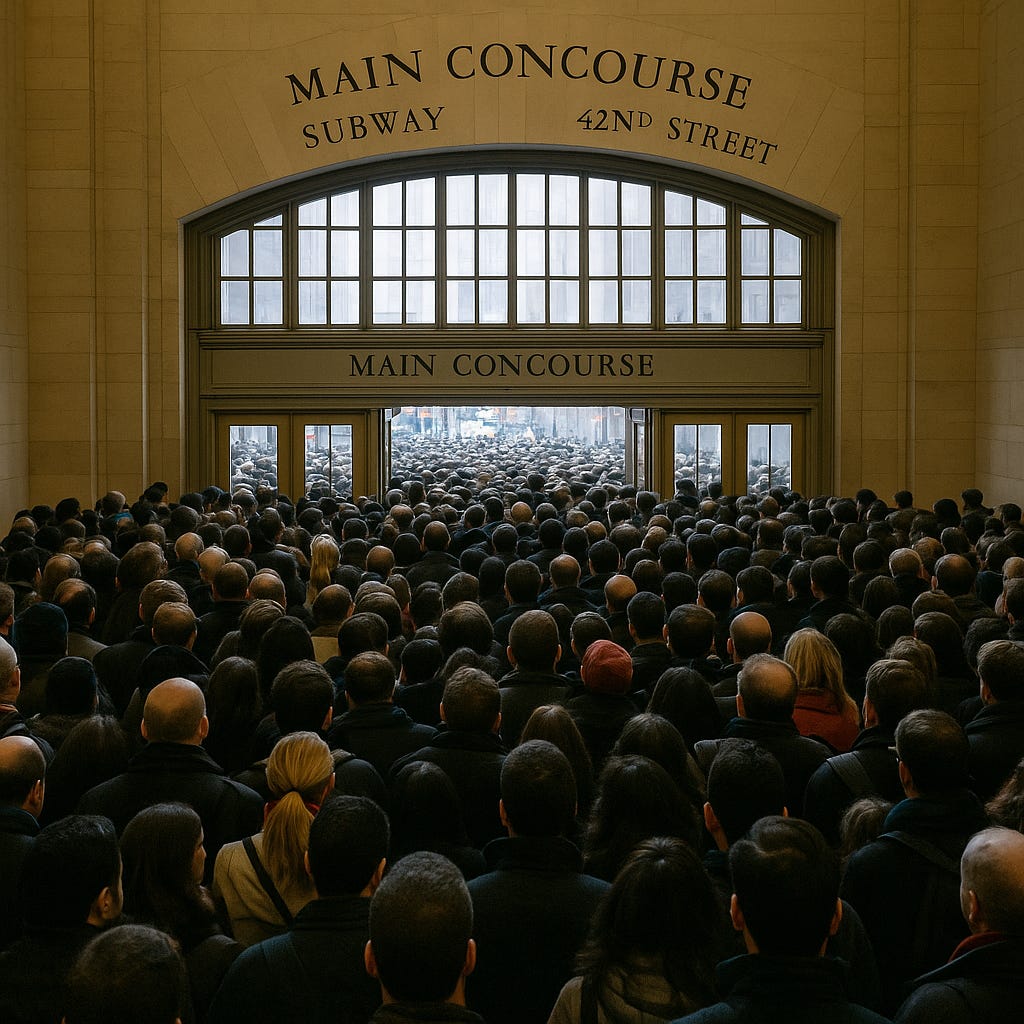

Source

Bentham Bulldog’s Case Against Honey

Bentham Bulldog, a young and intelligent blogger/tract-writer in the classical utilitarian tradition, lays out a case for avoiding honey. The case itself is long and somewhat emotive, but Claude summarizes it thus:

P1: Eating 1kg of honey causes ~200,000 days of bee farming (vs. 2 days for beef, 31 for eggs)

P2: Farmed bees experience significant suffering (30% hive mortality in winter, malnourishment from honey removal, parasites, transport stress, invasive inspections)

P3: Bees are surprisingly sentient - they display all behavioral proxies for consciousness, and experts estimate they suffer at 7-15% of the intensity of humans

P4: Even if bee suffering is discounted heavily (0.1% of chicken suffering), the sheer numbers make honey consumption cause more total suffering than other animal products

C: Therefore, honey is the worst commonly consumed animal product and should be avoided
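To make the arithmetic behind P1 and P4 concrete, here is a rough sketch of my own, not Bentham Bulldog's actual calculation. It assumes the quoted farming days are all per kilogram, that harm scales linearly with days farmed, and that a laying hen's day counts as 1.0 "chicken-equivalent" day. The function name and the dictionaries are mine. Beef is left out because the summary gives no cow-to-chicken weight.

```python
# Farming days per kg of product, as quoted in P1.
FARMING_DAYS_PER_KG = {"honey": 200_000, "eggs": 31}

# Suffering per animal-day relative to a chicken.
# 0.001 is the heavy discount from P4 (0.1% of chicken suffering);
# 1.0 for eggs is an assumption: a hen-day counts as one chicken-day.
WEIGHT_VS_CHICKEN = {"honey": 0.001, "eggs": 1.0}


def chicken_equivalent_days(product: str) -> float:
    """Farming days per kg, scaled by the per-day suffering weight."""
    return FARMING_DAYS_PER_KG[product] * WEIGHT_VS_CHICKEN[product]


if __name__ == "__main__":
    for product in FARMING_DAYS_PER_KG:
        days = chicken_equivalent_days(product)
        print(f"{product}: {days:,.0f} chicken-equivalent days per kg")
```

On these inputs honey still comes out ahead of eggs even after the 1,000x discount, which is the whole force of P4. Note that the sketch bakes in the assumption that every farming day is net negative, and that is exactly the premise I dispute.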

The key move is combining scale (P1) with evidence of suffering (P2) and consciousness (P3) to reach a mathematical conclusion (

I know there's:

I have thought about using the different options, and some of the concerns are:

If you streamline the controls required for a specific application, for example web browsing, then any peripheral option becomes better.

People use the mouse when they would be better off using keyboard shortcuts or some add-in or even another software program.

With the right software or configuration, any solution becomes more useful or attractive.