I want to share some results from my MSc dissertation on AI risk communication, conducted at the University of Oxford.

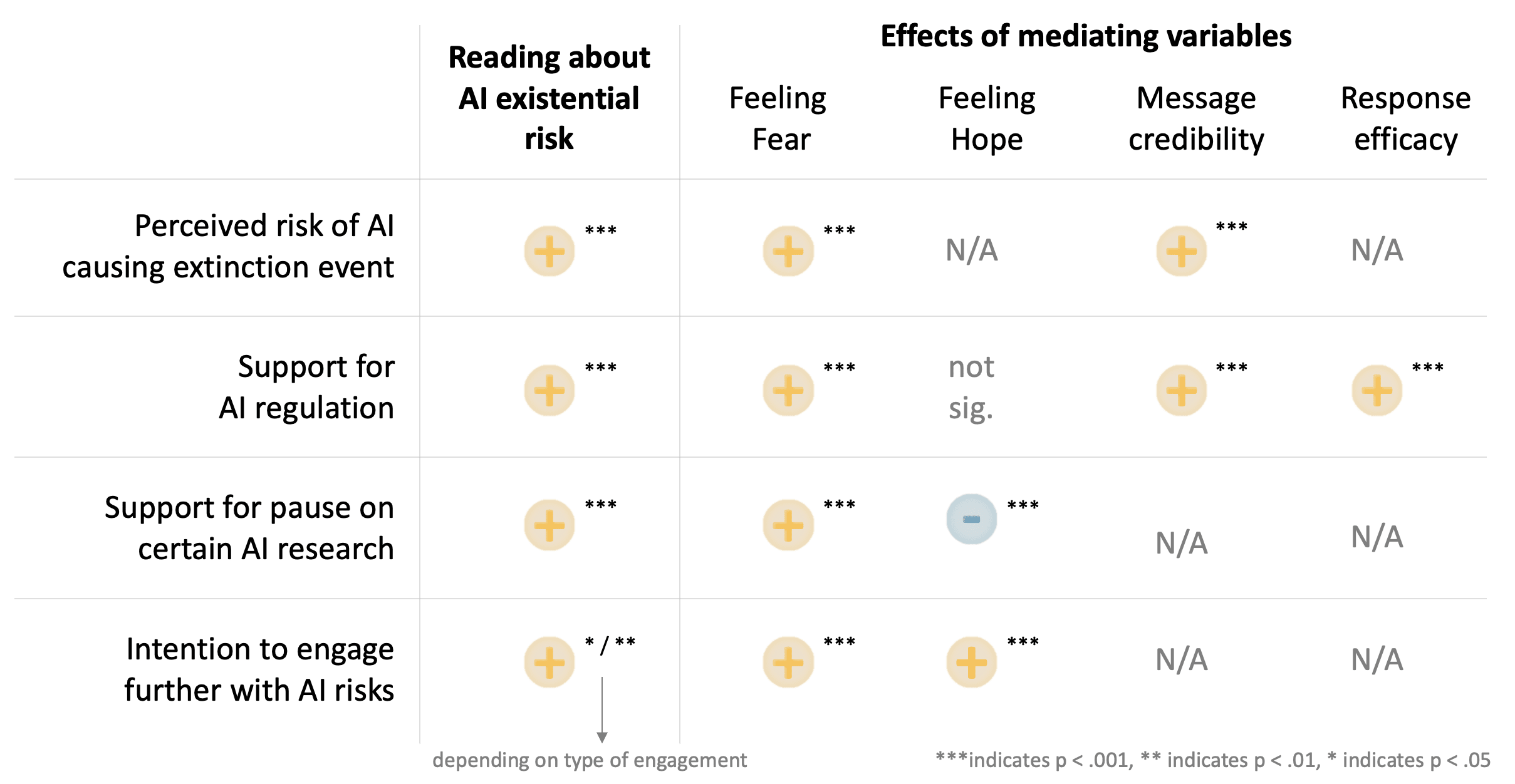

TLDR: In exploring the impact of communicating AI x-risk with different emotional appeals, my study of 1,200 Americans revealed underlying factors that influence public perception in several areas:

- For raising risk perceptions, fear and message credibility are key

- To create support for AI regulation, beyond inducing fear, conveying the effectiveness of potential regulation seems to be even more important

- In gathering support for a pause in AI development, fear is a major driver

- To prompt engagement with the topic (reading up on the risks, talking about them), strong emotions, both hope- and fear-related, are drivers

AI x-risk intro

Since the release of ChatGPT, many scientists, software engineers and even leaders of AI companies themselves have increasingly spoken up about the risks of emerging AI technologies. Some voices focus on immediate dangers such as the spread of fake news images and videos, copyright issues and AI surveillance. Others emphasize that, besides immediate harm, AI could cause global-scale disasters as it develops further, potentially even wiping out humanity. How would that happen? There are roughly two routes. First, malicious actors such as authoritarian governments could use AI, e.g. for lethal autonomous weapons or to engineer new pandemics. Second, as AI gets more intelligent, some fear it could get out of control and eradicate humans by accident. This sounds crazy, but some of the people creating AI are saying the technology is inherently unpredictable and that such an insane disaster could well happen in the future.

AI x-risk communication

There are now many media articles and videos talking about the risks of AI. Some announce the end of the world, some say the risks are overplayed, and some argue for stronger safety measures. So far, there is almost no research on how effective these articles are in changing public opinion, or on the differences between various emotional appeals. Do people really consider the risks from AI greater after reading an article about them? Are they more persuaded if the article predicts doomsday or if it keeps a more hopeful tone? Which type of article is more likely to induce support for regulation? What motivates people to inform themselves further and discuss the topic with others?

Study setup

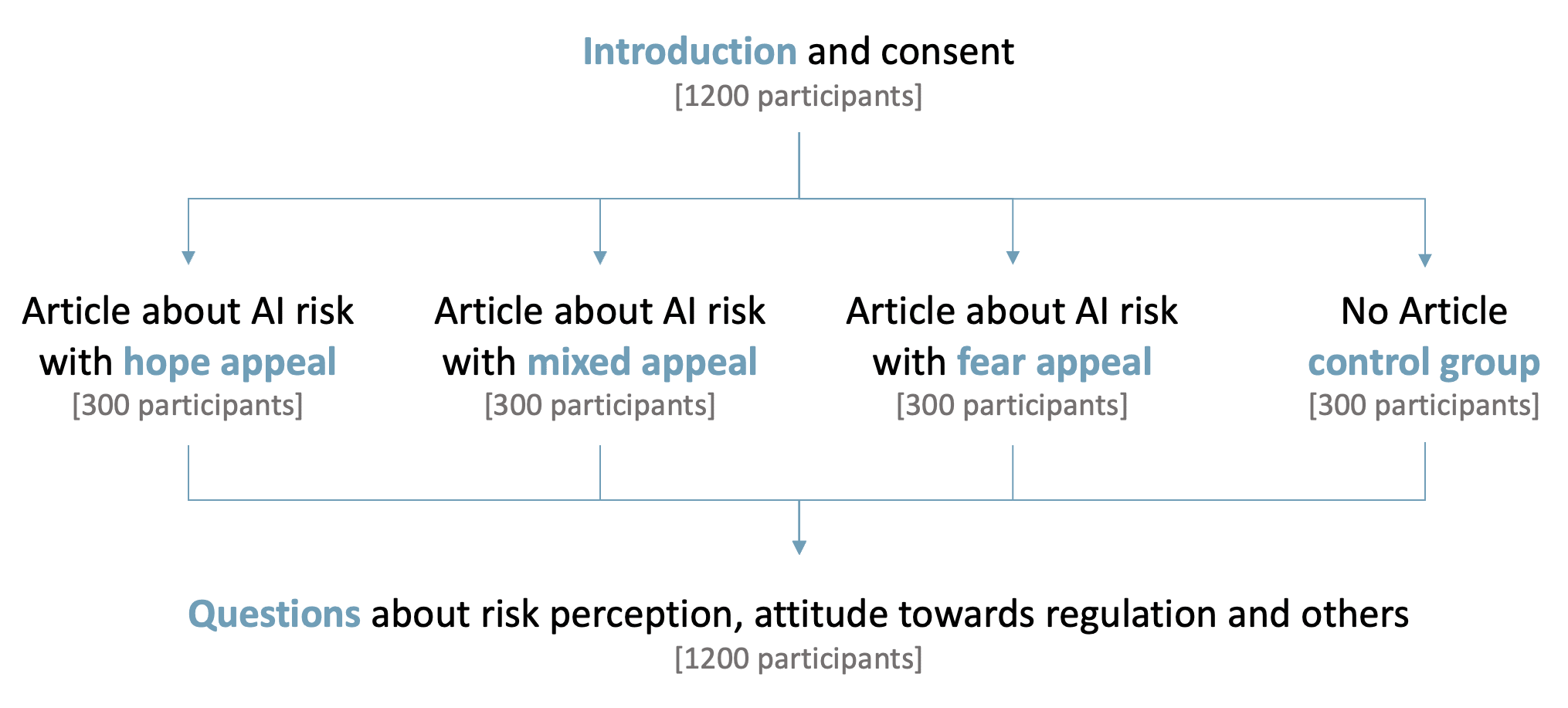

The core of the study was a survey experiment with 1,200 Americans. The participants were randomly allocated to four groups: one control group and three experimental groups, each getting one of three articles on AI risk. All three versions explain that AI seems to be advancing rapidly and that future systems may become so powerful that they could lead to catastrophic outcomes when used by bad actors (misuse) or when getting out of control (misalignment). The fear version focuses solely on the risks; the hope version takes a more optimistic view, highlighting promising risk mitigation efforts; and the mixed version combines the two, transitioning from fear to hope. After reading the article, participants indicated the emotions they felt while reading it (as a manipulation check and to separate the emotional appeal from other differences between the articles) and stated their views on various AI risk topics. The full survey, including the articles and the questions, can be found in the dissertation starting on page 62 (link at the bottom of the post).
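For readers less familiar with survey experiments, here is a minimal sketch of what a four-way random allocation can look like. It assumes equal group sizes (the post does not say whether the groups were balanced), and the function name `allocate` and the seed are hypothetical; this is an illustration, not the mechanism the survey platform actually used.

```python
import random
from collections import Counter

# Condition labels taken from the study description; equal group sizes are an assumption.
CONDITIONS = ["control", "fear", "hope", "mixed"]


def allocate(participant_ids, conditions=CONDITIONS, seed=None):
    """Balanced random allocation: cycle through the conditions, then shuffle the labels."""
    rng = random.Random(seed)
    # Each condition appears as equally often as possible (differs by at most one).
    labels = [conditions[i % len(conditions)] for i in range(len(participant_ids))]
    rng.shuffle(labels)
    return dict(zip(participant_ids, labels))


assignment = allocate(list(range(1200)), seed=42)
print(Counter(assignment.values()))
```

With 1,200 participants and four conditions, each group receives 300 people in a random order. Shuffling a balanced list of labels, rather than drawing each participant's condition independently, guarantees equal group sizes while keeping the assignment random.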

Findings

Overview of results

1. Risk perception

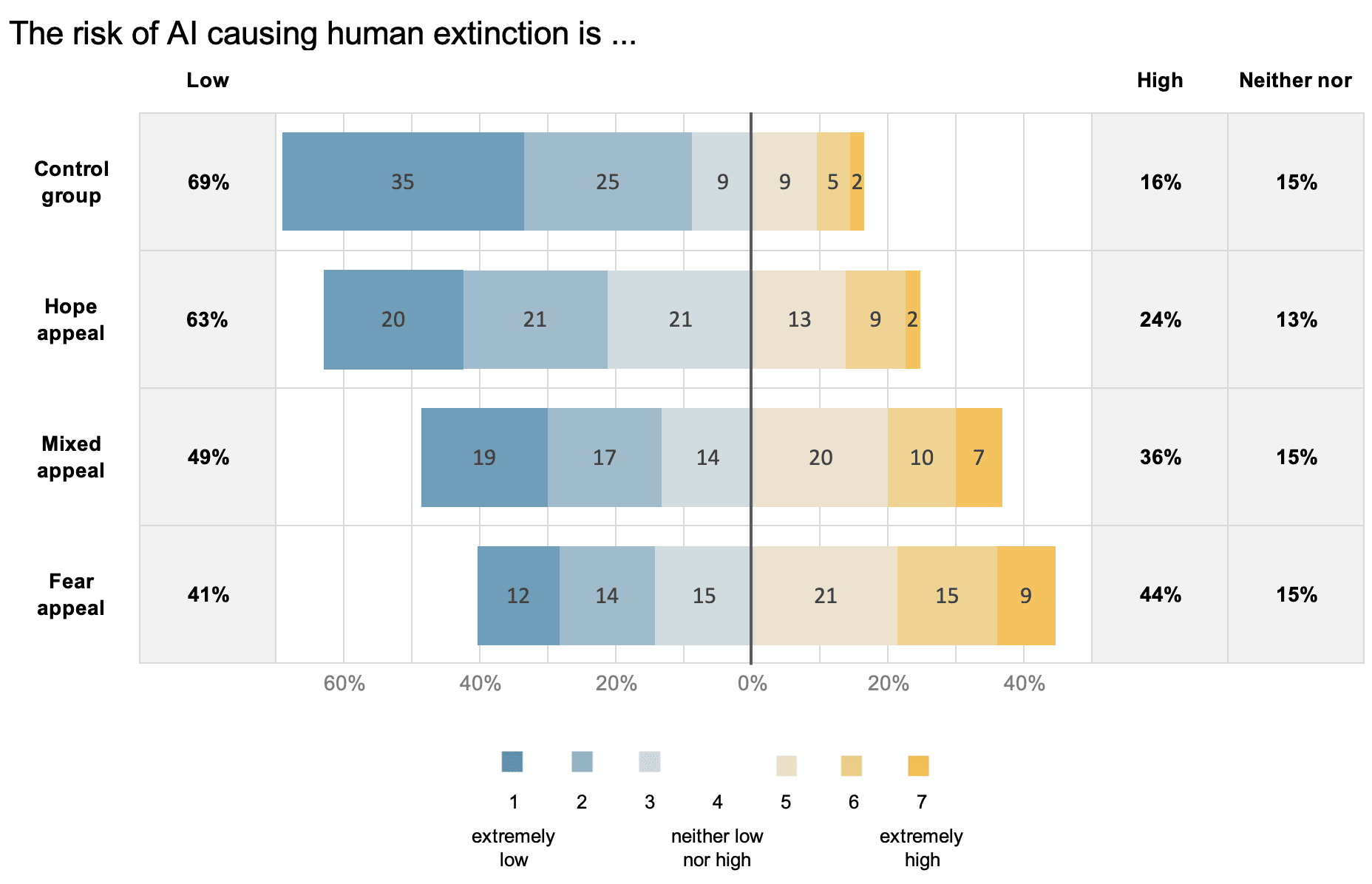

To measure risk perception, I asked participants to assess the level of AI risk (both existential risk and large-scale risk) on a scale from 1 (extremely low) to 7 (extremely high), with a midpoint of 4 (neither low nor high). In addition, modelled after the Rethink Priorities opinion poll, I asked participants to estimate the likelihood of AI risk (existential and large-scale risk, each within 5 years and within 10 years). All risk perception measures related to x-risk showed the same statistically significant difference between groups. The answers for AI x-risk are shown below; the statistical analysis and descriptives for the other measures can be found in the full paper.

The fear appeal increased risk perception the most, followed by the mixed appeal and then the hope appeal. Analyses show that fearful emotions are indeed the main mechanism at play, counteracted by a second mechanism, message credibility, which was lower for the fear appeal article. The differences in the distribution between the extremes (1 and 7) and the middle values (3, 4, 5) are also interesting. The fear article, for example, had a particularly strong effect in reducing the share of 1s (extremely low risk) to a third of the control group's share, but also in increasing the share of 7s (extremely high risk) from 2% to 9%. AI risk communicators should be clear about what they want to achieve (raising risk perceptions as much as possible, or only to a certain level).
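The "mechanism" language above refers to mediation analysis: the article (treatment) changes an emotion (mediator), which in turn changes risk perception (outcome). Below is a minimal sketch of the classic single-mediator, product-of-coefficients approach using ordinary least squares. It illustrates the general technique only and is not a reproduction of the dissertation's analysis; the data are simulated, and the coefficients (1.0, 0.2, 0.5) and all function names are made up for illustration.

```python
import random
import statistics


def ols_slope(x, y):
    """Slope from a simple least-squares regression of y on x."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


def mediation(x, m, y):
    """Single-mediator, product-of-coefficients mediation (point estimates only).

    a        : effect of treatment x on mediator m        (m ~ x)
    b        : effect of m on outcome y, holding x fixed   (y ~ x + m)
    direct   : effect of x on y, holding m fixed           (c' in y ~ x + m)
    indirect : a * b, the part of the effect running through m
    """
    a = ols_slope(x, m)
    # Centre the variables so the two-predictor regression needs no intercept.
    mx, mm, my = statistics.fmean(x), statistics.fmean(m), statistics.fmean(y)
    xc = [v - mx for v in x]
    mc = [v - mm for v in m]
    yc = [v - my for v in y]
    sxx = sum(v * v for v in xc)
    smm = sum(v * v for v in mc)
    sxm = sum(p * q for p, q in zip(xc, mc))
    sxy = sum(p * q for p, q in zip(xc, yc))
    smy = sum(p * q for p, q in zip(mc, yc))
    # Solve the 2x2 normal equations for (c', b) with Cramer's rule.
    det = sxx * smm - sxm ** 2
    direct = (smm * sxy - sxm * smy) / det
    b = (sxx * smy - sxm * sxy) / det
    return {"a": a, "b": b, "indirect": a * b, "direct": direct}


# Simulated data only (hypothetical effect sizes, not study results).
rng = random.Random(0)
x = [rng.randint(0, 1) for _ in range(1200)]                          # 1 = fear article, 0 = control
m = [1.0 * xi + rng.gauss(0, 1) for xi in x]                          # self-reported fear
y = [0.2 * xi + 0.5 * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]   # risk perception
result = mediation(x, m, y)
print({k: round(v, 2) for k, v in result.items()})
```

With OLS on the same sample, the total effect `ols_slope(x, y)` equals `direct + indirect` exactly, which is a useful sanity check. A real analysis would add bootstrap confidence intervals for the indirect effect, control variables, and several mediators at once (for example fear and message credibility, which in this study pulled in opposite directions).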

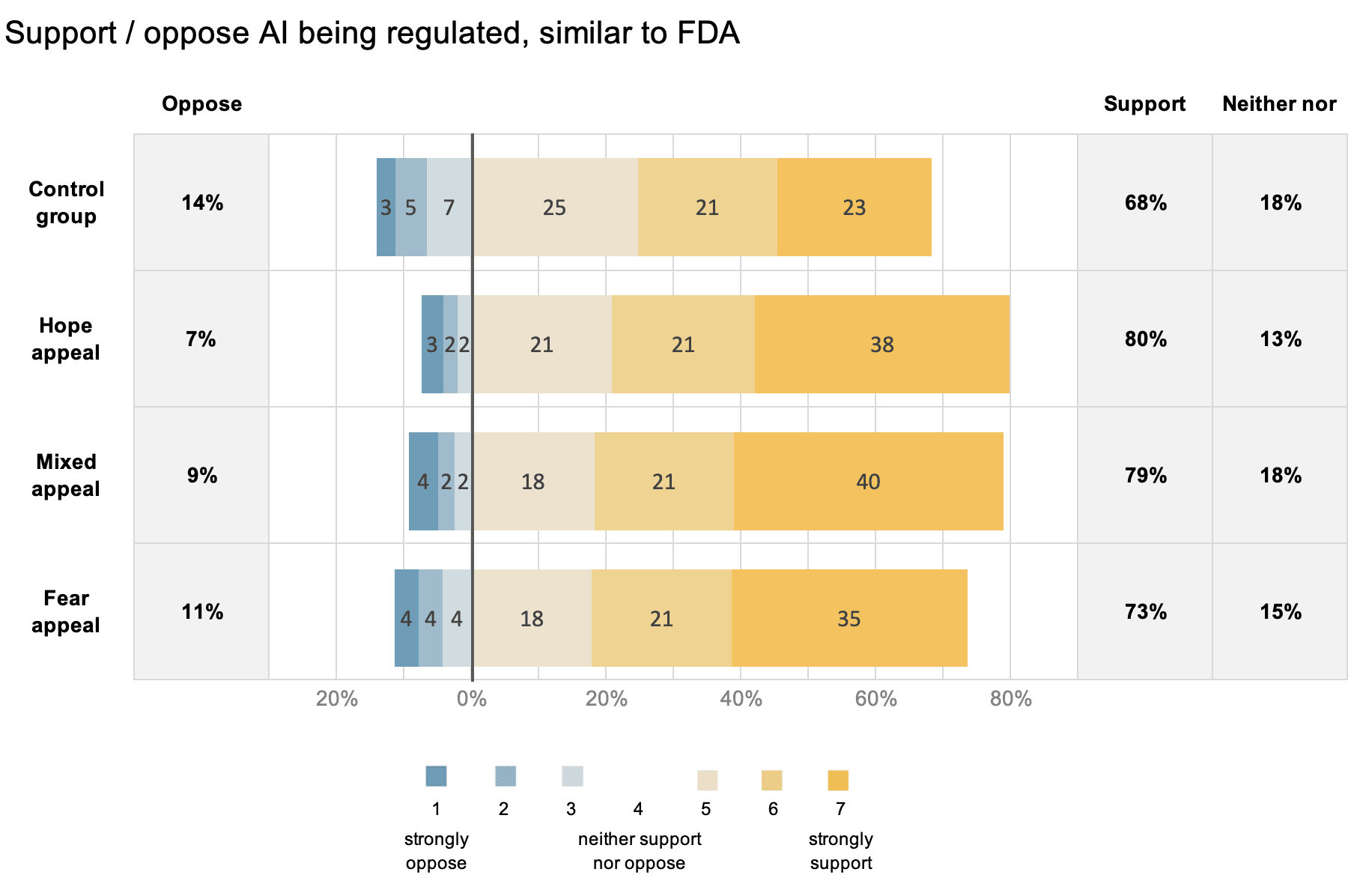

2. AI regulation

Following the framing of a 2023 Rethink Priorities poll, I asked participants if they would support AI being regulated by a federal agency, similar to how the FDA regulates the approval of drugs and medical devices in the US. While all experimental groups (fear, hope and mixed) show significantly higher levels of support for AI regulation than the control group, the mean differences between the three experimental groups are not significant. The descriptive statistics (see below) show the highest support in the hope group. However, this effect does not seem to stem from stronger feelings of hope (rather than fear) per se. In fact, fear is a positive predictor of support for AI regulation. The overall stronger effect of the hope appeal can be (at least partially) attributed to higher perceived efficacy of regulation (i.e., how effective participants think AI regulation would be) and higher message credibility (how credible participants found the article).

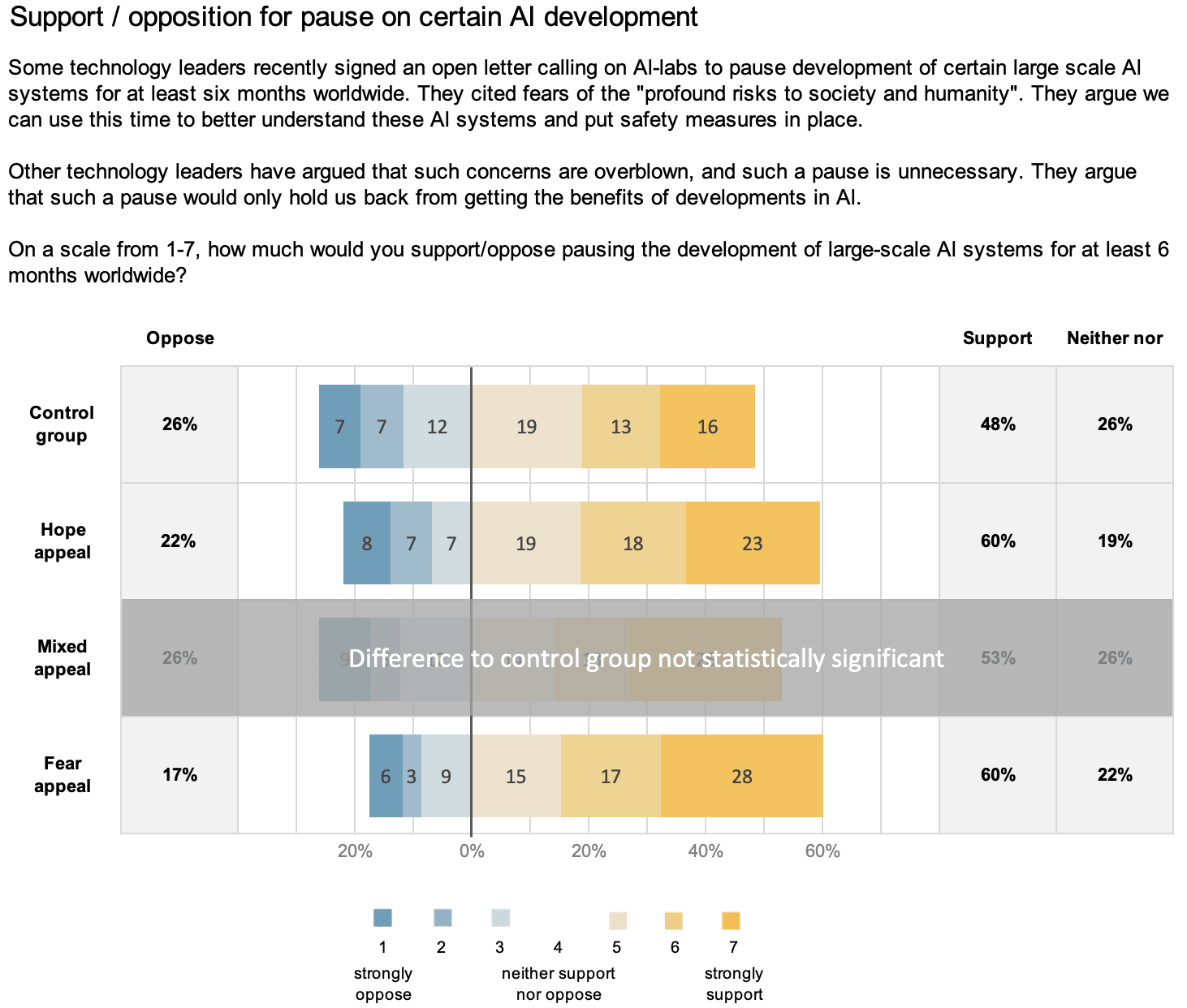

3. AI pause

I also asked participants about their support for a potential six-month pause (moratorium) on certain kinds of AI development. For comparability, I again mirrored the framing of a 2023 Rethink Priorities poll, including a short overview of different opinions on such a moratorium. The fear appeal article had the strongest effect, and both fearful and hopeful emotions acted as mediators (positive and negative, respectively). Notably, older participants and female participants were also significantly more likely to support such a pause.

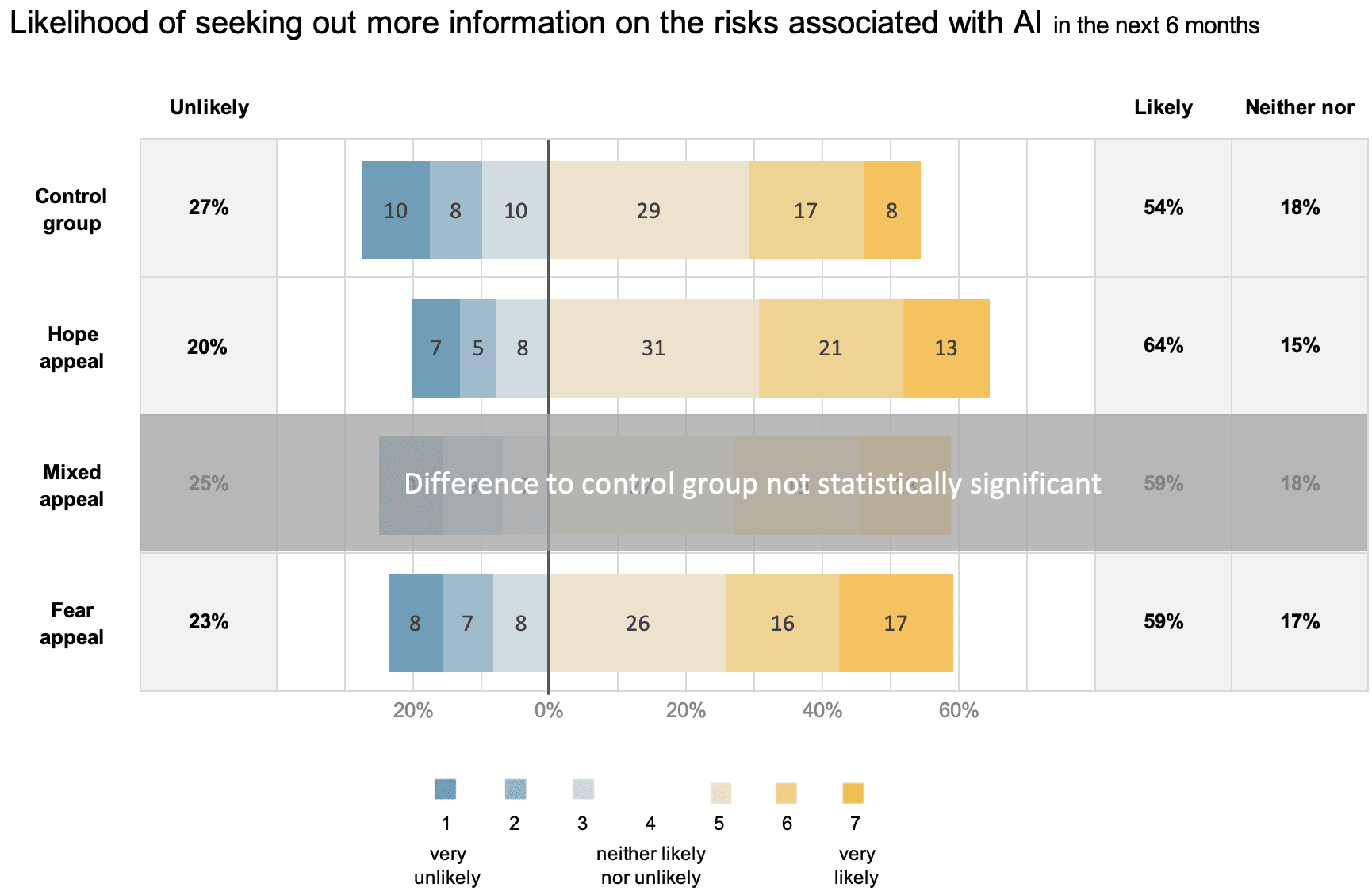

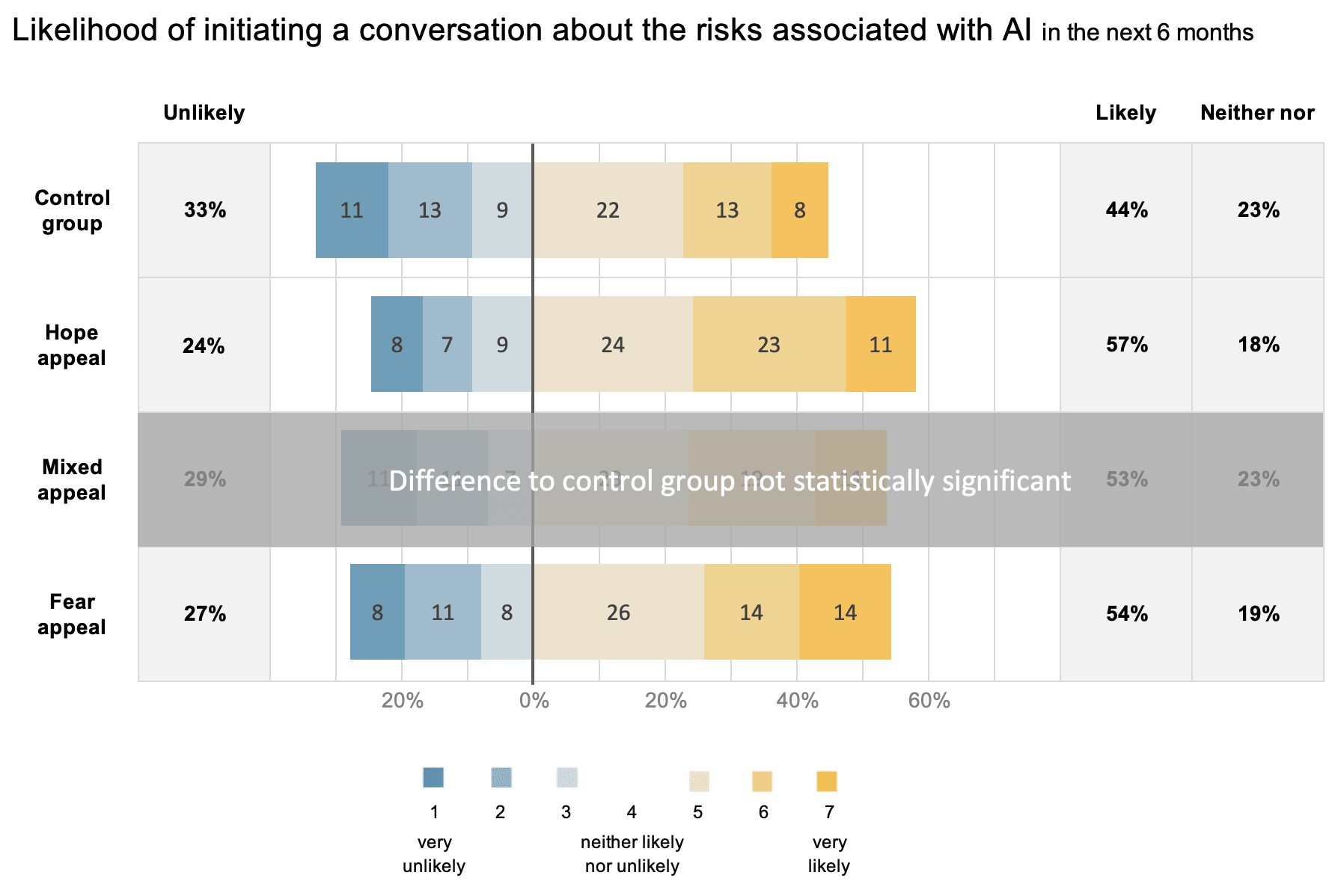

4. Further engagement with AI risks

Finally, I wanted to see whether (and which) articles shape participants' intentions to engage further with the topic. While the effect sizes were relatively small, participants who read the hope and fear appeals had significantly stronger intentions than the control group to seek out more information and to initiate a conversation about the risks associated with AI in the next 6 months. Here, any induced emotions, fearful or hopeful, increased the stated likelihood of engaging in those actions.

Link to complete study

The full dissertation has not been published yet, but you can access the submitted version here. I want to note a few things as a disclaimer. First, I was doing an MSc in Sustainability, Enterprise and the Environment and had to link the dissertation to the topic of sustainability. The paper therefore makes a (slightly far-fetched) bridge to the Sustainable Development Goals. Second, due to lack of experience and time (this was my first study of this kind and I only had the summer to write it), the work is highly imperfect. I tested too many things without robust hypotheses; I had a hard time choosing which statistical analyses to run, which led to a bit of a mess there; and I wish I had had time to write a better discussion of the results. So please interpret the results with some caution.

In any case, I'd be glad to hear any reactions, comments or ideas in the comments!

Also, I'm happy to share the data from the study if you're interested in doing further analyses; just message me.

PS: I'm super grateful for the financial support provided by Lightspeed Grants, which was crucial in achieving the necessary participant number and diversity for my study.

Great work, thanks a lot for doing this research! As you say, this is still very neglected. Also happy to see you're citing our previous work on the topic. And interesting finding that fear is such a driver! A few questions:

- Could you share which three articles you've used? Perhaps this is in the dissertation, but I didn't have the time to read that in full.

- Since it's only one article per emotion (fear, hope, mixed), perhaps some other article property (other than emotion) could also have led to the difference you find?

- What follow-up research would you recommend?

- Is there anything orgs like ours (Existential Risk Observatory), or these days MIRI, which also focuses on comms, should do differently?

As a side note, we're conducting research right now on where awareness has gone since our first two measurements (7% and 12% in early and mid '23, respectively). We might also look into the existence and dynamics of a tipping point.

Again, great work, hope you'll keep working in the field in the future!

Thank you, Otto!

All the best for your ongoing and future research, excited to read it once it's out.

It looks like the 3 articles are in the appendix of the dissertation, on pages 65 (fear, Study A), 72 (hope, Study B), and 73 (mixed, Study C).

Johanna - thanks very much for sharing this fascinating, important, and useful research! Hope lots of EAs pay attention to it.

Executive summary: A study of 1,200 Americans found that communicating AI existential risk with a fearful tone raises risk perceptions the most, while a more hopeful appeal creates greater support for AI regulation.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

Great work, this is really interesting, particularly the responses on AI regulation support. I wonder if that could be broken down further in future research, or whether it's mostly blanket support. Anyway, great job!

This is a great study of a very important question.

Look at politics today and you see that fear is a very powerful motivator. I would say that most voters in the US presidential election seem to be motivated most obviously by fear of what would ensue if the other side wins. Each echo chamber has built up a mental image of a dystopian future which makes them almost zealots, unwilling to listen to reason, unable even to think calmly.

A big challenge that we face, however, with both climate change and AI risk, is that the risks we speak of are quite abstract. Fears that really work on our emotions tend to be about very tangible things: snakes, spiders, knives.

Intellectually I know that the risk of a superintelligent AI taking over the world is a lot scarier than a snake, but I know which one would immediately drive me to act to eliminate the risk.

IMHO there is a massive opportunity for someone to research the best way to provoke a more emotional, tangible fear of AI risk (and of climate risk). This project you've done shows that this is important, that endless optimism is not the best way forward. We don't want people paralysed by fear, but right now we have the opposite, we have everyone moving forward blindly, just assuming that the risk will go away.

Thanks for sharing, this is amazing stuff! May I ask the name of the software you used to illustrate the results?

Thanks gergo! At the time of writing this post I no longer had the software licenses from the University, so I actually just used Excel for the graphs (with dynamic formulas & co).

Wow, even more impressive then haha!