Epistemic Status: Exploratory. This is just a brief sketch of an idea that I thought I’d post rather than do nothing with. I’ll expand on it if it gains traction.

As such, feedback and commentary of all kinds are encouraged.

TL;DR

- There is a looming energy crisis in AI development.

- It is unlikely that this crisis can be solved without using copious amounts of fossil fuels.

- This scenario presents a strategic opportunity for the AI slowdown advocacy movement to benefit from the substantial influence of the climate advocacy movement.

- Misalignment of goals between the movements is a risk.

- This strategy is a high-stakes bet that requires careful thought but likely demands immediate action.

The Situation

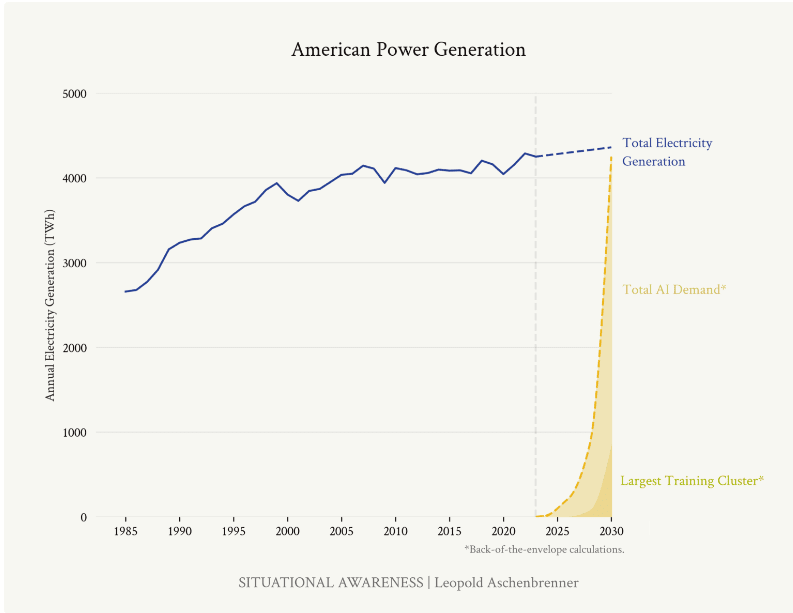

There is a looming energy crisis in AI development. Recent projections of the energy requirements for the next generations of frontier AI systems are nothing short of alarming. Consider the estimates from former OpenAI researcher Leopold Aschenbrenner's article on the next likely training runs:

- By 2026, compute for frontier models could consume about 5% of US electricity production.

- By 2028, this could rise to 20%.

- By 2030, it might require the equivalent of 100% of current US electricity production.
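To make these shares more concrete, here is a rough back-of-the-envelope sketch that converts them into annual energy and average continuous power. The baseline of about 4,000 TWh per year for US electricity generation is my own round-number assumption, not a figure from the article, and the function name `implied_demand` is purely illustrative.

```python
# Assumption (not from the article): US electricity generation is taken as a
# round 4,000 TWh per year, purely for illustration.
US_GENERATION_TWH = 4000.0
HOURS_PER_YEAR = 8760

# Shares of current US electricity production quoted in the list above.
PROJECTED_SHARE = {2026: 0.05, 2028: 0.20, 2030: 1.00}


def implied_demand(share, total_twh=US_GENERATION_TWH):
    """Return (annual energy in TWh, average continuous power in GW)."""
    energy_twh = share * total_twh
    # Convert TWh to GWh, then divide by the hours in a year to get GW.
    avg_power_gw = energy_twh * 1000 / HOURS_PER_YEAR
    return energy_twh, avg_power_gw


for year, share in sorted(PROJECTED_SHARE.items()):
    twh, gw = implied_demand(share)
    print(f"{year}: {share:.0%} of US production -> {twh:,.0f} TWh/yr, ~{gw:,.0f} GW average")
```

Changing `US_GENERATION_TWH` to a more precise figure shifts the absolute numbers but not the overall picture: each step is a multiple of the previous one.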

The implications are stark: the rapid advancement of AI is on a collision course with energy infrastructure and, by extension, climate goals.

It is unlikely that this crisis can be solved without using copious amounts of fossil fuels. Given the projected timelines for AI development and the current state of renewable energy infrastructure, it's highly improbable that this enormous energy demand can be met without heavy reliance on fossil fuels. This presents a critical dilemma:

1. Rapid AI advancement could significantly increase fossil fuel consumption, directly contradicting global climate goals.

2. Attempts to meet this energy demand with renewables would require an unprecedented and likely infeasible acceleration in green energy infrastructure development.

This scenario creates a natural alignment of interests between climate advocates and those concerned about the risks of rapid AI development.

The Opportunity

This scenario presents a strategic opportunity for AI slowdown advocacy. The climate advocacy movement has spent decades building powerful infrastructure for public awareness, policy influence, and corporate pressure. This existing framework presents a unique opportunity for AI slowdown advocates:

- Megaphone Effect: By framing AI development as a major climate issue, we can tap into the vast reach and resources of climate advocacy groups.

- Policy Pressure: Climate advocates have experience in pushing for regulations on high-emission industries. This expertise could be redirected towards policies that limit large-scale AI training runs.

- Corporate Accountability: Many tech companies have made public commitments to sustainability. Highlighting the conflict between these commitments and energy-intensive AI development could create internal pressure for a slowdown.

- Public Awareness: The climate crisis is well-understood by the general public (and policymakers). Linking AI development to increased emissions could rapidly build support for AI regulation.

The Risk

Misalignment of goals is a risk. While this strategy offers significant potential, it's important to consider the drawbacks that could follow from a misalignment of goals: climate advocates may push for solutions (like rapid green energy scaling) that don't align with AI slowdown goals. In practice, these potential misalignments seem manageable compared to the strategic benefits. The climate advocacy movement is closely linked to the sustainability movement, which would find the idea of doubling energy consumption by any means anathema. Nevertheless, the two movements have different worldviews and goals, and this should be kept in mind when pursuing this strategy.

Conclusion

Collaborating with the climate advocacy movement is a high-stakes bet that requires careful thought but likely demands immediate action. With potentially short AI development timelines and the current intractability of AI slowdown advocacy, we must seriously consider high-payoff strategies like this – and fast.

Something like this:

I think an obvious risk to this strategy is that it would further polarize AI risk discourse and make it more partisan, given how strongly the climate movement is aligned with Democrats.

I think pro-AI forces can reasonably claim that the long-term impacts of accelerated AI development are good for the climate (faster technological progress and expanded industrial capacity to build clean energy sooner), so I think the core substantive argument is actually quite weak, and transparently so. I think one needs weird assumptions to really believe that short-term emissions from getting to AGI would matter from a climate perspective. For example, if you believed the US would need to double emissions for a decade to get to AGI, you would probably still want to bear that cost given how much easier it would make global decarbonization, even if you only looked at it through a climate-maximalist lens.

National security and competitiveness considerations regularly trump climate considerations, and this was true even at a time that was more climate-focused than the next couple of years are likely to be. Given that, it seems hard to imagine this would really constrain things. I find it very hard to imagine a situation where a significant share of US policymakers decide they really need to get behind accelerating AGI, but then don't do it because some climate activists protest.

So, to me, it seems like a very risky strategy with limited upside, but plenty of downside in terms of further polarization and calling a bluff on what is ultimately an easy-to-disarm argument.