Seth Ariel Green 🔸

Bio

I am a Research Scientist at the Humane and Sustainable Food Lab at Stanford.

How others can help me

The lab I work at is seeking collaborators! More here.

How I can help others

If you want to write a meta-analysis, I'm happy to consult! I think I know something about what kinds of questions are good candidates, what your default assumptions should be, and how to delineate categories for comparisons.

> The key point, though, is that cases like Ocado and Albert Heijn are exceptions, not the norm. Most online supermarkets lack the resources and incentives to systematically review and continuously update tens of thousands of SKUs for vegan status.

I'd go a step further: I suspect many supermarkets are going to perceive an incentive not to do this because it raises uncomfortable questions in consumers' minds about the ethical permissibility/goodness of their other items.

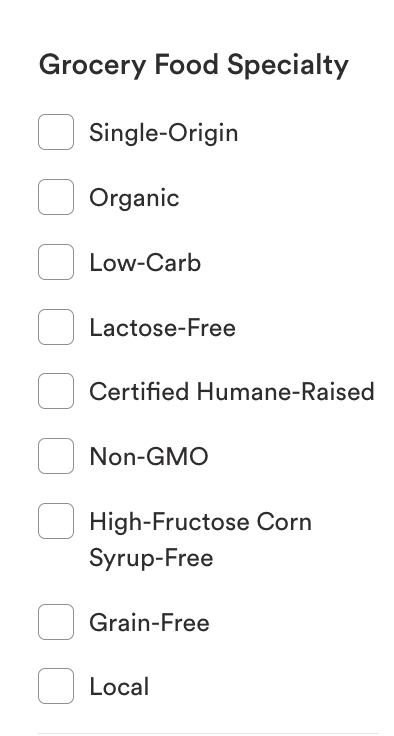

I wonder if this would be more palatable to them if "vegan" were just one of several filters, along with (e.g.) keto, paleo, halal, and kosher. Right now the Whole Foods website has the following filters available -- would it be such a stretch to have some identitarian ones?

I really don't know.

I looked into this a bit when I was thinking about how hard it is to get high-welfare animal products at grocery stores (https://regressiontothemeat.substack.com/p/pasturism). I made contact with a sustainability person at a prominent multinational grocery store and asked if they'd like to meet up during Earth Week to discuss such a filter. They did not write back. I relayed a version of this conversation to someone involved at a high level in grocery store pressure campaigns, and that second person said, basically, that anything implying some of their food is better/more ethical than other options is going to be a nonstarter.

On the other hand, you've had some initial successes and it seems some grocery stores are already doing this! So I really hope it's plausible. If you're interested, I'm happy to flesh out the details of these prior interactions privately.
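The filtering mechanics are the easy part. As noted at the top, the real cost is keeping tens of thousands of SKU tags accurate. Below is a minimal sketch of "vegan" as one tag among several (keto, halal, kosher). It assumes a hypothetical `Product` record with a set of tags, which is not Whole Foods' or any retailer's actual data model:

```python
from dataclasses import dataclass, field


@dataclass
class Product:
    """A hypothetical SKU record; fields are illustrative, not any retailer's schema."""
    sku: str
    name: str
    tags: set = field(default_factory=set)


def filter_products(products, required):
    """Return products that carry every requested tag, e.g. {"vegan", "kosher"}."""
    return [p for p in products if required <= p.tags]


# A toy catalog where "vegan" is just one label among several.
catalog = [
    Product("001", "Oat milk", {"vegan", "kosher"}),
    Product("002", "Cheddar", {"keto", "kosher"}),
    Product("003", "Lentil soup", {"vegan", "halal", "kosher"}),
]

vegan_names = [p.name for p in filter_products(catalog, {"vegan"})]
print(vegan_names)  # ['Oat milk', 'Lentil soup']
```

Framed this way, "vegan" carries no more implied judgment than "kosher". Whether stores would actually see it that way is the open question above.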

Probably because life-saving interventions do not scale this well. It's perfectly plausible that some lives can be saved for $1600 in expectation, but millions of them? No.

Peter Rossi’s Iron Law of Evaluation: the “expected value of any net impact assessment of any large scale social program is zero.” If there were something that did scale this well, it would be a gigantic revolution in development economics. For discussion, see Vivalt (2020), Duflo (2004), and, in a slightly different but theoretically isomorphic context, Stevenson (2023).

On the other hand, Sania (the CEO) is making a weaker claim -- "your ability...is compromised" is not the same as "without that funding, 1.1 million people will die." That's why she's CEO: she knows how to make a nonspecific claim seem urgent and let the listener or reader assume a stronger version.
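One way to see why a low average cost per life doesn't extrapolate to millions: the cheapest opportunities get used up first, so marginal cost rises with scale. The sketch below is a toy model, not anyone's actual cost curve. It assumes a hypothetical linear rise in marginal cost, c0 * (1 + n / k), and every number in it is made up:

```python
import math


def lives_saved_constant_cost(budget, cost_per_life):
    """Lives saved if every dollar buys lives at the same cost."""
    return budget / cost_per_life


def lives_saved_rising_cost(budget, c0, k):
    """Lives saved when the marginal cost of the n-th life is c0 * (1 + n / k).

    Total spend for n lives is c0 * (n + n**2 / (2 * k)); setting that equal to
    the budget and solving the quadratic for n gives the expression below.
    """
    return k * (math.sqrt(1 + 2 * budget / (c0 * k)) - 1)


# Made-up inputs: a $1.6B budget, $1,600 for the cheapest lives, and a
# hypothetical k = 100,000 controlling how fast opportunities get more expensive.
budget, c0, k = 1.6e9, 1600, 1e5
print(lives_saved_constant_cost(budget, c0))          # 1000000.0
print(round(lives_saved_rising_cost(budget, c0, k)))  # 358258
```

Under these invented parameters, the same budget buys roughly a third as many lives. The point is the shape of the curve, not the specific numbers.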

Hi Jeff, I think we're talking about the same lifespan: my friend meant 1 year of continuous use (he works in industrial applications).
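For the unit conversion: 1 year of continuous use is 8,760 hours, so how many calendar years a lamp lasts depends on its duty cycle. The quick sketch below assumes a hypothetical 8-hour daily schedule (e.g. an office). It ignores the gradual decline in output my friend mentions.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours of continuous use


def years_of_service(rated_hours, hours_per_day):
    """Calendar years a lamp lasts at a given daily duty cycle (ignores output decline)."""
    return rated_hours / (hours_per_day * 365)


print(years_of_service(HOURS_PER_YEAR, 24))  # 1.0
print(years_of_service(HOURS_PER_YEAR, 8))   # 3.0
```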

Earlier this year, I sent a Works in Progress piece about Far UV to a friend who is a materials science engineer and happens to work in UV applications (he once described his job as "literally watching paint dry," e.g. checking how long it takes for a UV lamp to dry a coat of paint on your car). I asked:

> I'm interested in the comment that there's no market for these things because there's no public health authority to confer official status on them. That doesn't really make sense to me. If you wanted to market your airplane as the most germ-free or whatever, you could just point to clinical trials and not mention the FDA. Does the FDA or whoever really have independent status to bestow that's so powerful?

My friend replied:

> Certain UV disinfection applications are quite popular, but indoor air treatment is one of the more challenging ones. One issue is that these products only can offer "the most germ-free" environment, the amount of protection is not really quantifiable. If a sick person coughed in my direction would an overhead UV lamp stop the germs from reaching me? Probably not...
>
> ...far-UVC technology has some major limitations. Relatively high cost per unit, most average only 1 year lifetime or less with decreasing efficacy over time, have to replace the entire system when it's spent, adds to electricity cost when in use, and you need to install a lot of them for it to be effective because when run at too high power levels they produce large amounts of hazardous ozone...
>
> NIST has been working on establishing some standards for measuring UV output and the effectiveness of these systems for the last few years. Doesn't seem to be helping too much with convincing the general public. Covid was the big chance for these technologies to spread, and they did in some places like airports, just not everywhere

You can/should take this with a grain of salt. On the other hand, I generally believe that some EAs tend to be very credulous towards solutions that promise a high amount of efficacy on core cause areas, and operate with a mental model that the missing ingredient for scale is more often money than culture/mechanics: that everything is like bed nets. By contrast, I believe that if some solution looks very promising to outsiders but remains in limited use -- e.g. kangaroo care for preemies in Nigeria or removing lead from turmeric in Bangladesh -- there is likely a deep reason for that that's only legible to insiders, i.e. people with a lot of local context. Here, I suspect that many of us don't have a strong understanding of the costs, limitations, true efficacy, and logistical difficulties of UV light.

That's my epistemology. But if someone wants to fund and run an RCT testing the effects of, say, a cluster of aerolamps on covid cases at a big public event, I'd be happy to consult on design, measurement strategy, IRB approval, etc. (Gotta put that university affiliation to use on something!)
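One design issue for such a trial: if you randomize whole events, attendees at the same event share exposures, so their outcomes are correlated. The usual correction inflates the sample size by the design effect, 1 + (m - 1) * ICC, where m is the cluster size and ICC is the intraclass correlation. The sketch below uses that correction together with a standard two-proportion power calculation. All inputs are hypothetical: the infection rates, 500 attendees per event and an ICC of 0.01.

```python
import math
from statistics import NormalDist


def n_per_arm_individual(p_control, p_treat, alpha=0.05, power=0.8):
    """Per-arm sample size for comparing two proportions (normal approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treat * (1 - p_treat)
    return (z_alpha + z_beta) ** 2 * variance / (p_control - p_treat) ** 2


def clusters_per_arm(p_control, p_treat, cluster_size, icc, alpha=0.05, power=0.8):
    """Inflate the individual-level n by the design effect 1 + (m - 1) * ICC,
    then convert to a whole number of clusters of size m."""
    design_effect = 1 + (cluster_size - 1) * icc
    n = n_per_arm_individual(p_control, p_treat, alpha, power) * design_effect
    return math.ceil(n / cluster_size)


# Hypothetical inputs: 5% vs 3% infection rates, 500 attendees per event.
print(clusters_per_arm(0.05, 0.03, cluster_size=500, icc=0.0))   # 4
print(clusters_per_arm(0.05, 0.03, cluster_size=500, icc=0.01))  # 19
```

With 500 people per event, even an ICC of 0.01 roughly sextuples the required sample. That's why the number of events matters much more than the number of attendees.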

It's an interesting question.

From the POV of our core contention -- that we don't currently have a validated, reliable intervention to deploy at scale -- whether this is because of absence of evidence (AoE) or evidence of absence (EoA) is hard to say. I don't have an overall answer, and ultimately both roads lead to "unsolved problem."

We can cite good arguments for EoA (these studies are stronger than the norm in the field but show weaker effects, and that relationship should be troubling for advocates) or AoE (we're not talking about very many studies at all), and ultimately I think the line between the two is in the eye of the beholder.
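One rough way to make the AoE/EoA distinction concrete is to name a smallest effect you'd consider meaningful, then check where the confidence interval sits relative to it. This is loosely in the spirit of equivalence testing, not the method of our paper. The threshold and estimates below are hypothetical, in standardized units where larger means a bigger reduction:

```python
from statistics import NormalDist


def classify(estimate, se, smallest_meaningful, level=0.95):
    """Compare a pooled estimate's confidence interval with a smallest meaningful effect.

    Effects are signed so that larger = bigger reduction in consumption.
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)
    lower, upper = estimate - z * se, estimate + z * se
    if lower >= smallest_meaningful:
        return "evidence of a meaningful effect"
    if upper < smallest_meaningful:
        return "EoA: meaningful effects look ruled out"
    return "AoE: interval too wide to tell"


# Hypothetical numbers on a standardized scale, with 0.1 as the threshold.
print(classify(0.02, 0.01, 0.1))  # precise and small -> EoA
print(classify(0.15, 0.20, 0.1))  # imprecise -> AoE
```

The choice of threshold does most of the work here, which is one reason the line between the two is in the eye of the beholder.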

Going approach by approach, my personal answers are

- choice architecture is probably AoE: it might work better than expected, but we just don't learn very much from 2 studies (I am working on something about this separately)

- the animal welfare appeals are more EoA, esp. those from animal advocacy orgs

- social psych approaches: I'm skeptical of them, but there weren't a lot of high-quality papers, so I'm not so sure (see here for a subsequent meta-analysis of dynamic norms approaches).

- I would recommend health appeals for older folks and environmental appeals for Gen Z. There I'd say we have evidence of efficacy, but you should expect effects on the order of a few percentage points.
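To illustrate how little two studies pin down, here is inverse-variance fixed-effect pooling applied to two hypothetical choice-architecture studies (invented standardized mean differences, not data from our paper):

```python
import math


def fixed_effect_pool(estimates, ses, z=1.96):
    """Inverse-variance fixed-effect pooled estimate with a normal-approximation CI."""
    weights = [1 / se**2 for se in ses]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))
    return pooled, (pooled - z * pooled_se, pooled + z * pooled_se)


# Two hypothetical studies (standardized mean differences and their SEs).
pooled, (lower, upper) = fixed_effect_pool([0.30, 0.05], [0.15, 0.12])
print(round(pooled, 3), round(lower, 3), round(upper, 3))  # 0.148 -0.036 0.331
```

The interval runs from slightly negative to moderately large. A random-effects model would usually be more appropriate, and it can only widen this interval. With two studies, it also estimates between-study variance from a single degree of freedom.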

Were I discussing this specifically with a funder, I would say, if you're going to do one of the meta-analyzed approaches -- psych, nudge, environment, health, or animal welfare, or some hybrid thereof -- you should expect small effect sizes unless you have some strong reason to believe that your intervention is meaningfully better than the category average. For instance, animal welfare appeals might not work in general, but maybe watching Dominion is unusually effective. However, as we say in our paper, there are a lot of cool ideas that haven't been tested rigorously yet, and from the point of view of knowledge, I'd like to see those get funded first.
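The advice to "expect small effect sizes unless you have some strong reason" can be read as shrinkage toward a prior centered on the category average. The sketch below is a normal-normal conjugate update. It assumes a normal prior and a single normally distributed study estimate, and all numbers are invented for illustration. It is not an analysis from our paper.

```python
def posterior_effect(prior_mean, prior_sd, study_estimate, study_se):
    """Normal-normal conjugate update: precision-weighted average of prior and study."""
    prior_precision = 1 / prior_sd**2
    data_precision = 1 / study_se**2
    total = prior_precision + data_precision
    mean = (prior_precision * prior_mean + data_precision * study_estimate) / total
    return mean, total ** -0.5


# Hypothetical: category average 0.05 (prior SD 0.05); a new study reports 0.40 (SE 0.15).
mean, sd = posterior_effect(0.05, 0.05, 0.40, 0.15)
print(round(mean, 3), round(sd, 3))  # 0.085 0.047
```

A single noisy study claiming a large effect moves the estimate only a little. A "strong reason to believe" means either a much more precise study or a prior that's wider for that specific intervention.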

Hi David,

> To be honest I'm having trouble pinning down what the central claim of the meta-analysis is.

To paraphrase Diddy's character in Get Him to the Greek, "What are you talking about, the name of the [paper] is called "[Meaningfully reducing consumption of meat and animal products is an unsolved problem]!" (😃) That is our central claim. We're not saying nothing works; we're saying that meaningful reductions either have not been discovered yet or do not have substantial evidence in support.

> However the authors hedge this in places

That's author, singular. I said at the top of my initial response that I speak only for myself.

When pushed, I say I am "approximately vegan" or "mostly vegan," which I usually just shorten to "vegan," and most people don't push. If a vegan gives me a hard time about the particulars, which essentially never happens, I stop talking to them 😃

IMHO we would benefit from a clear label for folks who aren't quite vegan but who only seek out high-welfare animal products; I think pasturism/pasturist is a possible candidate.

Love talking nitty gritty of meta-analysis 😃

- IMHO, the "math hard" parts of meta-analysis are figuring out what questions you want to ask, what are sensible inclusion criteria, and what statistical models are appropriate. Asking how much time this takes is the same as asking, where do ideas come from?

- The "bodybuilding hard" part of meta-analysis is finding literature. The evaluators didn't care for our search strategy, which you could charitably call "bespoke" and uncharitably call "ad hoc and fundamentally unreplicable." But either way, I read about 1000 papers closely enough to see if they qualified for inclusion, and then, partly to make sure I didn't duplicate my own efforts, I recorded notes on every study that looked appropriate but wasn't. I also read, or at least read the bibliographies of, about 160 previous reviews. Maybe you're a faster reader than I am, but ballpark, this was 500+ hours of work.

- Regarding the computational aspects, the git history tells the story, but specifically making everything computationally reproducible, e.g. writing the functions, checking my own work, setting things up to be generalizable -- a week of work in total? I'm not sure.

- The paper went through many internal revisions and changed shape a lot from its initial draft when we pivoted in how we treated red and processed meat. That's hundreds of hours. Peer review was probably another 40-hour workweek.

- As I reread reviewer 2's comments today, it occurred to me that some of their ideas might be interesting test cases for what Claude Code is and is not capable of doing. I'm thinking particularly of trying to formally incorporate my subjective notes about uncertainty (e.g. the many places where I admit that the effect size estimates involved a lot of guesswork) into some kind of...supplementary regression term about how much weight an estimate should get in meta-analysis? Like maybe I'd use Wasserstein-2 distance, as my advisor Don recently proposed? Or Bayesian meta-analysis? This is an important problem, and I don't consider it solved by RoB2 or whatever, which means that fixing it might be, IDK, a whole new paper which takes however long that does? As my co-authors Don and Betsy & co. comment in a separate paper on which I was an RA:

> Too often, research syntheses focus solely on estimating effect sizes, regardless of whether the treatments are realistic, the outcomes are assessed unobtrusively, and the key features of the experiment are presented in a transparent manner. Here we focus on what we term landmark studies, which are studies that are exceptionally well-designed and executed (regardless of what they discover). These studies provide a glimpse of what a meta-analysis would reveal if we could weight studies by quality as well as quantity.

[The point being: meta-analysis is not well suited to weighting by quality.]

- It's possible that some of the proposed changes would take less time than that. Maybe risk of bias assessment could be knocked out in a week? But it's been about a year since the relevant studies were in my working memory, which means I'd probably have to re-read them all, and across our main and supplementary datasets, that's dozens of papers. How long does it take you to read dozens of papers? I'd say I can read about 3-4 papers a day closely if I'm really, really cranking. So in all likelihood, yes, weeks of work, and that's weeks where I wouldn't be working on a project about building empathy for chickens. Which admittedly I'm procrastinating on by writing this 500+ word comment 😃
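Perhaps the crudest version of "how much weight an estimate should get" is to multiply each inverse-variance weight by a subjective quality score. The sketch below takes that naive approach as a baseline. It is not the Wasserstein-2 idea or a Bayesian meta-analysis. The quality scores are hypothetical, and the standard error treats them as fixed, ignoring any uncertainty in the scores themselves.

```python
import math


def quality_weighted_pool(estimates, ses, quality):
    """Inverse-variance pooling with each weight scaled by a 0-1 quality score.

    quality is a hypothetical, subjective score (1 = full weight, 0 = drop the study).
    The SE treats those scores as fixed constants.
    """
    weights = [q / se**2 for q, se in zip(quality, ses)]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    # Variance of a fixed-weight average of independent estimates:
    # sum(w_i^2 * se_i^2) / (sum w_i)^2.
    variance = sum(w**2 * se**2 for w, se in zip(weights, ses)) / total**2
    return pooled, math.sqrt(variance)


estimates = [0.30, 0.05, 0.10]
ses = [0.10, 0.10, 0.10]
print(quality_weighted_pool(estimates, ses, [1.0, 1.0, 1.0])[0])  # plain inverse-variance
print(quality_weighted_pool(estimates, ses, [0.2, 1.0, 1.0])[0])  # down-weights study 1
```

The hard part isn't the arithmetic. It's turning notes like "this effect size involved a lot of guesswork" into defensible numbers, which is where the real methodological work would be.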

It depends on the specifics, but I live in Brooklyn and getting deliveries from Whole Foods means they probably come to my house in an electric truck or e-cargo bike. That's pretty low-emission. (Fun fact: NYC requires most grocery stores to have parking spots.)