This post summarizes a new meta-analysis from the Humane and Sustainable Food Lab. We analyze the most rigorous randomized controlled trials (RCTs) that aim to reduce consumption of meat and animal products (MAP). We conclude that no theoretical approach, delivery mechanism, or persuasive message should be considered a well-validated means of reducing MAP consumption. By contrast, reducing consumption of red and processed meat (RPM) appears to be an easier target. However, if RPM reductions lead to more consumption of chicken and fish, this is likely bad for animal welfare and does not reduce zoonotic outbreak risk or land and water pollution. We also find that many promising approaches await rigorous evaluation.

This post updates a post from a year ago. We first summarize the current paper, and then describe how the project and its findings have evolved. (August 2025 update: the paper has been published in Appetite.)

What is a rigorous RCT?

We define “rigorous RCT” as any study that:

- Randomly assigns participants to a treatment and control group

- Measures consumption directly -- rather than (or in addition to) attitudes, intentions, or hypothetical choices -- at least a single day after treatment begins

- Has at least 25 subjects in both treatment and control, or, in the case of cluster-assigned studies (e.g. university classes that all attend a lecture together or not), at least 10 clusters in total.

Additionally, studies needed to intend to reduce MAP consumption, rather than (e.g.) encouraging people to switch from beef to chicken, and be publicly available by December 2023.

We found 35 papers, comprising 41 studies and 112 interventions, that met these criteria. 18 of 35 papers have been published since 2020.

The main theoretical approaches:

Broadly speaking, studies used Persuasion, Choice Architecture, Psychology, and a combination of Persuasion and Psychology to try to change eating behavior.

Persuasion studies typically provide arguments about animal welfare, health, and environmental welfare reasons to reduce MAP consumption. For instance, Jalil et al. (2023) switched out a typical introductory economics lecture for one on the health and environmental reasons to cut back on MAP consumption, and then tracked what students ate at their college’s dining halls. Animal welfare appeals often used materials from advocacy organizations and were often delivered through videos and pamphlets. Most studies in our dataset are persuasion studies.

Choice architecture studies change aspects of the contexts in which food is selected and consumed to make non-MAP options more appealing or prominent. For example, Andersson and Nelander (2021) randomly alter whether the vegetarian option appears at the top of a university cafeteria’s billboard menu or not. Choice architecture approaches are very common in food research, but only two papers met our inclusion criteria; hypothetical outcomes and immediate measurements were common among the rest.

Psychology studies manipulate the interpersonal, cognitive, or affective factors associated with eating MAP. The most common psychological intervention centers on social norms, seeking to alter the perceived popularity of non-MAP dishes, e.g. two studies by Gregg Sparkman and colleagues. In another study, a university cafeteria put up signs stating that “[i]n a taste test we did at the [name of cafe], 95% of people said that the veggie burger tasted good or very good!” One study told participants that people who eat meat are more likely to endorse social hierarchy and embrace human dominance over nature. Other psychological interventions include response inhibition training, where subjects are trained to avoid responding impulsively to stimuli such as unhealthy food, and implementation intentions, where participants list potential challenges and solutions to changing their own behavior.

Finally, some studies combine persuasive and psychological messages, e.g. putting up a sign about how veggie burgers are popular along with a message about their environmental benefits, or pairing reasons to cut back on MAP consumption with an opportunity to pledge to do so.

Results: consistently small effects

We convert all reported results to a measure of standardized mean differences (SMD) and meta-analyze them using the robumeta package in R. An SMD of 1.0 indicates an average change equal to one standard deviation in the outcome.
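To make the SMD idea concrete, here is a minimal Python sketch (not the R code from our repository) that computes a standardized mean difference from two groups of raw outcomes. It assumes a pooled-standard-deviation standardizer and a sign convention where positive values mean the treatment group consumed less; the paper's exact standardizer may differ, and the data below are hypothetical.

```python
import math
from statistics import mean, stdev


def standardized_mean_difference(control, treatment):
    """SMD with a pooled SD (an assumption; other standardizers exist).

    Signed so that a positive value means the treatment group
    consumed less than the control group.
    """
    n_c, n_t = len(control), len(treatment)
    pooled_var = (
        (n_c - 1) * stdev(control) ** 2 + (n_t - 1) * stdev(treatment) ** 2
    ) / (n_c + n_t - 2)
    return (mean(control) - mean(treatment)) / math.sqrt(pooled_var)


# Hypothetical data: servings of meat per week for each participant.
control = [7, 9, 6, 8, 10, 7, 9, 8]
treatment = [7, 8, 6, 8, 9, 7, 9, 7]

smd = standardized_mean_difference(control, treatment)
print(f"SMD = {smd:.2f}")
```

Because the difference is divided by the spread of the outcome, the same raw change can yield very different SMDs in settings with different variability.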

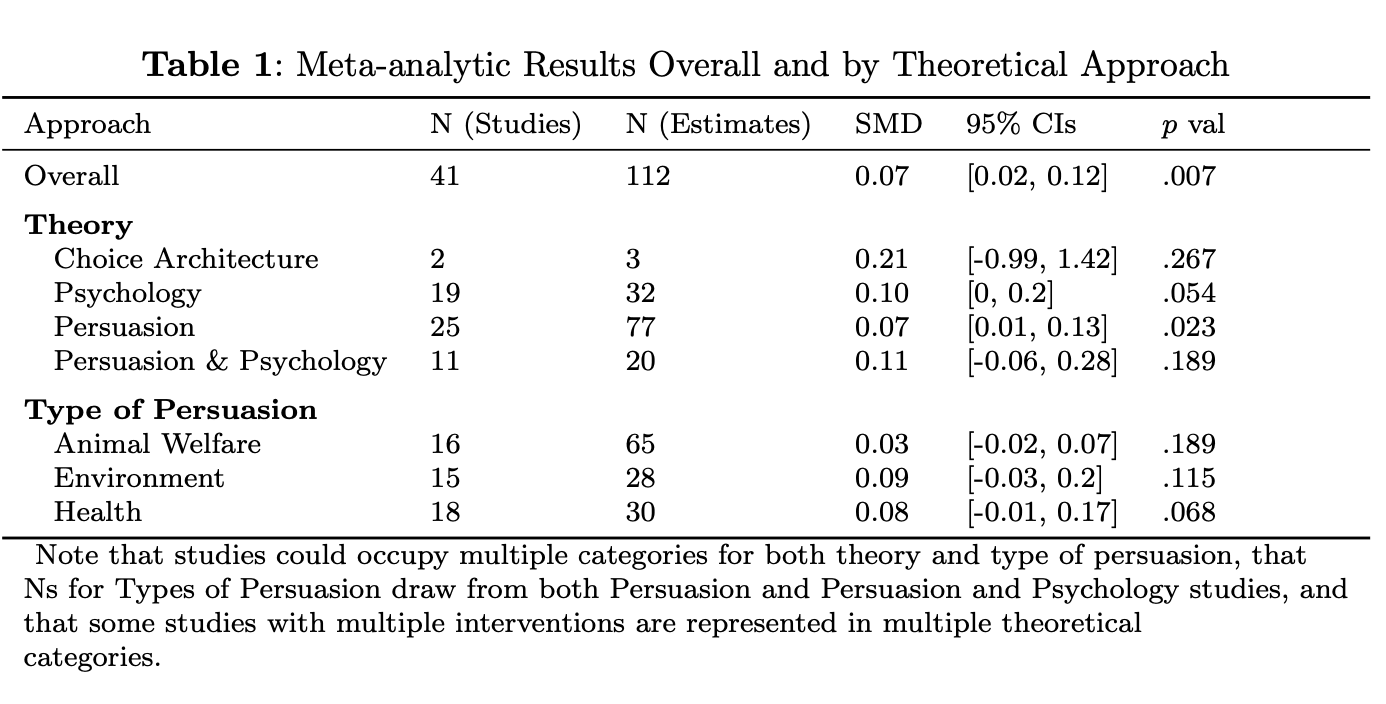

Our overall pooled estimate is SMD = 0.07 (95% CI: [0.02, 0.12]). Table 1 displays effect sizes separated by theoretical approach and by type of persuasion.
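For readers who want to relate the confidence interval to a rough significance level, the sketch below backs out an approximate standard error from the reported bounds. It assumes a symmetric interval and a normal approximation with a 1.96 critical value; robumeta applies small-sample corrections, so this only approximates the paper's inference, and the rounding of the reported bounds adds further imprecision.

```python
import math


def se_from_ci(lower, upper, z_crit=1.96):
    """Approximate standard error from a symmetric 95% CI (normal approximation)."""
    return (upper - lower) / (2 * z_crit)


def two_sided_p_normal(estimate, se):
    """Two-sided p-value for estimate / se under a standard normal."""
    z = estimate / se
    return math.erfc(abs(z) / math.sqrt(2))


# Values reported in the post: SMD = 0.07, 95% CI [0.02, 0.12].
se = se_from_ci(0.02, 0.12)
p = two_sided_p_normal(0.07, se)
print(f"approx. SE = {se:.4f}, approx. two-sided p = {p:.4f}")
```

A small p-value here says the pooled effect is unlikely to be exactly zero; it says nothing about whether an effect of this size is practically meaningful.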

Most of these effect sizes and upper confidence bounds are quite small. The largest effect size, which is associated with choice architecture, comes from too few studies to say anything meaningful about the approach in general.

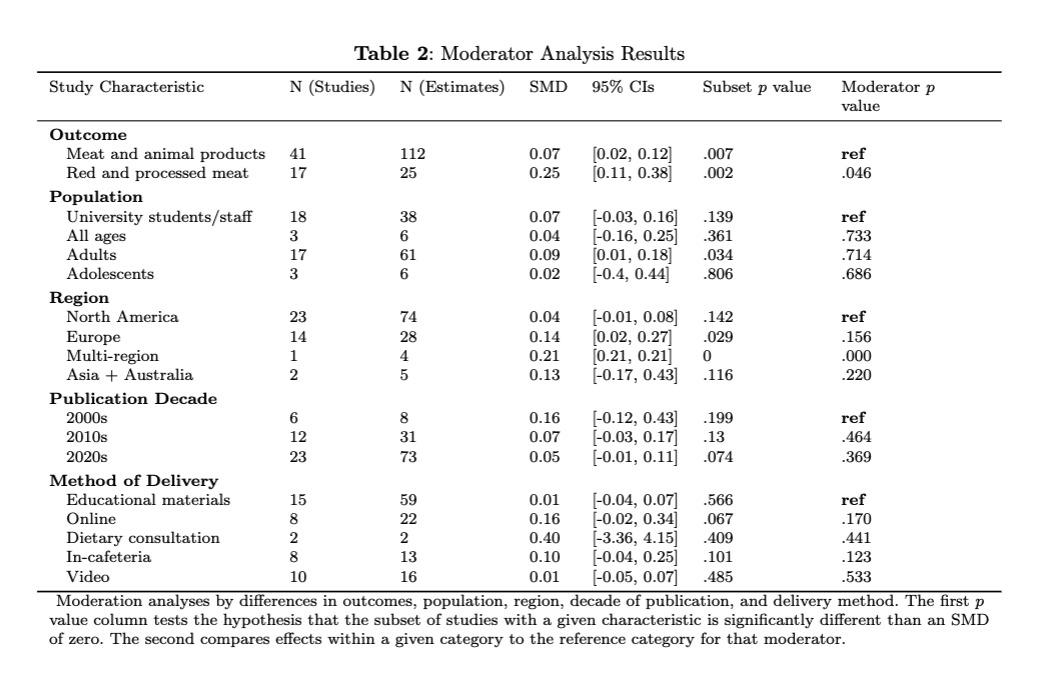

Table 2 presents results associated with different study characteristics.[1]

Probably the most striking finding here is the comparatively large effect size associated with studies aimed at reducing RPM consumption (SMD = 0.25, 95% CI: [0.11, 0.38]). We speculate that reducing RPM consumption is generally perceived as easier and more socially normative than cutting back on all categories of MAP. (It’s not hard to find experts in newspapers saying things like: “Who needs steak when there’s... fried chicken?”)

Likewise, when we integrate a supplementary dataset of 22 marginal studies, comprising 35 point estimates, that almost met our inclusion criteria, we find a considerably larger pooled effect: SMD = 0.2 (95% CI: [0.09, 0.31]). Unfortunately, this suggests that increased rigor is associated with smaller effect sizes in this literature, and that prior literature reviews which pooled a wider variety of designs and measurement strategies may have produced inflated estimates.

Where do we go from here?

In our experience, EAs generally accept that behavioral change, particularly around something as ingrained as meat, is a hard problem. But if you read the food literature in general, you get the impression that consumers are easily influenced by local cues and that their behaviors are highly malleable. In our view, a lot of that discrepancy comes down to measurement challenges. For example, studies that set the default meal choice to be vegetarian at university events sometimes find large effects. But what happens at the next meal, or the day after? Do people eat more meat to compensate? For the most part, we don’t know, although it is definitely possible to measure delayed outcomes.

Likewise, we encourage researchers to distinguish reducing all MAP consumption from reducing just some particular category of it. RPM is of special concern for its environmental and health consequences, but if you care about animal welfare, a society-wide switch from beef to chicken is probably a disaster.

On a brighter note, we reviewed a lot of clever, innovative designs that did not meet our inclusion criteria, and we’d love to see these ideas implemented with more rigorous evaluation:

- Extended contact with farm animals

- Manipulations to the price of meat

- Activating moral and/or physical disgust

- Watching popular media such as the Simpsons episode “Lisa the Vegetarian” or the movie Babe

- Many categories of choice architecture intervention.

For more, see the paper, our supplement, and our code and data repository.

How has this project changed over time?

Our previous post, describing an earlier stage of this project, reported that environmental and health appeals were the most consistently effective at reducing MAP consumption. However, at that time, we were grouping RPM and MAP studies together. Treating them as separate estimands changed our estimates a lot (and pretty much caused the paper to fall into place conceptually).

Second, we’ve analyzed a lot more literature. In the data section of our code and data repository, you’ll see CSVs that record all of the studies we included in our main analysis; our RPM analysis; a robustness check of studies that didn’t quite make it; the 150+ prior reviews we consulted; and the 900+ studies we excluded.

Third, Maya Mathur joined the project, and Seth joined Maya’s lab (more on that journey here). Our statistical analyses, and everything else, improved accordingly.

Happy to answer any questions!

Acknowledgments. Thanks to Adin Richards, Alex Berke, Alix Winter, Anson Berns, Dan Waldinger, Hari Dandapani, Martin Gould, Martin Rowe, Matt Lerner, and Rye Geselowitz for comments on an early draft. Thanks to Jacob Peacock, Andrew Jalil, Gregg Sparkman, Joshua Tasoff, Lucius Caviola, Natalia Lawrence, and Emma Garnett for help with assembling the database and providing guidance on their studies. Thanks to Sofia Vera Verduzco for research assistance. We gratefully acknowledge funding from the NIH (grant R01LM013866), Open Philanthropy, and the Food Systems Research Fund (Grant FSR 2023-11-07).

[1] The moderator value estimates should be interpreted non-causally because study characteristics were not randomly assigned.

Thanks so much for this very helpful post!

I'm a bit confused about your framing of the takeaway. You state that "reducing meat consumption is an unsolved problem" and that "we conclude that no theoretical approach, delivery mechanism, or persuasive message should be considered a well-validated means of reducing meat and animal product consumption." However, the overall pooled effects across the 41 studies show statistical significance w/ a p-value of <1%. Yes, the effect size is small (0.07 SMD) but shouldn't we conclude from the significance that these interventions do indeed work?

Having a small effect or even a statistically insignificant one isn't something EAs necessarily care about (e.g. most longtermism interventions don't have much of an evidence base). It's whether we can have an expected positive effect that's sufficiently cheap to achieve. In Ariel's comment, you point to a study that concludes its interventions are highly cost-effective at ~$14/ton of CO2eq averted. That's incredible given many offsets cost ~$100/ton or more. So it doesn't matter if the effect is 'small', only that it's cost-effective.

Can you help EA donors take the necessary next step? It won't be straightforward and will require additional cost and impact assumptions, but it'll be super useful if you can estimate the expected cost-effectiveness of different diet-change interventions (in terms of suffering alleviated).

Finally, in addition to separating out red meat from all animal product interventions, I suspect it'll be just as useful to separate out vegetarian from vegan interventions. It should be much more difficult to achieve persistent effects when you're asking for a lot more sacrifice. Perhaps we can get additional insights by making this distinction?

Hi Wayne,

Great questions, I'll try to give them the thoughtful treatment they deserve.

This is in the supplement rather than the paper, but one of our depressing results is that rigorous evaluations published by nonprofits, such as The Humane League, Mercy For Animals, and Faunalytics, produce a small backlash on average (see table below). But it's also my impression that a lot of these groups have changed gears a lot, and are now focusing less on (e.g.) leafletting and direct persuasion efforts and more on corporate campaigns, undercover investigations, and policy work. I don't know if they have moved this direction specifically because a lot of their prior work was showing null/backlash results, but in general I think this shift is a good idea given the current research landscape.

4. Pursuant to that, economists working on this sometimes talk about the consumer-citizen gap, where people will support policies that ban practices whose products they'll happily consume. (People are weird!) For my money, if I were a significant EA donor in this space, I might focus here: message testing ballot initiatives, preparing for lengthy legal battles, etc. But as always with these things, the details matter. If you ban factory farms in California and lead Californians to source more of their meat from (e.g.) Brazil, and therefore cause more of the rainforest to be clearcut -- well, that's not obviously good either.

5. Almost all interventions in our database targeted meat rather than other animal products (one looked at fish sauce and a couple also measured consumption of eggs and dairy). Also a lot of studies just say the choice was between a meat dish and a vegetarian dish, and whether that vegetarian dish contained eggs or milk is sometimes omitted. But in general, I'd think of these as "less meat" interventions.

Sorry I can't offer anything more definitive here about what works and where people should donate. An economist I like says his dad's first rule of social science research was: "Sometimes it’s this way, and sometimes it’s that way," and I suppose I hew to that 😃

I work at a university in China, and with the help of some vegetarian students, I’ve been trying to encourage others to eat less meat. However, I’ve found it challenging to engage students who aren’t already interested in vegetarianism.

For instance, last semester, I organized a Meatless Monday Lunch every week. The same group of people I already knew would attend, but it didn’t attract new participants. I even offered free lunches to students to make it more appealing, but that didn’t seem to help.

I also hosted a documentary screening about the health effects of eating meat. Attendance was very low—fewer than 10 people showed up—and most of them seemed distracted, spending their time on their phones.

On the bright side, our canteen has improved its plant-based options with our help. I think this may encourage more people to try them. Unfortunately, I don’t have access to the canteen’s data, so I’m not sure if this idea actually worked. Personally it did make eating at the canteen a bit more pleasant.

Thank you for sharing your work and taking an active stance to promote vegetarianism. In my personal experience of becoming vegetarian, two things stand out that may be relevant:

My point is that these small and seemingly self directed actions (I never actively tried to convince others) can make a difference. Striving for wider impact is important, but don't undervalue your individual efforts!

Definitely! When I went vegan, I prompted someone I know to look up how dairy cows are treated (not well), and they changed their diet quite a bit in light of that. So I have seen downstream effects personally. Caveat that I am annoying and prone to evangelize.

And if I were going to promote one definitely-not-scalable intervention to one very-hard-to-reach population, I would take a bunch of die-hard meat eaters to Han Dynasty on the Upper West Side of Manhattan and order 1) DanDan noodles without pork 2) pea leaves with garlic 3) cumin tofu 4) kung pao tofu and 5) eggplant in garlic sauce for the table, and then ask "hello is this not delicious??" every 30 seconds 😃

This is a bit of a late reply. I have tried to take some of my family members and friends to vegetarian restaurants. Sometimes they are open to trying it. Sometimes they outright refuse to go because "it is a waste of money" even if I promise to pay. I guess the lesson is that there is no method that works universally. We just have to try different methods on different people.

That sounds very interesting!

Making things more pleasant for vegetarians and vegans is a good thing to do, even if it does not change other people's behavior too much.

In the long-run, we want to make vegetarianism seem just as "nice, natural, and normal" (https://www.sciencedirect.com/science/article/abs/pii/S0195666315001518) as eating meat.

I think things like a Meatless Monday Lunch are very helpful for that.

The Unjournal commissioned two expert evaluations of this paper -- see here (and links within) for all content.

A brief summary from our abstract:

I aim to discuss this further and put it up as a linkpost soon (once we add the evaluation managers' discussion).

(And the first author has indicated that they intend to provide a response soon. We will incorporate this, of course.)

We posted Seth's response here

Thanks for this research! Do you know whether any BOTECs have been done where an intervention can be said to create X vegan-years per dollar? I've been considering writing an essay pointing meat eaters to cost-effective charitable offsets for meat consumption. So far, I haven't found any rigorous estimates online.

(I think farmed animal welfare interventions are likely even more cost-effective and have a higher probability of being net positive. But it seems really difficult to know how to trade off the moral value of chickens taken out of cages / shrimp stunned versus averting some number of years of meat consumption.)

👋 Our pleasure!

To the best of my recollection, the only paper in our dataset that provides a cost-benefit estimation is Jalil et al. (2023)

There's also a red/processed meat study --- Emmons et al. (2005) --- that does some cost-effectiveness analyses, but it's almost 20 years old and its reporting is really sparse: changes to the eating environment "were not reported in detail, precluding more detailed analyses of this intervention." So I'd stick with Jalil et al. to get a sense of ballpark estimates.

Hi Ariel,

Relatedly:

I'm curating this post. It's concise and uses a straightforward methodology, and I think the takeaway finding is an important idea to spread awareness of (that people are quite resistant to stopping eating meat).

Hi Benny, Maya, and Seth,

I would be curious to know your thoughts on my post More animal farming increases animal welfare if soil animals have negative lives?. You are welcome to comment on the post.

Here is the reply from Seth.

I'll add this to the papers The Unjournal is considering evaluating. It seems potentially high-value and influential. Please let us know if there is a more recent version than the one linked here.

Thank you David! We will post any updates to https://doi.org/10.31219/osf.io/q6xyr

The paper is currently under submission at a journal and we likely won’t modify it until we get some feedback.

@Seth Ariel Green - thanks a lot for the helpful overview of this research! Do you know if any research has started by selecting the group of people who are reducing / eliminating meat from their diets and then analysing what it is that might have caused them to change behaviour?

E.g., I could imagine a study that selects Walmart customers based on a decline in their meat purchases in their customer loyalty account, and then tests some hypothesis of change.

Curious if this is a viable path to get insights (I'm not a social scientist), or perhaps if you know of something similar :)

Hi Ruben, I am not an expert on that strand of research, but here are a few papers that may be of interest (lead author/year/title):

Thanks for sharing! Great work.

Agreed:

I only took a brief look at the post so perhaps you're covering some of that in the text but, quoting from the end of George Stiffman's article America Doesn't Know Tofu in Asterisk:

Do you have a sense of the acceptability rates (i.e. what proportions of the treatment population moderately decreased their meat consumption)? Additionally, how did you account for selection effects (i.e. if a study includes vegetarians, those participants presumably wouldn’t see behaviour change)?

My mental model right now is that some small proportion of Western populations are amenable to meat reductions, with a sharp fall-off after this. Using these techniques on less aware populations might work, but we could assume that most high-income Western populations have already been exposed to these techniques and made up their minds. Averaged over a study, seeing a handful of participants change their minds in moderate ways would show a small effect size, or none at all, depending on the recruited population.

But I know very little about this area, so I assume the above is wrong. I just wanted to know in what ways, and what’s borne out by the data you have.

👋 Great questions!

So I'd say we still have a lot of open questions...

Thank you so much for this research. Is there a more intuitive way to interpret SMD values? For example, how many standard deviations is an average vegetarian away from the average person in the general population?

Thank you for your kind words!

Putting SMDs into sensible terms is a continual struggle. I don't think it'll be easy to put vegetarians and meat eaters on a common scale, because if vegetarians are all clustered around zero meat consumption, then the distance between vegetarians and meat eaters just tells you how much meat the meat eater group eats, and that changes a lot between populations.

Also, different disciplines have different ideas about what a 'big' effect size is. Andrew Gelman writes something I like about this:

But by convention, an SMD of 0.5 is typically just considered a 'medium' effect. I tend to agree with Gelman that changing people's behavior by half a standard deviation on average is huge.

A different approach: here are a few studies, their main findings in normal terms, the SMD that translates to, and whether subjectively that's considered big or small

Here, the absolute change in the second study is a lot smaller than the absolute change in the first but has a bigger SMD because there's less variation in the dependent variable in that setting.
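A small Python sketch of that point, using hypothetical numbers rather than values from any study in the dataset: the SMD is the absolute change divided by the outcome's standard deviation, so a smaller raw change can produce a larger SMD when the outcome varies less.

```python
def smd(absolute_change, outcome_sd):
    """Standardized mean difference: raw change divided by the outcome SD."""
    return absolute_change / outcome_sd


# Hypothetical study A: large raw change, but a highly variable outcome.
study_a = smd(absolute_change=2.0, outcome_sd=20.0)

# Hypothetical study B: small raw change, but a tightly clustered outcome.
study_b = smd(absolute_change=0.5, outcome_sd=2.0)

print(f"Study A SMD = {study_a:.2f}, Study B SMD = {study_b:.2f}")
```

The units and magnitudes are placeholders; only the ratio logic is the point.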

So anyway this is another hard problem. But in general, nothing here is equating to the kind of radical transformation that animal advocates might hope for.

I believe the thing that people would be willing to change their behaviour most for is feeling in-group. Eg, when people know that they are expected to do X, and people around them will know if they do not. But that is very hard to implement.

Agreed that it's hard to implement: much easier to say "vegetarian food is popular at this cafe!" than to convince people that they are expected to eat vegetarian.

See here for a review of the 'dynamic norms' part of this literature (studies that tell people that vegetarianism is growing in popularity over time): https://osf.io/preprints/psyarxiv/qfn6y

Have you looked into the correlation between effect size and sample size?

No meaningful relationship! (see code below.) However, big caveat here that we had to guess on some of the samples because many studies do not report how many subjects or meals were treated (e.g. they report how many restaurants or days were assigned to treatment and control but didn't count how many people participated)

Thanks for the feedback on all my questions, Seth!

I have just published a cost-effectiveness analysis of Veganuary and School Plates.

Do you happen to have an overall pooled estimate in percentage points?

Hi Vasco, I'm afraid not, sorry. The diversity of outcome measures makes this all but impossible, e.g. one study measures "servings of meat per week", others measure it by the gram, others count how many meals are served in a given time period, etc.

Do you know about decent estimates for the standard deviation of meat consumption in kg over a given period (like the median delay of 2 weeks among the studies you reviewed) in a given country? One could multiply it by the meat consumption per capita to get the standard deviation in kg, and then multiply this by your effect size to get a rough estimate for the reduction in meat consumption in kg.
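The back-of-the-envelope conversion described in this comment can be sketched in Python as follows. Every input other than the pooled SMD of 0.07 is a hypothetical placeholder, and the calculation assumes the relative standard deviation (coefficient of variation) of the target population matches that of the study samples, which may not hold.

```python
def estimated_reduction_kg(smd, mean_kg, coefficient_of_variation):
    """Convert an SMD into kg, given mean consumption and its relative SD."""
    sd_kg = coefficient_of_variation * mean_kg  # standard deviation in kg
    return smd * sd_kg


# Hypothetical inputs: 3.0 kg eaten per person over two weeks,
# with a standard deviation equal to half of the mean.
mean_kg = 3.0
reduction = estimated_reduction_kg(
    smd=0.07, mean_kg=mean_kg, coefficient_of_variation=0.5
)
share = reduction / mean_kg

print(f"Estimated reduction: {reduction:.3f} kg ({share:.1%} of the mean)")
```

Note that the implied percentage reduction is just the SMD times the coefficient of variation, so the answer is only as good as the guess about how dispersed consumption is.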

I don't know this, sorry, and not every study reports enough location data to begin to estimate this (e.g. studies that recruit an online sample from multiple countries)

Thanks, Seth. I assume it is also difficult to know at which rate the effect size decays across time. A 3 % reduction in consumption over 1 year would be more impressive than a 3 % reduction over 1 week. Do you have a sense of whether the pooled effect size of 0.07 you estimate should be interpreted as referring more to 1 month than 1 week?

This I can say more about!

The median delay, in days, is 14, and the mean is 52 (we have a few studies with long delays; the longest is 3 years (Jalil et al. 2023)).

So I'd say, think "about 2 weeks on average with some lengthy outliers". Also there's basically no relationship between delay and effect size.

to replicate in R (from the root directory of our project):

Thanks, Seth! I thought you may not have that data easily available because I did not find it in your moderators' analysis in Table 2. Do the effect sizes refer to the whole period of the delay (e.g. 14 days), or just to the last part of it (e.g. last day of the 14 days)? It does not seem clear from section 3.2. I would expect a greater decay if the effect sizes refer to the last part of the delay.

Delay indicates the number of days that have elapsed between the beginning of treatment and the final outcome measure. How outcomes are measured varies from study to study, so in some cases it's a 24 hour food recall X number of days after treatment is administered (the last part of it), in others it's a continuous outcome measurement in a cafeteria (the entire period of delay).

Veganuary has "calculated that roughly 25 million people worldwide chose to try vegan this January [by participating in Veganuary in 2024]". Do you have a guess for the reduction in the consumption of animal-based foods linked to those 25 M people caused by all of Veganuary's activities in 2024, including corporate engagement, and effects in years after 2024, as a fraction of what their (counterfactual) consumption of animal-based foods in 2024 would have been without Veganuary? I guess 1.5 % (= (0.03 + 0)/2), corresponding to an effect size decreasing from 3 % to 0 over 1 year. I suppose an initial reduction of 3 % because Seth mentioned the studies you analysed showed “changes on the order of a few percentage points”, and I guess these concern a short time period.
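The arithmetic behind the 1.5 % guess can be checked with a short Python sketch. It assumes the effect decays linearly from its initial value to zero over the year, which is this comment's assumption rather than anything estimated in the paper; the day-by-day version is included only to show that the midpoint shortcut matches an explicit average.

```python
def average_effect_linear_decay(initial, final=0.0):
    """Mean of an effect that changes linearly from initial to final."""
    return (initial + final) / 2


def average_effect_daily(initial, final=0.0, days=365):
    """Same quantity, averaging the effect at the midpoint of each day."""
    total = 0.0
    for day in range(days):
        elapsed = (day + 0.5) / days  # fraction of the period elapsed
        total += initial + (final - initial) * elapsed
    return total / days


shortcut = average_effect_linear_decay(0.03)
explicit = average_effect_daily(0.03)
print(f"shortcut = {shortcut:.4f}, explicit = {explicit:.4f}")
```

A different decay shape (for example, exponential) would give a different average for the same starting value, so the result is sensitive to that choice.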

I don’t know, sorry. There would be a lot of additional assumptions needed to extrapolate from the RCTs we analyze to this.

Thanks, Seth. I wonder whether you are underestimating your own implicit knowledge. Would you be indifferent between my guess of 1.5 % and alternative guesses of 0.015 % and 150 % (the value can be higher than 100 % because there could be effects after 2024)? Feel free to provide a range for the expected reduction if that helps.

My implicit knowledge on the topic of knowledge production (rather than of Veganuary) is that rosy results like the one you are citing often do not stand up to scrutiny. Maya raised one very salient objection to a gap between the headline interpretation and the data of a past iteration of this work here.

I believe that if I dig into it, I’ll find other, similar issues.

Sorry for such a meta answer…

Executive summary: A meta-analysis of randomized controlled trials finds no well-validated approaches for reducing overall meat and animal product consumption, though reducing specifically red and processed meat consumption shows more promise.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

Thanks for sharing the details of this research - it is very valuable towards arriving at an accurate assessment of various interventions.

One question with regard to the methodology of these RCTs is when and for how long did they record the consumption pattern of the participants following the intervention? Specifically, do we have any insights on short-term vs long-term impact of such interventions focused on behavioral change?

Also, I understand that you report the results as SMD. However, it is quite likely that there is a small minority in the treatment group in these interventions that probably contribute to most of the difference that is observed. Do we know anything about the percentage of individuals who are likely to make considerable changes to their dietary patterns based on these interventions?

Hi there,

Delays run the gamut. Jalil et al. (2023) measure three years’ worth of dining choices, Weingarten et al. a few weeks; other studies are measuring what’s eaten at a dining hall during treatment and control but with no individual outcomes; and other studies are structured recall tasks like 3/7/30 days after treatment that ask people to say what they ate in a 24 hour period or over a given week. We did a bit of exploratory work on the relationship between length of delay and outcome size and didn’t find anything interesting.

I’m afraid we don’t know that overall. A few studies did moderator analysis where they found that people who scored high on some scale or personality factor tended to reduce their MAP consumption more, but no moderator stood out to us as a solid predictor here. Some studies found that women seem more amenable to messaging interventions, based on the results of Piester et al. 2020 and a few others, but some studies that exclusively targeted women found very little. I think gendered differences are interesting here but we didn't find anything conclusive.