This research report summarizes a new meta-analysis: Preventing Sexual Violence —A Behavioral Problem Without a Behaviorally-Informed Solution, on which we are coauthors along with Roni Porat, Ana P. Gantman, and Elizabeth Levy Paluck.

The vast majority of papers try to change ideas about sexual violence and are moderately successful at that. However, on the most crucial outcomes — perpetration and victimization — the primary prevention literature has not yet found its footing. We argue that the field should take a more behavioralist approach and focus on the environmental and structural determinants of violence.

The literature

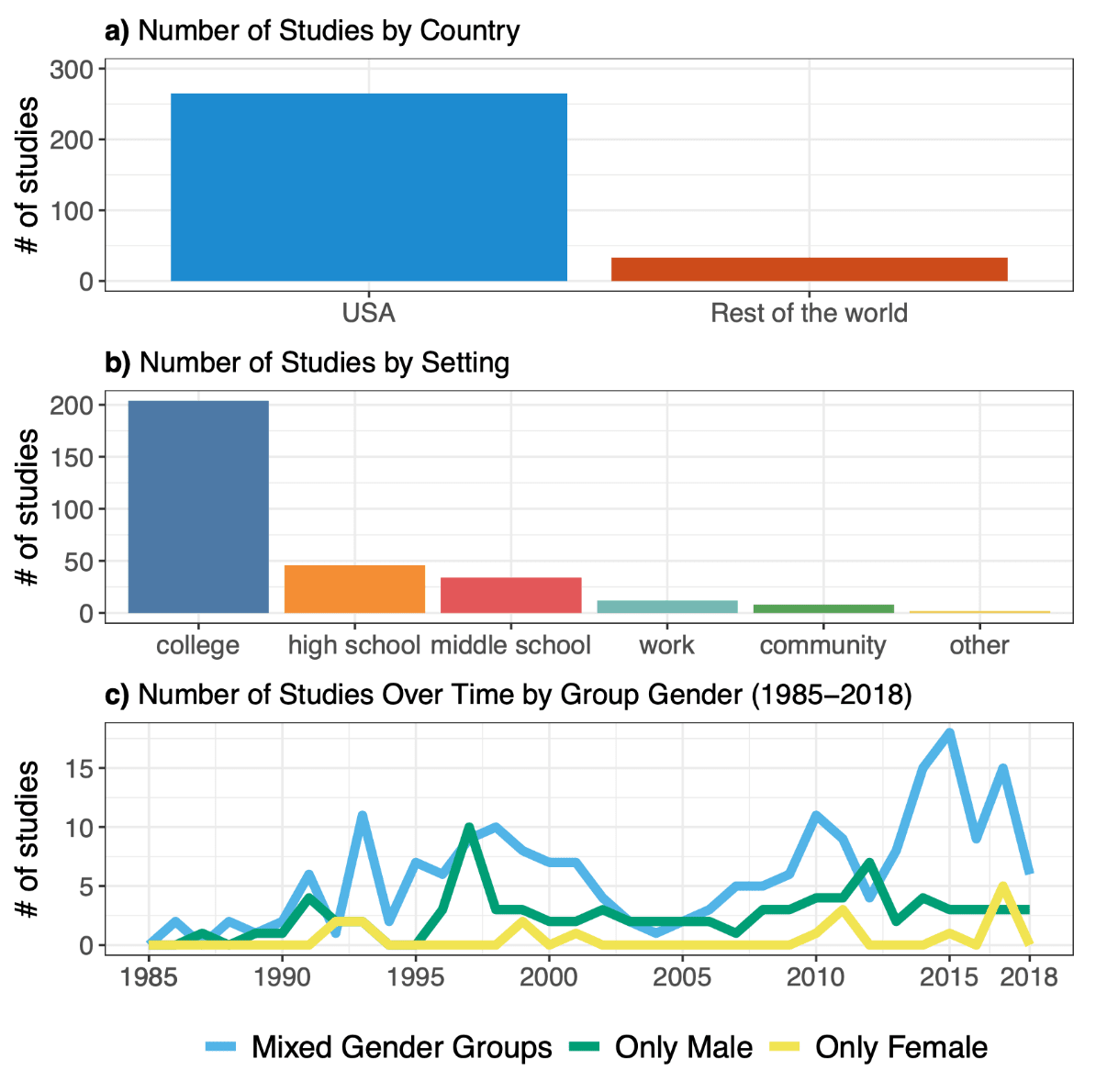

We surveyed papers written between 1986 and 2018 and found 224 manuscripts describing 298 studies, from which we coded 489 distinct point estimates.

We looked specifically at primary prevention efforts, which aim to prevent violence before it happens. Methodologically, studies needed a control group to be included, which ruled out cross-sectional analyses and strictly pre-post designs.

We did not include

- secondary prevention, which deals with the aftermath of violence;

- anything where an impact on sexual violence was a secondary or unanticipated consequence of some other intervention, e.g. giving cash to women unconditionally or opening adult entertainment establishments;

- studies of especially high-risk populations, like sex workers or people who are incarcerated;

- anything that sought to exclusively change the behavior of potential victims, e.g. self-defense classes or "sexually assertive communication training."

These studies mostly take place on United States college campuses in mixed-gender settings. Six papers look at low- and middle-income countries (LMICs).[1]

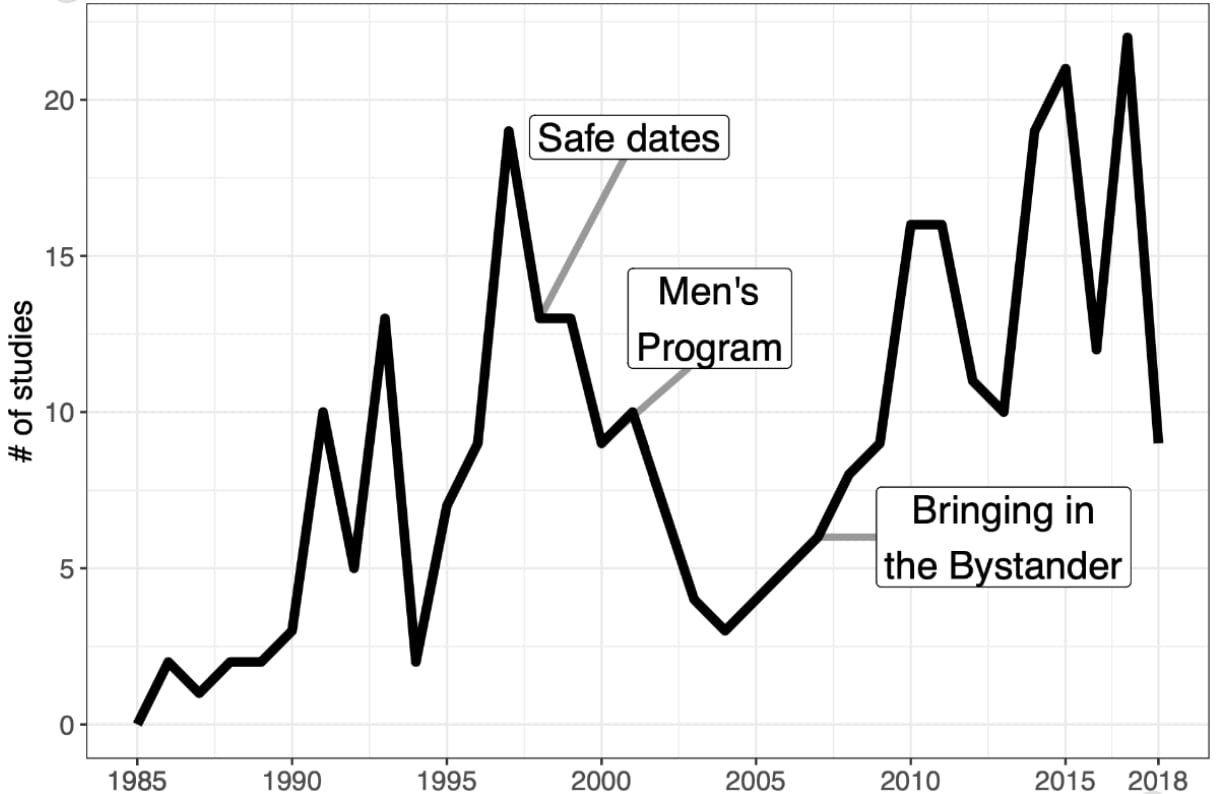

Here is the distribution of studies over time, with three "zeitgeist" programs highlighted.

Three zeitgeist programs

We identified three "pioneering and influential programs" that "represent the prevalent approaches to sexual violence prevention in a particular period of time."

The first is Safe Dates, which "makes use of multiple strategies, including a play performed by students, a poster contest, and a ten-session curriculum." Safe Dates starts from the assumption that perpetration and victimization can be reduced by changing dating abuse norms and gender stereotypes, as well as improving communication skills, e.g. anger management and conflict resolution.

The second is the Men's Program, which aims to prevent sexual violence by men by increasing their empathy and support for victims of sexual violence, and by reducing their resistance to violence prevention programs. In its initial form, participants watched a 15-minute dramatization in which a male police officer is raped by two other men and then deals with the aftermath. The program then told participants that the perpetrators “were heterosexual and known to the victim, and attempted to draw connections between the male police officer’s experience and common sexual violence experiences among women.” Finally, participants learned “strategies for supporting a rape survivor; definitions of consent;” how they might stop a peer from joking about rape or disrespecting women; and which situations carry a high risk of sexual violence.

The third is Bringing in the Bystander, which centers on helping people in danger (e.g. separating a drunk friend from a risky situation) and speaking up against sexist ideas. These interventions shift the goalposts a bit, from decreasing perpetration behavior to increasing bystander behavior. The approach also treats everyone as a potential intervener, rather than casting men as potential perpetrators and women as potential victims.

Outcome measurement

The vast majority of outcomes are self-reported. Perpetration and victimization were measured with the Sexual Experiences Survey, and ideas-based outcomes are typically measured with the Illinois Rape Myth Acceptance Scale.

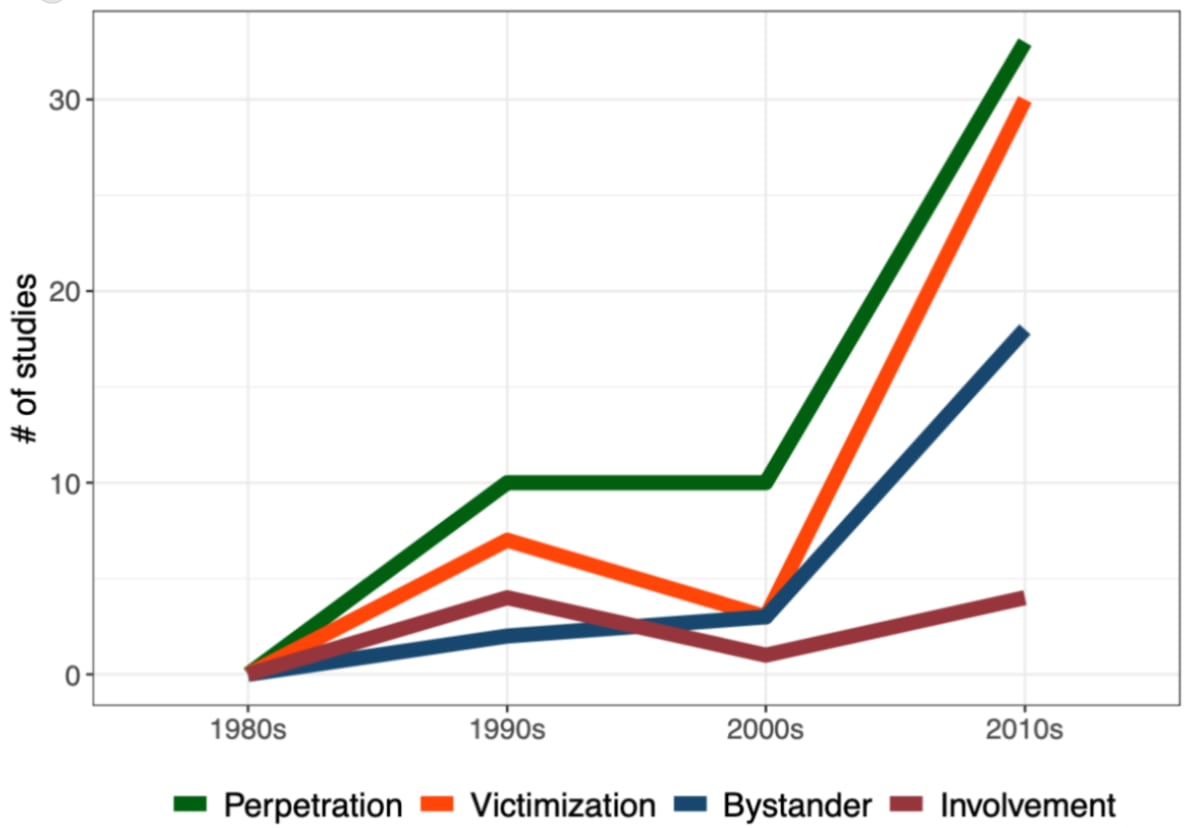

Perpetration, victimization, and bystander behaviors have become more popular outcomes over time.

Ideas-based approaches are dominant

Essentially every intervention we looked at tries to change ideas about sexual violence as a conduit towards reducing violence, and measures ideas as its main outcome.

The implicit model here is sometimes called KAP, or "Knowledge, Attitudes, and Practice." The typical measure of ideas is acceptance of rape myths (Payne, Lonsway, & Fitzgerald 1999), which take four forms: "disbelief of rape claims, the belief that victims are responsible for rape, that rape reports are manipulation, and that rape only happens to certain kinds of women."

One study tries to alter physical environments in addition to ideas. Taylor et al. (2013) deployed "a building-level intervention that included building-based restraining orders (“Respecting Boundaries Agreements”), posters to increase awareness of dating violence and harassment, and increased presence of faculty or school security in 'hot spots' identified by students." They evaluated this alongside "Shifting Boundaries," a curriculum designed to reduce dating violence and sexual harassment among adolescents ages 11-13.

Quantitative results

All results are presented in terms of Glass's ∆, an estimate of standardized mean difference. It takes the difference in average outcomes for the treatment and the control group and divides it by the standard deviation of the dependent variable for the control group. ∆ = 1.0 corresponds to a change of 1 standard deviation in the outcome.
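As a minimal sketch of the definition above (the function name `glass_delta` and the toy scores are ours, not from the paper), Glass's ∆ scales the treatment-control difference in means by the control group's standard deviation only, so a treatment that changes the spread of outcomes does not also change the yardstick. The sketch uses the sample SD (n − 1 denominator); the paper's exact variance convention and its sign coding for outcomes where lower is better are not covered here.

```python
from statistics import mean, stdev


def glass_delta(treatment: list[float], control: list[float]) -> float:
    """Glass's Delta: difference in means scaled by the control group's SD only."""
    sd_control = stdev(control)  # sample SD of the control group (n - 1 denominator)
    return (mean(treatment) - mean(control)) / sd_control


# Toy outcome scores (illustrative only): control SD = 2, means differ by 1.
print(glass_delta(treatment=[2, 4, 6], control=[1, 3, 5]))  # 0.5
```

Here ∆ = 0.5 means the treated group's average sits half a control-group standard deviation above the control average.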

An overall moderate effect

Our random effects meta-analysis reveals an overall effect size of ∆ = 0.28 (SE = 0.025). This effect is statistically significant at p < 0.0001, and corresponds to a small to medium effect size by convention. We did not detect meaningful evidence of publication bias.
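For readers unfamiliar with random-effects pooling, here is a minimal sketch of one common estimator, DerSimonian-Laird. The post does not say which estimator the paper uses, and because the 489 point estimates are nested in 298 studies, a real analysis would also need to handle dependence between estimates from the same study, which this sketch ignores. The idea is to estimate the between-study variance τ² from how much the estimates disagree beyond their sampling error, then weight each estimate by 1/(SE² + τ²). The helper name is hypothetical.

```python
import math


def dersimonian_laird(effects: list[float], ses: list[float]) -> tuple[float, float, float]:
    """Random-effects pooling with the DerSimonian-Laird estimator.

    Returns (pooled effect, standard error of pooled effect, tau^2).
    Treats every estimate as independent.
    """
    if len(effects) < 2:
        raise ValueError("need at least two estimates to estimate tau^2")
    w = [1 / se**2 for se in ses]  # fixed-effect (inverse-variance) weights
    sum_w = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, effects)) / sum_w
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, effects))  # Cochran's Q
    df = len(effects) - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)  # between-study variance, truncated at zero
    w_re = [1 / (se**2 + tau2) for se in ses]  # random-effects weights
    pooled = sum(wi * y for wi, y in zip(w_re, effects)) / sum(w_re)
    se_pooled = math.sqrt(1 / sum(w_re))
    return pooled, se_pooled, tau2


pooled, se, tau2 = dersimonian_laird(effects=[0.0, 1.0], ses=[0.1, 0.1])
print(f"pooled = {pooled:.3f}, SE = {se:.3f}, tau^2 = {tau2:.3f}")
```

In this toy input the two estimates disagree far more than their sampling error allows, so τ² comes out large (0.49) and the pooled SE (0.5) is much wider than the fixed-effect SE of about 0.07.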

Ideas are a lot easier to change than behaviors

The overall effect size for ideas-based outcomes is ∆ = 0.366 (SE = .031), p < 0.0001; for behaviors, it's ∆ = 0.071 (SE = 0.022), p = 0.0015.

Moreover, things look worse when we subdivide behaviors into their four constituent categories: perpetration, victimization, bystander behaviors, and involvement. (Involvement outcomes measure participants’ interest in participating in sexual violence awareness and prevention activities.)

| Outcome | N (studies) | ∆ (SE) |
| --- | --- | --- |
| Perpetration | 53 | 0.033 (0.020) |
| Victimization | 40 | 0.046 (0.029) |
| Bystander | 23 | 0.129* (0.059) |
| Involvement | 9 | 0.236* (0.088) |
| Ideas-based | 264 | 0.366*** (0.031) |

* p < .05; ** p < .01; *** p < .001.
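As a rough check on the significance stars, a large-sample z-test divides each ∆ by its SE and converts the result to a two-sided p-value. This is a sketch using the normal approximation (the helper name is hypothetical); the paper's own tests may apply small-sample corrections.

```python
import math


def two_sided_p(estimate: float, se: float) -> float:
    """Two-sided p-value for H0: effect = 0 under a normal (z) approximation."""
    z = abs(estimate / se)
    return math.erfc(z / math.sqrt(2))  # equals 2 * (1 - Phi(z))


# (Delta, SE) pairs copied from the table above.
rows = {
    "Perpetration": (0.033, 0.020),
    "Victimization": (0.046, 0.029),
    "Bystander": (0.129, 0.059),
    "Involvement": (0.236, 0.088),
    "Ideas-based": (0.366, 0.031),
}
for outcome, (delta, se) in rows.items():
    print(f"{outcome:<13} z = {delta / se:5.2f}  p = {two_sided_p(delta, se):.4f}")
```

Perpetration (z ≈ 1.65) and victimization (z ≈ 1.59) fall short of 1.96, matching the table. For Involvement the normal approximation gives p ≈ 0.007, smaller than the table's single star implies, so the paper's p-values presumably account for the small number of studies (9); treat these figures as a rough check only.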

The effects on perpetration and victimization outcomes are neither clinically nor statistically significant. In a commentary on the paper, Elise Lopez and Mary Koss call this a "damning wake-up call."

Bystander efforts are generally ineffective

Of the 96 bystander studies, 43 measure behaviors. Twenty-two of those measure whether bystander interventions increase bystander behaviors, which they do to a modest extent (∆ = 0.154, SE = 0.056, p = 0.011). Twenty bystander studies in our database measure perpetration or victimization, and they show null effects on both perpetration (∆ = 0.019, SE = 0.019, p = 0.329) and victimization (∆ = -0.009, SE = 0.041, p = 0.835). Because bystander studies make up 96 of the 298 studies in our database, this suggests, unfortunately, that nearly 1 in 3 studies in our database is pursuing a theory of change that does not meaningfully reduce sexual violence.

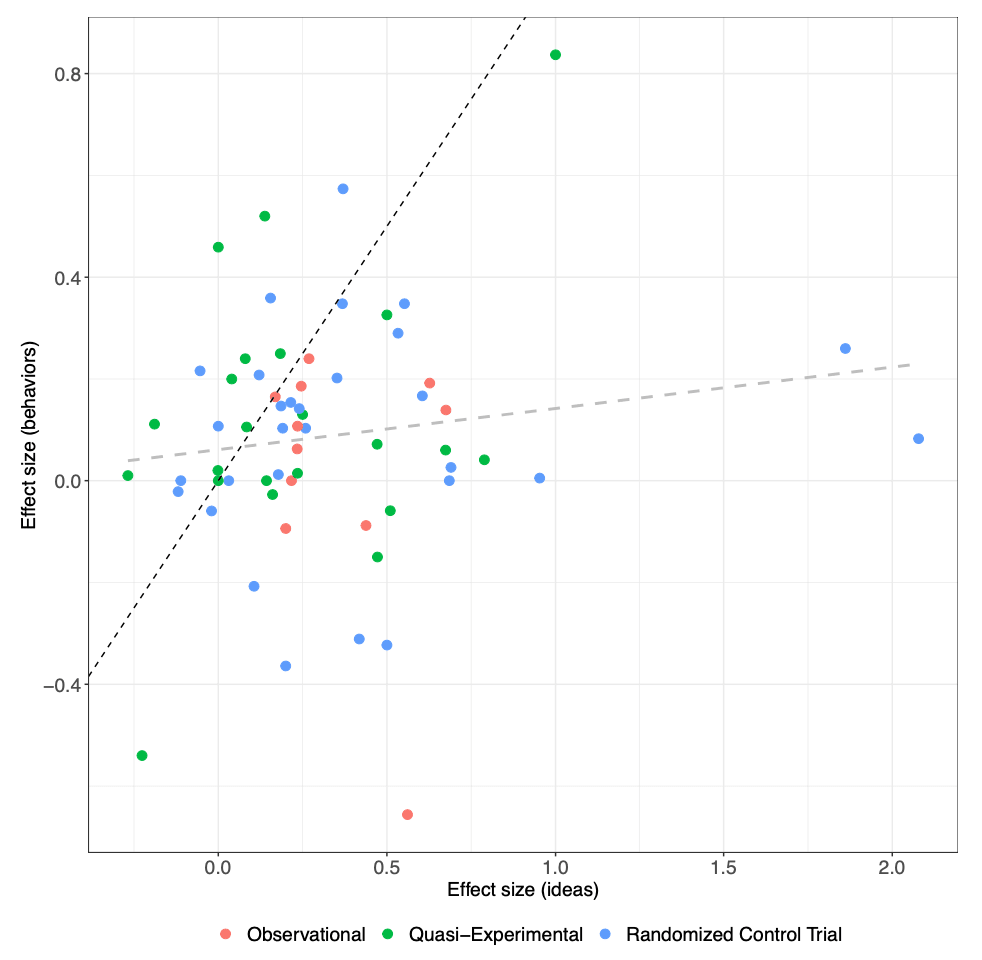

Attitude change does not predict behavior change

Sixty-two studies measure both ideas-based and behavioral outcomes. For those studies, the pooled behavior change effect is ∆ = 0.083 (SE = 0.029), p = 0.006, while the pooled idea change effect is ∆ = 0.290 (SE = 0.039), p < 0.0001. Unfortunately, in these studies, we find a small, statistically non-significant correlation of r(60) = 0.136, p = 0.293 between changes in ideas and changes in behaviors. The relationship remains small and non-significant when looking solely at randomized evaluations (r = 0.138, p = 0.48). Basically, changing ideas does not appear sufficient for changing behaviors.

This figure displays this lack of relationship:

Each point is a study that measures both ideas and behaviors, with the average effect size for ideas about sexual violence on the X axis and the average behavioral effect size on the Y axis. The dotted black line shows what a correlation of 1.0 would look like, and the dotted gray line shows the correlation that we actually observe. Studies are color-coded by design.
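A correlation reported as r(60) has 60 degrees of freedom, i.e. n = 62 studies. One standard way to test it is Fisher's z-transformation: under the null, atanh(r) is approximately normal with standard deviation 1/√(n − 3). The sketch below is our own helper, not the paper's code, and the post does not say which test produced p = 0.293; this approximation recovers a p-value of about 0.29 for r = 0.136, in line with the reported value.

```python
import math


def correlation_p_value(r: float, n: int) -> float:
    """Approximate two-sided p-value for H0: rho = 0 via Fisher's z-transformation."""
    if n <= 3:
        raise ValueError("Fisher's z needs n > 3")
    z = math.atanh(r) * math.sqrt(n - 3)  # atanh(r) ~ Normal(0, 1 / sqrt(n - 3)) under H0
    return math.erfc(abs(z) / math.sqrt(2))


# r(60) = 0.136: 60 degrees of freedom for a correlation means n = 60 + 2 = 62 studies.
print(round(correlation_p_value(0.136, 62), 3))
```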

Where do we go from here?

Environmental and structural theories of change are relatively untested

From the paper:

[W]e do not currently have many approaches that aim to solve the problem of sexual violence with interventions that target behavior change. Instead, we see predominantly efforts to change attitudes, beliefs, norms, and knowledge surrounding sexual violence with the assumption that behavior change will follow.

...Interventions that take a behavioral approach may do so by considering features of the environment. Physical spaces communicate local norms and expectations (Gantman & Paluck, 2022; S. McMahon et al., 2022) as well as make some behaviors easier than others to enact. Those features could be geographical configurations like a lack of common social space (Gantman & Paluck, 2022)...Another idea that is more relevant to the college setting is based on the finding that college students do not have enough private spaces to interact that are not bedrooms (Hirsch & Khan, 2020). Therefore, future work could quantitatively evaluate the impact of introducing more public spaces to reduce sexual violence on campus. Interventions may also consider who is afforded power in a given situation: For example, who owns the physical space, who has more money, who is driving home, and how the physical space communicates who is valued (Gantman & Paluck, 2022). For example, fraternities with poorly kept women’s restrooms were also places where sexual violence was more likely to occur (Boswell & Spade, 1996). We recommend a focus on geographical and other aspects of physical spaces as one untapped area for future theorizing and intervention programming that targets behavior change.

The Taylor et al. (2013) school-based intervention we mentioned earlier is one of the most effective interventions we looked at, and a great candidate for large-scale replication.

A few interventions that aren't primary prevention efforts came out looking comparatively effective

Unconditional cash transfers to women meaningfully reduce sexual violence in rural Kenya.

So does decriminalizing sex work (in Rhode Island and the Netherlands).

Ditto for opening adult entertainment establishments in NYC.

Some recent methodological innovation in measurement

We write:

We suggest researchers attempt to triangulate on rates of perpetration and victimization through multiple avenues of measurement. When testing interventions conducted in a college campus, researchers can collect complaints filed with the University’s Title IX offices, crime data observed by police on campus grounds, and self-reports of perpetration and victimization...

It is also possible to better assess the relationship between changing ideas about sexual violence and changing behavior. For example, Sharma (2022) tests a sexual harassment training aimed at men in India, and uses an innovative mix of women reporting on harassment behaviors of men in their class, trust games, direct questions and hypothetical scenarios to elicit opinions. Lastly, Schuster, Tomaszewska, and Krahé (2022) combine self-reported behavioral data at multiple timepoints with “risky sexual scripts” and open-ended questions to provide a fuller picture of how and when ideas about sexual violence and sexual violence itself vary together.

There are not a lot of cost-benefit analyses in this literature

The one cost-benefit analysis that comes to mind is of the SASA! program in Uganda, which estimated that the program could prevent a single instance of intimate partner violence (IPV) for $486 on average. We do not place much credence in this finding because the study had just 4 clusters in treatment and 4 in control. However, for folks working in this field, this is probably a good baseline estimate.

If a funder asked us about best current opportunities, we would say we need more basic research, i.e. randomized evaluations of the sorts of behavioral interventions we propose.

Further reading and supplementary materials

I (Seth) got a lot out of Sexual Citizens, an anthropological study of sexual violence at Columbia University that also offers some promising solutions. Here is a very nice Jia Tolentino article on the same program.

For more on whether violence against women and girls is an 'EA issue,' see Akhil's post on Violence against women and girls as a cause area, the Women and Girls Fund at The Life You Can Save, and Charity Entrepreneurship's report on violence against women and girls (discussion here).

Code and data are on GitHub and also Code Ocean.

Happy to answer any questions!

[1] Those six are:

- Alexander (2014), Adolescent Dating Violence: Application of a U.S. Primary Prevention Program in St. Lucia

- Baiocchi (2017), A Behavior-Based Intervention That Prevents Sexual Assault: the Results of a Matched-Pairs, Cluster-Randomized Study in Nairobi, Kenya

- Gage (2016), Short-term effects of a violence-prevention curriculum on knowledge of dating violence among high school students in Port-au-Prince, Haiti

- Keller (2015), A 6-Week School Curriculum Improves Boys’ Attitudes and Behaviors Related to Gender-Based Violence in Kenya

- Michaels-Igbokwe (2016), Cost and cost-effectiveness analysis of a community mobilisation intervention to reduce intimate partner violence in Kampala, Uganda

- Rominski (2017), An intervention to reduce sexual violence on university campus in Ghana: a pilot test of Relationship Tidbits at the University of Cape Coast

Thank you for writing this up!

It's helpful that you flag that the large majority of studies are done in the US. I would find it helpful in discussing interventions if the location is flagged more - for example, the cash transfers intervention is in rural Kenya. My impression is that these interventions don't generalize well across different settings.

Our pleasure!

I edited a sentence about the UCT experiment to note where it took place.

Here is the country distribution of the papers we meta-analyzed:

Thanks for this post. Apologies, I have not had time to read through it in detail, but I would suggest that perhaps:

Hi Akhil,

Thanks for engaging.

DM'd you

Super interesting, thanks for sharing! I have some possibly dumb questions about the finding that these programs don't change behaviors:

Great questions!

Our summary doesn't cover this much, but the paper discusses measurement error a lot, because it's a serious problem. Essentially everything in this dataset is self-reported.

The few exceptions are typically either involvement outcomes or somewhat bizarre. For instance, male subjects watch a video on sexual harassment and then teach a female confederate how to golf, while a researcher watches through a one-way mirror and codes how often the subject touches the confederate and how sexually harassing those touches are.


It’s very plausible that people are misreporting what they do and what happens to them, and they might be doing so in ways that relate to treatment status. In other words, violence prevention programs might change how subjects understand and describe their lives. There is a dramatic illustration of this in Sexual Citizens where a young man realizes, in a research setting, that he once committed an assault. If this happens en masse, then treated subjects would be systematically more likely to report violence, and any true reductions would be harder to detect because of counteracting differences in reporting.
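To make the reporting-bias argument concrete, here is a toy calculation with hypothetical numbers (not from the paper or the dataset), assuming the share of subjects who report violence is simply true incidence times the probability that an incident gets recognized and reported. Under that simplification, a program that truly cuts incidence by 20% but also raises reporting among treated subjects can produce identical observed rates in both arms.

```python
def observed_rate(true_rate: float, reporting_rate: float) -> float:
    """Share of subjects who report violence, assuming reports = true incidence x reporting probability."""
    return true_rate * reporting_rate


# Hypothetical numbers for illustration only (not estimates from the paper).
control = observed_rate(true_rate=0.10, reporting_rate=0.60)
treated = observed_rate(true_rate=0.08, reporting_rate=0.75)  # 20% fewer incidents, more reporting

print(f"true reduction:     {(0.10 - 0.08) / 0.10:.0%}")
print(f"observed, control:  {control:.3f}")
print(f"observed, treated:  {treated:.3f}")
```

Both arms report 6%, so a self-report comparison would show no effect even though the (hypothetical) true effect is a 20% reduction.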

On the other hand, the median study in this literature lectures subjects on why rape myths are bad, and then asks them how much they endorse rape myths. There’s reason to think that people aren’t being entirely honest when they reply.

The magnitudes of these biases -- downward bias for behavioral measures, upward bias for ideas-based measures -- are, unfortunately, very hard to quantify in this literature because nothing (to my knowledge) compares self-reported outcomes with objective measures. But speaking for myself, I think the ideas-based outcomes are pretty inflated, while the behavioral outcomes are probably noisy rather than biased, so I believe our overall null on behavioral outcomes.

But yes, we need some serious work on the measurement front, IMO.

Nice. Thanks for an incredibly prompt and thorough response!

That makes sense to me. It is kind of interesting to me that the zeitgeist programs you mention are so different in terms of intervention size (if I'm understanding correctly, Safe Dates involved a 10-session curriculum, but the Men's Program involved a single session with a short video?), but neither seems effective at behavior reduction!

wow... oof...

Thank you for this summary + for conducting this research!

My pleasure and thank you! (I was able to mostly cut and paste my response from something else I was working on, FWIW)

By the way, I ended up buying a copy of Sexual Citizens because of this comment. I found it super interesting (if sad :( ), thanks for the rec!

Glad you liked it! I also got a lot out of Jia Tolentino's "We Come From Old Virginia" in her book Trick Mirror

Why did you choose 1986 as a starting point? Attitudes about sexual violence seem to have changed a lot since then, so I wonder if the potential staleness of the older studies outweighs the value of having more studies for the analysis. [Finding no meaningful differences based on study age would render this question moot.]

👋 our search extends to 1985, but the first paper was from 1986. We started our search by replicating and extending a previous review, which says "The start date of 1985 was chosen to capture the 25-year period prior to the initial intended end date of 2010. The review was later extended through May 2012 to capture the most recent evaluation studies at that time." I'm not too worried about missing stuff from before that, though, because the first legit evaluation we could find was from 1986. There's actually a side story to tell here about how the people doing this work back then were not getting supported by their fields or their departments, but persisted anyway.

But I think your concern is, why include studies from that far back at all vs. just the "modern era," however we define that (post MeToo? post Dear Colleague Letter?). That's a fair question, but your intuition about mootness is right: there's essentially zero relationship between effect size and time.
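An "overall slope" of this kind is the coefficient from regressing effect size on publication year. A minimal fixed-effect version weights each estimate by its inverse variance; the sketch below uses a hypothetical helper and toy inputs, and is not the authors' 4-exploratory-analyses script. A fuller meta-regression would add a between-study variance term and account for multiple estimates per study.

```python
def wls_slope(years: list[float], effects: list[float], ses: list[float]) -> float:
    """Inverse-variance-weighted least-squares slope of effect size on publication year."""
    w = [1 / se**2 for se in ses]  # more precise estimates get more weight
    sum_w = sum(w)
    x_bar = sum(wi * x for wi, x in zip(w, years)) / sum_w
    y_bar = sum(wi * y for wi, y in zip(w, effects)) / sum_w
    sxy = sum(wi * (x - x_bar) * (y - y_bar) for wi, x, y in zip(w, years, effects))
    sxx = sum(wi * (x - x_bar) ** 2 for wi, x in zip(w, years))
    return sxy / sxx  # change in effect size per year


# Toy inputs (not the paper's data).
print(wls_slope(years=[2000, 2010, 2020], effects=[0.2, 0.3, 0.4], ses=[0.1, 0.1, 0.1]))
```

A slope near zero, as the authors report, means later studies find roughly the same effects as earlier ones.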

Here's a figure that plots average effect size over time from our 4-exploratory-analyses.html script:

And the overall slope is really tiny:

Yes, that was the question, and this is a helpful response.

I have no opinion on what the right cutoff would be if the slope were meaningfully non-zero, as there is no clear way to define the "modern" era. Perhaps I would have sliced the data with various cutoffs (e.g., 1985, 1990, 1995 . . .) and given partial credence to each resulting analysis?
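The cutoff idea amounts to a simple sensitivity analysis: re-pool the estimates using only studies published in or after each candidate year and see whether the answer moves. Here is a minimal sketch with inverse-variance (fixed-effect) pooling and toy inputs; the function name is hypothetical and nothing here comes from the paper's code.

```python
def pooled_since(
    cutoffs: list[int], years: list[int], effects: list[float], ses: list[float]
) -> dict[int, float]:
    """For each cutoff year, the inverse-variance pooled effect of studies published in or after it."""
    results = {}
    for cutoff in cutoffs:
        kept = [(y, se) for yr, y, se in zip(years, effects, ses) if yr >= cutoff]
        if not kept:
            continue  # no studies left after this cutoff
        w = [1 / se**2 for _, se in kept]
        results[cutoff] = sum(wi * y for wi, (y, _) in zip(w, kept)) / sum(w)
    return results


# Toy inputs (not the paper's data): effects shrink over time, so later cutoffs pool lower.
print(pooled_since([1985, 2000, 2010, 2020], [1990, 2000, 2010], [0.4, 0.2, 0.0], [0.1, 0.1, 0.1]))
```

If the pooled estimates barely change across cutoffs, the choice of start year matters little, which is the situation the authors describe.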

Yeah, I was curious about this too, and we try to get at something theoretically similar by pulling out all the "zeitgeist" studies in an attempt to define the dominant approaches of a given era. Like, in the mid-2010s, everyone was thinking about bystander stuff. But if memory serves, once I saw the above graph, I basically dropped this whole line of inquiry because we were seeing no relationship between effect size and publication date. Having said that, behavioral outcomes get more common over time (see graph in original post), and that is probably also having a depressing effect on the relationship. There could be some interesting further analyses here -- we try to facilitate them by open-sourcing our materials.

By the way, apologies for saying above that your "intuition is moot," I meant "your intuition about mootness is correct" 😃 (I just changed it)

Executive summary: A meta-analysis of sexual violence prevention programs finds that most focus on changing ideas rather than behaviors, and while they have a moderate overall effect, they do not significantly reduce perpetration or victimization.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.