Crosspost of this on my blog.

I recently wrote an article making the case for giving to shrimp welfare. Lots of people were convinced to give (enough to help around half a billion shrimp avoid a painful death), but because the idea sounds silly, many people on Twitter, Hacker News, and various other places made fun of it, usually with very lame objections, mostly just pointing and sputtering. Here, I’ll explain why all the objections are wrong.

(Note: if any of the people making fun of shrimp welfare want to have a debate about it, shoot me a DM.)

Before I get to this, let me clarify: this is not satire. While shrimp welfare sounds weird, there’s nothing implausible about the notion that spending a dollar making sure that tens of thousands of conscious beings don’t experience a slow and agonizing death is good. If shrimp looked cute and cuddly, like puppies, no one would find this weird.

The basic argument I gave in the post was relatively simple:

- If a dollar given to an organization has a sizeable probability of averting an enormous amount of suffering and averts an enormous amount of expected suffering, it’s very good to donate to the organization.

- A dollar given to the shrimp welfare project has a sizeable probability of averting an enormous amount of suffering and averts an enormous amount of expected suffering.

- So it’s very good to give to the shrimp welfare project.

The second premise is very plausible. A dollar given to the shrimp welfare project makes painless about 1,500 shrimp deaths per year, probably totaling around 15,000 per dollar. It looks like the marginal dollar is even better, probably preventing around 20,000 shrimp from painfully dying. The most detailed report on the intensity of shrimp pain concluded, on average, that they suffer about 19% as intensely as we do, and as I’ve argued recently at considerable length, that’s probably an underestimate. This means that the average dollar given to the shrimp welfare project averts about as much agony as making painless ~2,850 human deaths (15,000 × 0.19), and the marginal dollar probably averts as much agony as making painless ~3,800 human deaths (20,000 × 0.19).

If a dollar made it so that almost 4,000 people were spared an excruciating death by slowly suffocating, that would avert an extreme amount of suffering. But that’s the average estimate of how much agony a dollar given to the SWP averts. Even if you think shrimp agony only matters 1% as much as human agony, it’s about as good as making painless 40 human deaths per dollar. So even by absurdly conservative estimates, it prevents extreme amounts of suffering.

The main objection anyone gave to premise 2 was that the Rethink Priorities (RP) report is too handwavy and that it’s hard to know if shrimp feel pain at all. As I’ve argued recently, we should think it’s very likely that shrimp feel pain, and quite likely that they feel intense pain. But even if you’re not sure if they feel pain or how much pain they feel, a low probability that they feel intense pain makes giving to the shrimp welfare project extremely high expected value. If you think there’s a 20% chance that they feel intense pain and that the 19% estimate is too much by a factor of 10, a dollar given to the shrimp welfare project still averts as much agony as giving painless deaths to 76 humans.
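For anyone who wants to check the arithmetic, here is a minimal sketch of how these figures multiply out. The function and parameter names are my own labels for illustration, not anything published by the SWP or Rethink Priorities, and the inputs are just the rough figures quoted above. The 1% case reflects one reading of that sentence: a 1% moral weight applied on top of the 19% intensity estimate.

```python
def human_equivalent_deaths(shrimp_per_dollar, intensity=0.19,
                            p_intense_pain=1.0, discount=1.0):
    """Painful human deaths that would be roughly as bad as the shrimp
    deaths made painless by one dollar (illustrative model only).

    shrimp_per_dollar: shrimp deaths made painless per dollar
    intensity:         shrimp pain intensity relative to human pain
    p_intense_pain:    credence that shrimp feel intense pain at all
    discount:          any further multiplier a skeptic wants to apply
    """
    return shrimp_per_dollar * intensity * p_intense_pain * discount


# Average and marginal dollar, taking the 19% estimate at face value.
average = human_equivalent_deaths(15_000)
marginal = human_equivalent_deaths(20_000)

# Marginal dollar if shrimp agony only matters 1% as much as human agony.
one_percent_weight = human_equivalent_deaths(20_000, discount=0.01)

# Marginal dollar with a 20% credence in intense pain and the 19% figure
# cut by a factor of 10.
skeptical = human_equivalent_deaths(20_000, p_intense_pain=0.2, discount=0.1)

for label, value in [("average", average), ("marginal", marginal),
                     ("1% weight", one_percent_weight),
                     ("skeptical", skeptical)]:
    print(f"{label}: about {round(value)} human-equivalent deaths per dollar")
```

The point of writing it this way is that every skeptical adjustment is just another multiplier, so you can plug in your own credences and see how pessimistic you need to be before the number stops being large.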

Saying “we don’t know how much good this does, and it’s hard to be precise, therefore we should ignore it” is deeply illogical (there is an excellent article about this). The fact that we don’t know precisely how much good something does doesn’t mean we shouldn’t try to quantify it. It’s more rational to rely on rough estimates than to ignore all estimates and then make fun of people using estimates to justify funding things that sound weird.

People also objected by suggesting that lots of small pains don’t add up to be extreme agony. But I already addressed that in the post—first of all, I’m doubtful of the ethical claim, and second of all, even if lots of tiny bads don’t add up to be one extreme bad, shrimp painfully dying is most likely above the threshold of mattering significantly. It’s at least likely enough to be above the threshold that preventing it has very high expected value. If a shrimp painfully dying is on average 19% as painful as a human painfully dying, then preventing it is a very good bet.

The main objections have been to premise one, which says that it’s good to spend a dollar if it has a sizeable chance of averting huge amounts of pain and suffering and averts a large amount of expected pain and suffering. The main counterargument people gave has been simply reiterating over and over again that they don’t care about shrimp.

Here’s how I see this. Imagine someone was savagely beating their dog to the point of near death because they don’t consider their dog’s interests. You argue they should stop doing this; dogs are capable of pain and suffering, so it’s hard to see what justifies mistreating them so egregiously. It would be wrong to hurt a human with dog-like cognitive capacities, so it should also be wrong to hurt a dog. “You don’t understand,” they reply, “I don’t care about dogs at all. I would set them on fire by the millions if it brought me slight happiness.”

Merely reiterating that you have some ethical judgment is not, in fact, a defense of the ethical judgment. If someone gives an argument against some prejudice, simply repeating that you have the prejudice is not a response. In response to an argument against racism, it wouldn’t do for a racist to simply repeat “no, you don’t understand, I’m really racist—I have extreme prejudice on the basis of race.”

In my article I argued:

- When you consider the insane scale of effectiveness, giving to the SWP is not that counterintuitive. If there were 20,000 shrimp about to be suffocated in front of you, and you could make their deaths painless by using a dollar in your pocket, that would seem to be a good use of a dollar.

- Intuitively, it seems that extreme suffering is bad. When we reflect on what makes it bad, the answer seems to be: what it feels like. If you became much less intelligent or found out you were a different species, that wouldn’t make your pain any less bad. But if what makes pain bad is how it feels, then because shrimp can feel pain, their suffering matters. Ozy has a good piece about this, reflecting on their experience of “10/10 pain—pain so intense that you can’t care about anything other than relieving the pain”:

> It was probably the worst experience of my life.
>
> And let me tell you: I wasn’t at that moment particularly capable of understanding the future. I had little ability to reflect on my own thoughts and feelings. I certainly wasn’t capable of much abstract reasoning. My score on an IQ test would probably be quite low. My experience wasn’t at all complex. I wasn’t capable of experiencing the pleasure of poetry, or the depth and richness of a years-old friendship, or the elegance of philosophy.
>
> I just hurt.
>
> So I think about what it’s like to be a chicken who grows so fast that his legs are broken for his entire life, or who is placed in a macerator and ground to death, or who is drowned alive in 130 degrees Fahrenheit water. I think about how it compares to being a human who has these experiences. And I’m not sure my theoretical capacity for abstract reasoning affects the experience at all.
>
> When I think what it’s like to be a tortured chicken versus a tortured human—
>
> Well. I think the experience is the same.

- There’s a long history of humans excluding others that matter from their moral circle because they don’t empathize with them. Thus, if you find yourself saying “I don’t care about group X in the slightest,” the historical track record isn’t kind to your position. Not caring about shrimp is very plausibly explained by bias—shrimp look weird and we don’t naturally empathize with them, so it’s not surprising that we don’t value their interests.

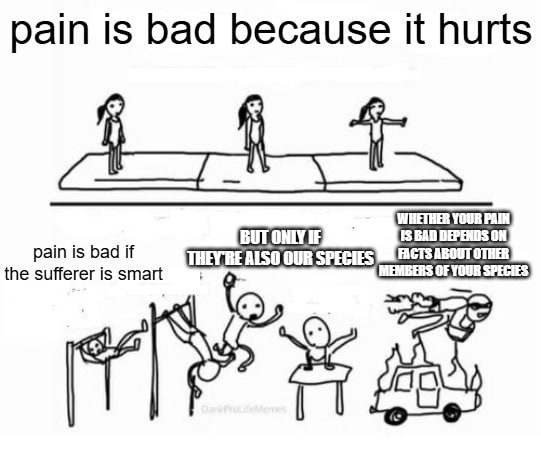

- It’s very unclear what about shrimp is supposed to make their suffering not bad. This is traditionally known as the argument from marginal cases (or, as it were, from marginal crayfish); for the criteria that are supposed to make animals’ interests irrelevant, if we discovered humans having those traits, we would still think their interests mattered.

People proposed a few things that supposedly make shrimp pain irrelevant. The first was that they were very different from us. Yet surely if we came across intelligent aliens of the sort that occur in fiction that were very different from us, their extreme suffering would be very bad, and it would be wrong to egregiously harm them for slight benefit. Whether something is similar to us seems morally irrelevant. It wouldn’t be justified for aliens very different from us to hurt us just because we’re different.

Second, people proposed that shrimp are very unintelligent. But if there were mentally disabled people who were as cognitively enfeebled as shrimp, we wouldn’t think their suffering was no big deal. How smart you are doesn’t seem to affect the badness of your pain; when you have a really bad headache or are recovering from a surgery, the badness of that has nothing to do with how good you are at calculus and everything to do with how it feels.

Third, people proposed that the thing that matters is that they aren’t our species. But surely species is morally irrelevant. If we discovered that some people (say, Laotians) had the same capacities as us but were aliens and thus not our species, their pain wouldn’t stop being bad.

Fourth, people proposed that what matters morally is being part of a smart species. But if we discovered that the most mentally disabled people were aliens from a different species, their pain obviously wouldn’t stop being bad. How bad one’s pain is depends on facts about them, not about other members of their species (if it turned out that humans were mostly about as unintelligent as cows, but that the smartest ones had been placed on Earth, the pain of mentally disabled humans wouldn’t stop being a big deal). The reason that the pain of mentally disabled people is bad has to do with what it’s like for them to suffer, not with other members of their species. If an alien came across mentally disabled people or babies, it wouldn’t need to know how smart other people are to decide whether it would be bad to hurt them.

Even if you’re not sure that pain is bad because of how it feels, rather than something about our species, as long as there’s even a decent probability that it’s bad because of how it feels, the shrimp welfare project ends up being a good bet.

The last objection, and potentially the most serious, is that money given to the shrimp welfare project is very valuable but less good than money given to other charities. Now, doing a detailed cost-benefit analysis between the SWP and other animal charities is above my paygrade, though I mostly agree with Vasco’s analysis. So I’ll just explain why I think that the shrimp welfare project is better than longtermist organizations. Longtermist organizations are those that try to make the future go better. The argument for prioritizing them is that the future could have so many people that the expected value of longtermist interventions probably swamps other things.

Imagine that you could spend a dollar either giving to longtermist organizations or making 4,000 people’s deaths painless. Intuitively it seems like giving the people painless deaths is better—a thousand dollars given would prevent nearly 4 million painful human deaths. At some point, short term interventions become so effective that they’re worthwhile given that:

- It just intuitively seems like they are. There seems to be something obviously wrong about giving 1,000 dollars to a longtermist org rather than giving painless deaths to 4 million people.

- Preventing tons of terrible things has lots of desirable longterm ramifications. Perhaps the shrimp welfare project will prevent shrimp farming from spreading to the stars and torturing quadrillions of shrimp.

- In the future, there might be many simulations run of the past. If this is right, then a past in which there was lots of shrimp farming going on will cause unfathomable amounts of suffering that scales with the size of the future. Similar longtermist swamping considerations apply here. There are lots of other speculative ways that preventing bad things like painful shrimp deaths can have way more benefits than one would expect.

- One should have some decent normative uncertainty. For this reason “prevent lots of terrible things from happening” is generally a good bet, given that the shrimp welfare project is thousands of times more neglected than longtermism.

- Over time I’ve come to think it’s less obvious that the future is good in expectation. We might spread wild animal suffering across the universe and inflict unfathomable suffering on huge numbers of digital minds. I’d still bet it’s good in expectation, but it makes it less of a clear slam dunk.

For this reason, until convinced otherwise, I’m giving to the shrimp.

I find that the simplest argument against the shrimp welfare movement is that if the same reasoning is applied to demodex mites or nematodes you could easily come up with expected value calculations that prove that every pursuit of humanity is irrelevant in comparison to the importance of our finding a solution to the suffering of these microscopic organisms.

Reductio ad absurdum: these expected value Fermi estimates are therefore probably not a complete, or maybe even useful, approach to ethics.

I’m an ardent critic of the use of naive EV calculations in EA. But what determines whether something is a naive EV calculation isn’t whether the probability is low, but whether the level of uncertainty in that probability is super high.

Any EV calc we “came up with” about mites would be uncertain to the point of being worthy of zero credence. It would be a Pascal’s mugging.

The case for stunning shrimp, as far as I can tell, is far less uncertain. I think Omnizoid is right that it’s hard to dispute it being a very strong bet.

But I also want to mention that the more I learn about our “surest bets” for doing good (e.g. bednets) the more uncertain I discover them to be. This leads me to be super reluctant to go “all in” on anything the way you suggest we might be inclined to with the EV calcs for microscopic organisms.

In conclusion: SWP looks like a highly cost-effective org for doing good in the world. We should support it, but we shouldn’t go all in on it or any other cause/intervention. The world is messy enough that we should be highly pluralistic (even while continuing to prioritize and make trade-offs).

I agree that there's a big difference between shrimp and nematodes, although the uncertainty about shrimp sentience remains extremely high, to the point where I think it's not unreasonable that some people consider it something like a Pascal's mugging (personally I don't put it in that category).

Yes, shrimp "sentience" or "capacity to suffer" is less uncertain than that of a mite, but it's still very uncertain even under models like RP's, which I think probably favor animals.

Things with a 50% chance of being very good aren't Pascal's muggings! Your decision theory can't be "Pascal's mugging means I ignore everything with probability less than .5 of being good."

I agree we don't ignore everything with a probability of less than 0.5 of being good.

Can you clarify what you mean by "50% chance of being very good"?

1) Rethink Priorities gives shrimp a 23% chance of sentience

2) Their non-sentience-adjusted welfare range then goes from 0 (at the 5th percentile) to 1.095 (at the 95th percentile). From zero to more than a human is such a large uncertainty range that I could accept arguments at this point that it might be "unworkable" like Henry says (personally I don't think it's unworkable)

3) Then, after adjusting for sentience, it looks like this.

Whatever way the cookie crumbles I think that's a lot smaller than a "50% chance of being very good" and also a high uncertainty range.

The calculations around shrimp welfare have very high uncertainty. Look at the confidence intervals on the Rethink Priorities welfare ranges. I’m not clear on why this uncertainty is workable and demodex mite uncertainty is not.

But those guys almost definitely aren't conscious. There's a difference between how you reason about absurdly low probabilities and decent probabilities.

(I also think that we shouldn't a priori rule out that the world might be messy such that we're constantly inadvertently harming huge numbers of conscious creatures).

“But those guys almost definitely aren't conscious”. Based on what?