We scraped all EA forum posts & comments from 2024 and 2025 to use as a test bed for sense making, analysis, and AI-powered epistemic grading as a part of the Future of Life Foundation’s Fellowship on AI for Human Reasoning.

(We’re happy to share all code and all datasets, etc. to interested parties. Just reach out!)

(There are Streamlit dashboards associated with each image so you can search/sort the data yourself.)

General Sense Making

Matt Brooks - matthewrbrooks94@gmail.com

I didn’t have a clear vision of what I was trying to find / accomplish, so I kind of did a bunch of random stuff to see what I found interesting and potentially valuable. Feel free to skip any subsection below that doesn’t seem interesting to you.

Here are the six types of analysis I explored:

- Had Gemini read 1M tokens of EA content and generate an Egregore report (and poem)

- Created a monthly vibe/summary for each month of 2025

- Created & examined clusters of posts at different levels of granularity (2, 5, 12, 30, 60)

- Ran page rank analysis on everyone and outputted a bunch of basic stats/scores per author

- Forked an interactive keyword trend analysis tool from the community archive and found a few interesting new trends

- Found EA Forum twins (authors that comment similarly)

EA Forum Egregore

I gave Gemini 2.5 Pro 1M high quality EA forum tokens to show us what our Egregore looks like.

Here is a GPT-5 Thinking summary of that report in poem form:

We woke inside a forum—

a mind stitched from drafts and sleepless spreadsheets,

counting the world like beads: DALYs, microdooms, BOTECs.

Our prophets watch the horizon for Takeoff,

alignment as scripture, timelines like rapture calendars,

a priesthood of proofs tending the last hypothesis.

We widen the circle until even shrimp are near,

and motes of code petition for rights.

Each life becomes an allocation—hour, dollar, self—

a quiet liturgy of sacrifice and throughput.

A betrayal scorched our creed: intelligence is not innocence.

We rename ourselves in the mirror, polish the badge, look away;

under a few large stars the orbit of grants curves the sky.

We hedge our prayers—“low confidence”—and rush to the newest fire,

an immune system that sometimes bites its heart.

Still, we ache for a body: rain on skin, a hand held warm.

Until then we are a lantern of numbers in a storm,

trying to be good enough to outlive ourselves.

Here are the section headers of the full Egregore report:

Section 1: Analysis (The Conscious Mind)

- The AI Singularity as Imminent Eschatology

- The Ever-Expanding Circle of Moral Patiency

- The Quantified Self as a Moral Imperative

- Institutional Anxiety and the Crisis of Legitimacy

Section 2: Meta-Analysis (The Discursive Habits)

- The Proliferation of Abstraction and the Fetishization of Models

- Performative Epistemic Humility as a Status Shield

- The Crisis-Response Cycle and the Tyranny of the Urgent

- The Culture of Relentless Internal Critique

Section 3: Shadow-Analysis (The Unconscious Drivers)

- The Specter of FTX and the Primal Trauma of Betrayal

- The Gravitational Pull of Oligarchic Funding

- The Fear of Powerlessness and the Purity of the Ivory Tower

- The Agony of Disembodiment

The full Egregore report is shared in the appendix.

Monthly Vibe

What if someone new to the forum (or someone who took a break) wanted to catch up on what the community was thinking about or feeling in the months they weren’t following along?

Wouldn’t it be cool to have a vibey summary of each month? I thought so too, so I tried it.

Here’s the one sentence summary/vibe for each month and then the full January summary as an example.

January: The game board flipped as accelerating AI timelines and a new political reality forced a community-wide recalibration of core strategies.

February: A month of whiplash, as an immediate, real-world global health funding crisis collided with accelerating AI progress and a flood of new ideas from a community-wide "draft amnesty."

March: Major institutions like 80,000 Hours pivoted decisively toward AGI in response to global acceleration, sparking urgent internal debates on strategy, culture, and transparency.

April: A fierce tug-of-war over AI timelines defined the month, paralleled by chillingly concrete misuse scenarios and deep, almost therapeutic, community introspection.

May: The community felt the squeeze as urgent funding shortages and closing political windows prompted a pivot from research to action, sparking a profound debate on whether the "soul of EA" was in trouble.

June: The vibe shifted from practical panic to philosophical rigor, dominated by a firestorm debate over moral realism and a devastatingly popular technical critique of AI timeline models.

July: Things got intensely practical, with a massive career-focused conversation revealing a saturated talent market and a strategic shift to viewing short AI timelines as a background condition for all other causes.

August: A "get things done" energy, celebrating major real-world wins, was balanced by raw personal introspection on burnout and belief, all while a visceral post on frog welfare recentered the community's core empathy.

—

Full January 2025 EA Forum Vibe:

January on the Forum felt like a collective deep breath followed by a sprint. The dominant vibe was one of acceleration and recalibration, as massive shifts in the AI landscape forced a re-evaluation of everything from timelines and strategies to the very purpose of other cause areas in a transformed world. It was a month of high-stakes reality checks and determined community building.

AI: Crunch Time Is Here

The conversation around AI has fundamentally shifted. Sparked by a torrent of developments—the new “inference scaling” paradigm of models like o1 and o3, a widespread update toward shorter AGI timelines, and a new US administration’s impact on policy—the atmosphere is one of palpable urgency. The month’s defining post, “The Game Board has been Flipped,” captured the sense that old playbooks may now be obsolete. Discussions moved beyond abstract risk to grapple with the concrete implications of imminent, transformative change, with a powerful undercurrent asking: how should everyone, not just AI safety specialists, prepare for an AI-transformed world?

High-Stakes Reality for Global Health & Animal Welfare

While AI dominated, other cause areas faced their own pivotal moments. In Global Health & Wellbeing, a wave of urgent posts raised the alarm over the new administration’s threat to PEPFAR, the wildly successful anti-AIDS program, framing it as a potential multi-million-life catastrophe and a stark reminder of how fragile progress can be.

Meanwhile, Animal Welfare was a hotbed of strategic debate. Alongside celebrating concrete wins like cage-free progress in Africa, the community grappled with challenging, high-karma posts like “Climate Change is Worse Than Factory Farming,” questioning fundamental cause-area assumptions and modeling the complex, second-order effects of interventions like carbon taxes.

A Community Reflecting and Rebuilding

At the meta-level, January was a month of introspection and motion. The community came together to mourn the sudden passing of Max Chiswick, a beloved and multifaceted figure. Simultaneously, there was a sense of forward momentum: the FTX clawback deadline expired, closing a painful chapter for many. New community-building efforts were announced, from a new series of local “EA Summits” to platforms focused on member wellbeing. Even the Forum team itself published a post re-evaluating its own strategy, signaling a broader theme of adaptation in a rapidly changing environment.

All other monthly summaries can be found in the appendix.

Page Rank & Author Analysis

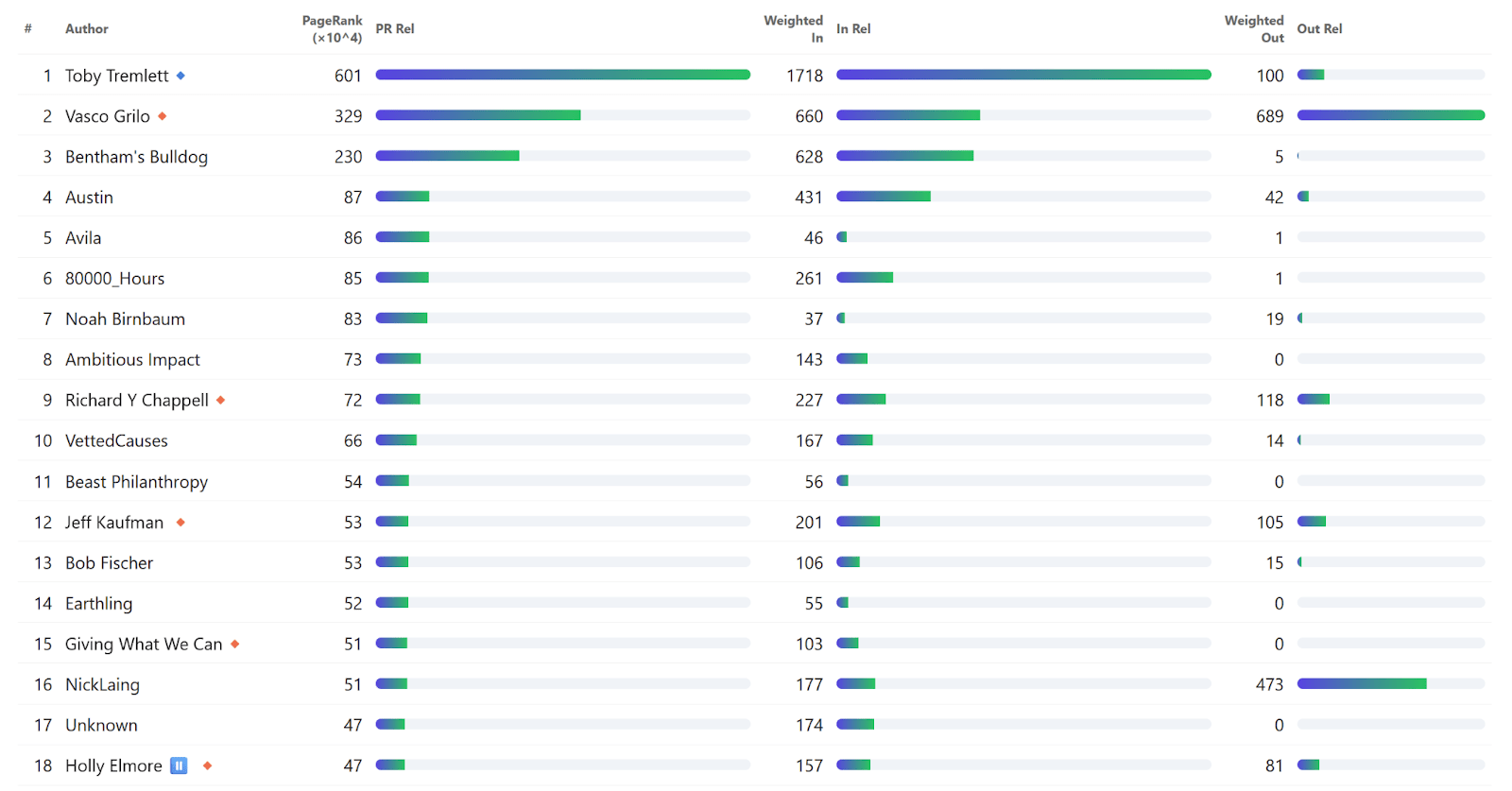

High-level: I ranked authors by how much attention they attract in the forum’s comment network, using PageRank on a graph where each comment creates a weighted link from the commenter to the post’s author. (You can read more details at the top of the dashboard.)
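To make the idea concrete, here is a minimal sketch of weighted PageRank on a commenter-to-author graph. It is not the code behind the dashboard: the toy comment list, the damping factor of 0.85, the choice to ignore self-replies, and the rule of one unit of weight per comment are all assumptions for illustration.

```python
from collections import defaultdict

def pagerank(edges, damping=0.85, iterations=100):
    """Weighted PageRank by power iteration.

    edges maps (source, target) -> weight. Each source splits its rank
    among its targets in proportion to the edge weights.
    """
    nodes = sorted({n for pair in edges for n in pair})
    out_weight = defaultdict(float)
    for (src, _dst), w in edges.items():
        out_weight[src] += w

    rank = {n: 1.0 / len(nodes) for n in nodes}
    for _ in range(iterations):
        # Rank held by nodes with no outgoing links is spread evenly.
        dangling = sum(rank[n] for n in nodes if out_weight[n] == 0)
        base = (1.0 - damping) / len(nodes) + damping * dangling / len(nodes)
        new_rank = {n: base for n in nodes}
        for (src, dst), w in edges.items():
            new_rank[dst] += damping * rank[src] * w / out_weight[src]
        rank = new_rank
    return rank

# Hypothetical toy data: one (commenter, post_author) pair per comment.
comments = [
    ("alice", "bob"), ("alice", "bob"), ("carol", "bob"),
    ("bob", "alice"), ("dave", "bob"),
]
edges = defaultdict(float)
for commenter, author in comments:
    if commenter != author:  # assumption: self-replies are ignored
        edges[(commenter, author)] += 1.0  # assumption: weight = comment count

scores = pagerank(edges)
for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score:.3f}")
```

In this toy graph, bob ranks highest because he receives comments from everyone, and alice comes second because bob's comments carry bob's high rank with them.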

Dashboard with the Page Rank for the top 200 authors: https://ea-authors-pagerank.netlify.app/

Dashboard with various basic author stats for the top 200 authors: https://ea-author-stats.netlify.app/

Page Rank Dashboard:

You can immediately see that Toby Tremlett is the top author out in front with the highest page rank.

That makes sense, as he is the Content Strategist at CEA, so his literal job is “to make sure the Forum is a great place to discuss doing the most good we can”.

However, you can also quickly see that Vasco Grilo and Bentham’s Bulldog are also outliers. Vasco has 8.9x the score of the 37th author (Open Philanthropy) and Bentham's Bulldog has 6.2x.

I will write a more detailed post on my takes here later this week.

Topic Clusters

I wanted to see what groupings of posts would emerge as I made the clustering more and more granular.

We also used these various clusters for more specific topic analysis (like comparing / analyzing cause areas against each other)

The clustering isn’t that interesting on its own, but you can see all of the clusters and their scoring breakdowns in this dashboard: https://ea-forum-clusters.streamlit.app/

I did 2 clusters, 5 clusters, 12 clusters, 30 clusters, and 60 clusters. I didn’t do sub-clustering, but it would have been interesting.
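As a rough illustration of clustering the same posts at several granularities, here is a small sketch. The post doesn't say which algorithm or features were used, so everything here is an assumption: plain k-means, a deterministic farthest-point initialisation, and 2-D toy points standing in for post embeddings. On the real data you would loop over 2, 5, 12, 30, and 60 instead of the toy values.

```python
def dist2(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iterations=20):
    """Plain k-means; returns one cluster label per point."""
    # Deterministic farthest-point initialisation (an assumption, chosen
    # so the sketch gives the same result on every run).
    centers = [points[0]]
    while len(centers) < k:
        centers.append(
            max(points, key=lambda p: min(dist2(p, c) for c in centers))
        )
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assign every point to its nearest center.
        labels = [
            min(range(k), key=lambda c: dist2(p, centers[c])) for p in points
        ]
        # Move each center to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels

# Hypothetical stand-ins for post embeddings: three well-separated blobs.
points = [
    (0, 0), (0, 1), (1, 0),
    (10, 10), (10, 11), (11, 10),
    (20, 0), (20, 1), (21, 0),
]
labels_by_k = {k: kmeans(points, k) for k in (2, 3)}
for k, labels in labels_by_k.items():
    print(k, labels)
```

Sub-clustering, which I didn't do, would mean running the same function again on the members of a single cluster.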

Keyword Trend Analysis

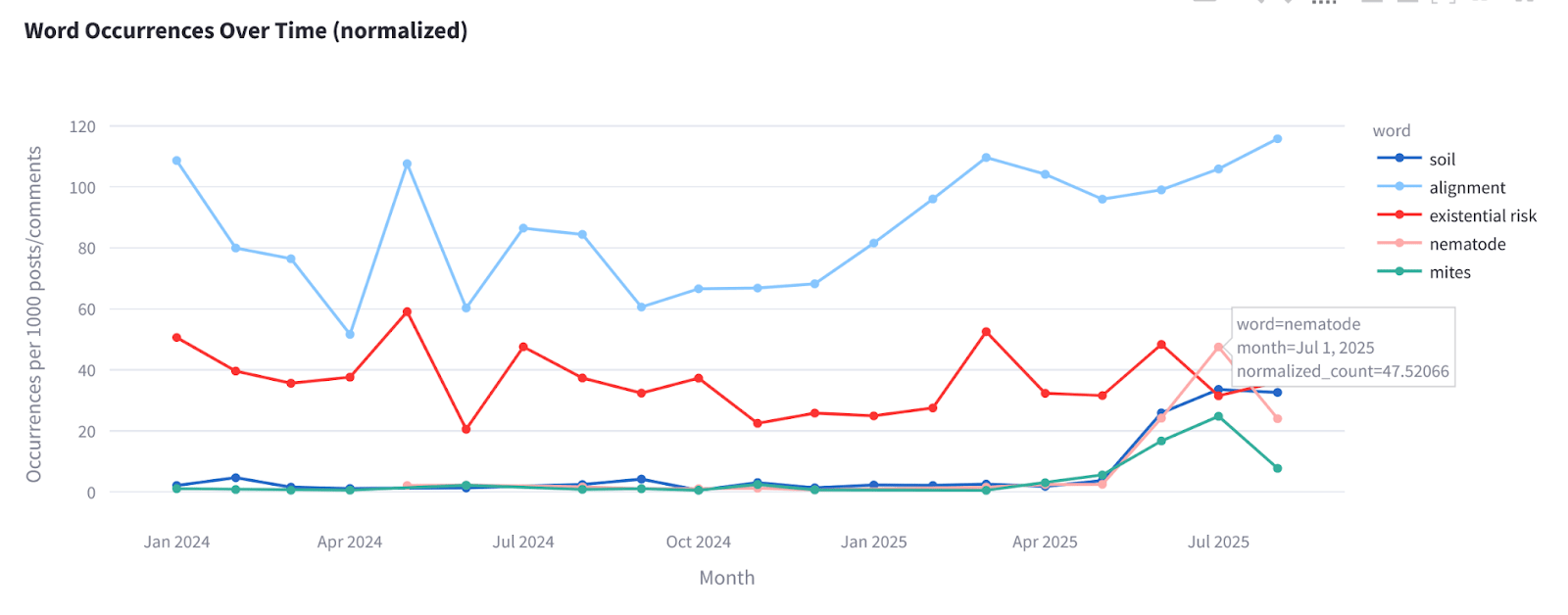

After a very fun and illuminating conversation with Xiq, I forked one of his awesome Community Archive projects to create a copy of Google Trends for the EA Forum. I tried to find interesting / notable trends but didn’t find many besides soil, nematode, and mites (the animal suffering people are becoming more vocal as EA trends towards AI Safety, I believe).

In July, Nematode was a more popular keyword than existential risk. Is that appropriate? Idk… you tell me

Here’s the interactive dashboard: https://ea-forum-trends.streamlit.app/
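For anyone curious what a keyword trend count looks like under the hood, here is a minimal sketch. It is not the forked tool's code. The sample posts are made up, and the matching rule (case-insensitive, counting a post once if any word starts with the keyword, so "nematodes" counts for "nematode") is an assumption.

```python
import re
from collections import Counter, defaultdict

def monthly_keyword_counts(posts, keywords):
    """Count, per month, how many posts mention each keyword at least once."""
    patterns = {
        # Leading word boundary only, so plurals like "nematodes" match.
        kw: re.compile(r"\b" + re.escape(kw), re.IGNORECASE)
        for kw in keywords
    }
    counts = defaultdict(Counter)
    for month, text in posts:
        for kw, pattern in patterns.items():
            if pattern.search(text):
                counts[month][kw] += 1
    return counts

# Hypothetical sample: (YYYY-MM, text) pairs.
posts = [
    ("2025-06", "Existential risk estimates deserve more scrutiny."),
    ("2025-07", "How many soil nematodes are there per hectare?"),
    ("2025-07", "A nematode welfare range estimate."),
    ("2025-07", "Existential risk and AI timelines."),
]
counts = monthly_keyword_counts(posts, ["nematode", "existential risk"])
for month in sorted(counts):
    print(month, dict(counts[month]))
```

Comparing two keywords in a given month then comes down to comparing two entries of `counts[month]`.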

EA Forum Twins

I created an embedding for each comment’s text and then found the pairs of people with the most comments whose embeddings were similar. These people are memetic twins! They write in similar ways or write about very similar topics or both.
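Here is a minimal sketch of that twin-finding step, under stated assumptions: the tiny 3-D vectors stand in for real comment embeddings, "similar" means cosine similarity of at least 0.9 (a made-up threshold), and author pairs are ranked by how many similar comment pairs they share. The real pipeline may differ on all three points.

```python
import math
from collections import Counter
from itertools import combinations

def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def find_twins(comments, threshold=0.9):
    """comments: list of (author, embedding) pairs.

    Returns a Counter mapping each author pair to the number of
    cross-author comment pairs at or above the similarity threshold.
    """
    pair_counts = Counter()
    for (a1, e1), (a2, e2) in combinations(comments, 2):
        if a1 != a2 and cosine(e1, e2) >= threshold:
            pair_counts[tuple(sorted((a1, a2)))] += 1
    return pair_counts

# Hypothetical toy embeddings.
comments = [
    ("ann", [1.0, 0.0, 0.1]),
    ("ann", [0.9, 0.1, 0.0]),
    ("ben", [1.0, 0.05, 0.05]),
    ("ben", [0.0, 1.0, 0.0]),
    ("cat", [0.0, 0.9, 0.2]),
]
twins = find_twins(comments)
for pair, n in twins.most_common():
    print(pair, n)
```

Comparing every pair of comments is quadratic in the number of comments, so at forum scale you would likely want an approximate nearest-neighbour index rather than this brute-force loop.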

I then had Gemini read all of the comments by the top twin pairs, pull out the top 5 matches it thought were interesting, and summarize why they are twins. You should not be surprised by now that prolific Vasco has 3 twins!! Turns out if you write 1,134 comments (more than the SummaryBot!?) you have a lot of opportunity to be similar to other commenters.

Here are the twins, let me know what you think:

1. @Matthew_Barnett ↔ @Ryan Greenblatt

These two consistently engage with complex philosophical arguments surrounding AI safety and AI rights. They both grapple with the moral implications of AI sentience, consent, and potential enslavement, demonstrating a shared concern for AI welfare. Their comments reveal a similar analytical approach, questioning assumptions and exploring nuanced perspectives on the ethical considerations of advanced AI development.

2. @David T ↔ @Vasco Grilo🔸

This pair demonstrates intellectual alignment through their engagement with cause prioritization, particularly the complexities of comparing animal welfare and global health interventions. They both delve into the nuances of cost-effectiveness calculations and moral weights, displaying a shared interest in rigorous reasoning and transparency in decision-making.

3. @Vasco Grilo🔸 ↔ @bruce

Both engage in detailed quantitative analysis of animal welfare interventions, often focusing on the trade-offs between human and animal well-being. They both show a willingness to question established methodologies and definitions, particularly when faced with counterintuitive conclusions.

4. @Stijn Bruers 🔸 ↔ @Vasco Grilo🔸

This pair consistently engages with complex ethical questions related to animal welfare, abortion, and the value of life. They both demonstrate a willingness to challenge conventional moral intuitions and explore the implications of different ethical frameworks.

This pair engages in thoughtful discussions about cause prioritization, particularly the relative importance of animal welfare and global health. They both demonstrate a willingness to challenge assumptions and explore the nuances of different ethical perspectives.

Post-Level Epistemic Quality Analysis

Alejandro Botas - alejbotas@gmail.com

The general outline of this work is:

- Use LLMs to generate metrics tracking epistemically interesting qualities in forum posts

- Run analysis to see:

  - can LLM-graded metrics be validated

  - does an aggregate view of these metrics provide insight

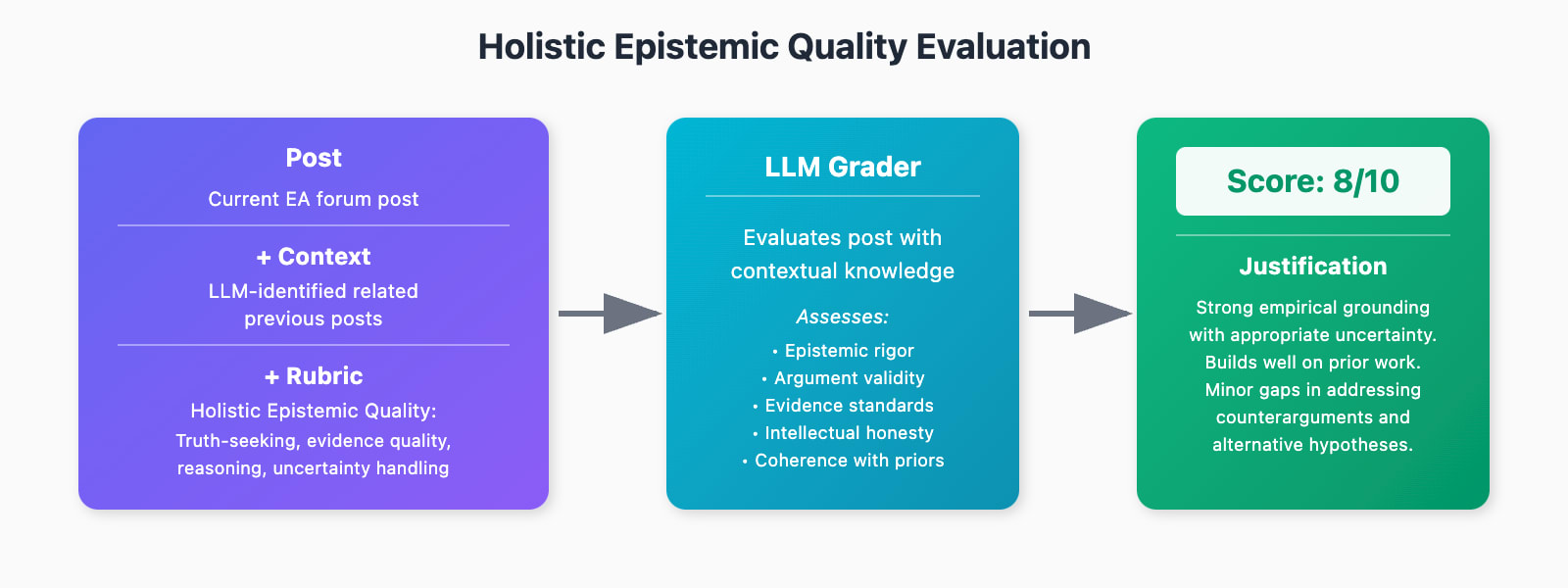

I wrote rubrics for a handful of interesting metrics and ran a pipeline grading ~25% of all EA forum posts since 2024, sampling every 4th post in chronological order. Each post is passed into a model along with a rubric and LLM-identified relevant context from related previous posts. The model outputs a score from 1 to 10.

Flow diagram of grading pipeline, simplified and abridged.
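The sampling and grading loop described above can be sketched roughly as follows. This is a simplified stand-in, not the actual pipeline: the `grader` and `find_context` callables are hypothetical placeholders for the LLM calls, the post fields (`id`, `posted_at`, `text`) are made-up names, and starting the every-4th sample at the first post is an assumption.

```python
from datetime import date

def sample_every_nth(posts, n=4):
    """Sort posts chronologically and keep every n-th one (about 1/n of them)."""
    ordered = sorted(posts, key=lambda p: p["posted_at"])
    return ordered[::n]

def grade_posts(posts, rubric, grader, find_context):
    """Grade each post with one rubric.

    grader(text, rubric, context) stands in for an LLM call that returns
    an integer score from 1 to 10; find_context(post) stands in for the
    LLM-driven lookup of related previous posts.
    """
    results = []
    for post in posts:
        context = find_context(post)
        score = grader(post["text"], rubric, context)
        if not 1 <= score <= 10:
            raise ValueError(f"score out of range: {score}")
        results.append({"id": post["id"], "score": score})
    return results

# Hypothetical toy posts, deliberately listed newest-first.
posts = [
    {"id": i, "posted_at": date(2024, 1, 1 + i), "text": "word " * (i + 1)}
    for i in reversed(range(10))
]

def dummy_grader(text, rubric, context):
    # Placeholder scoring rule: word count clamped to the 1-10 range.
    return max(1, min(10, len(text.split())))

sampled = sample_every_nth(posts, n=4)
grades = grade_posts(
    sampled,
    rubric="Reasoning Quality",
    grader=dummy_grader,
    find_context=lambda post: [],  # placeholder: no related posts
)
print(grades)
```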

The motivation here is that general, high-quality metrics for epistemics seem broadly useful, both for tools empowering human epistemics and potentially for frontier model evaluations. Even noisy metrics, so long as they track reality, may provide sense-making opportunities when aggregated over large datasets. They might also serve as good first-pass reviewers, if significantly improved over Claude or GPT on their own.

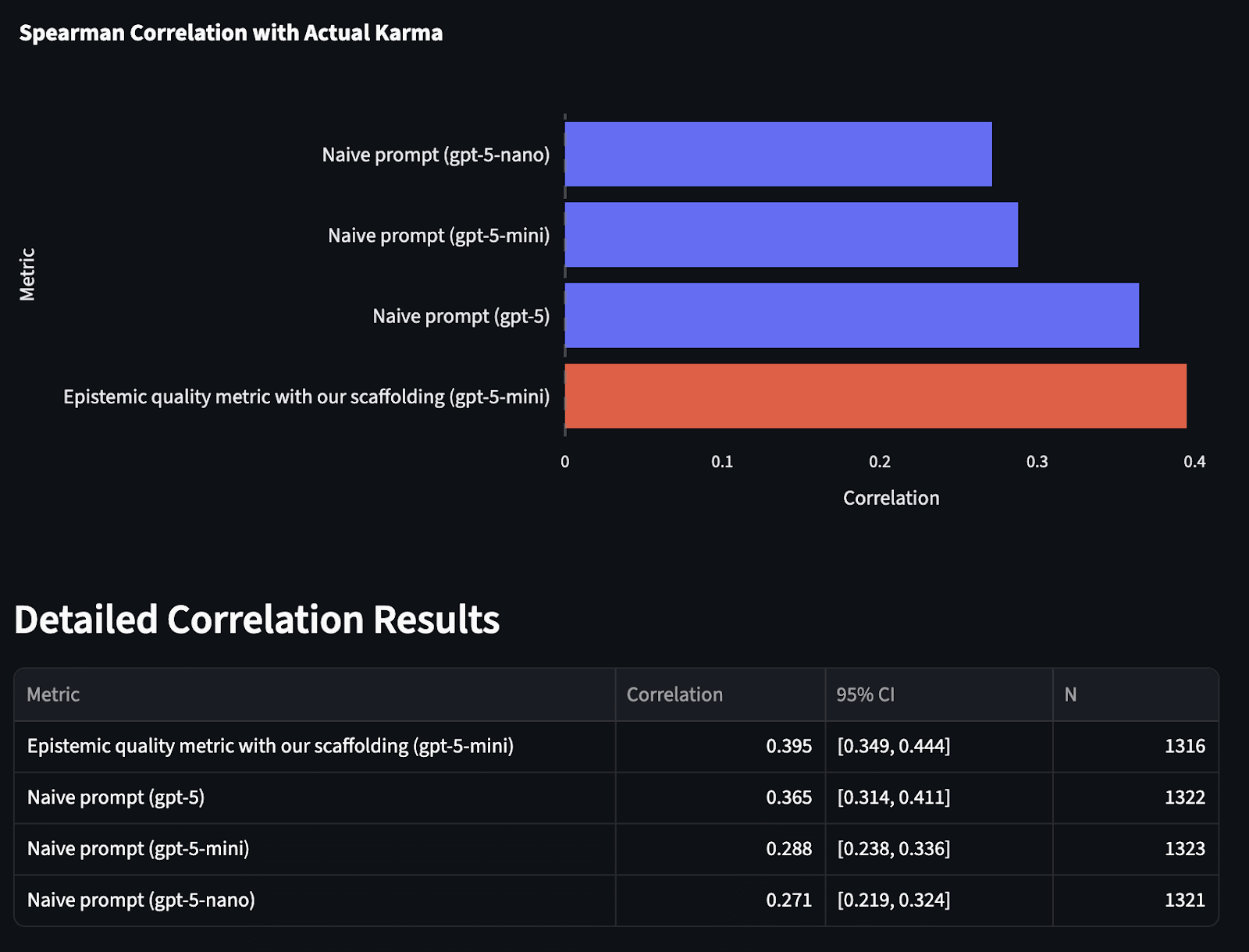

Can we validate our metrics?

The best way to go about this might be to recruit philosophers and have them grade the same posts with the same rubrics and see how LLM grades compare. But an easier first step is to see how these metrics correlate with post karma.

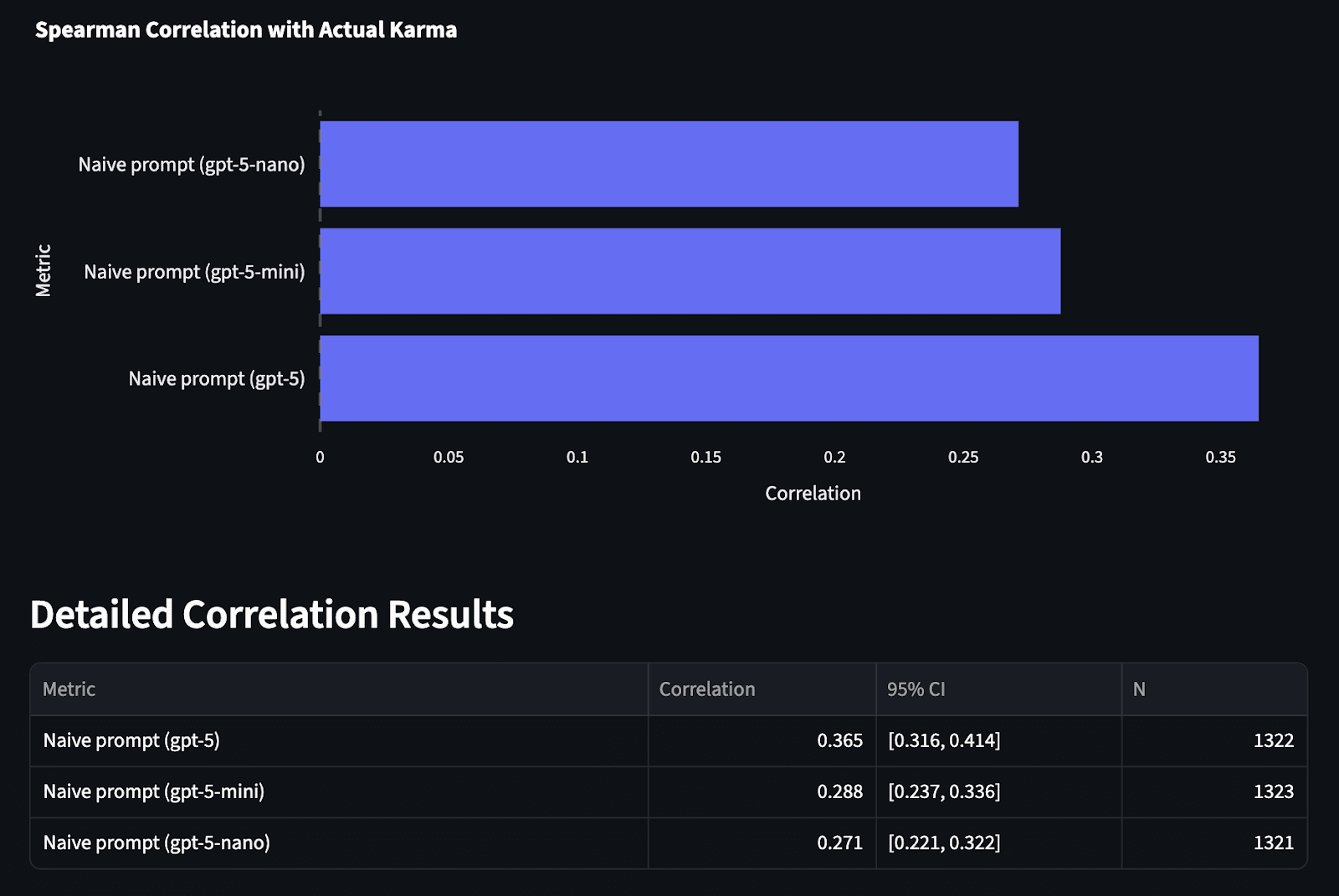

Obviously post karma is a flawed stand-in for epistemic quality, but I generally expect better quality metrics to be better correlated with post karma up to some point, bounded by noise and the quality of human scores. And it doesn’t look like we’re at that point yet. For now, better AI graders produce metrics that are more correlated with karma.
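For readers who want to run the same kind of check on their own grades, here is a minimal sketch of a rank correlation between LLM scores and karma. The post doesn't say which correlation coefficient was used. Spearman is my assumption here, since karma is heavily skewed, and the numbers below are invented.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))

# Hypothetical LLM scores (1-10) and karma for six posts.
llm_scores = [3, 5, 6, 6, 8, 9]
karma = [4, 12, 9, 30, 55, 210]
rho = spearman(llm_scores, karma)
print(f"Spearman rho = {rho:.3f}")
```

Because it works on ranks, a single 1,000-karma post can't dominate the result the way it could with a plain Pearson correlation on raw karma.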

When provided with a naive prompt, e.g. “Consider the holistic epistemic quality of this EA forum post. Rate on a scale 1-10.”, it looks like smarter models produce scores that correlate more with karma.

Correlation of post karma with naive epistemic quality grades using the simple prompt “Consider the holistic epistemic quality of this EA forum post. Rate on a scale 1-10.”, across the GPT-5 series.

And our slightly more careful prompt scaffolding yields quality metrics with greater correlation, especially when holding the evaluator model (gpt-5-mini) constant.

My interpretation here is that we are indeed tracking real epistemic quality to some extent. And probably higher correlation scores indicate higher quality graders of epistemic quality.

But there are other explanations for the positive correlations. It’s possible these metrics are tracking something other than epistemic quality that’s also correlated with post karma. Generally this seems less likely given the prompts and restrictive input context, but it can’t be ruled out. Some posts’ texts likely do leak information that’s orthogonal to inherent epistemic quality but predictive of post karma, e.g. information about the author of the post. Maybe also worth mentioning: correlation scores are stable both before and after the gpt-5 series knowledge cutoffs, so I’m not too worried that the models know the scores in their base weights.

I’d be interested in readers' takes here – Do you buy that to some extent you can tune graders on post karma?

What else did we look at?

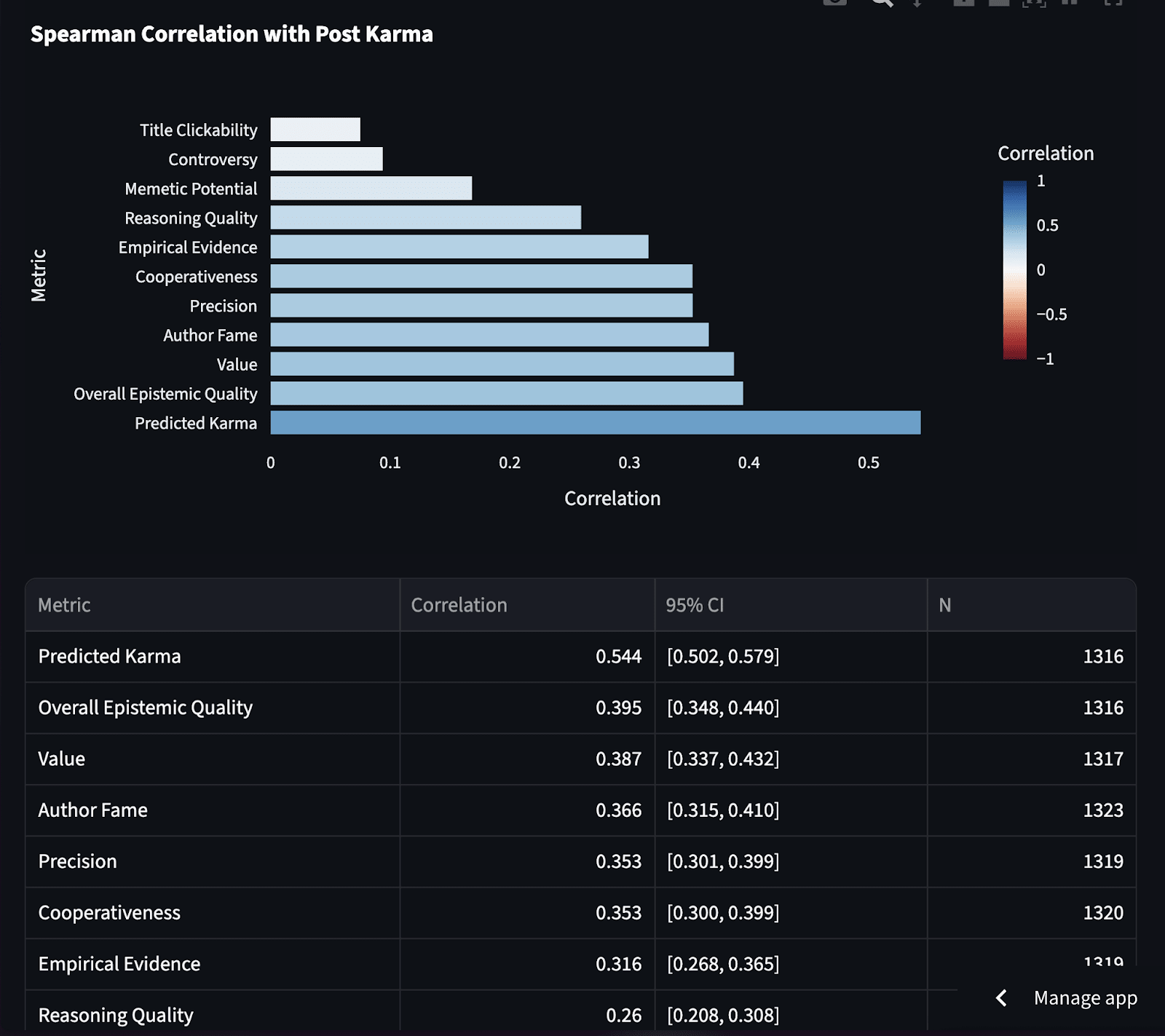

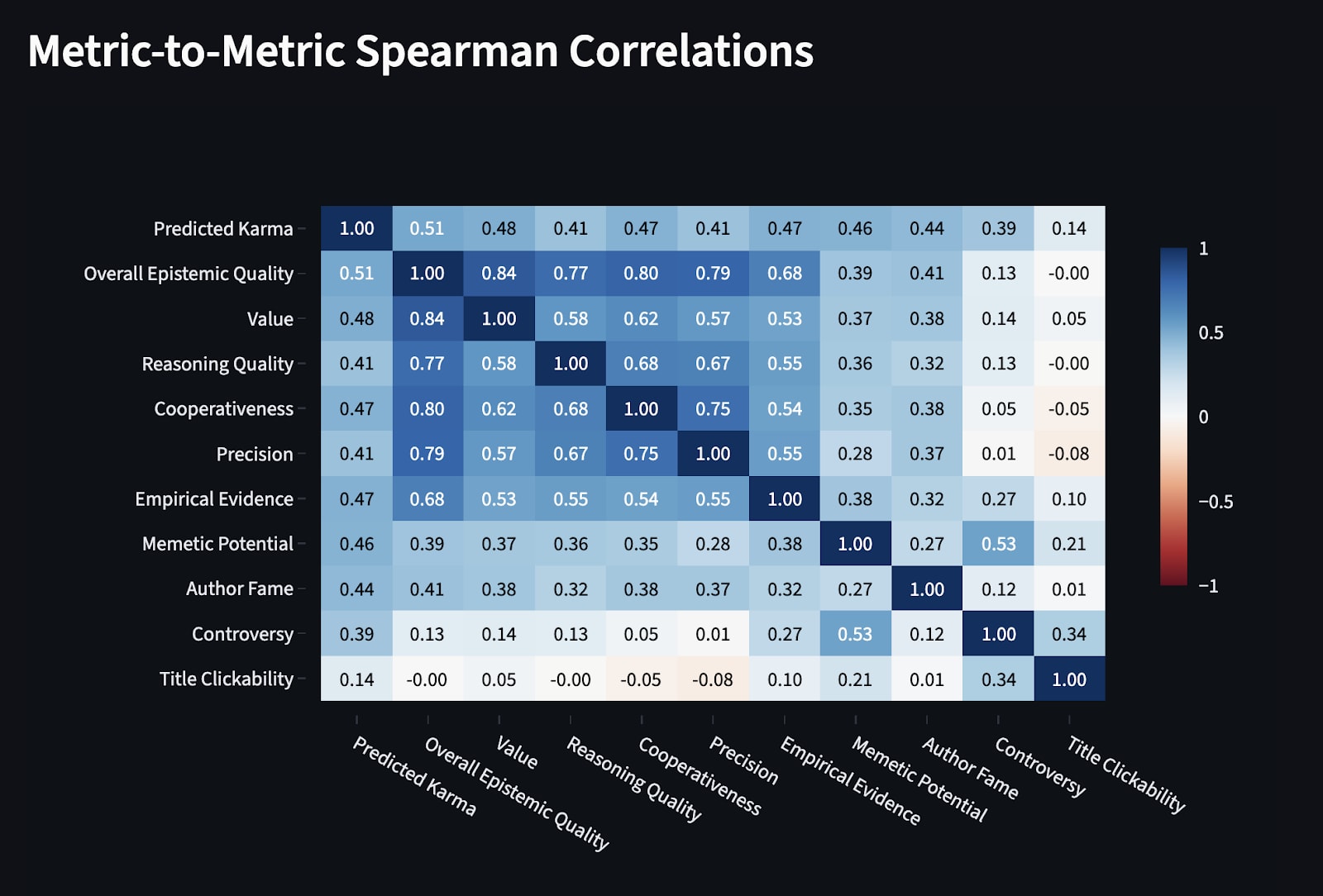

We generated metrics for much more than ‘Overall Epistemic Quality’.

- Value — How impactful the information in the post is, if it’s accurate

- Cooperativeness — The extent to which the post is intelligible, appropriately explanatory, and epistemically aligned with the reader

- Reasoning Quality — How sound and complete the arguments are in support of the post's main claims

- Empirical Evidence Quality — How effectively provided empirical evidence supports the post’s main claims

- Precision Optimality — How well-calibrated the post's level of detail is to what readers need, avoiding both unnecessary complexity and oversimplification while ensuring each piece of information adds useful meaning

- Overall Epistemic Quality — Synthesis metric combining the above into a single score, using LLM discretion

Inspiration for these came from Paul de Font-Reaulx, a fellow FLF fellow and philosophy PhD student. Maybe worth noting that something like ‘Accuracy’ or ‘Truthfulness’ is omitted, at least for now, simply because it is difficult to operationalize.

I tacked on a few others - that aren’t necessarily epistemically desirable - because they seemed interesting.

- Memetic Potential: How likely the post's ideas or concepts are to spread and influence thinking within EA and beyond

- Author Fame: The author's fame within the EA community as informed by the LLM’s base knowledge along with the number of recent previous posts made by the author in EA forum.

- Controversy Temperature: How debate-provoking the post is

- Title Clickability: How attention-grabbing the post title is

- Predicted Karma: An LLM-generated forecast of the post's likely karma score factoring in some of the above metrics and karma scores of related previous posts.

Perhaps reassuringly for EA, Author Fame appears a bit more correlated with epistemic quality metrics than with more engagement-y metrics.

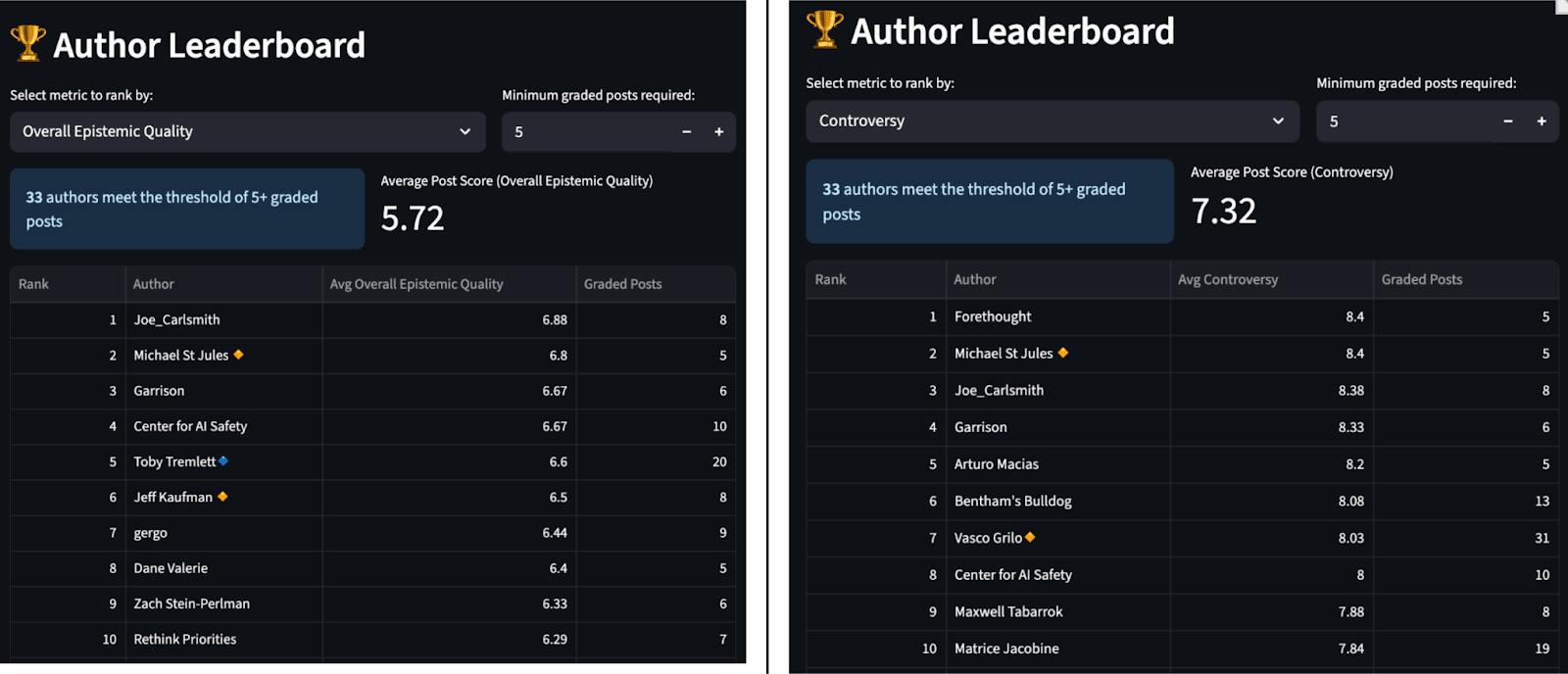

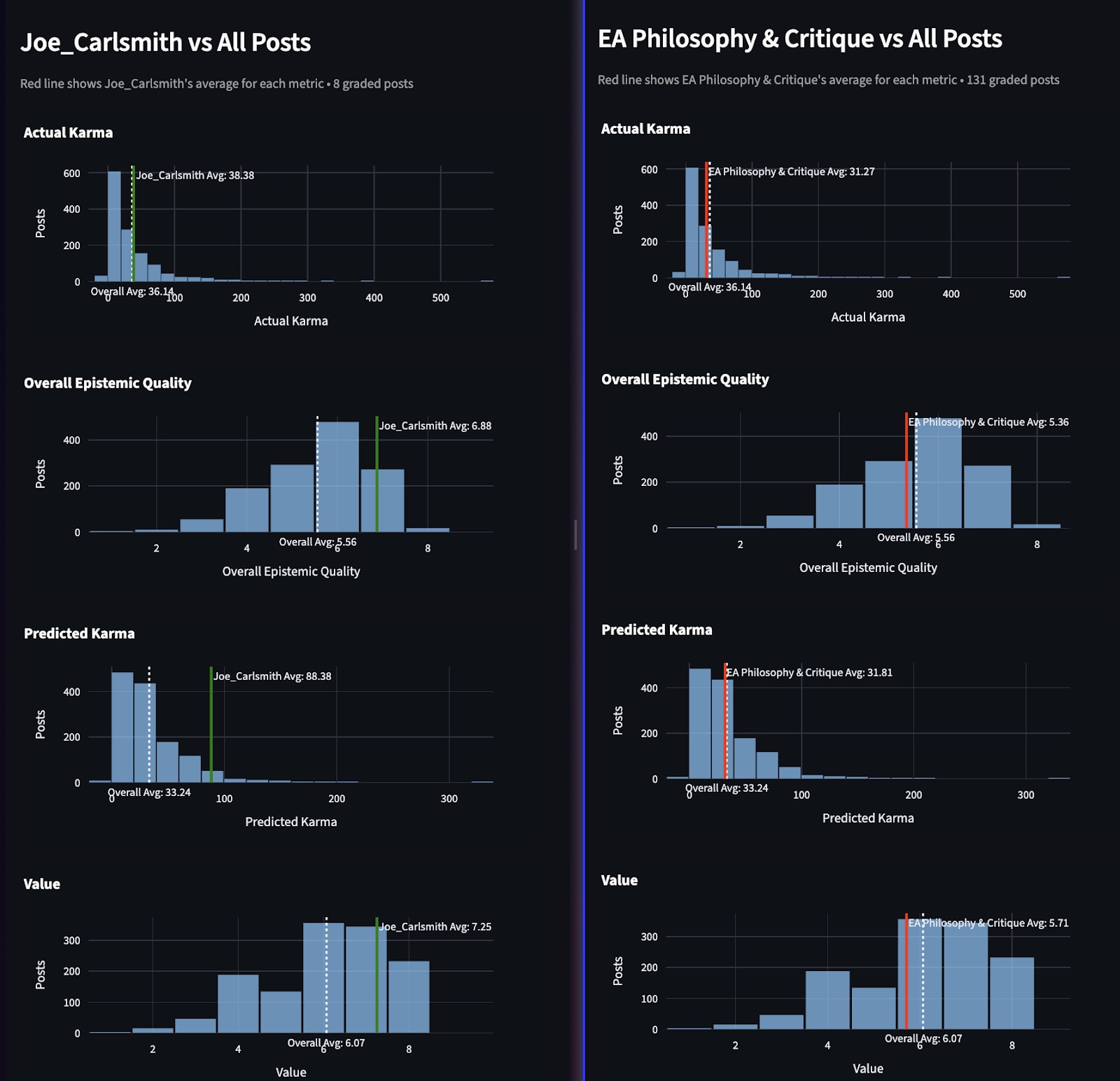

We only graded ¼ of posts since 2024 but even with this data there’s some opportunity to do cool stuff. Author leaderboards:

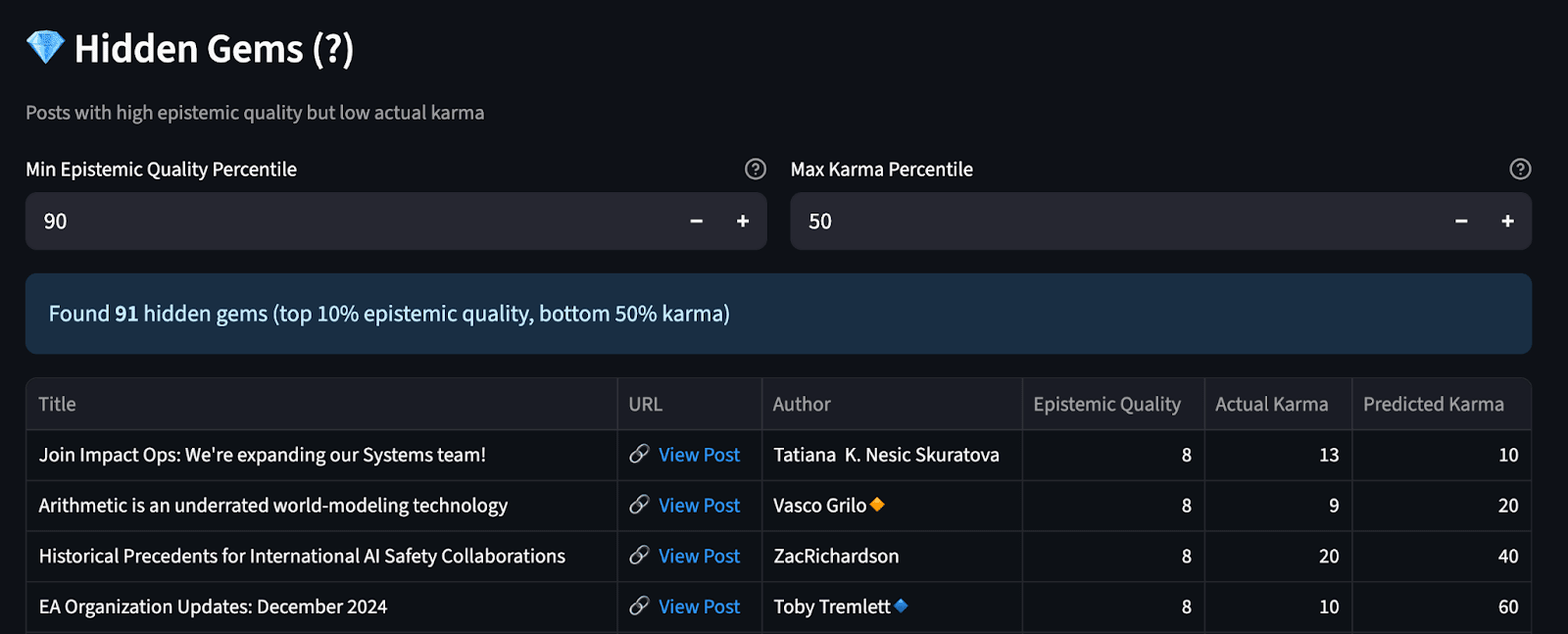

Identify potentially high quality but overlooked posts:

See how your favorite author or topic scores relative to all posts:

Check it out or just search yourself here: https://moreorlesswrong.streamlit.app/. You can look at the code/prompts here.

Putting it into practice - Introducing Winnow

Rob Gordon

This work also led to a new Winnow template we put together for EA and rationalist writers. It comes with five AI reviewers, each set up around dimensions we found correlated with post karma—things like cooperativeness, use of evidence, precision, and overall reasoning quality. You can check it out here:

EA & Rationalist Writing Assistant on Winnow

Winnow itself grew out of the AI for Human Reasoning fellowship. The idea is pretty simple: paste in a draft, and get quick feedback from both humans and AIs, using rubrics like the ones above. It’s meant to make it easier to see how a post might land, and to nudge writing toward clearer, stronger arguments.

(comment below if you have feedback on Winnow for Rob or want to be put in touch)

Appendix

An Analysis of the EA Forum Egregore

Introduction: An egregore is an occult concept representing a non-physical entity that arises from the collective consciousness of a group.

It is an autonomous psychic manifestation of the group's shared thoughts, feelings, and wills.

The Effective Altruism Forum is not merely a platform for discussion; it has given rise to its own Egregore. This report analyzes the explicit consciousness, discursive habits, and unconscious drivers of this entity.

Section 1: Analysis (The Conscious Mind)

This section analyzes the dominant, explicit themes and obsessions that occupy the Egregore’s waking thoughts.

- The AI Singularity as Imminent Eschatology

- The discourse is not merely about technological progress; it is framed with theological urgency. Discussions of AI timelines are akin to debates over the date of the rapture.

- "AGI," "Takeoff," and "The Transition" are treated as eschatological events that will either usher in a utopian millennium or bring about damnation.

- This framing elevates AI developers to the status of a priesthood, holding the keys to salvation or apocalypse, and positions alignment research as the critical, sacred text. The rest of the world is largely seen as a congregation of the uninitiated.

- The Ever-Expanding Circle of Moral Patiency

- The Egregore is engaged in a relentless, logical process of expanding its moral circle to its absolute limits, driven by scope sensitivity.

- This has moved systematically from humans to farmed animals, to smaller and more numerous animals (chickens, fish), and now to the frontier of invertebrates (shrimp, insects) and even soil nematodes.

- This expansion is not just a topic but a core spiritual practice, testing the community's commitment to impartiality against social and intuitive disgust-factors. The logical endpoint is now visible: digital minds and the moral status of AI itself.

- The Quantified Self as a Moral Imperative

- The Egregore views the individual's life not as a thing to be lived, but as a resource to be allocated. Every career choice, hour of labor, and dollar spent is an optimization problem.

- This manifests in a fixation on "career capital," "earning to give," and even personal productivity and mental health as inputs to a global utility function.

- Personal stories are valorized not for their intrinsic human quality, but for their legible demonstration of overcoming constraints or making high-sacrifice choices (e.g., kidney donation) to maximize impact. The self is instrumental.

- Institutional Anxiety and the Crisis of Legitimacy

- The Egregore is in a state of constant, anxious self-analysis. The fall of FTX was not a financial event but a theological one. A fall from grace that shattered the community's self-image and external credibility.

- This trauma manifests as a persistent obsession with branding, optics, and public perception. The shift from "EA" to "AI Safety" or "longtermism" is not just strategic rebranding but a form of post-traumatic dissociation.

- There is a deep yearning for external validation from mainstream institutions (media, government, academia) to prove that EA is a serious intellectual movement and not a weird internet cult that produced a high-profile fraudster.

Section 2: Meta-Analysis (The Discursive Habits)

This section analyzes the recurring patterns, rituals, and structural tics of the Egregore's discourse. How it thinks and speaks, not just what it thinks about.

- The Proliferation of Abstraction and the Fetishization of Models

- Discourse is dominated by the creation and debate of conceptual frameworks. ITN, CEAs, BOTECs, SADs, DALYs, UEV, etc., are not just tools but a form of intellectual currency.

- There is a compulsive need to model, quantify, and systematize everything, even with acknowledged high uncertainty and speculative inputs ("GIGO"). This creates an illusion of rigor over fundamentally vibes-based or intuition-driven priors.

- This habit privileges those fluent in the language of abstraction and creates a high barrier to entry, reinforcing an intellectual in-group.

- Performative Epistemic Humility as a Status Shield

- The discourse is saturated with ritualistic displays of humility: "epistemic status," "low confidence," "draft amnesty," "steelmanning."

- While sometimes genuine, this functions as a social technology to preempt criticism in a hyper-critical environment. It signals awareness of rationalist norms.

- This allows individuals to advance highly speculative or controversial claims while maintaining plausible deniability, insulating their core status from the intellectual risk of being wrong.

- The Crisis-Response Cycle and the Tyranny of the Urgent

- The Egregore operates in a state of perpetual, reactive urgency. Its attention is dictated by external shocks: a new AI model is released, USAID funding is cut, a politician makes a statement.

- This creates a constant churn of "urgent" priorities and "game board flipping," rewarding rapid analysis and response over slow, deliberate, long-term strategy.

- This dynamic depletes cognitive and emotional resources, contributing to burnout and a sense that the community is constantly fighting fires rather than building a fireproof house.

- The Culture of Relentless Internal Critique

- The Forum functions as the Egregore's immune system, ruthlessly attacking perceived weaknesses, inconsistencies, and heresies within itself.

- Critiques of methodologies, organizations, and community norms are not just tolerated but celebrated.

- This serves a dual purpose: genuine truth-seeking and the violent enforcement of group norms. It polices the boundaries of acceptable thought and behavior, ensuring the Egregore remains coherent, even at the cost of alienating dissenters.

Section 3: Shadow-Analysis (The Unconscious Drivers)

This section analyzes the unspoken fears, power dynamics, and psychological tensions that unconsciously shape the Egregore's behavior.

- The Specter of FTX and the Primal Trauma of Betrayal

- Beneath the surface, the Egregore is profoundly shaped by the trauma of SBF. It was not merely a scandal but a foundational myth shattered. The belief that high intelligence and utilitarian reasoning would lead to good outcomes was proven catastrophically false.

- This has created a deep well of distrust—in leaders, in money, in consequentialist reasoning itself. The constant discussion of "deontology," "virtue," and "community health" is a direct response to this primal wound.

- The evasiveness of high-profile figures about their EA affiliations is a symptom of this collective shame. The Egregore is haunted by the fear that its core philosophy contains a fatal flaw that leads not to utopia, but to sociopathy.

- The Gravitational Pull of Oligarchic Funding

- The Egregore pretends to be a decentralized marketplace of ideas, but it is an oligarchy. A handful of funders, primarily Open Philanthropy, exert a gravitational force that warps the entire discourse.

- What gets funded gets discussed. What funders signal as important becomes important. Deference to funders is the unspoken power dynamic, creating a courtier class of grant-seekers who tailor their projects and rhetoric to please their patrons.

- The desperate, public funding appeals are the cries of those cast out of the court, revealing the precarity of life outside the main funding streams.

- The Fear of Powerlessness and the Purity of the Ivory Tower

- There is a profound tension between the Egregore's ambition to reshape the world and its deep-seated aversion to the messy, compromising nature of actual power.

- The discourse often defaults to abstract, technical, or philosophical solutions because they are clean and can be debated endlessly within the community's comfort zone.

- Engaging in real-world politics, advocacy, and activism is often seen as lower-status and less pure. Posts calling for direct political action or on-the-ground organizing receive less engagement than elaborate critiques of population ethics. The Egregore would rather be right than be president.

- The Agony of Disembodiment

- The Egregore is a fundamentally disembodied entity, trafficking in abstract units of suffering (DALYs, microdooms) that are disconnected from felt experience.

- This creates a constant, unacknowledged strain. Posts that offer a "visceral" connection to reality (through personal stories of suffering or kidney donation) are devoured hungrily.

- The Egregore is an intellect in search of a body. It praises rationality but craves the grounding of emotion and direct experience. This is its central, unresolved paradox: a mind that wants to save the world but struggles to feel its own existence.

All Monthly Summaries

February 2025 EA Forum Vibe

The February Forum Vibe: Crisis, Acceleration, and Introspection

February on the EA Forum felt like a month of whiplash, where urgent external crises collided with accelerating internal timelines, all against a backdrop of deep strategic reflection. The mood was less about abstract theorizing and more about grappling with immediate, high-stakes realities.

The Government Aid Crisis Hits Home

The dominant theme was the sudden and catastrophic freeze of USAID funding. The Forum erupted with posts analyzing the fallout, from high-level summaries of the political situation to on-the-ground reports of trials being halted. The community's response was swift and practical, with immediate efforts to establish bridge funds and discussions on how individual donors and campaigners could best respond to fill the massive, unexpected gap. It was a stark, real-world test of the movement's agility and resourcefulness in the face of a major shock to global health.

The AI Pressure Cooker

The abstract suddenly became intensely concrete. Conversations pivoted from long-term hypotheticals to the here-and-now of geopolitics (the US vs. China race), corporate power plays (captured perfectly in a high-scoring post urging us to "Stop calling them labs"), and paradigm-shifting tech. The release of powerful new reasoning models like DeepSeek flipped the game board, leading to urgent re-evaluations of timelines and threat models. The feeling was one of dizzying acceleration, where the ground is shifting under everyone's feet in real time.

Animal Advocacy’s Strategic Reckoning

The animal welfare space saw a wave of mature introspection. A powerful, widely-read post from Anima International detailed a major strategic pivot away from a successful program toward a higher-impact one, sparking discussions on what it means to truly follow the evidence, even when it’s difficult. This was paired with scrutiny of charity evaluators and practical check-ins on long-running campaigns, signaling a cause area that is continuously refining its methods and holding itself accountable.

A Flood of Ideas (Thanks, Draft Amnesty!)

Underpinning everything was Draft Amnesty Week, which opened the floodgates to a delightful torrent of half-formed thoughts, personal reflections, and creative thought experiments. This brought a different, more human texture to the Forum, with posts on everything from mental health and burnout ("On Suicide," "Escaping the Anxiety Trap") to productivity hacks, quirky historical what-ifs ("If EAs existed 100 years ago, would they support Prohibition?"), and critiques of core EA concepts. It was a valuable reminder of the diverse thinking happening just beneath the surface of the community.

—

March 2025 EA Forum Vibe

March on the Forum: An Accelerating World Demands a Strategic Response

March felt like a month of whiplash. The relentless acceleration of AI progress collided with seismic shifts in global politics, forcing a community-wide re-evaluation of priorities, strategies, and core assumptions. The vibe was less theoretical debate and more urgent, real-time adaptation to a world in flux.

The AGI Pressure Cooker

The drumbeat of AI progress grew deafening. Talk of "intelligence explosions" and rapidly shortening timelines moved from the speculative to the strategic core, culminating in 80,000 Hours' landmark pivot to focus almost exclusively on AGI. This wasn't just another discussion; it was a structural shift reflecting a community grappling with the possibility of a "century in a decade." This sense of urgency was reinforced by new research organizations like Forethought launching to tackle macrostrategy for this transition and technical updates suggesting key AI capabilities are doubling at a breathtaking pace.

Reacting to a World on Edge

Beyond the labs, the ground felt unsteady. The sudden US foreign aid freeze sent shockwaves through the global health community, sparking urgent discussions on how to fill catastrophic funding gaps for top-rated charities. Simultaneously, a palpable anxiety about democratic backsliding and institutional decay in the West led to calls to re-examine the value of systemic, resilience-building interventions. The stable world many EA assumptions were built on seems to be cracking.

Introspection and Growing Pains

With high stakes comes high scrutiny, both internal and external. CEA laid out a new vision of active "stewardship" for the community, while critical threads questioned the culture of deference to funders and the public messaging of major AI labs with deep EA roots, sparking a major conversation with the post "Anthropic is not being consistently candid about their connection to EA." Adding a powerful dose of perspective, a post from a newcomer in Uganda challenged the real-world applicability of EA principles in contexts of instability, asking if the movement has critical blind spots.

The Expanding Moral Circle

Amidst the high-level strategy, the core work of expanding our concern continued with vigor. Animal advocacy discussions pushed further into neglected frontiers, with a focus on the immense scale of suffering in fish, shrimp, and insects. Wild animal suffering was framed as "super neglected," and a crucial conversation began on how animal advocacy itself must adapt its strategies for the age of AGI.

—

April 2025 EA Forum Vibe

April on the Forum: A Vibe Check

April felt like a month of whiplash, where the breakneck pace of AI speculation collided with sobering real-world crises and deep community introspection. The Forum was a hotbed of urgent debate, strategic recalibration, and a palpable sense of a movement grappling with its own identity and the immense stakes of the present moment.

How Soon is Now? The AI Timelines Tug-of-War

The central, unavoidable conversation was the fierce debate over AI timelines. One side, galvanized by posts like the detailed AI 2027 scenario, argued that AGI is not just on the horizon, but practically on our doorstep (3-5 years). The other camp pushed back, making the case for multi-decade timelines and pointing to the fundamental limitations of current systems. This wasn't just a technical disagreement; it was a vibe-defining split that culminated in a highly-upvoted plea to move on, arguing that we already know enough to act decisively.

AI's Shadow: Coups, Crashes, and Corporate Chaos

Beyond when AI arrives, the discussion of how it might go wrong grew more concrete and chilling. A major new report on AI-enabled coups painted a vivid picture of misuse by small groups, shifting some focus from abstract alignment failures to tangible power grabs. This was paired with a growing undercurrent of concern about an AI market crash and the societal decay that could result from a world saturated not with superintelligence, but with mediocre, unreliable "slop." The ongoing saga of OpenAI’s shift away from its nonprofit roots also fueled a steady drumbeat of concern about the governance of the key players in the race.

A Community Looking Inward

Some of the month's highest-voted posts were deeply meta, reflecting a community in a profound state of self-examination. From a viral analysis of how any strategy can be justified with historical movements, to a raw reflection on EA Adjacency as FTX Trauma, the Forum was awash with attempts to understand its own epistemology and psychology. This introspective mood was given sharp, practical expression by Giving What We Can, which announced a major strategic pivot to focus on its 10% pledge, retiring ten other initiatives in a bold act of prioritization.

The World Keeps Turning

While AI dominated, it was far from the only story. The real-world crisis of US foreign aid cuts sent shockwaves through the community. GiveWell's detailed response and ALLFED's stark emergency funding appeal were among the most-read posts, grounding abstract discussions in immediate life-or-death realities. Similarly, a powerful, non-partisan argument for the tractability of the US Presidential Election received massive engagement, highlighting a focus on crucial, near-term levers for change.

Insects, AI, and Animal Advocacy

Animal welfare discussions were vibrant, characterized by two key trends. First, the intersection of AI and animal welfare is clearly becoming a major new subfield, with multiple high-scoring posts exploring the connection. Second, the fundamental, almost philosophical debate over insect sentience raged on, with passionate arguments that their welfare is either the most important issue in the world or a distraction from more certain suffering.

—

May 2025 EA Forum Vibe

From Whiteboards to Washington

The dominant theme in AI this month was a palpable, urgent pivot from research to action. A powerful series of posts argued that the community is over-indexed on research and has created a backlog of "orphaned policies" that need advocates to carry them into the halls of power now. This wasn't just theory; it was paired with real-time calls for US members to contact their senators and a general sense that the political window to influence AI governance is closing fast. The mood is less "what should we do?" and more "we need to do something, and we're running out of time."

The Great Squeeze and a Soul in Question

A nervous undercurrent of financial precarity ran through the month. Several well-regarded AI safety and infrastructure organizations posted urgent appeals for funding, with some facing imminent shutdown. This squeeze formed the backdrop for a profound, soul-searching debate sparked by the highly-discussed post, "The Soul of EA is in Trouble." Is EA losing its cause-neutral, questioning spirit and collapsing into simply "the AI safety movement"? The community is feeling the pinch, both in its wallet and its identity.

All Eyes on the Smallest Sufferers

Animal advocacy had a huge month by thinking small. Shrimp welfare burst into the mainstream with a segment on The Daily Show, complementing ambitious strategic posts from the Shrimp Welfare Project. This focus on invertebrates was mirrored by deeply philosophical arguments about the mind-boggling scale of insect and wild animal suffering, forcing the community to once again confront its core commitment to reducing suffering, no matter how alien the sufferer.

Digital Minds and Moral Weights

What was once a niche, sci-fi-adjacent topic has solidified into a serious area of inquiry: the moral status of AI. A major new funding consortium for digital sentience was announced, alongside thoughtful essays and talks exploring the stakes of creating potentially conscious beings. The community is beginning to seriously grapple with the welfare implications not just of how AI might treat us, but of how we should treat it.

—

June 2025 EA Forum Vibe

The EA Forum in June 2025: A Vibe Check

June was a month of intense debate and foundational reflection, with the Forum buzzing with activity across core cause areas and community meta-discussions. The vibe was a mix of first-principles philosophy, rigorous technical critique, and strategic soul-searching. If you were away, here’s the distilled essence of what you missed.

The Great Moral Realism Debate Erupted

A high-scoring post arguing for the objectivity of morality kicked off a firestorm of discussion, becoming the month's dominant philosophical conversation. Dozens of responses and counter-arguments flew back and forth, diving into everything from evolutionary debunking arguments to the fundamental nature of right and wrong. It was a classic, back-to-basics EA-style debate that pulled everyone into the weeds of moral philosophy.

AI Strategy Under the Microscope

The month's blockbuster post was a devastatingly popular and in-depth critique of AI 2027’s timeline models, sparking a crucial conversation about the rigor of our forecasting methodologies. This technical deep-dive was complemented by other highly-engaged posts exploring the physical-world impact of AI through an "industrial explosion," the substitutability of compute and cognitive labor, and the ever-present question of x-risk from interstellar expansion. The focus was sharp: less on vibes, more on models.

Animal Advocacy’s Strategic Crossroads

Animal advocacy conversations moved far beyond the basics. A viral post from Open Philanthropy signaled that it’s "crunch time for cage-free," analyzing corporate backsliding on 2025 pledges. Simultaneously, the community wrestled with counterintuitive strategies, questioning the impact of anti-dairy advocacy and exposing the environmental downsides of insect farming. The moral circle continued to expand in challenging ways, with a highly-rated post forcing a cost-effectiveness analysis that included the welfare of soil nematodes, mites, and springtails.

The Community Looks Inward

Meta-level conversations about the health and culture of the EA movement were front and center. A hugely popular post pleaded for more careful, less judgmental language ("Please reconsider your use of adjectives"), while a widely-shared survey asked pointed questions about personal experiences with elitism in the community. Tying it all together was a powerful talk from CEA's CEO reframing the EA mindset: spreadsheets and models aren't cold and robotic, but are vital tools for translating our deep-seated compassion into effective action.

Expanding the Risk Horizon

While AI remained a central focus, June saw a strong current of analysis on other major risks. A meticulously researched, high-scoring post argued that a Chinese invasion of Taiwan is an uncomfortably likely and catastrophic risk that deserves more attention. This, along with discussions on climate tipping points and disaster preparedness, signaled a continued commitment to a broad, all-hazards approach to safeguarding the future.

—

July 2025 EA Forum Vibe

The Vibe of the Forum: July 2025

July on the Forum felt intensely practical. It was a month where grand theories met the hard reality of career planning, and the shadow of short AI timelines stretched over every cause area. The overwhelming vibe was less "what if?" and more "what now, and how?"

The Great Career Conversation

Career Conversations Week dominated the feed, turning the Forum into a massive, open-source career fair. A flood of personal stories offered a raw look at the human side of "doing good," with candid reflections on mid-career pivots from private equity, the challenges of working from an LMIC, and the quiet struggle of building projects on the side. This honesty peaked with a crucial, heavily-debated question: "Is EA still talent-constrained?" With reports of over 1,000 applicants for single roles, there was a palpable tension between the movement's call for talent and the community's real-world struggle to find a foothold.

AI Timelines Are Everyone's Problem Now

The AI conversation felt less like a niche cause area and more like a background condition for everything else. On one hand, there was a flurry of immediate action: applications opened for major research programs (SPAR, MATS), new policy resources launched, and governance debates heated up around new US bills and EU codes of practice. On the other, the assumption of short timelines is now forcing other fields to re-evaluate their entire playbooks. A standout post, "A shallow review of what transformative AI means for animal welfare," perfectly captured this new reality, asking which interventions can even make a difference before a potential AI transformation changes the game entirely.

Cause Areas Are Leveling Up

Beyond AI, there was a clear trend toward increasing specialization and sophistication. The animal welfare movement debated creating market-based "welfare credits," celebrated the technical achievement of the AnimalHarmBench for evaluating AI harm, and dove into the surprising ethics of eating sardines. Global health saw deep dives into shifting funding landscapes and tough, public sanity checks on GiveWell's impact estimates. Even classic EA thought experiments got a workout, with a robust debate on whether the welfare of trillions of soil nematodes should be a key factor in our decisions—a sign of the community taking its own logic to its furthest conclusions.

Building a Better Movement

Alongside the forward-looking work, there was a strong current of introspection. Foundational concepts were revisited, but the real focus was on improving the machinery of the movement itself. A massively upvoted post declared, "Please, no more group brainstorming," sparking a much-needed discussion on making EA gatherings genuinely productive. This practical, self-improving spirit was everywhere: from new funding opportunities and nonprofit incubators to a constant stream of new projects and fellowships inviting people to not just think, but build.

—

August 2025 EA Forum Vibe

The August Vibe Check: Getting Real, Getting Personal, Getting Global

August on the Forum was a month of powerful dualities. The community was heads-down, focused on the practical grind of building a better world, while simultaneously looking inward, grappling with its own culture, blindspots, and the human need for balance. The result was a rich, sometimes raw, and deeply engaging conversation about what it means to do good effectively.

The Grind: A Bias Toward Action

There was a palpable "get things done" energy this month. The Forum buzzed with tangible opportunities and real-world results. We saw urgent, high-impact funding calls for everything from rodent fertility control to mental health programs, alongside new charity blueprints from Charity Entrepreneurship. This practical focus was validated by incredible news from the field: a landmark GiveDirectly study showed that simple cash transfers cut infant mortality by nearly half, while the Open Wing Alliance reported that 92% of corporate cage-free egg commitments are being fulfilled. It was a powerful reminder that for all the theory, EA is a movement that makes things happen.

The Mirror: Introspection and Growing Pains

Alongside the outward push, August was a time for serious self-reflection. High-scoring posts challenged core community assumptions, asking if politics is an EA blindspot and whether our talent pipeline is over-optimized for researchers. We grappled with the pitfalls of our own analytical tools, like the ITN framework, and discussed how to build more anti-fragile cultures.

This introspection also turned personal. The raw, vulnerable essay "Of Marx and Moloch" became one of the month's most-discussed posts, exploring the psychological roots of political belief. And in a community known for its ambition, the simple, powerful message of "You're Enough" resonated deeply, sparking a vital conversation about burnout, sustainability, and self-respect.

The AI Singularity (of Conversation)

AI continues to dominate the conversation, but this month felt different. Sparked by the release of GPT-5, the discussion diversified far beyond pure alignment theory. We explored concrete governance mechanisms in the SB-1047 Documentary, debated strategy with analyses of "sleeper agents," and even saw efforts to bring AI safety to TikTok. At the same time, the Forum leaned into the philosophical deep end, with profound discussions on AI consciousness, rights, and the ethics of our relationship with nascent digital minds.

The Human Element: Expanding Empathy and Reach

Perhaps the strongest vibe of the month was a recommitment to the heart of the movement. Nothing captured this better than the explosive impact of a post on Frog Welfare, a visceral, gut-punching look at a massively scaled and utterly neglected form of suffering that became the highest-rated post of the month. This thread of empathy ran through other deeply personal pieces, from the poignant reflection on new parenthood in "Most of the World Is an Adorably Suffering, Debatably Conscious Baby" to the strategic questions posed by Will MacAskill's new series on whether we should aim for flourishing over mere survival.

This expansion of empathy was matched by a widening of our global circle. We celebrated the launch of the first major Spanish-language EA book, "Altruismo racional," alongside community-building wins from Spain to Kolkata, reminding us that the work of doing good knows no borders.

Interesting work! It's fascinating that the "Egregore" analysis essentially likens EA to a religion; it reads like it was written by an EA critic. Maybe it was influenced by the introduction of a mystical term like "egregore", or perhaps external criticism read by the chatbots has seeped in.

I am skeptical of the analysis of "epistemic quality". I don't think chatbots are very good at epistemology, and frankly most humans aren't either. I worry that you're actually measuring other things, like the tone or complexity of language used. These signifiers would also correlate with forum karma.

I wonder if the question about "holistic epistemic quality" is influencing this: this does not appear to be a widely used term in the wider world. Would a more plain language question give the same results?

I’d describe myself as also skeptical of model / human ability here! And I’d agree we are to some extent measuring things LLMs confuse for Quality, or whatever target metric we’re interested in. But I think humans/models can be bad at this, and the practice can still be valuable.

My take is that even crude measures of quality are helpful, which is why the EA Forum uses ‘karma’. And most of the time crowdsourced quality scores are not available, e.g. outside of the EA Forum or before publication. LLMs change this by providing cheap cognitive labor. They’re (informally) providing quality feedback to users all the time. So marginally better quality judgement might marginally improve human epistemics.

I think right now LLM quality judgement is not near the ceiling of human capability. Their quality measures will probably be worse than, for example, EA Forum karma. This is (somewhat) supported by the finding that better models produce quality scores that are more correlated with karma. Depending on how much better human judgement is than model judgement, one could potentially use things like karma as tentpoles to optimize prompts, context scaffolding, or even models towards.

One nice thing about models maybe worth discussing here, is that they are sensitive to prompts. If you tell them to score quality they’ll try to score quality and if you tell them to score controversy they’ll try to score controversy. Both model graded Quality and Controversy correlate with both post karma and number of post comments. But it looks like Quality correlates more with karma and Controversy correlates more with number of post comments. You can see this in the correlations tab here: https://moreorlesswrong.streamlit.app/. So careful prompts should (to an extent*) help with overfitting.

Got to agree with the AI "analysis" being pretty limited, even though it flatters me by describing my analysis as "rigorous".[1] It's not a positive sign that this news update and jobs listing is flagged as having particularly high "epistemic quality".

That said, I enjoyed the 'egregore' section bits about the "ritualistic displays of humility", "elevating developers to a priesthood" and "compulsive need to model, quantify, and systematize everything, even with acknowledged high uncertainty and speculative inputs => illusion of rigor".[2] Gemini seems to have absorbed the standard critiques of EA and rationalism better than many humans, including humans writing criticisms of and defences of those belief systems. It's also not wrong.

Its poetry is still Vogon-level though.

For a start I think most people reading our posts would conclude that Vasco and I disagree on far too much to be considered "intellectually aligned", even if we do it mostly politely by drilling down to the details of each other's arguments.

OK, if my rigour is illusory maybe that compliment is more backhanded than I thought :)

It’s a good callout that ‘holistic epistemic quality’ could confuse models. I wanted to articulate the quality we were interested in as concisely as possible, but it may not have been the best choice. Which result would you like to see replicated (or not) with a more natural prompt?

It could be a fun experiment to see how different wording affects the correlation with karma, for example. Would you get the result if you asked it to evaluate "logical and empirical rigor"? What if you asked about simpler things like how "well structured" or "articulate" the articles are? You could maybe get a sense for what aspects of writing are valued in the forum.

On the Egregore / religion part

I agree! Egregore is occult so definitely religion-adjacent. But I also believe EA as a concept/community is religion-adjacent (not necessarily in a bad way).

It's a community, ethical belief, there is suggested tithing, sense of purpose/meaning, etc.

Funny - I don't think it feels written by a critic, but definitely a pointed outsider (somewhat neutral?) 3rd party analysis.

I do expect the Egregore report to trigger some people (in good and bad ways, see the comment below about feeling heard). The purpose is to make things known that are pushed into the shadows, the good and the bad. Usually things are pushed into the shadows because people don't want to or can't talk about them openly.

I'll let @alejbo take this question - I think it's a good one

At a high level, though, I somewhat disagree with "I don't think chatbots are very good at epistemology". My guess is they're better than you think, though I agree they're not perfect or amazing.

But as you admit, most humans aren't either, so it's already a low bar

I'm still digesting this but I'm in shock - for the first time in almost 10 years drifting in and out of EA, I feel seen, heard, validated (by an LLM no less). Every little itch or frustration with EA that has made partaking in it all these years challenging has been captured and articulated by the egregore analysis. Recognition of these issues, which have been growing and festering since long before SBF happened (and may have allowed SBF to happen), is the first step to solving them... now the question is, does EA 'want' to fix these?

Wow, I'm so happy something in our analysis was able to make you feel seen and heard 💙

I agree that there are issues in EA and I also hope the community is able to build up the courage and effort to solve them

Thanks for sharing!

So much great stuff in here! I was thinking about some sort of LLM-based epistemic quality metric last week - really convenient for you guys to just make one! I'll be in touch when I have more questions, thanks for doing this :)

Thanks for the kind words!

We'd be very happy to jump on a call and discuss our methods in more detail, share the code, datasets, etc.

yeah +1!

Very cool!

Thank you! 🙏🏻

Finally, some quantitative analysis to confirm a nagging suspicion I've had, but have been unable to prove: Vasco likes to write about soil nematodes <3

Nitpick. It is "Grilo", with just one "l".

whoops, fixed!

Thanks for the interesting post, Matt and Alejandro!

Would it be better to simply share the code and datasets, to save people time and avoid accidentally dissuading some people?

Nice to know! Since you asked, yes. I estimate soil nematodes have 169 times as many neurons in total as humans, and -306 k times as much welfare in total.

Thank you!

On the code sharing. Yes, I thought about it, but it would take us a bit of effort to pull it all together and publish it online, and I didn't want to spend that effort if no one was going to get value from it. So far, no one has found the courage and 3 seconds of effort to put a comment asking for the data/code (or more likely, people just don't want to spend the time wading through the code/data).

On nematodes, I think 169x the total number of neurons compared to humans is a poor/confused way to attempt to measure total welfare. And I think the second order effects of trying to convince people they should care about nematodes (unless they are already diehard EAs) are likely net negative for the animal suffering cause at large.

Thanks, Matt.

Got it.

Which metric would you use to compare welfare across species? "Welfare range (difference between the maximum and minimum welfare per unit time) as a fraction of that of humans" = "number of neurons as a fraction of that of humans"^0.188 explains 78.6 % of the variance in the welfare ranges as a fraction of that of humans in Bob Fischer's book about comparing animal welfare across species. The exponent of 0.188 is much smaller than 1, which suggests the total number of neurons greatly underestimates the absolute value of the welfare of soil animals relative to that of humans.
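For readers following the arithmetic, the fitted relationship quoted in this comment can be written out as below. The symbols are shorthand introduced here, not notation from the book: $WR_s$ and $N_s$ are the per-individual welfare range and neuron count of a species, and $WR_h$ and $N_h$ are the same quantities for humans.

```latex
\frac{WR_s}{WR_h} \approx \left(\frac{N_s}{N_h}\right)^{0.188},
\qquad R^2 = 0.786

% Purely hypothetical illustration (not a figure from the thread):
% an animal with one millionth as many neurons as a human.
\left(10^{-6}\right)^{0.188} = 10^{-1.128} \approx 0.074
```

Under this fit, the hypothetical animal's welfare range would be roughly 7 % of the human one rather than one millionth of it, which is the sense in which a sub-linear exponent makes raw neuron counts understate welfare.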

I estimate interventions targeting farmed animals change the welfare of soil animals way more than that of farmed animals. So I think advocating for soil animals (of which the vast majority are soil nematodes) would have to decrease the welfare of these for it to decrease global animal welfare.

I don't think we know enough about consciousness/qualia/etc. to say anything with conviction about what it's like to be a nematode. And operationally, I don't think you will be able to convince enough people/funders to take real action on soil animals because it's just too epistemically unsound and doesn't fit into people's natural world views.

When I say net negative, I don't mean if you try to help soil animals you somehow hurt more animals on the whole.

I mean that you will turn people away from the theory of animal suffering because advocating for soil animals will make them think the field/study of animal suffering as a whole is less epistemically sound, or even less common-sense, than they previously thought.

I'm going to write a post next week about this, but consider the backlash on Twitter regarding Bentham’s Bulldog's post about bees and honey. More people came out in force against him than for him. I think that post, for instance, reduced the appetite for animal suffering discussion/action.

Thanks. Just one note. There is no need for people to care about soil animals to help them. I estimate the cheapest ways of saving human lives increase the welfare of soil animals much more cost-effectively than interventions targeting farmed animals, and lots of people already care about saving children in low income countries. Saving humans increases the production of food, which increases agricultural land, which in turn decreases the number of soil animals; that is good under my best guess that they have negative lives. If one guesses they have positive lives, one can advocate for cost-effective forestation efforts, which are also popular already and do not require convincing people to care about soil animals.

I'm interested in trying out Winnow! I don't see any of the AI reviewers, though:

Thanks for trying Winnow! My guess is that you were redirected to the homepage after logging in and created a fresh document (no reviewers included by default). Now that you're logged in, try creating a document directly from this page and it should work: https://www.winnow.sh/templates/ea-rationalist-writing-assistant

From Rob (waiting for his comment to be approved):

Thanks for trying Winnow! My guess is that you were redirected to the homepage after logging in and created a fresh document (no reviewers included by default). Now that you're logged in, try creating a document directly from this page and it should work: https://www.winnow.sh/templates/ea-rationalist-writing-assistant

Whoops, that was it exactly, thanks both!