Update 2023-06-15: Stanford AI Alignment has just wrapped up its first (IMO quite successful) academic year, but it has diverged significantly from some of the plans first laid out here. For updated context, contact me over DM or at gmukobi@cs.stanford.edu.

Crossposted on Less Wrong.

0. Preface

Summary: AI alignment needs many great people working on research, engineering, governance, social science, security, operations, and more. I think AI alignment-focused university groups are uniquely underexploited sources of that future talent. Here are my plans for running such a group at Stanford, broadly involving mass outreach to a wide range of students, efficient fit-testing and funneling to a core group of promising students, and robust pipelining of those students into a connected network of impactful opportunities.

Confidence: Moderate. This theory of change is heavily inspired by similar plans from Michael Chen (Effective Altruism at Georgia Tech) and Alexander Davies (Harvard AI Safety Team) as well as EA field-building learnings from Stanford Effective Altruism. While parts of this have been tested in those communities, parts are new or different. That said, I’m fairly confident this is at least a significant improvement over current local AI alignment field-building operations. The purpose of posting this is both to refine these plans and to share ideas that might be useful for groups with similar goals.

Terminology: I use the term “AI alignment” as an umbrella term throughout this to refer not only to technical AI alignment/existential AI safety, but also to AI governance, AI strategy, and other work that directly reduces existential risk from AI. The specific choice of this term (as opposed to e.g. “AI safety”) is to practically distinguish the long-term/catastrophic risk/alignment focus of the new group from the adjacent Stanford Center for AI Safety.

1. Introduction

1.1 Context

Stanford Effective Altruism has gradually been increasing its focus on AI alignment over the last year. It invited more AI-related guests to open Monday dinners, ran events like an ELK weekend and a Rob Miles binge party, organized one and a half alignment-focused retreats, and ran a local AGI Safety Fundamentals Technical Alignment reading group in the spring. From these activities, it saw some promising signs, like:

- Many people the organizers had never met before attended Monday dinners because of cold email list messages advertising an AI alignment speaker.

- Among the group of core EAs, many got more interested in AI alignment and asked for more activities like these.

However, SEA also found some potential issues:

- The organizers had never met many of those students before, suggesting EA outreach was failing to reach them (e.g. some people interested in AI alignment who attended Monday dinners had heard about the intro EA fellowship but decided not to apply).

- For core EAs who did get significantly interested in AI alignment, there was a big gap between “I have read and discussed AI enough to think this is the most pressing problem” and “I feel like I have the skills and opportunities to have an impact.”

- Some reported feeling at times like Stanford EA was just about AI alignment and that there wasn’t a space for other cause areas.

These initial activities showed a lot of promise for expanding and formalizing AI alignment opportunities at Stanford, but they also suggested trying a different approach.

1.2 SAIA: A New Organization

One day in spring 2022, the Stanford EA organizers decided to split off a sub-group solely dedicated to AI alignment field-building. The initial idea was simple: Run most existing AI-focused activities under this new AI-focused group in order to keep Stanford EA focused on EA and cause prioritization, and to reach out to students who could be good fits for AI alignment without the direct EA association. And thus, Stanford AI Alignment (SAIA, pronounced "sigh-uhh") was (unofficially) born.

But I wanted to go further: to build a group and scale up a community dramatically more effective at AI alignment field-building than Stanford EA. This post is a high-level sketch of plans for robustly scaling up outreach, support systems, and formal operations over the coming 2022-23 school year and beyond. Hopefully, this helps achieve the goals of building the AI alignment community at Stanford, getting Stanford students into highly impactful careers around AI, and ultimately making AGI go as well as possible.

It should be noted that much of this is heavily inspired by similar plans from Michael Chen (Effective Altruism at Georgia Tech) and Alexander Davies (Harvard AI Safety Team) who have been devising and locally testing ways to run AI alignment student groups. Credit for the parts that work goes to them!

1.3 Assumptions

Several assumptions inform these decisions, and different takes on these assumptions could lead to different plans:

- AI alignment is talent constrained, and getting more talented people working on various problems in the field will effectively improve the world.

- Universities have many talented students who would be good fits for AI alignment work in research, engineering, governance, social science, security, operations, and more.

- Of those many talented students, many aren’t working on AI alignment simply because they have yet to be exposed to it.

- EA groups are not the best way to expose students to AI alignment because working on AI alignment does not require an EA mindset and many students who could be good fits for AI alignment work are not good fits for EA (e.g. they might be turned off by certain aspects). That said, we still want to select students with decent levels of altruism, responsibility, and safety-focus to minimize the negative externalities of growing AI capabilities (more on this later).

- Many students who could be doing impactful AI alignment work are held back because they care but lack the skills necessary to do the work, they can get the skills but lack the confidence to start doing work, or they feel that they need a mentor in order to effectively learn. In all these cases, clear career guides, regular support, and simple accountability structures can significantly amplify their impact.

- 1-on-1 meetings between a developing student and an engaged community member are effective ways to guide and advance the student’s career.

- Peer accountability systems where many people work on similar things together and publicly agree to SMART goals are effective mechanisms for ensuring work gets done.

2. The Tree of Life

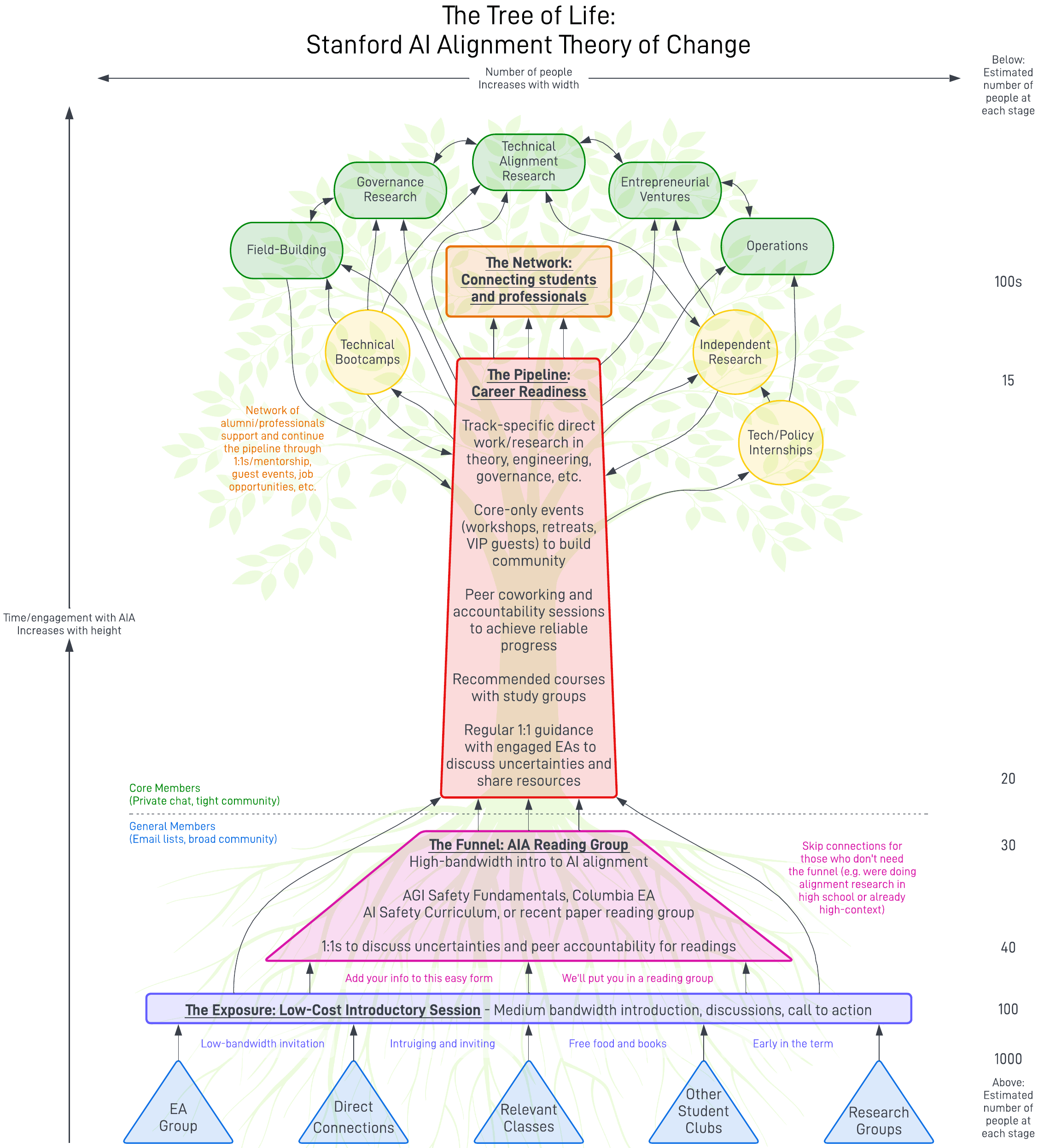

The image above is the theory of change diagram for Stanford AI Alignment. I explain each section in detail below, but in general, the plan consists of four phases: a Talent Pool that searches much more widely than EA outreach currently does to find promising students and expose them to AI alignment; a Funnel that efficiently selects the students who are interested in learning more about AI alignment and could be good fits for it; a Pipeline to robustly up-skill and deploy those students into impactful careers; and a Network of professional communities to connect the university and “real-world” AI alignment spheres.

2.1 Soil: The Talent Pool

For the first phase, we aim to draw from a very wide pool of potentially impactful students, so we’ll have to reach far beyond the EA student group community. While we will direct EAs who go through the intro fellowship and express interest in AI alignment to this group, we’ll also directly invite non-EA connections, ask professors if we can speak briefly at the beginning of their classes, send emails to school-wide email lists and especially to established student groups focused on AI/policy/entrepreneurship, and reach out to students involved in various forms of research.

Because we will mostly have low-bandwidth communication channels to each of these groups, the message we reach out to them with is something along the lines of: “Transformative Artificial Intelligence could come within our lifetimes and be tremendously good or bad for the world, but there’s a lot of evidence that indicates it will be bad by default. AI alignment is an exciting and growing field focused on the technical and societal problems of making it good, and the field greatly needs talented students like you studying engineering, policy, social science, entrepreneurship, communication, and more. If this sounds interesting, weird, or concerning to you, come to Stanford AI Alignment’s introductory session. We’ll have free food and books about alignment for all who attend! RSVP here now” (Note: this is placeholder language to communicate the general idea in this post—I will thoroughly workshop this invitation with help before using it). We want the invitation to be low-cost and enticing for students to attend this event. A key part of this will involve operations-style preparation to be able to send out these invitations very early in the term (especially at the start of each school year) and catch students before their schedules are already full with commitments.

The actual introductory session, which should also happen early in the term, seeks to rapidly expose these students to AI alignment ideas. With our audience captive with free food and books for an hour, we will do a few things:

- Present a medium-bandwidth introduction to some of the core ideas of AI alignment such as Rob Miles’ 20-minute Intro to AI Safety talk or the first 23 minutes of Vael Gates' Risks from Advanced AI talk.

- Lead group discussion through a few compelling prompts in the style of a reading group.

- Express the need for talented students across a wide range of skills (not just technical) and show some exciting recent work in the field.

- Issue a call to action to join an AI alignment fundamentals program by simply writing their name and email on a short online or paper sign-up form.

We now have a pool of students wanting to learn more about AI alignment! From here, we can send a follow-up message to ask for their availability at a few candidate times and their focus area preferences (e.g. technical vs governance), match them to reading groups, and send them down the Funnel.

2.2 Roots: The Funnel

The second phase’s role is to funnel down that pool of students by exposing them to high-bandwidth communication about AI alignment and testing their fit for understanding the ideas of and participating in the work in the field.

A reading group seems like the best way to facilitate this. Many student groups have run local reading groups of the AGI Safety Fundamentals (AGISF) Technical Alignment or AI Governance curricula, the Columbia EA AI Safety Discussion Group curriculum, or a custom AI alignment and policy research curriculum (e.g. we might want to design one focused on empirical AI alignment research aimed at empirical ML researchers). By default, we’ll put everyone through the AGISF Technical Alignment Curriculum, or the AGISF AI Governance curriculum for groups of students who think they would better fit governance work (e.g. public policy students).

Although we think it’s generally good for everyone to go through some kind of reading group (e.g. future field-builders who won’t be doing direct work still benefit from knowing the problems and nature of the field), we will also have skip-connections around the Funnel for certain exceptions. These special cases might include students who have already read a lot about AI alignment and were planning to work on it anyway (but might still need a community and support structure to help them get there), or in rarer cases, perhaps people who are really good fits for non-technical roles and already motivated by AI alignment but who don’t necessarily need an in-depth reading group.

Naturally, we expect a significant number of students to be selected out during this phase, primarily because they realize they don’t want to work in AI safety. This isn’t a bad thing: such a broad outreach strategy is bound to catch many false positives, and we don’t want to force those people into careers they aren’t good fits for. However, we do want to minimize promising students dropping out because they feel they don’t belong, are too busy, get bored, or forget to do the readings. To protect against that, we’ll use a mixture of careful matching (e.g. putting non-technical students together, putting higher-context students together), direct accountability measures (e.g. sending reminder messages to everyone 1-2 days before each meeting), peer accountability systems (e.g. “When you’re done with the readings, write 1 question next to your name in this spreadsheet that the rest of your cohort can see”), and direct incentives (e.g. free snacks/drinks and guest alignment experts at certain meetings).

2.3 Trunk: The Pipeline

All students who graduate from the reading group Funnel, are still interested, and seem promising to SAIA organizers are then promoted to Core Member status and move on to the third phase. In this Pipeline, we are no longer funneling down the numbers and testing students for fit—rather, we are aiming to retain a high percentage of these talented students as we efficiently up-skill and deploy them into impactful careers. The various AI-related activities at Stanford EA were aimed at different audiences and didn’t quite have a unifying telos, but here the end goal is clear: If you are a Core Member in the Pipeline, we are going to do everything we can to get you an impactful job in AI alignment.

For most students, this will generally involve up-skilling in a particular career track like theoretical research science, empirical research science, research engineering, governance, field-building, etc. We’re lucky to be in a time when people are publishing great career guides specific to AI alignment subfields, so if we could just have them work through those, that might get the job done.

Of course, the reality isn’t so simple. Many students need specific advice about their specific situations, a space to talk through their uncertainties, or just someone to act as a mentor and push their “start” button, so we’ll connect Core Members to regular (e.g. every week/2 weeks/month) 1-on-1 meetings with SAIA organizers or people in our networks who are engaged in AI alignment work. Additionally, we’ll run regular (i.e. weekly) coworking sessions for students to block out time to get things done while making regular accountability pledges to the group (likely with free food as an incentive). As a group at a university, we can use our resources to suggest our students register for specific useful classes (e.g. in ML/policy/economics) and form study groups around them. And to build a sense of community, we will adopt and expand Stanford EA’s AI alignment activities like workshops, retreats, and VIP guest events as invite-only SAIA events. One of these activities should feel like a consistent central commitment that people can prioritize as the core of their activities in the group—for SAIA, that will be the weekly coworking sessions, but other groups have had success running a core paper reading group.

Additionally, the hope of the Pipeline is not only to get students into impactful work but to also be a space for Core Members to do impactful work while they are still students. For example, we’ll encourage theorists to produce distillations and proposed research directions, engineers to carry out AI safety experiments and publish empirical results, governance students to conduct policy and strategy research, entrepreneurs to start new projects, and field-builders to get involved in helping SAIA.

2.4 Branches: The Network

Eventually, Core Members will move out of the Pipeline and into impactful roles beyond our student community (e.g. by graduating and working, participating in summer pre-professional opportunities, or conducting independent research). While this is where the work of SAIA organizers mostly stops, we don’t want this to be a one-way street. Rather, it should be an interconnected intersection full of grand collisions in the most wonderful of ways!

I think there could be an even tighter relationship between students and professionals, so we’ll highly encourage our alumni and professionals in the Network to re-engage with and support Core Members through volunteering for 1-on-1 mentorship, coming as guests for special events, sharing job and apprenticeship opportunities, or just being great role models. The particular arrows in this phase of the theory of change diagram as presented don’t especially matter—the idea is more to cultivate a vibrant and cooperative community of AI alignment professionals in and out of university.

3. Considerations

There might be several different ways this could fail in practice. To reduce that risk, here are some concerns that I or others have identified and responses to them.

3.1 How do we measure success?

There’s no perfect solution here, but the best I can come up with is a combination of a quantitative output metric and a qualitative effectiveness metric. Quantitatively, our main measure will be the number of students we counterfactually get to steer their careers into impactful AI alignment work. However, maximizing person-count alone is probably a bad strategy, so we’ll balance this by qualitatively evaluating how skilled and effective those students are after they graduate, the sense of community we’ve built through our group, and our group’s reputation amongst other factions at our university (e.g. the general student body or AI professors).

3.2 Don’t we need a few exceptional people, not many good people?

I think university groups are currently missing many exceptional students who never hear about AI alignment and go on to work in non-impactful roles in finance/tech/consulting/etc., and this broad outreach strategy within a university is a way to miss fewer of them (i.e. sampling more from heavy-tailed distributions gets you more samples from the tail). Note that this depends on the crux that not all exceptional AI alignment workers will discover AI alignment on their own (which I think is especially true of non-technical-research roles).
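The tail-sampling intuition can be made concrete with a toy calculation. The sketch below assumes, purely for illustration, that some measure of a student’s potential impact follows a Pareto distribution with hypothetical parameters `x_min` and `alpha`; nothing in these plans depends on that choice. With n independent draws, the expected number above a threshold t is n × P(X > t), which for a Pareto variable is n × (x_min / t)^alpha when t ≥ x_min, so it grows linearly with the number of students reached.

```python
def pareto_tail_prob(threshold: float, x_min: float = 1.0, alpha: float = 1.5) -> float:
    """P(X > threshold) for X ~ Pareto(x_min, alpha); parameters are illustrative only."""
    if threshold <= x_min:
        return 1.0  # every Pareto draw is at least x_min
    return (x_min / threshold) ** alpha


def expected_tail_count(n_students: int, threshold: float,
                        x_min: float = 1.0, alpha: float = 1.5) -> float:
    """Expected number of n independent draws that land above the threshold."""
    return n_students * pareto_tail_prob(threshold, x_min, alpha)


# Reaching 10x more students yields 10x more expected draws from the tail.
for n in (200, 2000):
    print(n, expected_tail_count(n, threshold=100.0))
```

This toy model only shows that reach scales the expected tail count; whether those tail students would have found AI alignment anyway is the crux noted above.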

Also, while this may be true for research scientists right now, I don’t think this is true for other neglected disciplines in governance, operations, security, engineering, entrepreneurship, or field-building. Not to mention, the field as a whole 4 years from now (the usual length of a U.S. undergraduate degree) might look very different and be able to absorb even more talent for shovel-ready projects.

3.3 This seems like a lot of work for students!

It does look complicated when viewed all at once, but hopefully what this looks like to an individual student over the course of several terms/years is “Go to an info session with free food” → “Join an interesting reading group” → “Participate in a welcoming and focused community where I learn cool skills” → “Oh, you really think I’m ready to apply for a job at that AI alignment org?!”

Regarding time, I worry about promising students having to drop out of things because they’re too busy with classes/extracurriculars/life. To address this, we'll have accountability and support processes in each phase, we’ll support varying levels of commitment in the Pipeline (e.g. perhaps the best thing for some members is to spend a lot of time on classes and research and minimally engage with our group), and we’ll run the introductory session and start the reading group very early in the quarter (i.e. within the first 2ish weeks) to get students to commit to this before committing to other things.

3.4 This seems like a lot of work for organizers!

Yeah… I probably wouldn’t recommend this to newer communities without an established EA group or at least 4 part-time AI alignment organizers. However, with a dedicated group of people who really want to make this happen, I think it’s very doable. Most of the critical work comes before each academic term to make the introductory session happen and start the reading group—beyond that, it’s mostly facilitating reading group discussions, doing 1-on-1s, and running some core events.

As a very rough calculation, if each week an organizer led 2 reading groups of 8 students (4 hours), ran five 30-minute 1-on-1s (2.5 hours), and organized 1 event (3.5 hours), then with 10 hours of total weekly work they could support 16 reading group fellows and 5 Core Members (or 64 fellows and 20 Core Members with 4 organizers). On top of that, some of these responsibilities could be shared with or outsourced to EA groups, locally hired operations managers, or professionals in the Network.
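The arithmetic above can be written out as a small capacity sketch. The hour figures and group sizes come from that paragraph; the `OrganizerWeek` class and `team_capacity` function are hypothetical names for illustration, and the model assumes each Core Member takes one of the weekly 1-on-1 slots (less frequent 1-on-1s would let each slot cover more members).

```python
from dataclasses import dataclass


@dataclass
class OrganizerWeek:
    """One organizer's weekly load, using the figures from the rough calculation."""
    reading_groups: int = 2
    students_per_group: int = 8
    reading_group_hours: float = 4.0   # total for all reading groups
    one_on_ones: int = 5
    hours_per_one_on_one: float = 0.5
    event_hours: float = 3.5           # one event per week

    def total_hours(self) -> float:
        return (self.reading_group_hours
                + self.one_on_ones * self.hours_per_one_on_one
                + self.event_hours)

    def fellows_supported(self) -> int:
        return self.reading_groups * self.students_per_group

    def core_members_supported(self) -> int:
        # Assumption: each Core Member uses one weekly 1-on-1 slot.
        return self.one_on_ones


def team_capacity(organizers: int, week: OrganizerWeek) -> tuple[int, int]:
    """Return (reading group fellows, Core Members) supported by a team."""
    return (organizers * week.fellows_supported(),
            organizers * week.core_members_supported())


week = OrganizerWeek()
print(week.total_hours())        # 10.0
print(team_capacity(1, week))    # (16, 5)
print(team_capacity(4, week))    # (64, 20)
```

Changing a field, e.g. `OrganizerWeek(one_on_ones=8)`, shows how weekly hours and supported headcount move together.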

3.5 How will this be funded?

Organizing a program like this for an academic term would likely incur costs for:

- Food/books for the Intro Session

- Snacks/drinks for certain Reading Group meetings

- Food for Core Coworking Sessions

- Other positive accountability incentives

- Rideshares/travel for guests

- Organizer/facilitator pay

When you add it up, the numbers are significant (though well within the range of a standard EA Infrastructure Fund grant). But if such a funding opportunity can produce just a few talented people working on AI alignment per year (and potentially generating millions of dollars worth of impact), then judiciously ambitious funders would be remiss to pass it up. Additionally, several new AI alignment infrastructure organizations are currently scaling up to provide funding as well as specific services like free books, professional networks, and community organizers to impactful groups.

3.6 How reputable will this group be?

Such a group might need a strong reputation to easily interact with academia and certain professional organizations. Unfortunately, it’s also the case that people often need a sense of status to be motivated. The hope is that by directly producing impactful work in the Pipeline (e.g. publishing research at mainstream ML conferences, advertising members winning thousands of dollars in prizes for open challenges like ELK), signaling that we’re very serious about AI alignment (e.g. hosting events with professional guests, working with external AI alignment organizations and funders), and demonstrating a path to a rewarding career (e.g. graduating several students each year into well-paid positions at top alignment organizations), such a group can eventually be held in about as high regard as the top entrepreneurial and tech groups within a university. At Stanford specifically, we will try to incorporate SAIA as a child organization of the Stanford Existential Risks Initiative (a major contributor of work/opportunities in AI alignment) or even the Stanford Center for AI Safety (a leading research group that is the first Google search result for “AI safety”) in order to inherit some of their reputations and connections.

3.7 What about the risks of exposing many people to AI alignment?

There are attention hazards associated with broadly sharing information about the dangers and possibilities of AGI. These risks are genuinely concerning for a new, largely-untested approach to university AI alignment field-building, so organizers should thoughtfully consider possible failure modes and consult other field-builders for review before following plans like these.

Optimistically, I think the highly supervised nature of this group will help us filter and steer most of the students whom we send through the whole up-skilling Pipeline away from AI capabilities and towards AI safety (e.g. by selecting for safety-focused students after the Funnel before offering up-skilling, or by discussing the risks of capabilities-advancing job opportunities in 1-on-1s). But even under a pessimistic view that this process will produce, for example, just as many capabilities researchers as safety researchers, I still think the marginal upside of having an additional talented person work on AI alignment significantly outweighs the marginal downside of having an additional talented person work on AI capabilities. This is because many more people are already working on capabilities in industry (compared to a neglected community working on AI alignment), and only a small fraction of capabilities research is likely to be good enough to speed up AGI counterfactually (compared to a lot of potential for low-hanging fruit and future shovel-ready work in AI alignment).

There are also reputational hazards associated with building a public AI alignment community among people who disagree that AI alignment is worth working on. For this reason, we’ll prioritize students and avoid targeted outreach to unaligned AI professors (besides asking to briefly talk at the start of a class) until we can establish a more solid reputation and perhaps until the divide between the professional alignment and capabilities communities is narrowed.

4. Conclusions

Whew, those were a lot of plans! As I said before, only parts of this have been solidly tested (mostly the reading group as a Funnel at other universities and the various accountability/advising measures of the Pipeline at Stanford EA). The rest are mostly educated guesses, so if you’ve tested some of this or have ideas about what to improve, I’d love for you to let me know! We at SAIA will keep you posted on how this goes over the next year.

And if you’re an organizer at another university group or non-academic local group, definitely feel free to duplicate, modify, and test as much of this as you like. My hope in publishing this is that we as a community can form solid systems that leverage the full potential of local AI alignment groups and make AGI go as well as it possibly can!

Many thanks to EJ Watkins, Eleanor Peng, Esben Kran, Jonathan Rystrøm, Madhu Sriram, Michael Chen, Nicole Nohemi, Nikola Jurković, Oliver Zhang, Thomas Woodside, and Victor Warlop for valuable feedback and suggestions!

The organizers of such a group are presumably working towards careers in AI safety themselves. What do you think about the opportunity cost of their time?

To bring more people into the field, this strategy seems to delay the progress of the currently most advanced students within the AI safety pipeline. Broad awareness of AI risk among potentially useful individuals should absolutely be higher, but it doesn’t seem like the #1 bottleneck compared to developing people from “interested” to “useful contributor”. If somebody is on that cusp themselves, should they focus on personal development or outreach?

Trevor Levin and Ben Todd had an interesting discussion of toy models on this question here: https://forum.effectivealtruism.org/posts/ycCBeG5SfApC3mcPQ/even-more-early-career-eas-should-try-ai-safety-technical?commentId=tLMQtbY3am3mzB3Yk#comments

Good points! This reminds me of the recent Community Builders Spend Too Much Time Community Building post. Here are some thoughts about this issue:

That's not to say I recommend every student who's really into AI safety delay their personal growth to work on starting a university group. Just that if you have help and think you could have a big impact, it might be worth considering letting off the solo up-skilling pedal to add in some more field-building.

Agreed with #1, in that for people doing AI safety research themselves and doing AI safety community-building, each plausibly makes you more effective at the other; the time spent figuring out how to communicate these concepts might be helpful in getting a full map of the field, and certainly being knowledgeable yourself makes you more credible and a more exciting field-builder. The flip side of "Community Builders Spend Too Much Time Community-Building" is "Community Builders Who Do Other Things Are Especially Valuable," at least in per-hour terms (though this might not be the case for higher-level EA meta people). I think Alexander Davies of HAIST has a great sense of this and is quite sensitive to how seriously community builders will be taken given various levels of AI technical familiarity.

I also think #3 is important. Once you have a core group of AI safety-interested students, it's important to figure out who is better suited to spend more time organizing events and doing outreach and who should just be heads-down skill-building. (It's important to get a critical mass such that this is even possible; EA MIT finally got enough organizers this spring that one student who really didn't want to be community-building could finally focus on his own upskilling.)

In general, I think modeling it in "quality-adjusted AI Safety research years" (or QuASaRs, name patent-pending) could be useful; if you have some reason to think you're exceptionally promising yourself, you're probably unlikely to produce more QuASaRs in expectation by field-building, especially because you should be using your last year of impact as the counterfactual. But if you don't (yet) — a "mere genius" in the language of my post — it seems pretty likely that you could produce lots of QuASaRs, especially at a top university like Stanford.

Interesting model, very keen to hear how this works out. The main challenge I see is that students may miss the course and then find it hard to get involved even if they would be a good fit.

Thanks Chris! Yeah, I think that's definitely a potential downside—I imagine we'll definitely meet at least a couple of people smack in the middle of the quarter who go "Wow, that sounds cool, and also I never heard about this before" (this has happened with other clubs at Stanford).

I think the ways to mitigate failure due to this effect are: