This post is, in part, inspired by Étienne Fortier-Dubois’s post on aesthetics and EA. I had a couple of thoughts, from the perspective of an artist/writer who is invested in a question Fortier-Dubois analyses (‘why should we value aesthetics?’). I am sympathetic to EA/Longtermism but a relative newcomer, so apologies for terminological errors; hopefully this doesn’t retread too much old ground.

Before diving into whether aesthetics should matter for EA, I wanted to think about the value of aesthetics (in which I include visual art as well as sound and literature/poetry) more broadly.

Why value art?

Restating Fortier-Dubois’s argument slightly, the proposed justifications for aesthetics sit on a spectrum between the non-instrumental (i.e. foundational goods to be valued in themselves) and the instrumental. I write ‘spectrum’ because most of the justifications I can think of seem to be both foundational and instrumental, albeit in differing proportions; moreover, humans don’t really know our ultimate or terminal goals/values anyway, as Anders Sandberg surveys brilliantly here.

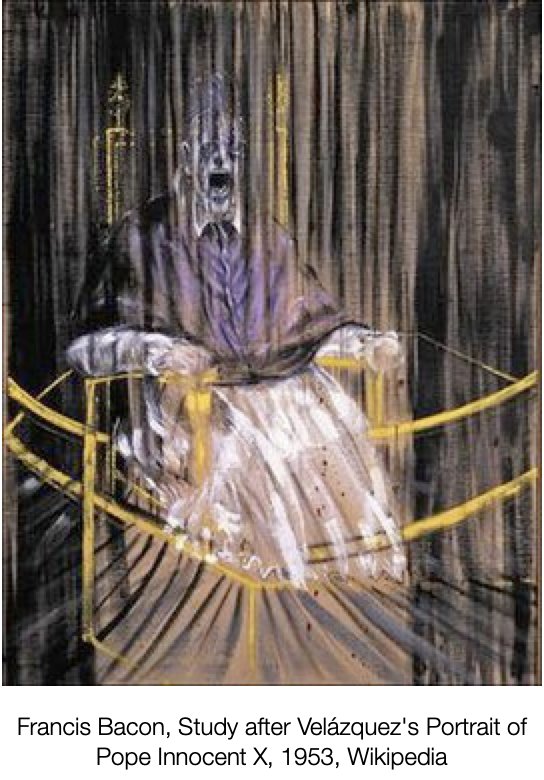

A foundational reason society should encourage aesthetics (through public/private funding, education, etc.) is that it is an (intergenerational) public good: artworks document our world in a way that might be interesting to future beings. I mean ‘interesting’ in the sense that future viewers (or artists) see something meaningful in today’s art that they couldn’t get simply by reading the historical record. This is analogous to how great ancient buildings inform later architecture, in ways referential as well as technical (e.g. the tomb of King Mausolus at Halicarnassus was apparently the inspiration for 14 Wall Street and other early skyscrapers). Or to how the painter Francis Bacon mashed up Velázquez’s portrait of Pope Innocent X with an image from Eisenstein’s film Battleship Potemkin to make a moderately disturbing image of modernity.

More instrumental justifications for aesthetics are:

- Visual art (in particular) is a non-linear, highly associative way of presenting/accessing knowledge and of thinking creatively about problems; it also happens to be very experimental, so it is not at all alien to an engineering/science mindset

- To put the above in terms inspired a little by Joscha Bach: the artist is discontinuously (but not randomly) sampling the space of possible solutions, in a way that a traditional logical argument or academic paper can’t. They are engaging in a repeated try-eval-modify loop.

- Well-made art can destabilise our perception of the world (John Cage’s 4’ 33” should change one’s idea of what music can be)

- Art can reflect recursively on the world, again changing our perception. To take a canonical example, after Duchamp’s urinal (Fountain, 1917), almost any real-world object (a ‘readymade’) can be art, which has completely rewritten the ‘rules’ of art.

- Being in the presence of art perhaps creates a space for the viewer to reflect or question, a moment of calm in a hectic world. It also creates a link with accumulated cultural history that a) lies outside one’s own parochial context and b) is sufficiently ambiguous that viewers can bring their own meaning to the work

- At the most instrumental extreme, museums/public art seem to increase tourism and mitigate urban/rural blight (albeit not without controversy, e.g. gentrification)

Aesthetics in the context of EA

What are some ways of encouraging aestheticism in EA? Does EA ‘need’ a top-down aesthetic programme (from a PR or branding perspective)?

- EA feels rather early in its cycle as a belief system, and is more a community or movement with shared systems of thought and priorities than (for the moment) a political party. Hence its need for an extensive branding programme seems questionable; current efforts (as on this page or the EA Creatives Slack) might be sufficient.

- A company or charity has a strong incentive to build a brand and spend money on it, as well as to maintain a bureaucracy (which might end up developing its own raison d’être). I’m not familiar with EA’s org structure or objectives, but (understood as a ‘movement’) it feels more amorphous and distributed than a traditional charity, and (presumably) defines ‘success’ more broadly than just number of members, amount raised, etc.

- EA funding is secure (asset market volatility aside), people are pretty motivated (at least from what I can see in the AI cause area), and bad publicity is still quite niche.

- I tentatively agree with comments on Fortier-Dubois’s post that the aesthetics of EA, while a bit ‘meh’ (inherited from WordPress or blogs generally), are inoffensive.

- As an aside, I wonder if the examples of Christianity or Communism in Fortier-Dubois’s post might be slightly beside the point. The early Church (if one is to believe the tour guides at Rome’s catacombs) consisted of small, secret, in-house gatherings, and while there were wall paintings, I suspect they were more devotional (to remember the deceased) or instructional (to show the Bible visually). It is we, i.e. contemporary viewers, who call them ‘art’ and derive aesthetic pleasure from them. In subsequent centuries, the massive displays of splendour of the Romanesque and Gothic periods were intricately bound up with considerations of wealth and power.

However, if we accept that improved aesthetics are, at the margin, ‘good’ for EA, what would be the ask?

- A logo or website change? Here are a few art-culture-adjacent examples with moderately good graphic design. Mostly they are museums, publishers, art journals and commissioning bodies, so their design requirements would be quite different from those of a hybrid network/blog/incubator. Aside from art sites, I also think Gwern’s website is nicely designed (in terms of form and functionality) while remaining minimal.

- Besides the ‘look’, if we are trying to encourage aesthetically aware content, then I agree with Charles He that EA needs to create an appropriate aesthetic ecosystem. I would add that it should emphasise organic growth rather than a top-down approach (‘let a thousand flowers blossom’). There have been a number of literary competitions in the space, as well as the recent FLI Worldbuilding Contest. Again, the EA Creatives Slack is a good start, but maybe a Discord or Mastodon server would feel less ‘corporate’.

- Another, more complex, approach would be a funding/commission programme, or perhaps residencies to bring in artists (CERN’s is the best known, but there are others).

- But there are pitfalls: artists will do what they want (which might not always be perception-positive for EA). I don’t know how to square the objectives of avoiding bad outcomes (for EA) and preserving integrity/freedom (for the artists) in the context of a distributed movement that is already a little controversial (in a way that CERN [probably] is not). This tension might be easier to negotiate for specific cause areas (e.g. poverty reduction or pandemic prevention). In short, there is a real chance that EA-funded artist outreach would increase publicity without necessarily improving perception.

- There are also advantages to engagement: having artists talk about and take an interest in EA ideas (again, perhaps in specific cause areas rather than under the rather abstract EA umbrella) might be good. At the very least, to the extent that (some) artists are thought leaders (e.g. James Bridle or Holly Herndon), having them ‘in the tent’ might be better than the alternative. I think the Berggruen Institute’s residency programme Transformation of the Human is interesting in this respect (I have no idea whether it does them any good, or indeed what their objectives were in setting it up).

- Artists also draw in an ecosystem of writers and curators, so to the extent it is desirable to get EA ideas into the cultural conversation, this could be good in the long term. But, again, I’m not sure: art (like contemporary social-media-infected life generally) is a messy place with lots of radically different (and often incommensurable) viewpoints, massive signalling, adversarial politics, bad faith and rampant self-promotion. This comment and related post are interesting in light of the recent flak that longtermism and AI x-risk have attracted.

Outside of art, what are some other ideas?

- Outreach without cultishness: perhaps EA-aligned symposia or workshops at schools or museums. For instance, longtermism feels closely adjacent to climate-related ‘good ancestor’ thinking. It would be great to have pre-university students aware of and interested in these ideas… imagine hundreds of Derek Parfits (!) giving the Oxford Union talk at schools everywhere.

- Coordinated and considered interaction with journalists (I could be wrong, but I think this Vox piece on AI x-risk was reasonably well received; at least it makes no mention of ‘The Terminator’). See also this recent post on interacting with journalists. This article from The New Yorker on rationality seemed pretty well balanced, and previous pieces on Bostrom and Parfit have been influential (on me, anyway).

- Nicely made publications. I thought Engines of Cognition was beautifully done. Another example is Collapse magazine, which comes from a UK-based arts publisher but has solid science/maths articles (e.g. by Nick Bostrom, Milan Cirkovic, Carlo Rovelli and Thomas Metzinger). Nice paper, considered fonts, unconventional layout, etc. give books an aesthetic quality. There are other examples here.

- Relatedly, should we try to pay attention to aesthetic considerations in everything we do? Engines of Cognition or The Precipice could easily have been fairly ugly objects, as Human Compatible or The Alignment Problem are (it might just be my Amazon paperbacks, and of course this shouldn’t disparage their excellent content), but their editors/authors chose to make conscious design decisions (Ord’s book even includes a note on its typeface). More generally, LaTeX is a really amazing package, but I invariably find AI/ML papers (visually speaking) pretty drab affairs. This is perhaps owing to academic publishing constraints, and is often balanced by well-made web content (Chris Olah’s Distill platform or DeepMind’s blog).

- Asking scientists to have aesthetics as a core value might seem absurd, but I don’t think it need be: Bostrom, Sandberg, Ord, etc., as well as plenty of natural scientists in history, are legibly poetic/visual. Perhaps it is telling that Fortier-Dubois needed to make his points at all?

- I know there is some related discussion around naming and identity, but would there be value in easing out the EA name, which is both unclear and a bit historically tainted? Longtermism sounds better to me.

- On that note, I don’t think we can overemphasise the importance of diversity and inclusivity (and we should act on it in staffing, membership and worldview), or of encouraging EA orgs in the Global South. Haters gonna hate, but we should at least try to minimise the attack surface!

Lastly, perhaps a cautionary tale: in a time of adversarial memes and rush-to-judgement, almost any publicity can be bad as well as good. For instance, crypto & blockchain have had a terrible ride in the mainstream media (even in not-obviously-left-leaning papers like the Financial Times or Business Insider). Crypto’s issues are real and substantial, but it has also been an aesthetic and PR disaster: think of the image of the white, male crypto-bro sporting laser eyes, captioned ‘money printer go brrrrr’!
