In the wake of this community's second major brush with a sketchy tech CEO named Sam, I'd like to offer two observations.

- Don't panic: 90% of EAs or alignment-friendly tech CEOs are good people. I don't know of anything bad Dario Amodei has done (knock on wood). This doesn't reflect a fundamental problem in EA or at AI companies.

- Corollary: 10% aren't.

After SBF, EAs panicked and began the ritual self-flagellation about what they should have done differently. For people who dropped the guy like a hot potato and recognized he was a psychopath: Not much. You think someone should've investigated him? Why? You investigate someone before you give them money, not before they give you money. I don't launch a full-scale investigation of my boss every time he sends me a paycheck.

But a substantial number of EAs spent the next couple of weeks or months making excuses not to call a spade a spade, or an amoral serial liar an amoral serial liar. This continued even after we knew he'd A) committed massive fraud, B) used that money to buy himself a $222 million house, and C) referred to ethics as a "dumb reputation game" in an interview with Kelsey Piper.

This wasn't because they thought the fraud was good; everyone was clear that SBF was very bad. It's because a surprisingly large number of people can't identify a psychopath. I'd like to offer a lesson on how to tell. If someone walks up to you and says "I'm a psychopath", they're probably a psychopath.

"But Sam Altman never said that. Could we really have predicted this a year ag—"

"Clearly the quote was a joke, I don't think he actually did anything bad for AI safe—"

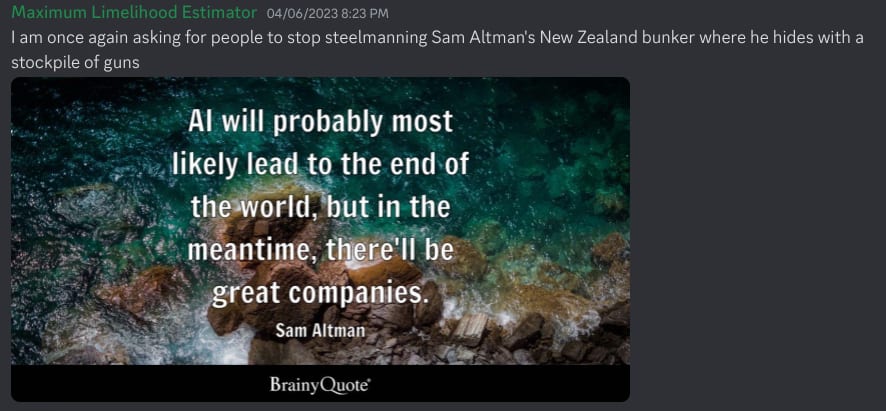

While Sam Altman publicly claimed to support AI regulation, he was also spending millions of dollars killing any meaningful regulations.

"Anyone would've done the same things, the incentives to race are—"

"If my company doesn't do this thing that might destroy the world, I will lose a lot of money" is not a good defense for doing things that might destroy the world. In fact, doing unethical things for personal benefit is bad.

"But did he do anything clearly unethical before—"

For starters, he was fired from Y Combinator for abusing his position for personal profit.

Stop overthinking it: some people and organizations are unethical. Recognizing this and pointing out they're bad is helpful because it lets you get rid of them. It's not about getting angry at them; it's just that past behavior predicts future behavior. I wish Sam Altman the very best, so long as it's far away from any position of power where he can influence the future of humanity.

(In related advice, if a company builds an AI that is obviously violent and threatening towards you, you should probably go "Hmm, maybe this company will build AIs that are violent and threatening towards me" instead of finding a 12d chess explanation for why OAI is actually good for AI safety.)

I'm confused by this post. Sam Altman isn't an EA, afaik, and hasn't claimed to be, afaik, and afaik no relatively in-the-know EAs thought he was, or even in recent years thought he was particularly trustworthy, though I'd agree that many have updated negatively over the last year or two.

Very few EAs that I know did that. (I'd like to see stats: of the dozens of EAs I know, none publicly, or to my knowledge, did such a thing, except, if I remember right, Austin Chen in an article I now can't find.) And for people who did defend Sam, I don't know why you'd assume that the issue is them not being able to identify psychopaths, as opposed to being confused about the crimes SBF committed and believing they were the result of a misunderstanding or something like that.

Agree that this post is confusing in parts and that Altman isn’t EA-aligned. (There were also some other points in the original post that I disagreed with.)

But the issue of "not calling a spade a spade" does seem to apply, at least in SBF’s case. Even now, after his many unethical decisions were discussed at length in court, some people (e.g., both the host and guest in this conversation) are still very hesitant to label SBF’s personality traits.

This doesn't need to be about soul searching or self-flagellation - I think it can (at times) be very difficult to recognize when someone has low levels of empathy. But sometimes (both in one’s personal life and in organizations) it's helpful to notice when someone's personality places them at higher risk of harmful behavior.

Very strong +1, this is nothing like the SBF situation and there's no need for soul searching of the form "how did the EA community let this happen" in my opinion.

I had substantial discussions with people on this, even prior to Sam Altman's firing; every time I mentioned concerns about Sam Altman's personal integrity, people dismissed it as paranoia.

In OpenAI's earliest days, the EA community provided critical funds and support that allowed it to be established, despite several warning signs already having appeared regarding Sam Altman's previous behavior at Y Combinator and Loopt.

I think this is unlike the SBF situation in that there is a need for some soul-searching of the form "how did the EA community let this happen". By contrast, there was very little need for it in the case of SBF.

Like I said, you investigate someone before giving money, not after receiving money. The answer to SBF is just "we never investigated him because we never needed to investigate him; the people who should have investigated him were his investors".

With Sam Altman, there's a serious question we need to answer here. Why did EAs choose to sink a substantial amount of capital and talent into a company run by a person with such low integrity?

In the conversations I had with them, they very clearly understood the charges against him and what he'd done. The issue was they were completely unable to pass judgment on him as a person.

This is a good trait 95% of the time. Most people are too quick to pass judgment. This is especially true because 95% of people pass judgment based on vibes like "Bob seems weird and creepy" instead of concrete actions like "Bob has been fired from 3 of his last 4 jobs for theft".

However, the fact of the matter is some people are bad. For example, Adolf Hitler was clearly a bad person. Bob probably isn't very honest. Sam Altman's behavior is mostly motivated by a desire for money and power. This is true even if Sam Altman has somehow tricked himself into thinking his actions are good. Regardless of his internal monologue he's still acting to maximize his money and power.

EAs often have trouble going "Yup, that's a bad person" when they see someone who's very blatantly a bad person.

tldr, thanks for writing.

I would consider myself to be a very trusting and forgiving person who always starts with the mentality of "Yes, but most people are inherently good". I definitely went through a long period of "Ah well I mean surely Sam (SBF) isn't actually a 'bad' guy right". This felt like a productive slap in the face.

I would still prefer to be more trusting rather than less, but maybe I should dial it back a bit. Thanks.

"Trust but verify" is Reagan's famous line on this.

Most EAs would agree with "90% of people are basically trying to do the right thing". But most of them have a very difficult time acting as though there's a 10% chance anyone they're talking to is an asshole. You shouldn't be expecting people to be assholes, but you should be considering the 10% chance they are and updating that probability based on evidence. Maya Angelou wrote "If someone shows you who they are, believe them the first time".

As a Bayesian who recognizes the importance of not updating too quickly away from your prior, I'd like to amend this to "If someone shows you who they are, believe them the 2nd or 3rd time they release a model that substantially increases the probability we're all going to die".

(haven't read the entire post)

I think the "good people" label is not useful here. The problem is that humans tend to act as power maximizers, and they often deceive themselves into thinking that they should do [something that will bring them more power] because of [pro-social reason].

I'm not concerned that Dario Amodei will consciously think to himself: "I'll go ahead and press this astronomically net-negative button over here because it will make me more powerful". But he can easily end up pressing such a button anyway.

If you're saying "it takes more than good intentions to not get corrupted," I agree.

But then the question is, "Is Dario someone who's unusually unlikely to get corrupted?"

If you're saying "it doesn't matter who you put in power; bad incentives will corrupt everything," then I don't agree.

I think people differ a lot wrt how easily they get corrupted by power (or other bad incentive structures). Those low on the spectrum tend to shape the incentives around them proactively to create a culture that rewards what they don't want to lose about their good qualities.

What percent of people do you think fall into this category? Any examples? Why are we so bad at distinguishing such people ahead of time and often handing power to the easily corrupted instead?

Off the cuff answers that may change as I reflect more:

I think incentives matter, but I feel like if they're all that matters, then we're doomed anyway, because who will step up as a leader to set good incentives? In other words, the position "incentives are all that matters" seems self-defeating, because to change things, you can't just sit on the sidelines and criticize "the incentives" or "the system." It also seems too cynical: just because, e.g., lots of money is at stake, that doesn't mean people who were previously morally motivated, cautious about their motivations, and trying to do the right thing will suddenly go off the rails.

To be clear, I think there's probably a limit for everyone and no person is forever safe from corruption, but my point is that it matters where on the spectrum someone falls. Of the people that are low on corruptibility, even though most of them don't like power or would flail around helplessly and hopelessly if they had it, there are probably people who have the right mix of traits to create, maintain and grow pockets of sanity (well-run, well-functioning organizations, ecosystems, etc.).

If I had to guess, the EA community is probably a bit worse at this than most communities because of A) bad social skills and B) high trust.

This seems like a good tradeoff in general. I don't think we should be putting more emphasis on smooth-talking CEOs—which is what got us into the OpenAI mess in the first place.

But at some point, defending Sam Altman is just charlie_brown_football.jpg

The usefulness of the "bad people" label is exactly my point here. The fact of the matter is some people are bad, no matter what excuses they come up with. For example, Adolf Hitler was clearly a bad person, regardless of his belief that he was the single greatest and most ethical human being who had ever lived. The argument that all people have an equally strong moral compass is not tenable.

More than that, when I say "Sam Altman is a bad person", I don't mean "Sam Altman's internal monologue is just him thinking over and over again 'I want to destroy the world'". I mean "Sam Altman's internal monologue is really good at coming up with excuses for unethical behavior".

Like:

I would like to state, for the record, that if Sam Altman pushes a "50% chance of making humans extinct" button, this makes him a bad person, no matter what he's thinking to himself. Personally I would just not press that button.