CEA and the EA community have both grown and changed a lot in the year since our last org-wide update. We (the CEA Executive Office team) have written this post to update the community on the work CEA has done in 2022.

This post is mostly focused on the progress of our core work in 2022, not on reflections on anything related to FTX's collapse (though we touch on these issues in the final sections of this post).

What is CEA?

CEA (The Centre for Effective Altruism) is dedicated to nurturing a community of people who are thinking carefully about the world’s biggest problems and taking impactful action to solve them. We hope that this community can help to build a radically better world: so far it has helped to save over 150,000 lives, reduced the suffering of millions of farmed animals, and begun to address some of the biggest risks to humanity’s future.

We do this by helping people to think through their ideas, values and options for helping others, connecting them to advisors and experts in relevant domains, and facilitating high-quality discussion spaces. Our hope is that this helps people find an effective way to contribute that is a good fit for their skills and inclinations.

Concretely, this means:

- Running EA Global conferences and supporting community-organized EAGx conferences.

- Funding and advising hundreds of local effective altruism groups.

- Building and moderating the EA Forum, an online hub for discussing the ideas of effective altruism.

- Supporting community members through our community health team.

We also produce the Effective Altruism Newsletter, which goes out to more than 50,000 subscribers, and run EffectiveAltruism.org, which hosts a collection of recommended resources.

Attendees at EAG London in April

Our priority is helping people who have heard about EA to deeply understand the ideas, and to find opportunities for making an impact in important fields. We think that top-of-funnel growth is likely already at or above healthy levels, so rather than aiming to increase the rate any further, we want to make that growth go well. (This is a shift from our thinking in 2021. The main reason for this shift is that we think that the growth rate of EA increased sharply in 2022.)

You can read more about our strategy here, including how we make some of the key decisions we are responsible for, and a list of things we are not focusing on. One thing to note: we do not think of ourselves as having or wanting control over the EA community. We believe that a wide range of ideas and approaches are consistent with the core principles underpinning EA, and encourage others to identify and experiment with filling gaps left by our work.

Our legal structure

CEA is, like Giving What We Can, 80,000 Hours and a bunch of other projects, a project of the Effective Ventures group: the umbrella term for EVF (a UK registered charity) and CEA USA Inc. (a US registered charity), which are separate legal entities that work together.

(We know the US charity name is confusing! To make matters even more complicated, until recently EVF was also known as CEA — that confusion is why EVF changed its name.)

2022 in summary

2022 was a year of continued growth for CEA and our programs.

What went well?

Growth of our key public-facing programs

Many of our programs scaled up very rapidly, while maintaining (in our opinion) roughly constant quality.[1]

Some examples of this (with more examples and details below):

- Events: The number of connections made at our events grew by around 5x this year, which should help many more people find a way to contribute to important problems.

- Online: Engagement on the EA Forum grew by around 2.9x, helping the spread of important new ideas and richness of discussion.

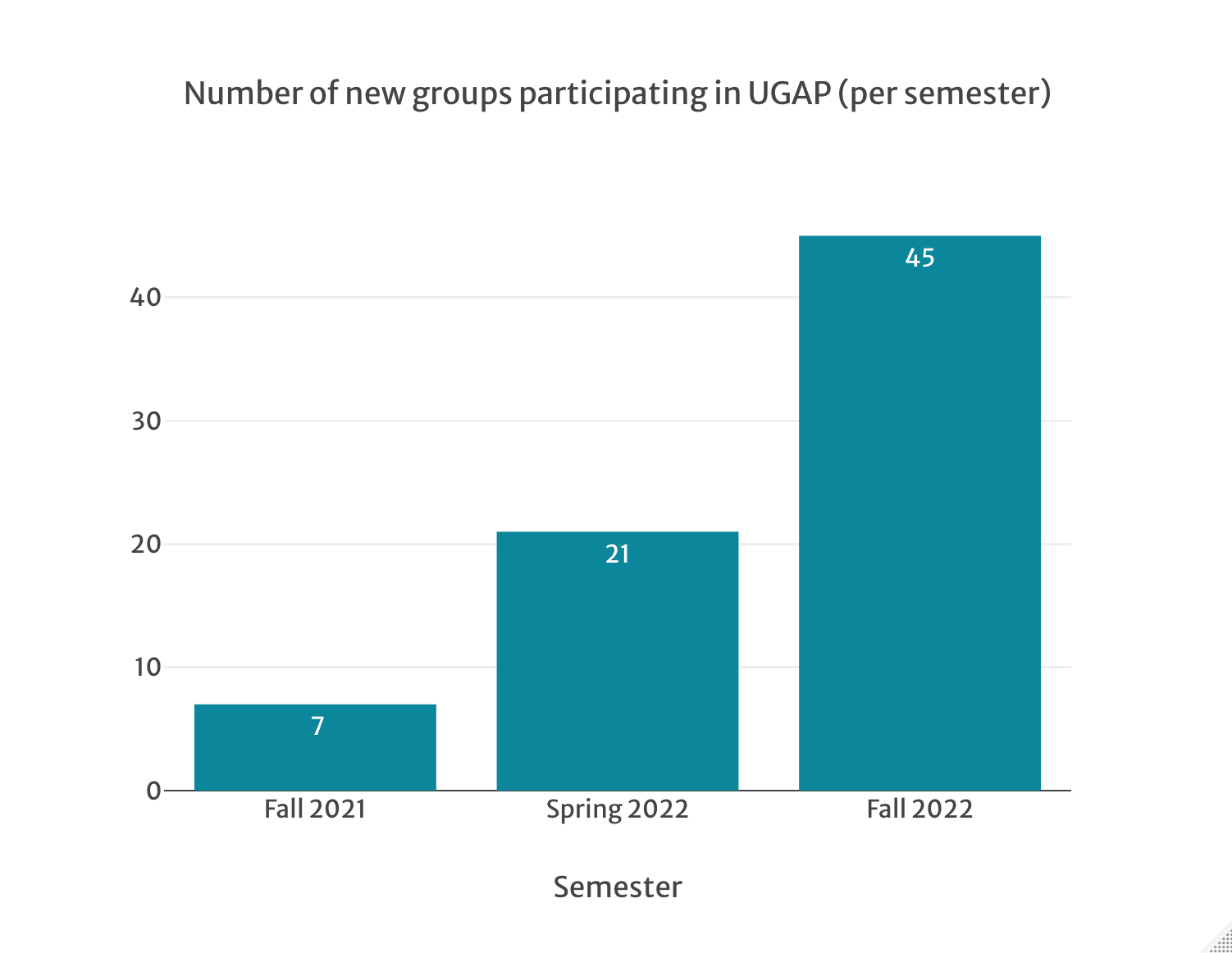

- Groups: 208 organizers went through our University Groups Accelerator Program (10x growth for a new program starting from a low base), receiving 8 weeks of mentorship designed to accelerate EA journeys for organizers and their groups.

Our top-of-funnel metrics (e.g. for EA.org and Virtual Programs) were more steady, partly (but not entirely) because we focused less on growing them (and more on the other programs and on quality improvements).

You can see a public dashboard with some of our key metrics here. (2023-01-02 edit: This dashboard was previously showing incorrect EffectiveAltruism.org user data. The incorrect data has now been removed.)

Expanding community health and communications work

The community health and special projects team has made some extremely strong hires who have taken on important new strands of work. While we continue our work on interpersonal harm, the team’s mandate is now much broader than this, aiming to address important (and potentially trajectory-changing) issues in EA that are receiving insufficient attention.

We helped with media and communications work throughout the year, including spearheading an update to EffectiveAltruism.org, with a new intro essay which we think is a much better representation of EA. In September we hired a head of communications to lead this work, with a focus on communicating EA ideas and related work to the outside world (rather than on CEA’s brand).

Team

The CEA team has roughly doubled in size, while maintaining high retention and morale[2]. People Operations have been focused on helping the team to grow in a healthy way: assisting in hiring rounds and improving onboarding (which now gets very positive feedback). We’ve added many people with crucial new skills (e.g. in product, communications, design), or who add to our existing strengths (e.g. in engineering and event production).

I think that our leadership team has developed especially well over the course of the year, and we’ve begun to train up a strong set of new(er) managers.

What went badly?

As discussed in the conclusion of this post, we're still reflecting on the FTX collapse, and what (if anything) we should change based on that. So for now, this focuses on non-FTX-related mistakes.

- We were too slow to get started on communications

  - We were too deferential to other organizations here, and this meant that we started seriously leading and hiring for communications work for EA later than we otherwise would have.

  - This lost us vital time, and probably set discussion about EA on a worse trajectory than if we had begun preparing when we initially wanted to.

- Cost control

  - In particular, we could have reduced EAG spending without much reduction in impact if we had focused more on contract negotiation early in the year. (Though we had a smaller and newer team at the time, so we’re not sure whether focusing heavily on this would have sacrificed our focus on growing EAG.)

  - Overall, we still came in at our “expansion” budget, but we could and should have come in lower than this.

- Product failures

  - We allowed the EA Forum homepage to be “too meta” for too long in the middle of the year, which promoted lower-quality posts and community drama rather than interesting and productive discussion about how to improve the world. (We addressed the visibility issue by changing the frontpage algorithm, though there isn’t a clear sign that this affected total engagement time with these posts.)

  - Our attempts in late 2021 and early 2022 to support top uni groups didn’t pay off as we hoped, and we passed on this strand of work to Open Philanthropy in early 2022.

  - Although these were failures, and we should have changed more quickly, we’re still pleased that we changed our plans within a few months in both cases (and in similar less important cases).

(This isn't an exhaustive list, but covers some of the high-level issues.)

Some of the Online team in discussion at our team retreat

Our programs

In this post, we’re just going to give a quick overview of results from each program. If there is interest, we may follow this up with more detailed reviews of some programs.

Groups

2022

Early in the year, we discontinued our work to fund full-time organizers at top universities, passing this to Open Philanthropy. Generally, we think that this transition went well, with one caveat discussed below.

Instead, we focused on improving and scaling up our University Groups Accelerator Program (UGAP). UGAP aims to take a university group from a couple of interested organizers to a mid-sized EA group. It offers stipends for main organizers, regular meetings with an experienced mentor, training, and resources to run a group’s first intro fellowship. It was launched in 2021, and we mentored 208 organizers in 2022. Participants gave very positive feedback.

We also expanded our support for non-university groups. We funded 27 city and national group organizers in building local EA professional networks, and ran two group organiser retreats. Organizers rate this program highly (5.8/7). This year, we ran, or helped to run, hiring rounds that found extremely strong new organizers in Australia, NYC, Germany, Switzerland, Netherlands, and London. We hope that this helps these important local communities to flourish.

Attendees at a city and national group organizer retreat in April

We also continued to run Virtual Programs, which make learning about EA accessible to anyone with an internet connection. This year around 2,500 people took part in this program. Overall total numbers per cohort, participation rates and feedback scores remained relatively constant. We’re disappointed that we didn’t make more progress here, but we’re increasing staffing so that we have more capacity for improvements rather than maintenance work.

We also provided advising calls and funding to groups not supported by the above programs, and have added staff capacity to help with this line of work in the future.

Impact story examples

- City and National Groups help people identify and pursue opportunities for impact

  - An industry researcher initially sceptical of EA ideas was re-introduced to EA by their friends through groups, and is now responsible for multiple EA education initiatives (EA Israel)

  - A student group organiser became an active member of their national group, credits that community with providing high motivation and accountability, and went on to found a successful startup (EA Sweden)

  - One of our own employees credits a group leader with being highly influential in their decision to leave a job in parliament and join CEA (EA Germany)

- Virtual Programs provides access to the EA community as well as its ideas. An improved user journey from VP to in-person groups uses a CRM-enabled exit survey to allow participants to select from all the EA groups we know about, and share their details. Over 100 people got connected with groups in one October week!

What’s next

One downside of handing top university support over to Open Philanthropy is that they only supply funding to groups. We are beginning to investigate whether we can provide advice, support, and retreats to top university groups and to organizers of well-established groups.

We’re currently investigating the key ways we can further improve Virtual Programs (and our introductory fellowships, which are used across groups).

Aside from this, we plan to continue our work in these areas.

Events

2022

We think that this was by far the most successful year in the history of the events program.

We held 3 EA Globals, 9 EAGx conferences, and funded or ran 24 smaller events.

Our headline metric, the number of connections between attendees, grew roughly 5x compared with 2021, with around 80,000 connections made across our events. This was mostly driven by a 4x increase in the total number of attendances at our events, and partly by an increase in the number of connections made by the average attendee.

And we did this while continuing to grow satisfaction with our events to our highest ever level, 8.9/10 (with people working full-time at EA-affiliated organizations giving even higher satisfaction scores).

We are also proud that we provided much more support to EAGx conferences, and that these events were held across the world, including in Prague, Rotterdam and Singapore.

Attendees at EAGxSingapore in September

We also supported or ran a smaller number of retreats — a coordination forum for EA leaders, a biosecurity event, a retreat for EA professionals in the events space, and retreats for groups. These were well-received and produced some outcomes that we are excited about, and we plan to run more events of this sort in the coming year.

As mentioned above, the key failure of the program this year was in cost control: for most of the year we prioritized scaling over keeping spending down (which we think made sense given the community’s financial position at the beginning of the year, and our limited staff capacity). But we didn’t update quickly enough as the community’s position changed gradually throughout the year. This meant that we missed opportunities to negotiate lower costs, as well as to make (moderate) programmatic cuts. As a result, the program came in somewhat over the top end of our budget estimates (driving CEA’s overall budget towards the top end of estimates). We are now more focused on cost control, and are negotiating contracts further in advance (which gives us more leverage).

We also note there was some confusion in the community over the admissions policies to our EAG and EAGx events, and are exploring ways to improve our communication here in the future.

Impact story examples

- Events connect orgs and funders across the community with hires and grantees. Examples include an operations hire made by Alvea (EAGxBoston) and a senior civil servant getting a 3-year grant to engage in policy advocacy (EAGxAustralia).

- Events cause individuals to pursue more impactful paths. One EAG attendee who left a software engineering job to skill up to do technical AI alignment credits their decision to finding a strong social support network at EAG, which helped translate their intellectual interest in AI into an appealing plan of action.

- EAG/x is one of the top sources of co-founders for Charity Entrepreneurship. Of the 64 top-scoring candidates for CE’s program, 13 were found via EAG/x, making it their second biggest source. CE’s own estimate indicates the value of connections which resulted in co-founders being accepted to their program, unadjusted for counterfactual impact, was $1.25m (5 co-founders connected via events, each estimated to have a value equivalent to at least $250,000).

What’s next

We plan to continue with a similar program of EAG and EAGx events next year, including events in Mexico and India early in the year. We expect to cut some meals and furnishings to manage costs, and to reduce the amount that we spend on travel grants for attendees this year. Wherever possible, we still want to support people to attend if they couldn’t otherwise afford to and attending could cause them to have much more impact.

We'll continue to support retreats and conferences via the Community Events program, and will be exploring how we can actively support events in this space alongside more passive grantmaking.

We also plan to help other organizations and people run events (sometimes on particular topics). We hope to partner with people who have strong domain knowledge: we’ll run operations and collaborate with them on event design. We’ve begun to do this with some events already, and this program may scale throughout the year.

Online

2022

Discussion on the EA Forum

Our headline metric (engagement with the Forum) grew by around 2.9x this year.

We expect that this growth is correlated with a lot of the things that we care about: people learning about EA ideas and new research being shared and discussed.

A lot of this growth was organic, but we think that we contributed both to the growth and the quality of the Forum by:

- Running key events and contests on the Forum, most notably the EA criticism contest. Many of the spikes in Forum traffic were around these contests, which suggests that they helped with engagement (even though this was not the primary purpose of those events).

- Tweaking key Forum algorithms to maintain good discussion (e.g. tweaking the homepage algorithm to de-emphasize “community” content).

- Shipping features to improve the experience for readers and writers (most popularly, footnotes, improved importing from Google Docs, and subforums).

- We also introduced some features designed and built by the LessWrong team, most notably agree/disagree voting and curation.

We’ve monitored Forum discussion quality too. Overall, we think that the quality of posts and discussion stayed roughly flat over the year, but it’s hard to judge. However, some users have told us that they think post quality has gone down. We think this is mostly because good posts have become harder to find as the volume of posts has increased. This is a problem, and something we're working to fix.

In our recent EA Forum survey, 40% of respondents said they applied to a job because of the EA Forum, and 12% said the Forum had significant influence in them getting funding for a project.[3]

Impact story examples

- Forum readers have gotten jobs by connecting with authors on the Forum

  - One reader was doing forecasting for fun until they met with Ben Snodin after reading this post, which resulted in them contacting Metaculus and getting hired (despite there being no open positions at the time)

  - Another was offered a part-time job when they contacted an author of this post, and got an EA Infrastructure Fund grant based on an idea posted in a Forum shortform

- The criticism contest brought new people with valuable perspectives into EA discussions. New user Froolow joined the Forum to participate, and submitted winning entries related to GiveWell’s cost-effectiveness model and uncertainty analysis in EA cost-effectiveness models.

Newer products

Aside from the key Forum discussion product, we tried a number of things, with varying degrees of success:

- Community platform: We built a variety of features for finding local groups and events. While we think that these are good features, we haven’t seen as much engagement with them as we hoped, and we’re not sure if they were worth the time.

- Subforums: We’ve been exploring ways to facilitate more specialised discussion, which has become more important as the number of Forum posts and users has grown, and as fields become increasingly specialised. We’re still experimenting with this, but we feel optimistic about the direction we’re pursuing. An example subforum — effective giving — is here.

- Connections: Sparked by seeing many people making connections on the Forum, we spent several months investigating ways to help people make new connections. We haven’t yet found anything that we thought was cost-effective.

- Job ads: We experimented with showing Forum users job opportunities that they might be interested in, based on their EAG applications and Forum tag use. We’ve had extremely strong results from our online ads, surveys, and emails so far: in particular, 5% of people who saw one of our most recent ads applied to a high-impact job because of it. If we can replicate this it could be incredibly impactful. However, we don’t want to bombard people with ads, and we’ll be careful to listen out for any negative feedback too.

- EffectiveAltruism.org: We revamped this site, which we think is now much more appealing, and gives a more accurate understanding of what EA is. We’re grateful to external collaborators for developing the new intro essay and advising on content.

Overall, we’re happy with this hit rate, and happy that we adapted when things weren’t working out. You can see our Forum updates here.

What’s next

We are planning to continue testing and developing both job ads and subforums, as well as continuing to moderate and monitor Forum discussion.

Community Health & Special Projects

2022

I think that the community health team did various things that significantly improved the trajectory of EA and related projects.

The team:

- Continued working to address interpersonal harm in the community

- Assisted high school programs with lowering risks and setting a strong culture for their programs

- Started some projects (and products) aimed at supporting community epistemics

- Helped to reduce some risks related to policy, politics, and geopolitical work

- Hired some very strong staff, and set up good internal processes for monitoring and discussing risks

- Supported efforts to provide mental health support to community members.

- Supported efforts to communicate EA ideas and related work to the outside world, which culminated in hiring a head of communications to lead this work.

We have released a team website to share more about our work. We encourage you to get in touch with us if you have any concerns about the community.

Some of the Community Health & Special Projects team

Recently the community took a significant hit from the collapse of FTX and the suspected illegal and/or immoral behaviour of FTX executives. The CH&SP team’s role is to anticipate and mitigate risks, and ideally we would have been able to do more here. We’re uncertain to what extent we mis-estimated this cluster of risks, and whether we could have or should have acted differently to mitigate them. We plan to reflect on this (while trying to avoid over-updating based on hindsight bias).

What’s next

The team (and CEA more broadly) is still addressing some of the fallout from recent events, but is mostly back to normal with regard to ongoing work and hiring. We and the rest of the CEA leadership team plan to reflect on what, if anything, we should have done differently. We’ll also spend time reflecting on how this should change other aspects of our work and the community.

We expect to continue to expand the team and the range of areas it focuses on.

Closing thoughts

In an effort to make CEA’s work more legible to the community, we’ve created a dashboard of key metrics from some of our programs. In the spirit of our values, we are publishing this prototype now rather than waiting until we have a perfect product. We’ll monitor community feedback to decide whether to continue, drop, or improve this dashboard, so if you have feedback on what you’d like to see next, we’d welcome comments either on this post or via our anonymous feedback form!

We think that this year was, in many ways, very successful for EA and for CEA. However, the events of the last month or so have cast a pall over some of those achievements. We’re actively reflecting on what this means for CEA and for EA, and making longer-term plans for CEA. We plan to share more of our reflections and plans in due course.

In some ways this is an odd time to post an annual review. But, while we’re reflecting on what we should do differently, we think it’s important to stay committed to our mission, and celebrate the growth we’ve seen in our core programs this year. We’re aware that only with a combination of reflection and hard work can we help the community to have the impact that we hope it can have.

(We've slightly edited this post based on feedback in comments below.)

[1] While we are not focused on “top of funnel” growth, we think that growth in these more “mid funnel” programs (which help people who are already involved find a way to contribute) is very valuable.

[2] Morale dipped around the FTX collapse, but I think that it's generally true that morale has been steady as we've grown quickly.

[3] This survey presumably suffers from response bias. Respondents to the survey also indicated how frequently they use the Forum; using this data to match respondents with cohorts from the general population, we estimate that these numbers are inflated by approximately a factor of 2.

Several nitpicks:

I realise there are legal and other constraints, so maybe I am being harsh, but overall, several components of this post seemed not very "real" or straightforward relative to what I would usually expect from this sort of EA org update.

Hey Ryan, thanks for writing this up - keen to explain this and reflect more.

First, I do think that I could have written this post better: e.g. by giving the disclaimer about not focusing on FTX up front.

I think that a lot of this is a substantive disagreement about how CEA’s year went, which I think might be driven by a substantive disagreement about what CEA is.[1]

I’m aware that some people (possibly including you) have a model that’s a bit like “CEA is responsible for what happens in the EA community. A big bad thing happened to the EA community, so CEA is responsible, and so they had a pretty bad year.” (I expect that this is a bit of an oversimplification of your view, but hopefully it helps make the direction clear.)

An oversimplification of my view is “CEA is responsible for running EAG, EAGx, UGAP, groups support, virtual programs, the EA Forum, EA.org. A couple of these things had middling years, but many of them seemed to grow or improve significantly (like 5x, 2.9x growth). So CEA had a pretty good year, and “a year of continued growth” is a good summary.”

The key way in which that is an oversimplification is that the Community Health & Special Projects team does have a broader mandate: scanning for risks and trajectory changes to the community and trying to address them. As mentioned in that section, we clearly didn’t prioritise or eliminate this risk; we’re reflecting on how much that was a mistake, versus a good decision (to focus on other significant risks). We are reflecting on what we should do differently to reduce this and related risks in the future, and we’ll communicate more about that in due course. As I say in response to Larks, there may well be things that we end up thinking were big mistakes here, that should cause us to course-correct (and should go on our mistakes page). But we’re not yet confident whether there were mistakes, or what they were, so we didn’t want to add them in this post yet. I should have noted that in that section though; I’ll go and edit.

So one way I think of this is, should CEA’s annual review be kinda the same thing as EA’s annual review? If I were writing EA’s annual review, there would be a bunch of negative stuff in there, and the overall tone would be pretty different to the above. If this is what CEA’s annual review should be, I did a pretty bad job and you’re right.

Or should it be more like Rethink Priorities’[2] annual review? I think that Rethink Priorities could reasonably say “Our core work went well! (Footnote: though its impact may be attenuated by one of EA’s major funders blowing up in a very visible way. We’ll reflect on what that changes for us.)” I think there’s a legit version of CEA’s annual review that is like this, if you view CEA as more being responsible for running EAG, groups support, etc.

Overall, I think that CEA is clearly somewhere in between these extremes - we’re more focused on and accountable for how EA overall goes than RP are. But my current take, based on how I view CEA's role/mandate, is that it’s a bit more on the “Rethink Priorities” end, whereas I guess you think it’s more on the “EA annual review” end. I expect that this partly explains why I took a different approach to the annual review.

Going through your nitpicks:

To sum up, despite the big-picture disagreement above, I think that you’re right that we should have added a bit more on the setbacks and caveats to the big picture. But my perspective is that the big picture on CEA's year really is positive.

[1] As a meta point, I’m aware that I’m clearly biased to think that CEA went well, and to want to say that CEA went well. This post is an attempt to accurately convey the big picture as I see it, but I’m aware that I may be biased here! I think that I can speak somewhat definitively to how I view CEA’s scope though.

[2] I chose Rethink Priorities kind of at random as an example; this could be many EA orgs, and I don't mean it to reflect anything on RP in particular.

Thanks for the response. Out of the four responses to nitpicks, I agree with the first two. I broadly agree about the third, forum quality. I just think that peak post quality is at best a lagging indicator: if you have higher volume and even your best posts are not as good anymore, that would bode very poorly. Ideally, the forum team would consciously trade off between growth and average post quality, in some cases favouring the latter, e.g. by performing interventions that would improve average quality even if they slowed growth. And the fourth, understatement, I don't think we disagree that much.

As for summarising the year, it's not quite that I want you to say that CEA's year was bad. In one sense, CEA's year was fine, because these events don't necessarily reflect negatively on CEA's current operations. But in another important sense, it was a terrible year for CEA, because these events have a large bearing on whether CEA's overarching goals are being reached. And this could bear on what operating activities should be performed in future. I think an adequate summary would capture both angles. In an ideal world (where you were unconstrained by legal consequences etc.), I think an annual review post would note that when such seismic events happen, the standard metrics become relatively less important, while strategy becomes more important, and the focus of discussion then rests on the actually important stuff. I can accept that in the real world, that discussion will happen (much) later, but it's important that it happens.

I disagree. I think the metric I care about is “quality of the average post that a person reads.”

The Forum will have a long tail of posts that are written by newbies just exploring some area for the first time, or are kinda confused, or are bad takes, etc. Many of these posts are net positive! I’m rather in favor of people having their learning experiences in public. Many times the comments on those posts are good places to recapitulate the best of EA. The good news is that most of those posts don’t get much karma or readership. I’m sure you can think of posts that you don’t like that got lots of karma. There’s a complex conversation to be had there, I hope Lizka will post her draft on that soon. But I’m talking more about the much more common post that sits at 0-20 karma. There are lots of them. But they’re by and large pretty harmless. I don’t want my metric to be reducing them.

Totally, this is what I had in mind - something like the average over posts based on how often they are served on the frontpage.

I don't know how many people use RSS feeds, but they interact somewhat poorly with this, because the algorithmic post prioritization does not affect RSS readers.

Not sure if this fully helps, but you can use a karma cutoff for the RSS feed.

I don't mean for me personally - I just mean it will bias the web analytics, because low-karma posts will look like they make up a smaller fraction of impressions than in reality (assuming most RSS feed users do not apply such a cutoff).

Hm, if you don’t mind the question — what are other significant risks you are thinking about? IMO, the FTX fraud scheme is a big deal if anything deserves to be called a big deal, from what I’ve read in the devastating legal documents about the situation, and given how SBF was framed as a poster child of EA and was a significant funder.

The only more significant risk I can think of is EA funding dangerous AI capabilities research via Anthropic, but that isn’t even unrelated to FTX (since FTX slash Alameda slash their leadership was Anthropic’s main funder). Also, my guess is that mitigating AI risk is not within the Community Health team’s scope.

Thanks for sharing this review.

I'm a bit surprised to see a 'What went badly?' section without at least mentioning FTX. Even a very small mistake in this area would be vastly more important than cost overruns at EAG. Similarly, you mention the criticism contest, but in retrospect I think a red-teaming contest with >200 entries that failed to surface FTX risk was a disappointment.

Hi Larks, as we mentioned in the post (especially the last two sections) we're actively reflecting on what, if anything, we should have done differently around FTX. I'm not yet confident what the conclusion of that will be, so it seemed premature to include it in the mistakes section, but maybe we should have mentioned it still.

Once we're done with our reflection, we'll share more about our takeaways and (if necessary) update our mistakes page etc.

Re criticism contest, agree that it's a disappointment that that contest didn't surface more criticism of FTX. I also think that overall it drove some meaningful and useful criticism of core EA ideas and institutions, and that's worth highlighting. Not sure if we got the right balance between those two sides in the post.

Which criticisms did you find meaningful or useful?

They do mention FTX in the section on community health; the text reads as if they're still trying to figure out what went badly there.

Agree about the contest. Something was submitted but it wasn't about blowup risk and didn't rise to the top.

This update would be more useful if it said more about the main catastrophe that EA (and CEA) is currently facing. For whatever reasons, maybe perfectly reasonable, it seems you chose the strategy of saying little new on that topic, and presenting by and large the updates on CEA's ordinary activities that you would present if the catastrophe hadn't happened. But even given that choice, it would be good to set expectations appropriately with some sort of disclaimer at the top of the doc.

Thanks - that’s a good point. We do discuss this in the latter sections of the post, but I think you’re right that we should have mentioned it up front to set expectations.

"Our attempts in late 2021 and early 2022 to support top uni groups didn’t pay off as we hoped, and we passed on this strand of work to Open Philanthropy in early 2022."

"One downside of handing top university support over to Open Philanthropy is that they only supply funding to groups. We are beginning to investigate whether we can provide advice, support, and retreats to top university groups and group organizers of well established groups."

I'm a bit confused by this pair of points. What was the problem? Why was OP a better fit for this task? How is the support you're now looking to provide different from the support which didn't pay off as you had hoped? How will you avoid duplicating work with OP?

Hi Robert,

Thanks for the questions!

I am just adding a quick response now because I think Max’s response does a good job of covering most of your questions. I would be happy to expand if you like, though.

We are more optimistic now because, as mentioned, the landscape is quite different and we are testing out focusing on different types of support than before. For example, we are not currently planning on restarting the campus specialist program but are investigating things like group organizer retreats for top universities (which was a more well-received aspect of the campus specialist program).

You can read a bit more about why we did this here. We handed the funding side off to OP, and we hoped someone else would take on the support side, but no-one did. OP are currently handling funding only, and we would work on support only, which reduces much of the risk of duplication.

I'll ask someone on the groups team to explain more about why we're more optimistic about our approach now.

On a positive note: cool dashboard :p

More pls.

Thanks for the update! It would be interesting to get more statistics on CEA. Like

(Maybe these exist elsewhere?)