‘We are in favour of making people happy, but neutral about making happy people’

This quotation from Jan Narveson seems so intuitive. We can’t make the world better by just bringing more people into it...can we? It’s also important. If true, then perhaps human extinction isn’t so concerning after all...

I used to hold this ‘person-affecting’ view and thought that anyone who didn’t was, well...a bit mad. However, all it took was a very simple argument to completely change my mind. In this short post I want to share this argument with those who may not have come across it before and demonstrate that a person-affecting view, whilst perhaps not dead in the water, faces a serious issue.

Note: This is a short post and I do not claim to cover everything of relevance. I would recommend reading Greaves (2017) for a more in-depth exploration of population axiology.

The (false) intuition of neutrality

There is no single ‘person-affecting view’, but rather a variety of formulations that all capture the intuition that an act can only be bad if it is bad for someone. Similarly, something can be good only if it is good for someone. Therefore, according to standard person-affecting views, there is no moral obligation to create people, nor any moral good in creating them, because nonexistence means "there is never a person who could have benefited from being created".

As noted in Greaves (2017), the idea can be captured in the following:

Neutrality Principle: Adding an extra person to the world, if it is done in such a way as to leave the well-being levels of others unaffected, does not make a state of affairs either better or worse.

Seems reasonable, right? Well, let’s dig a bit deeper. If adding the extra person makes the state of affairs neither better nor worse, what does it do? Let’s suppose that it leaves the state of affairs exactly as good as the original.

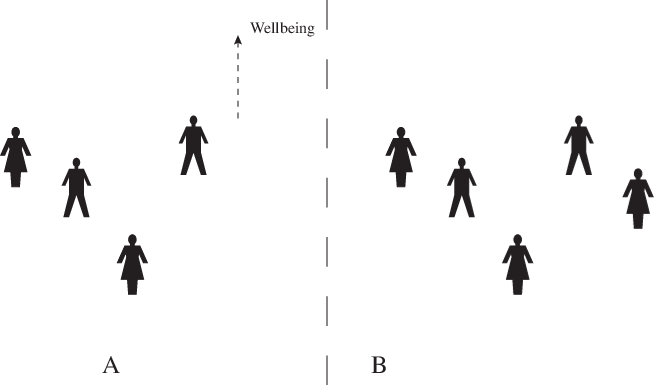

In this case we can say that states A and B below are equally as good as each other. A has four people and B has the same four people with the same wellbeing levels, but also an additional fifth person with (let’s say) a positive welfare level.

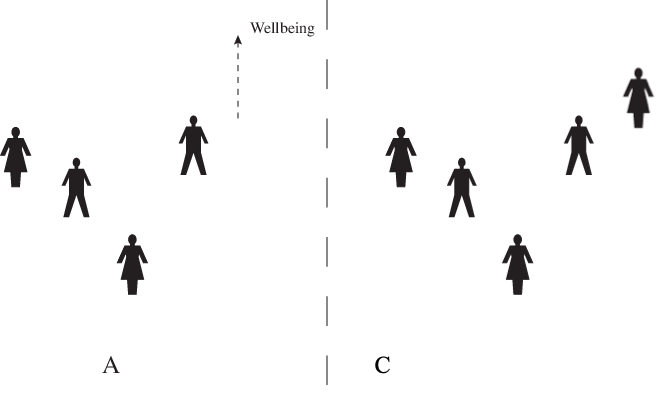

We can also say that states A and C are equally as good as each other. C again has the same people as A, plus the same additional fifth person as in B, but this time with a higher positive welfare level than in B.

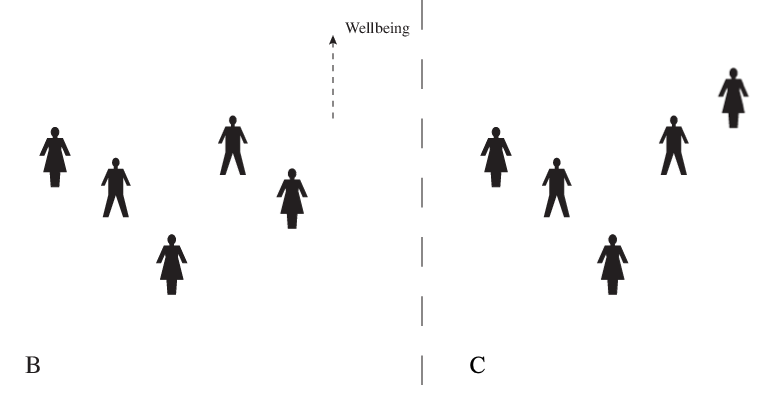

So A is as good as B, and A is as good as C. Therefore surely it should be the case that B is as good as C (invoking a reasonable property called transitivity). But now let’s look at B and C next to each other.

Any reasonable theory of population ethics must surely accept that C is better than B. C and B contain exactly the same people, but one of them is significantly better off in C (with all the others equally well off in both cases). Yet the neutrality principle, combined with transitivity, implies that B and C are equally good, which is clearly wrong.

You might be able to save the person-affecting view by rejecting the requirement of transitivity. For example, you could just say that yes… A is as good as B, A is as good as C, and C is better than B! Well...this just seemed too barmy to me. I’d sooner divorce my person-affecting view than transitivity.
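To make the clash concrete, here is a minimal Python sketch of the argument. The welfare numbers and the helper names `neutral_equal` and `same_people_better` are purely illustrative assumptions (neither the post nor Greaves gives numbers); only the structure of A, B and C follows the text.

```python
# Hypothetical welfare levels: the post specifies no numbers.
A = {"p1": 5, "p2": 5, "p3": 5, "p4": 5}
B = {**A, "p5": 1}  # A plus a fifth person with low positive welfare
C = {**A, "p5": 4}  # A plus the same fifth person, better off than in B


def neutral_equal(x, y):
    """Neutrality Principle: two states are equally good if the larger one is
    just the smaller one plus extra people, with everyone else unaffected."""
    small, large = (x, y) if len(x) <= len(y) else (y, x)
    return all(large.get(person) == w for person, w in small.items())


def same_people_better(x, y):
    """x is better than y if both contain the same people, nobody is worse
    off in x, and at least one person is better off in x."""
    return (
        x.keys() == y.keys()
        and all(x[p] >= y[p] for p in x)
        and any(x[p] > y[p] for p in x)
    )


a_equals_b = neutral_equal(A, B)
a_equals_c = neutral_equal(A, C)
c_beats_b = same_people_better(C, B)

# Transitivity of "equally good" demands B ~ C whenever A ~ B and A ~ C,
# which cannot hold together with C being strictly better than B.
contradiction = a_equals_b and a_equals_c and c_beats_b
print(contradiction)  # True
```

All three judgments hold at once, so something has to give: neutrality, transitivity, or the claim that C is better than B.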

Where do we go from here?

If the above troubles you, you essentially have two options:

1. You try to save person-affecting views in some way

2. You adopt a population axiology that doesn’t invoke neutrality (or at least one which says bringing a person into existence can only be neutral if that person has a specific “zero” welfare level)

To my knowledge no one has really achieved number 1 yet, at least not in a particularly compelling way. That’s not to say it can’t be done and I look forward to seeing if anyone can make progress.

Number 2 seemed to me like the best route. As noted by Greaves (2017) however, a series of impossibility theorems have demonstrated that, logically, any population axiology we can think up will violate one or more of a number of initially very compelling intuitive constraints. One’s choice of population axiology then appears to be a choice of which intuition one is least unwilling to give up.

For what it’s worth, after some deliberation I have begrudgingly accepted that the majority of prominent thinkers in EA may have it right: total utilitarianism seems to be the ‘least objectionable’ population axiology. Total utilitarianism just says that A is better than B if and only if total well-being in A is higher than total well-being in B. So bringing someone with positive welfare into existence is a good thing, and bringing someone with negative welfare into existence is a bad thing. Bringing someone into existence with “zero” welfare is neutral. Pretty simple, right?
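As a rough illustration, the total utilitarian rule can be sketched in a few lines of Python. The welfare numbers and the function names `total_welfare` and `better` are assumptions made for this example only.

```python
def total_welfare(state):
    """Sum of everyone's welfare in a state (a dict of person -> welfare)."""
    return sum(state.values())


def better(x, y):
    """Total utilitarianism: x is better than y iff x has higher total welfare."""
    return total_welfare(x) > total_welfare(y)


# Hypothetical starting population of four people.
base = {"p1": 5, "p2": 5, "p3": 5, "p4": 5}

plus_happy = {**base, "p5": 3}     # extra person with positive welfare
plus_unhappy = {**base, "p5": -3}  # extra person with negative welfare
plus_zero = {**base, "p5": 0}      # extra person at the "zero" level

print(better(plus_happy, base))    # True: adding a happy person is good
print(better(base, plus_unhappy))  # True: adding a miserable person is bad
print(better(plus_zero, base), better(base, plus_zero))  # False False: neutral
```

Note that neutrality survives here only at the single “zero” welfare level, which is what blocks the A, B, C argument from earlier.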

Under total utilitarianism, human extinction becomes a dreadful prospect, as it would prevent perhaps trillions of lives that would otherwise have come into existence. Of course, we have to assume these lives would have positive welfare for avoiding extinction to be desirable.

It may be a simple axiology, but total utilitarianism runs into some arguably ‘repugnant’ conclusions of its own. To be frank, I’m inclined to leave that can of worms unopened for now...

References

Broome, J., 2004. Weighing lives. OUP Catalogue. (I took the diagrams from here, with slight edits.)

Greaves, H., 2017. Population axiology. Philosophy Compass, 12(11), p.e12442.

Narveson, J., 1973. Moral problems of population. The Monist, 57(1), pp.62–86.

The intransitivity problem that you address is very similar to the problem of simultaneity or synchronicity in special relativity. https://en.wikipedia.org/wiki/Relativity_of_simultaneity

Consider three space-time points (events) P1, P2 and P3. The point P1 has a future and a past light cone. Points in the future light cone are in the future of P1 (i.e. a later time according to all observers). Suppose P2 and P3 are outside of the future and past light cones of P1. Then it is possible to choose a reference frame (e.g. a non-accelerating rocket) such that P1 and P2 have the same time coordinate and hence are simultaneous space-time events: the person in the rocket sees the two events happening at the same time according to his personal clock. It is also possible to perform a Lorentz transformation towards another reference frame, e.g. a second rocket moving at constant speed relative to the first rocket, such that P1 and P3 are simultaneous (i.e. the person in the second rocket sees P1 and P3 at the same time according to her personal clock). But... it is possible that P3 is in the future light cone of P2, which means that all observers agree that event P3 happens after P2 (at a later time according to all clocks).

So, special relativity involves a special kind of intransitivity: P2 is simultaneous to P1, P1 is simultaneous to P3, and P3 happens later than P2. This does not make space-time inconsistent or irrational, neither does it make the notion of time incomprehensible. The same goes for person-affecting views. In the analogy: the time coordinate corresponds to a person's utility level. A later time means a higher utility. You can formulate a person-affecting axiology that is 'Lorentz invariant' just like in special relativity.

My favorite population ethical theory is variable critical level utilitarianism (VCLU):

https://stijnbruers.wordpress.com/2020/04/26/a-game-theoretic-solution-to-population-ethics/

https://stijnbruers.files.wordpress.com/2018/02/variable-critical-level-utilitarianism-2.pdf

In many EA-relevant cases (e.g. dealing with X-risks), this theory is equivalent to total utilitarianism, except that it avoids the very repugnant conclusion. Situation A involves N extremely happy people. Situation B involves the same N people, now extremely miserable (very negative utility), plus a huge number M of extra people with lives barely worth living (small positive utility). According to total utilitarianism, situation B would be better if M is large enough. I'm willing to bite the bullet of the repugnant conclusion, but this very repugnant conclusion is for me one of the most counterintuitive conclusions in population ethics. VCLU can easily avoid this.
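The arithmetic behind the very repugnant conclusion can be sketched as follows. All numbers (N, the welfare levels, and the values of M) are hypothetical, and the sketch covers only the total utilitarian verdict; it does not attempt to implement VCLU.

```python
def total(groups):
    """Total welfare of a situation given as (head count, welfare per person) pairs."""
    return sum(count * welfare for count, welfare in groups)


N = 10                      # hypothetical number of original people
situation_a = [(N, 100)]    # N extremely happy people


def situation_b(m):
    """The same N people, now extremely miserable, plus m people whose
    lives are barely worth living."""
    return [(N, -100), (m, 1)]


print(total(situation_a))        # 1000
print(total(situation_b(2000)))  # 1000: B only ties with A
print(total(situation_b(2001)))  # 1001: B now beats A under total utilitarianism
```

With these numbers, any M above 2000 makes B come out better on total welfare, even though every one of the original N people is far worse off.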

Thanks, I'll check out your writings on VCLU!