In April, I ran a small and fully anonymous cause prioritization survey of CEA staff members at a CEA strategy retreat. I got 31 responses (out of around 40 people),[1] and I’m summarizing the results here, as it seems that people sometimes have incorrect beliefs about “what CEA believes.” (I don’t think the results are very surprising, though.)

Important notes and caveats:

- I put this survey together pretty quickly, and I wasn’t aiming to use it for a public writeup like this (but rather to check how comfortable staff are talking about cause prioritization, start conversations among staff, and test some personal theories). (I also analyzed it quickly.) In many cases, I regret how questions were set up, but I was in a rush and am going with what I have in order to share something — please treat these conclusions as quite rough.

- For many questions, I let people select multiple answers. This sometimes produced slightly unintuitive or hard-to-parse results; numbers often don’t add up unless you take this into account. (Generally, I think the answers aren’t self-contradictory once this is taken into account.) Sometimes people could also input their own answers.

- People’s views might have changed since April, and the team composition has changed.

- I didn’t ask for any demographic information (including stuff like “Which team are you on?”).

- I also asked some free-response questions, but haven’t included them here.

Rough summary of the results:

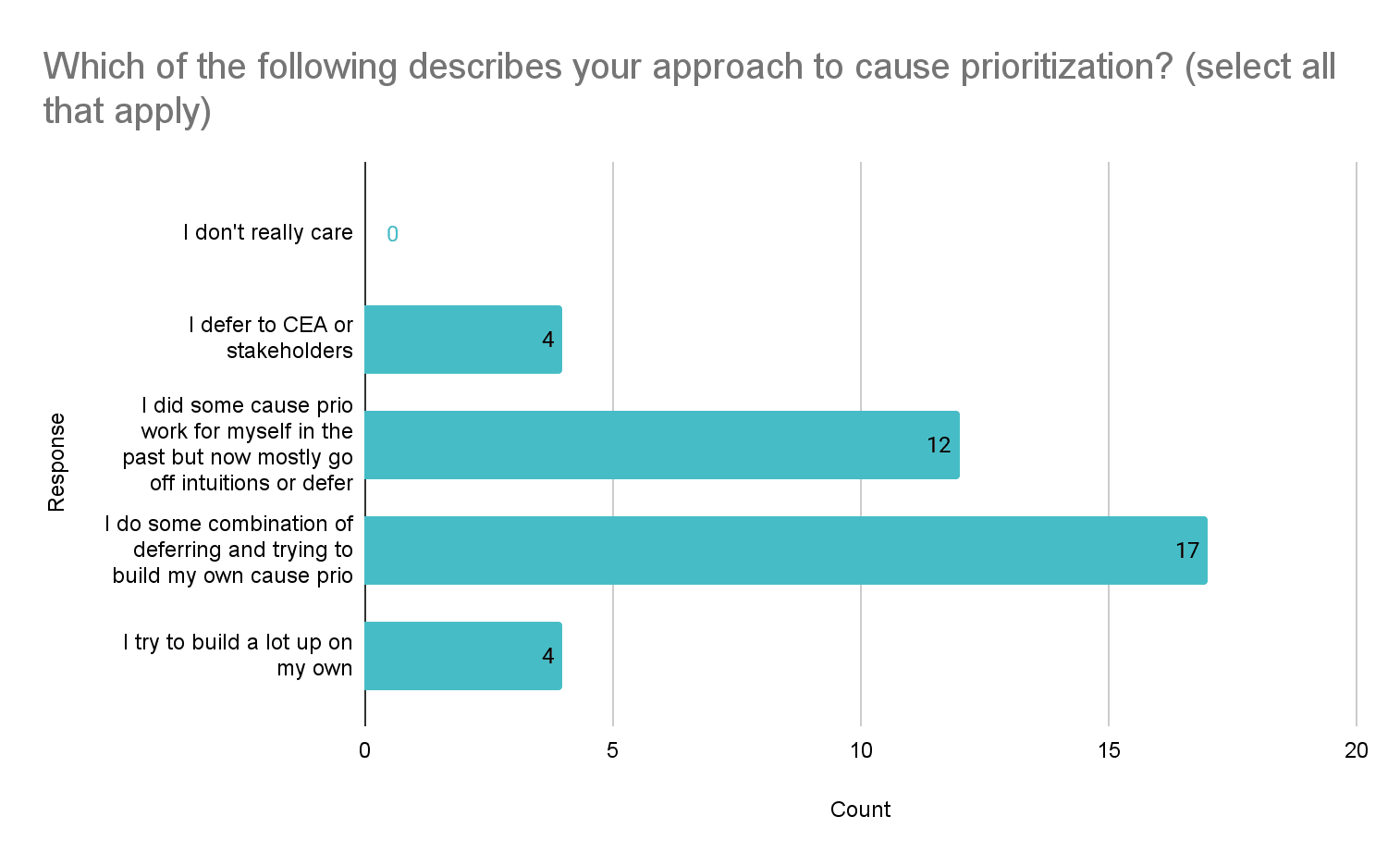

- Approach to cause prioritization: Most people at CEA care about doing some of their own cause prioritization, although most don’t try to build up the bulk of their cause prioritization on their own.

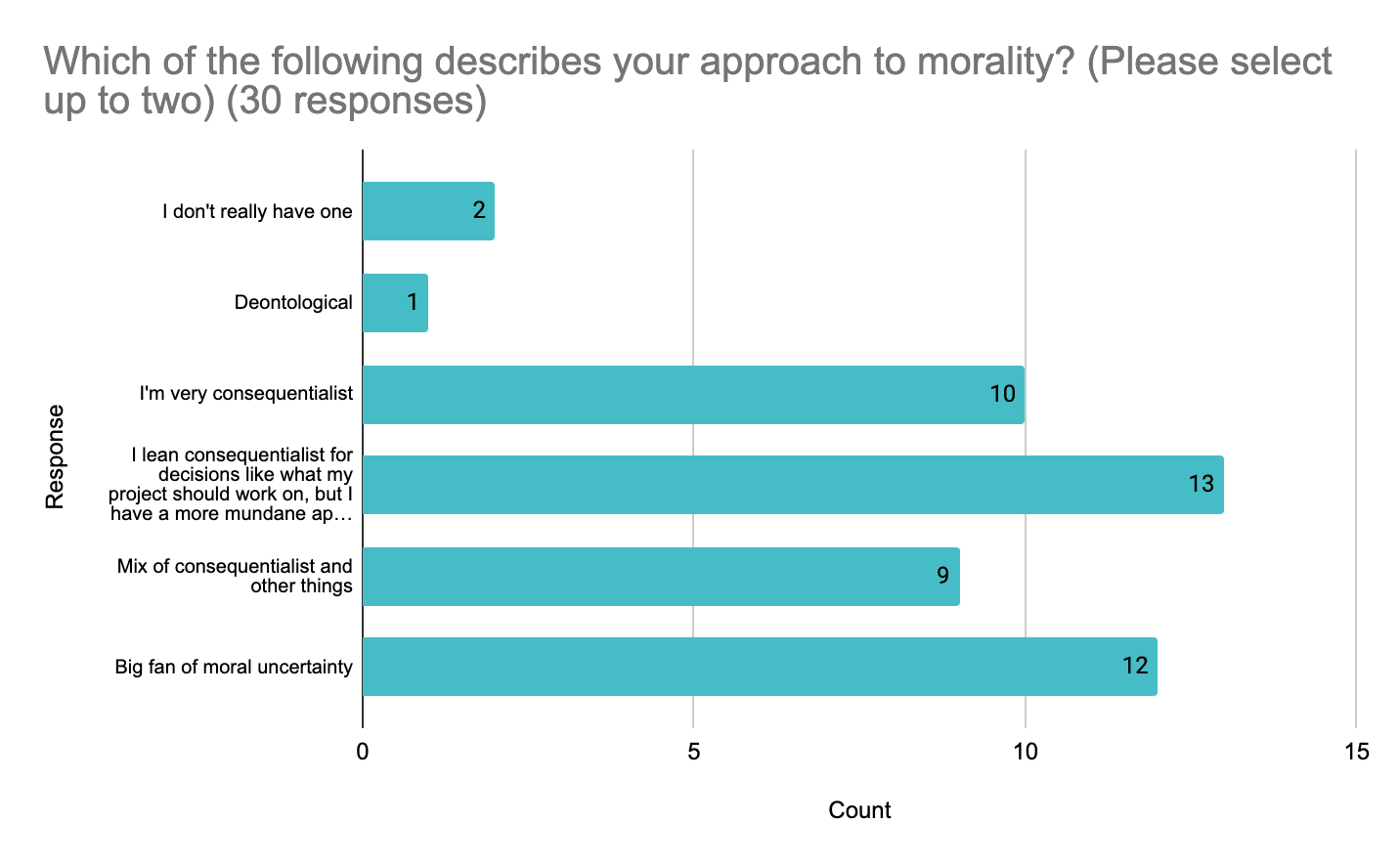

- Approach to morality: About a third of respondents said that they’re “very consequentialist,” and many said that they “lean consequentialist for decisions like what their projects should work on, but have a more mundane approach to daily life.” Many also said that they’re “big fans of moral uncertainty.”

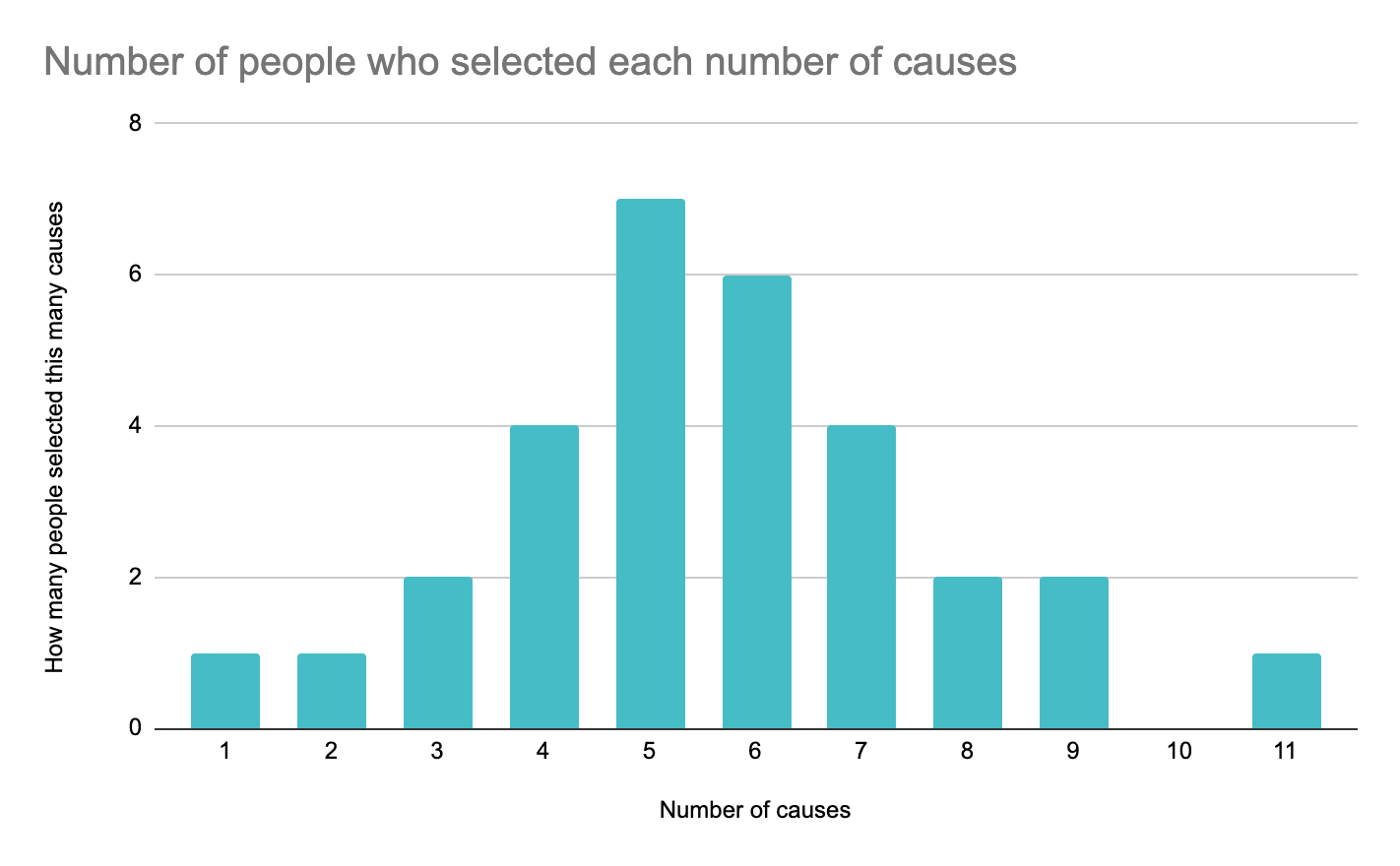

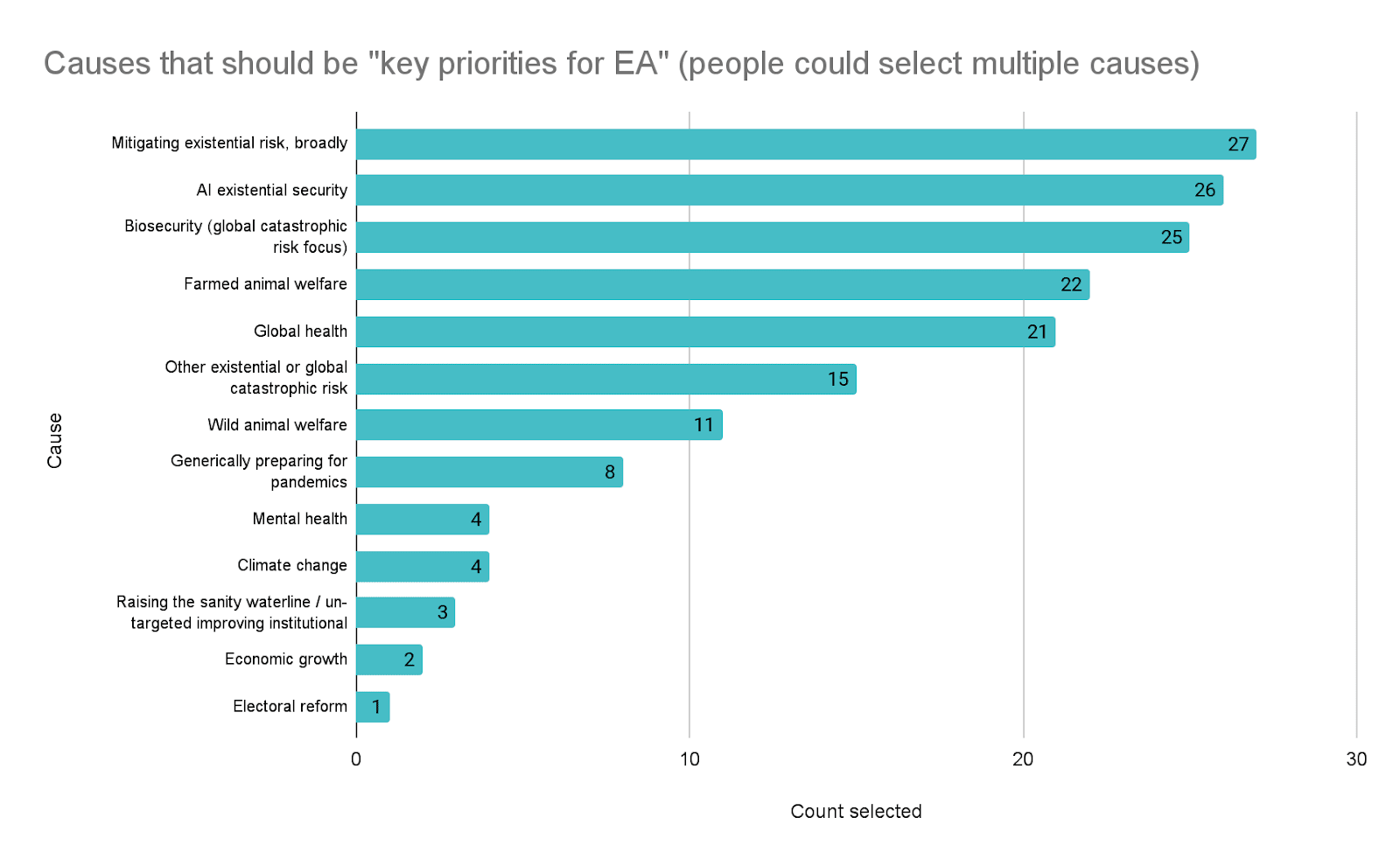

- Which causes should be “key priorities for EA”: people generally selected many causes (median was 5), and most people selected a fairly broad range of causes. Two (of 30) respondents chose only causes commonly classified as “longtermist/x-risk-focused”; everyone else chose at least one cause outside that category. The top selections were Mitigating existential risk, broadly (27), AI existential security (26), Biosecurity (global catastrophic risk focus) (25), Farmed animal welfare (22), Global health (21), Other existential or global catastrophic risk (15), Wild animal welfare (11), and Generically preparing for pandemics (8). (Other options on the list were Mental health, Climate change, Raising the sanity waterline / un-targeted improving institutional decision-making, Economic growth, and Electoral reform.)

- Some highlights from more granular questions:

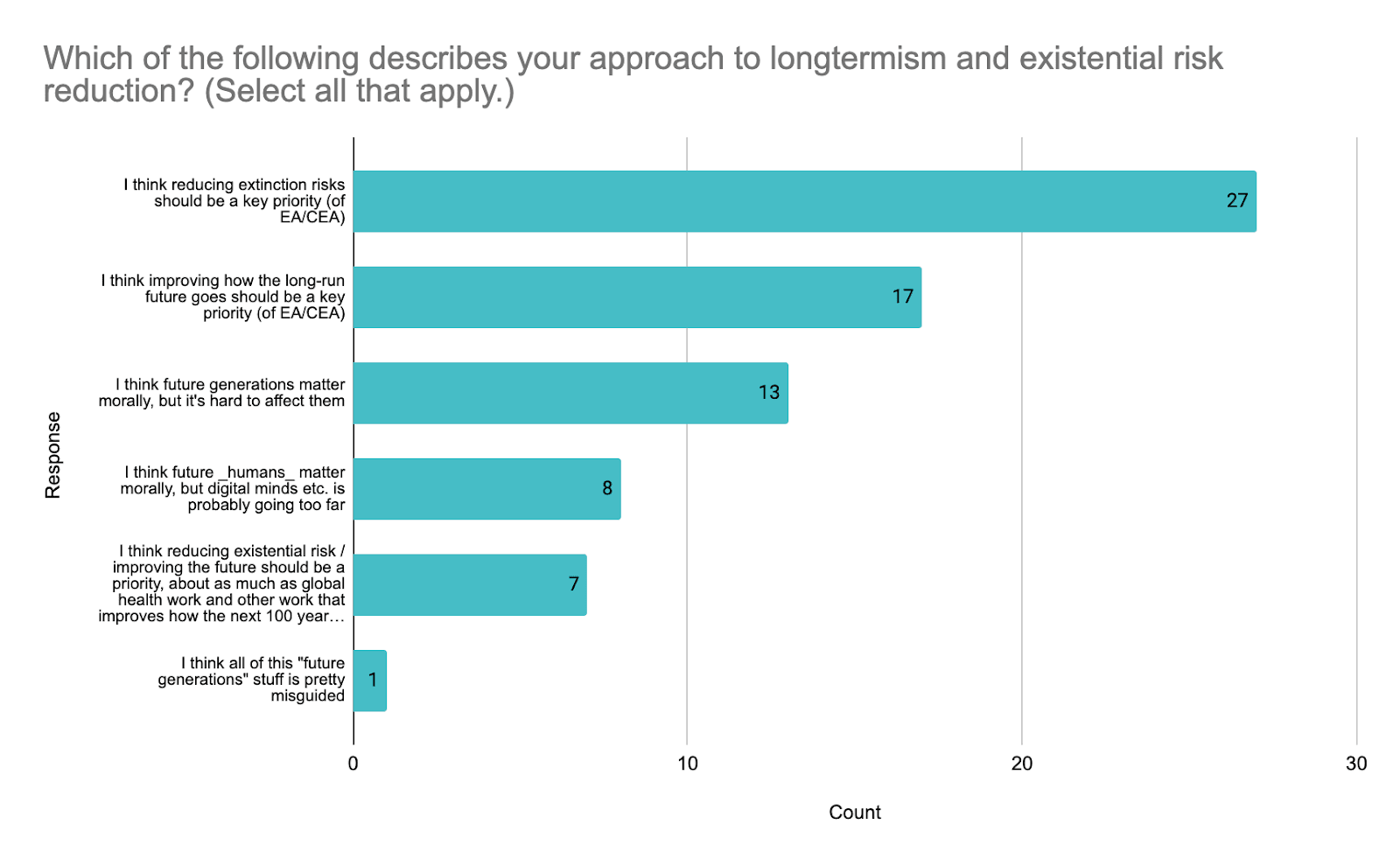

- Most people selected “I think reducing extinction risks should be a key priority (of EA/CEA)” (27). Many selected “I think improving how the long-run future goes should be a key priority (of EA/CEA)” (17), and “I think future generations matter morally, but it’s hard to affect them.” (13)

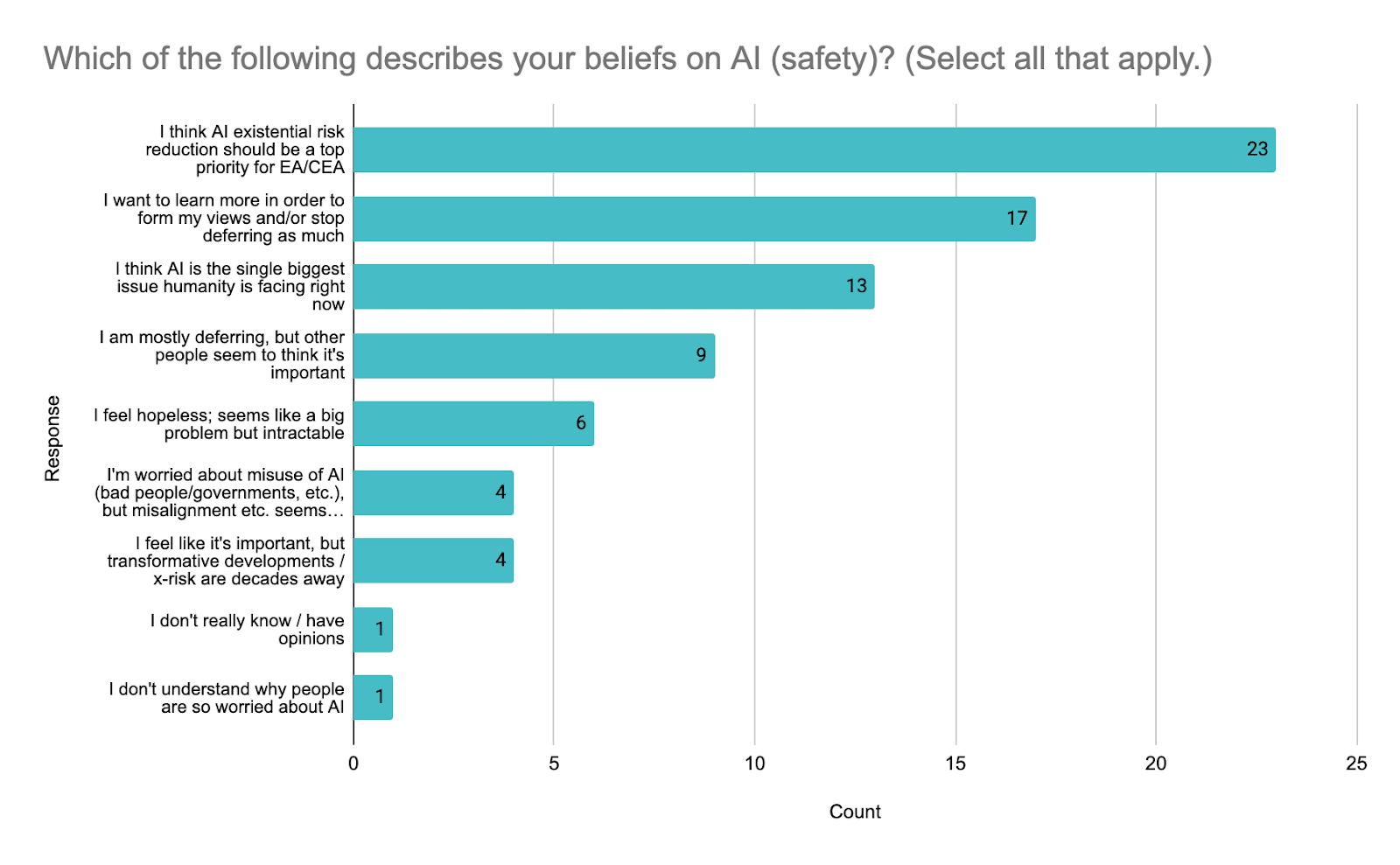

- Most people selected “I think AI existential risk reduction should be a top priority for EA/CEA” (23) and many selected “I want to learn more in order to form my views and/or stop deferring as much” (17) and “I think AI is the single biggest issue humanity is facing right now” (13). (Some people also selected answers like “I'm worried about misuse of AI (bad people/governments, etc.), but misalignment etc. seems mostly unrealistic” and “I feel like it's important, but transformative developments / x-risk are decades away.”)

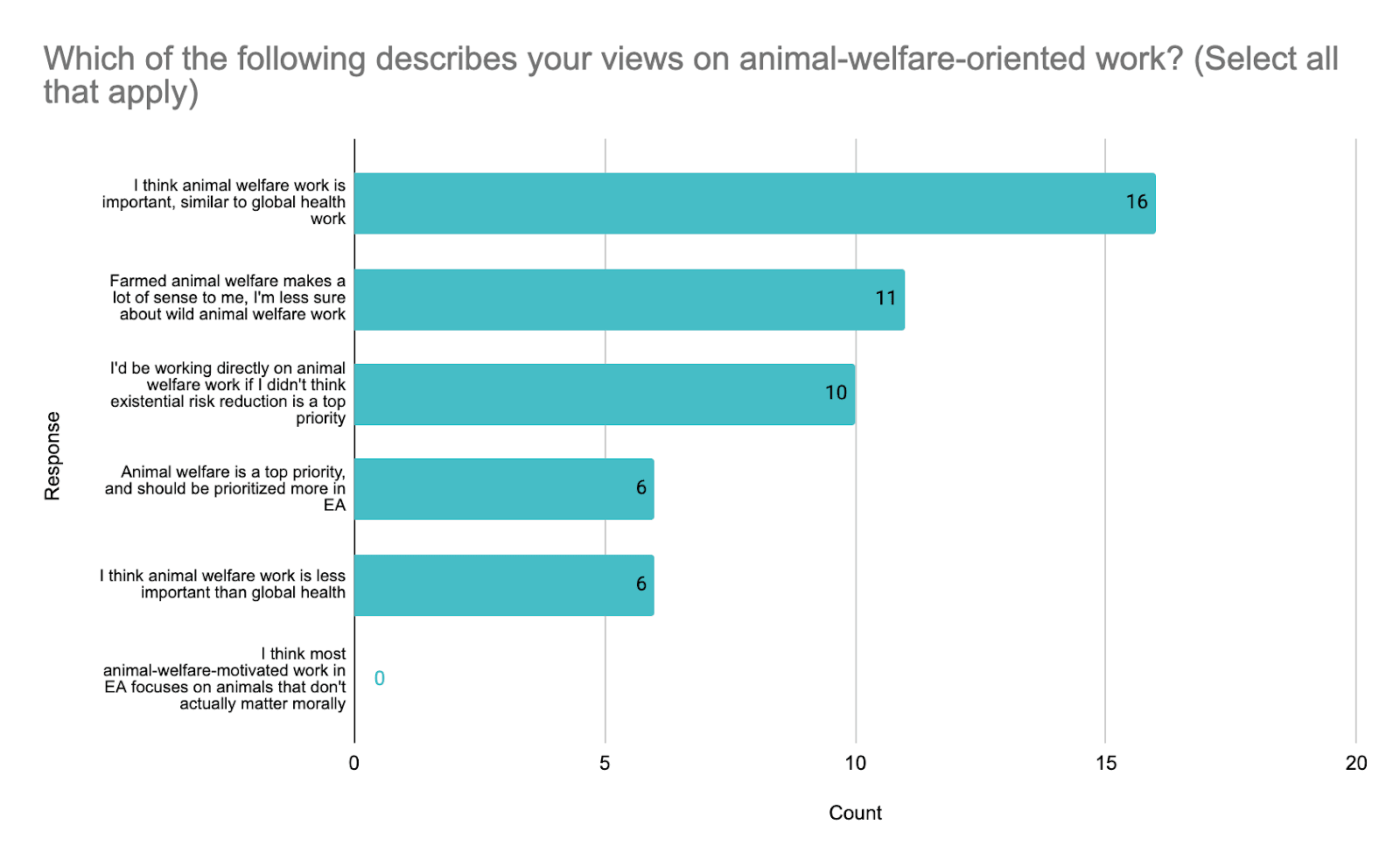

- Most people (22) selected at least one of “I think animal welfare work is important, similar to global health work” (16) and “I'd be working directly on animal welfare work if I didn't think existential risk reduction is a top priority.” (10)

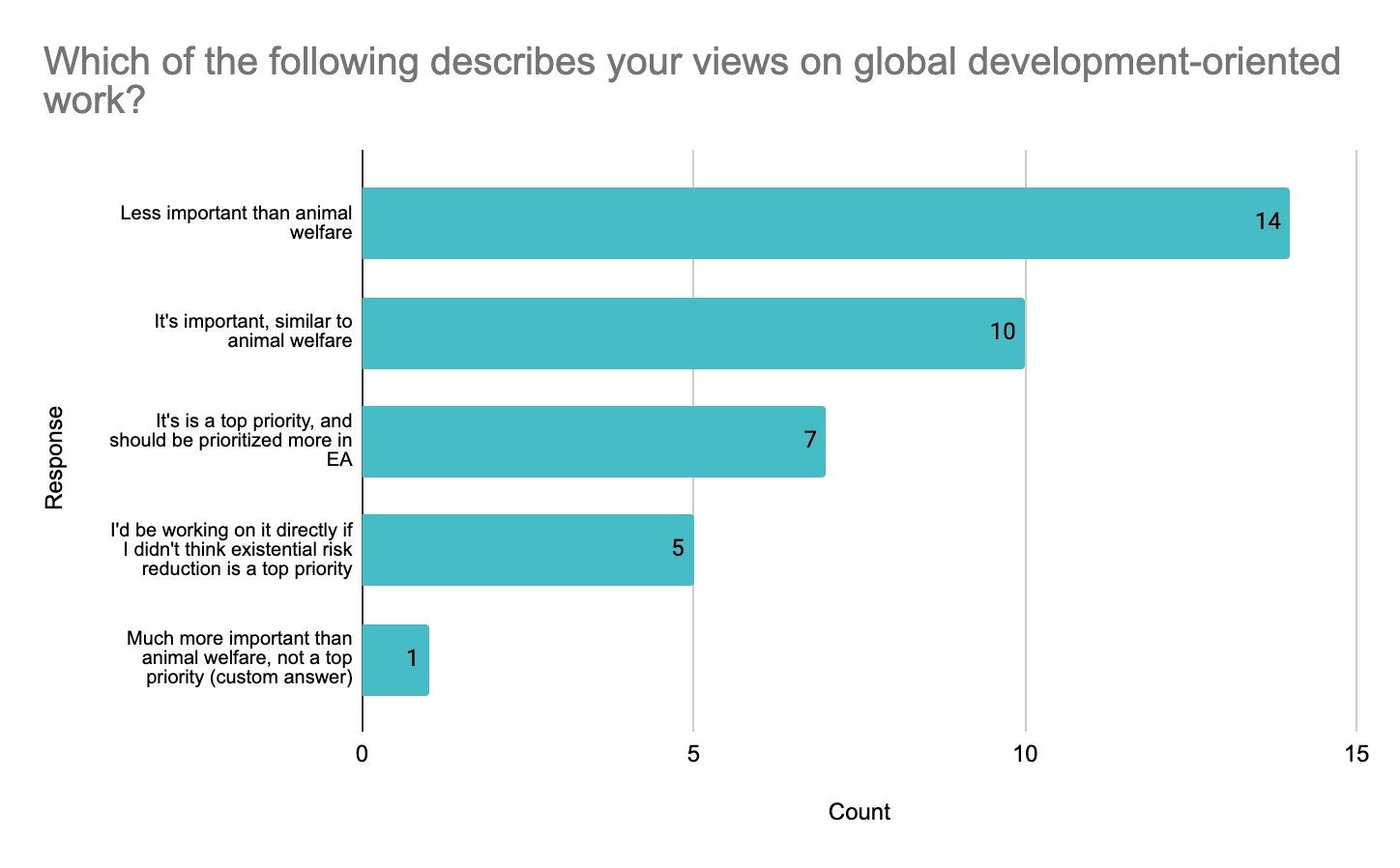

- Slightly fewer than half of respondents (14) say global health work is less important than animal welfare work, while 17 selected at least one of “It's important, similar to animal welfare,” “It's a top priority, and should be prioritized more in EA,” and “I'd be working on it directly if I didn't think existential risk reduction is a top priority.”

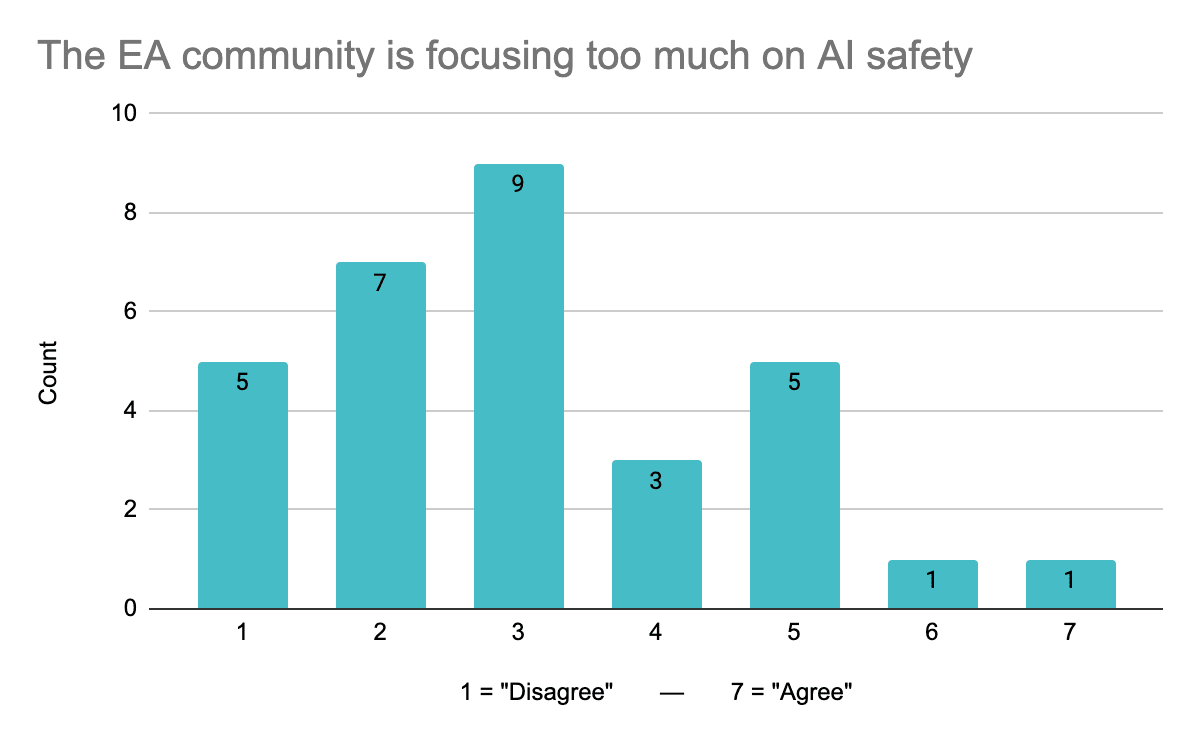

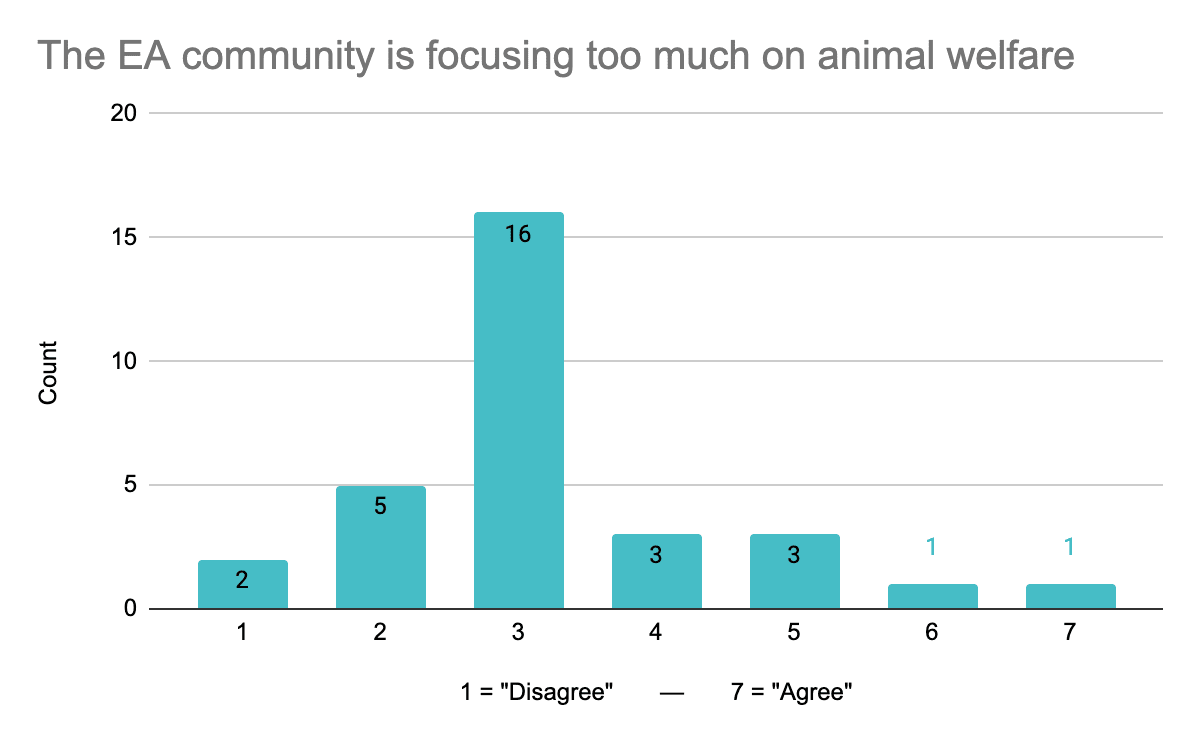

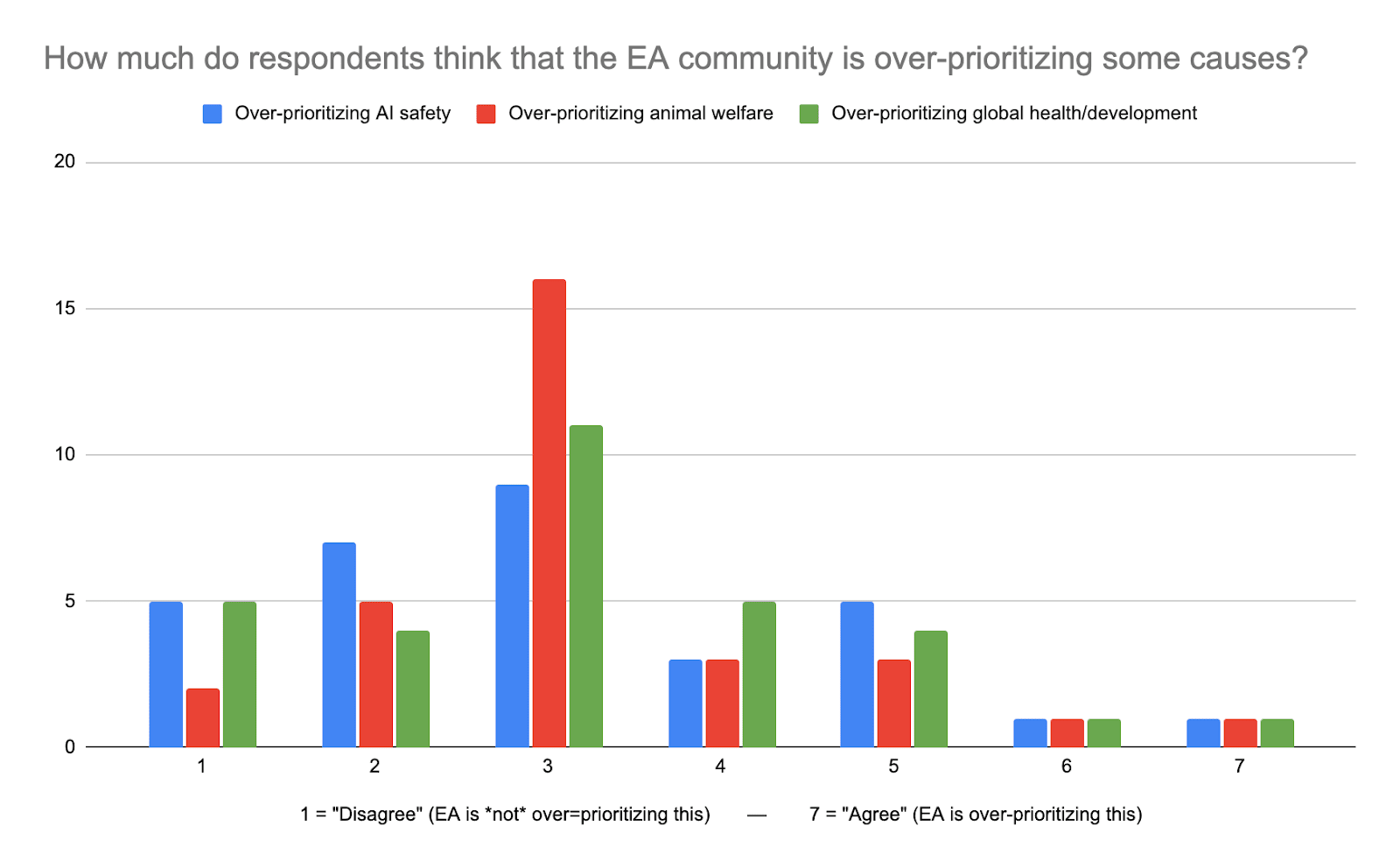

- Views relative to what the EA community is doing: respondents generally didn’t think that the EA community was over-prioritizing AI safety, animal welfare, or global health/development. For each of the three areas, about 6 people (out of ~30 respondents) thought it was at least a bit over-prioritized.

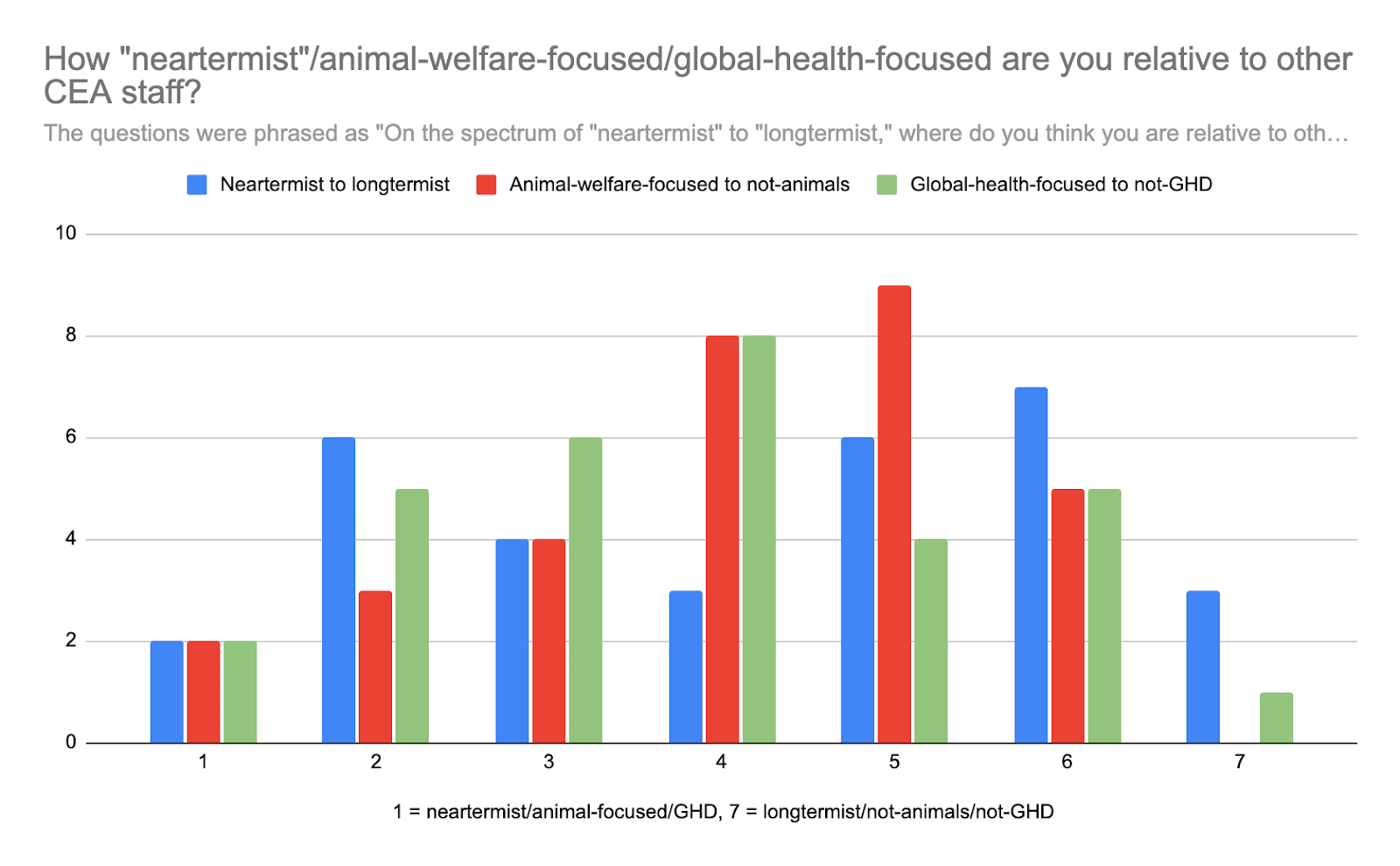

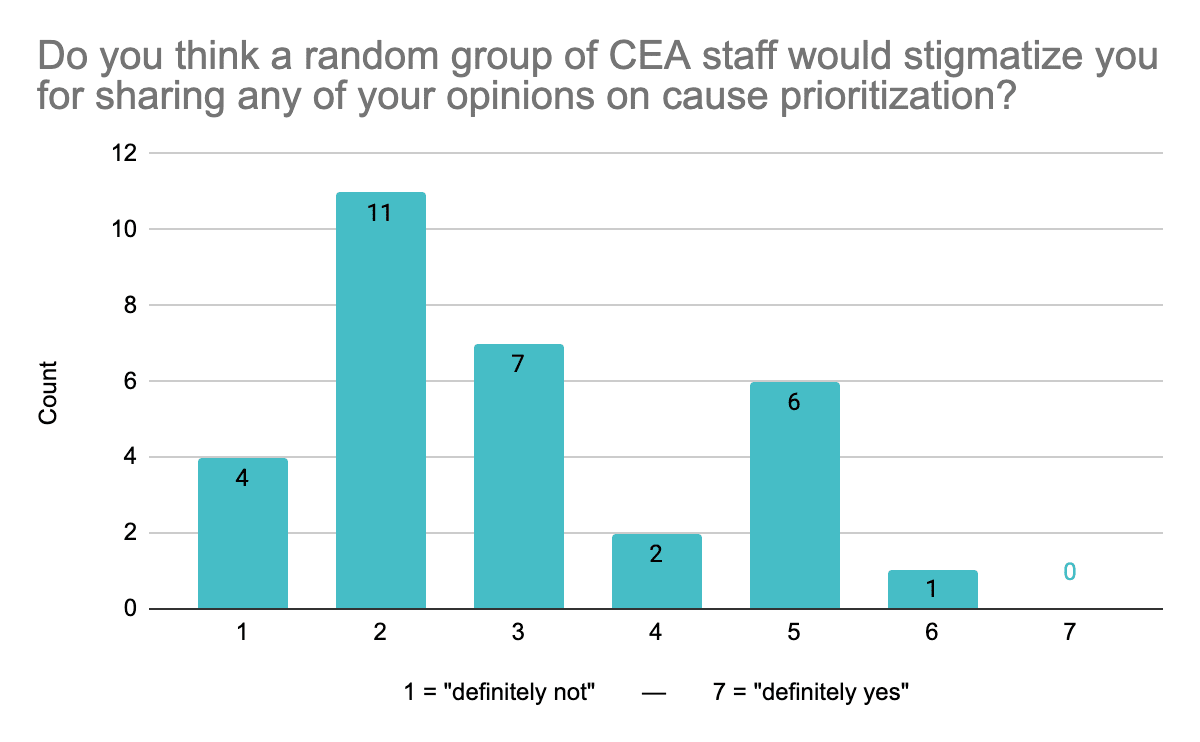

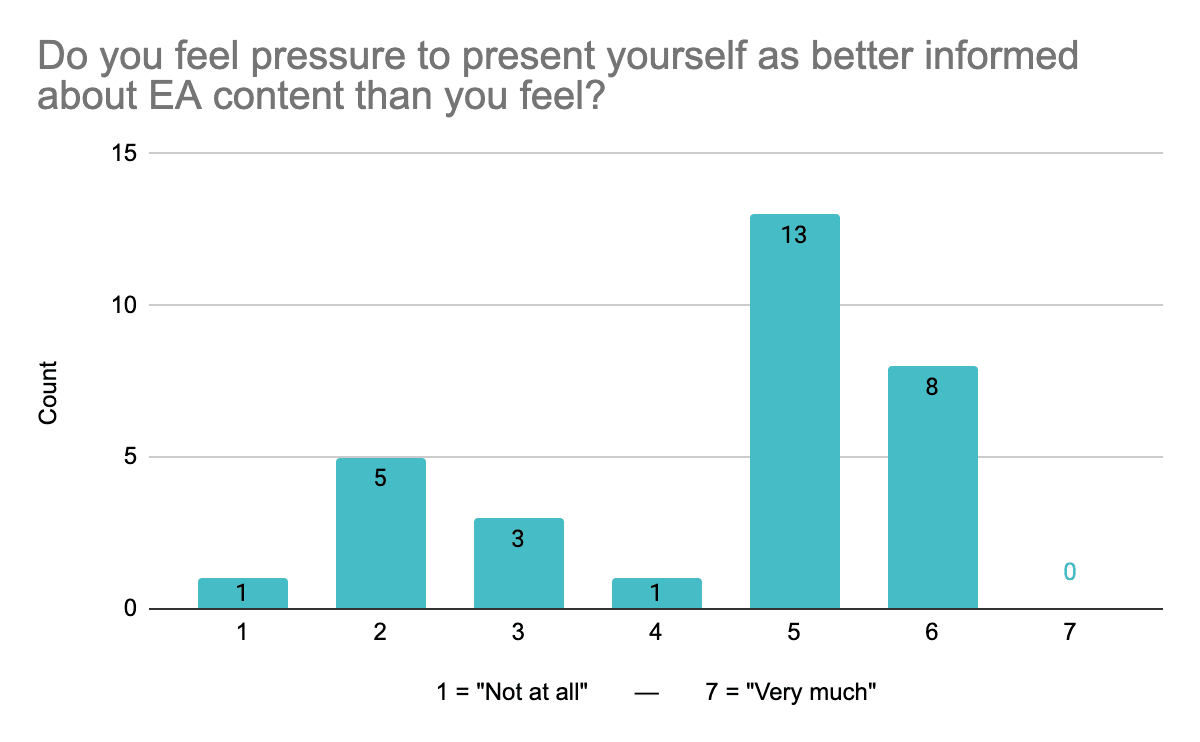

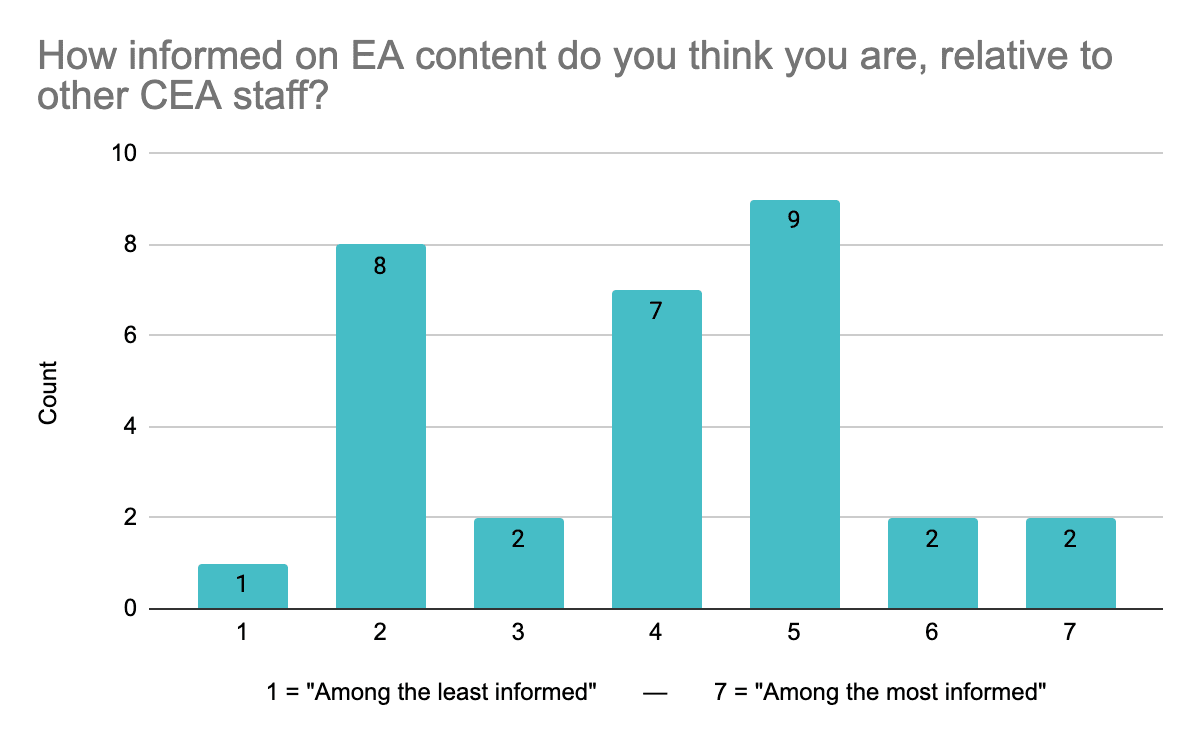

- Appendix: views relative to CEA and experience with cause prio at CEA: I asked a variety of questions aimed at seeing whether staff felt free to disagree about cause prioritization, and how staff perceived their cause prioritization relative to others at CEA. Broadly, most people didn’t think they’d be stigmatized for their cause prio, but a few thought they would be (I couldn’t find real patterns in the cause prio of people who were on the higher end of this). A number of people seemed to feel some pressure to present themselves as better informed about EA content than they feel. People had a pretty reasonable distribution of how informed on EA content they thought they were, relative to other CEA staff (e.g. about half of people thought they were above average, although individual self-assessments could still be totally off). The median respondent thought they were slightly less focused on animal welfare than other CEA staff.

1. How people approach cause prioritization and morality

Approaches to cause prioritization

I asked “Which of the following describes your approach to cause prioritization? (select all that apply)”, and the options were:

- I don't really care

- I defer to CEA or stakeholders

- I did some cause prio work for myself in the past but now mostly go off intuitions or defer

- I do some combination of deferring and trying to build my own cause prio

- I try to build a lot up on my own

Results:

- 19/31 respondents said they’re trying to build up their own cause prioritization at least a bit (4 of these selected “I try to build a lot up on my own”, and 17 selected “I do some combination of deferring and trying to build my own cause prio”).

- Of the other 12 people, 10 selected “I did some cause prio work for myself in the past but now mostly go off intuitions or defer” (2 of the 19 people who still do some cause prio of their own also selected this option).

- Two people simply selected “I defer to CEA or stakeholders,” and no one selected “I don’t really care.”
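Because this was a select-all-that-apply question, the option counts above don’t sum to the number of respondents. As a rough sanity check, here is a minimal sketch of the inclusion-exclusion arithmetic, assuming the 19 “building their own” respondents are exactly those who selected at least one of the two “build” options. The function and variable names are illustrative only; they are not part of the survey analysis.

```python
def overlap(count_a: int, count_b: int, at_least_one: int) -> int:
    """Number of people who selected both options A and B.

    Inclusion-exclusion: |A and B| = |A| + |B| - |A or B|.
    """
    both = count_a + count_b - at_least_one
    if both < 0 or both > min(count_a, count_b):
        raise ValueError("counts are inconsistent with each other")
    return both


# Counts reported above for the cause prioritization question.
total_respondents = 31
build_a_lot = 4    # "I try to build a lot up on my own"
combination = 17   # "I do some combination of deferring and trying to build my own cause prio"
building_own = 19  # selected at least one of the two options (assumption, see lead-in)

both_options = overlap(build_a_lot, combination, building_own)
others = total_respondents - building_own

print(both_options)  # 2 people selected both "build" options
print(others)        # 12 people selected neither
```

The same arithmetic applies to the animal welfare figures in the summary: 16 and 10 selections across 22 people implies that 4 people selected both options.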

Here are the raw counts for how many people selected each response:

Morality

I asked “Which of the following describes your approach to morality? (Please select up to two)” — 30 responses, and the options were:

- I don't really have one

- Deontological

- I'm very consequentialist

- I lean consequentialist for decisions like what my project should work on, but I have a more mundane approach to daily life

- Mix of consequentialist and other things

- Big fan of moral uncertainty

Results:

- 2 people picked 3 options (:)), and the median respondent selected 1.5 options

- The most popular responses were “I lean consequentialist for decisions like what my project should work on, but I have a more mundane approach to daily life” and “Big fan of moral uncertainty”

- 7 people selected only “I’m very consequentialist” (3 more chose that option and something else)

- 5 people selected only “I lean consequentialist for decisions like what my project should work on, but I have a more mundane approach to daily life” (8 more chose that option and something else)

- 2 people selected only “Mix of consequentialist and other things” (7 more selected that option and other stuff)

- 1 person selected only “I don't really have one” (1 more also chose “I lean consequentialist for decisions like what my project should work on, but I have a more mundane approach to daily life”)
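A median of 1.5 options can look odd, but it is what you get with an even number of respondents when the two middle values differ. The sketch below uses a made-up distribution (only the 30 responses and the 2 people who picked 3 options come from the results above; the split between one and two options is an assumption for illustration).

```python
from statistics import median

# Illustrative distribution of "number of options selected" per respondent.
# Only the total (30) and the two people with 3 options are from the survey;
# the 15/13 split between one and two options is a hypothetical example.
option_counts = [1] * 15 + [2] * 13 + [3] * 2

assert len(option_counts) == 30

# With 30 values, the median is the mean of the 15th and 16th sorted values,
# here 1 and 2, which gives 1.5.
print(median(option_counts))
```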

Response counts:

2. What should we prioritize?

I asked a number of questions about specific causes and worldviews.

Causes that should be priorities for EA

A. I asked “Which of the following should be key priorities for EA? (mark all that apply.)” The options were (not in this order):

- Mitigating existential risk, broadly

- AI existential security

- Biosecurity (global catastrophic risk focus)

- Farmed animal welfare

- Global health

- Other existential or global catastrophic risk

- Wild animal welfare

- Generically preparing for pandemics

- Mental health

- Climate change

- Raising the sanity waterline / un-targeted improving institutional decision-making

- Economic growth

- Electoral reform

- There was also a write-in: “Growing the number of people working on big problems (= building effective altruism?)”

Results:

- 30 people responded, and the median respondent selected 5 options.

- Most people think the following should be key priorities:

- Mitigating existential risk, broadly (27)

- AI existential security (26)

- Biosecurity (global catastrophic risk focus) (25)

- Farmed animal welfare (22)

- Global health (21)

- “Other existential risk,” wild animal welfare, and generically preparing for pandemics were also popular (see counts below).

- Some relationships between causes (I’ve excluded the smallest causes, as they were only selected by 1-3 people):

- Perfect subsets — the following causes were selected exclusively by people who also selected specific other causes:

- Biosecurity — (only people who also selected) AI existential security

- Other existential or global catastrophic risk — Bio (and AI)

- Generically preparing for pandemics — Farmed animal welfare, Bio (and AI)

- Mental health — Bio (and AI) (perhaps surprisingly, not global health)

- (Almost everyone who selected wild animal welfare also selected farmed animal welfare, except one person.)

- Unsurprisingly, the less-popular selections were chosen by people who selected more causes on average. Relative to that group, wild animal welfare and climate change were selected by people who selected fewer causes (medians of 6 and 7, respectively).
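To make the “perfect subset” idea concrete: cause X is a perfect subset of cause Y if everyone who selected X also selected Y. Here is a minimal sketch of that check. The responses are invented for illustration (the survey data isn’t public), and the helper names are hypothetical.

```python
# Hypothetical multi-select responses, one set of selected causes per respondent.
# These are NOT the real survey responses.
responses = [
    {"AI existential security", "Biosecurity", "Farmed animal welfare"},
    {"AI existential security", "Global health"},
    {"AI existential security", "Biosecurity", "Mental health"},
    {"Farmed animal welfare", "Global health"},
]


def selectors(cause: str) -> set[int]:
    """Indices of the respondents who selected the given cause."""
    return {i for i, picks in enumerate(responses) if cause in picks}


def is_perfect_subset(cause: str, other: str) -> bool:
    """True if at least one person selected `cause` and all of them also selected `other`."""
    chosen = selectors(cause)
    return bool(chosen) and chosen <= selectors(other)


print(is_perfect_subset("Biosecurity", "AI existential security"))  # True
print(is_perfect_subset("AI existential security", "Biosecurity"))  # False
print(is_perfect_subset("Mental health", "Global health"))          # False
```

Note that the relationship is one-directional: in this toy data everyone who picked Biosecurity also picked AI, but not the other way around.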

Raw counts for causes that staff selected:

Here’s the distribution of how many people selected a given number of causes:

More granular views on longtermism/existential risk reduction, AI safety, animal welfare, and global health/wellbeing/development

“Which of the following describes your approach to longtermism and existential risk reduction? (Select all that apply.)”

“Which of the following describes your beliefs on AI (safety)? (Select all that apply.)”

“Which of the following describes your views on animal-welfare-oriented work?”

“Which of the following describes your views on global development-oriented work?”

Views on the EA community’s current focus on AI safety, animal welfare, and global health/wellbeing/development

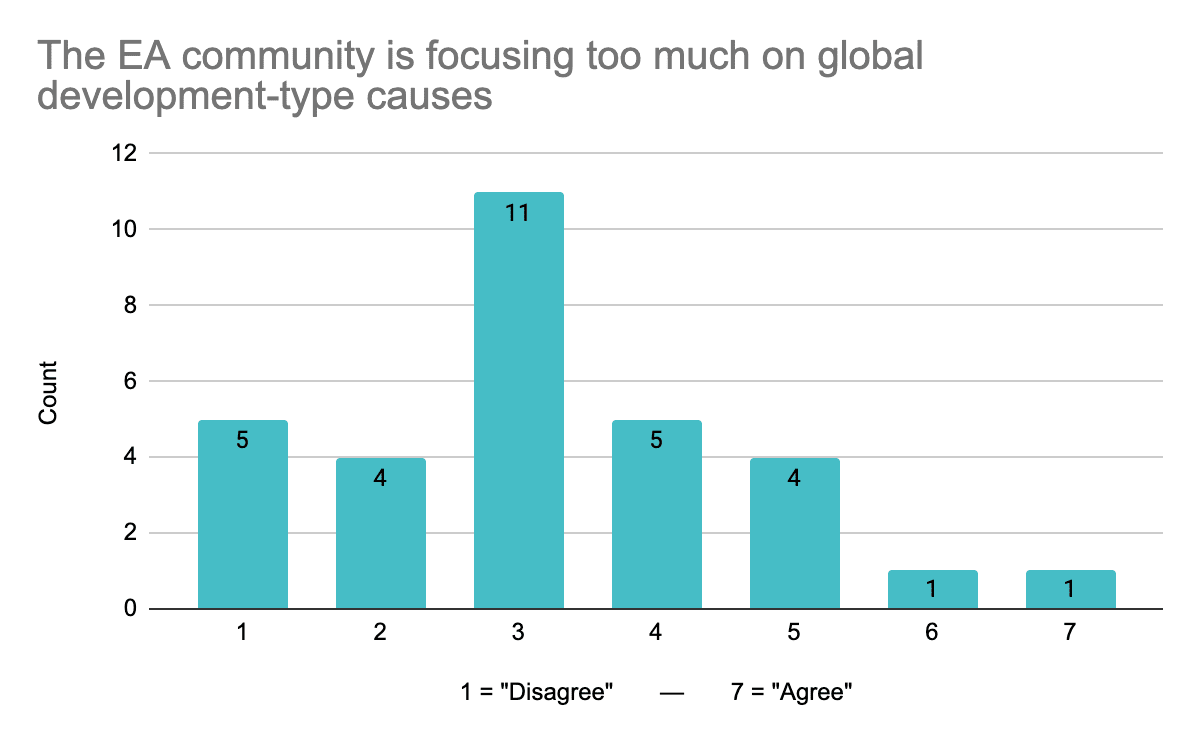

TLDR: CEA staff seem to broadly think things aren’t being overprioritized, with a couple of people disagreeing.[2]

“The EA community is focusing too much on AI safety”

“The EA community is focusing too much on animal welfare”

“The EA community is focusing too much on global development-type causes”

3. Appendix: Where staff think they are relative to others at CEA, pressure to appear a certain way, etc.

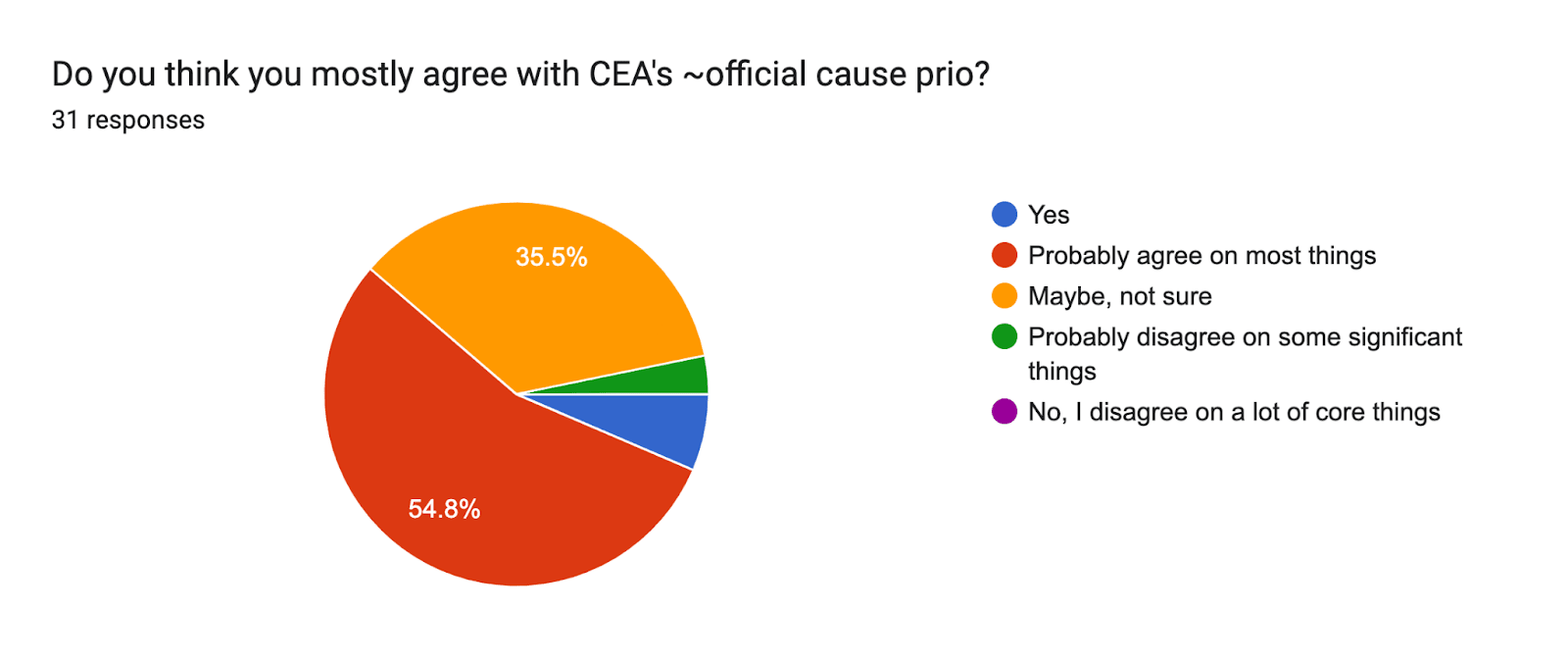

I asked “Do staff agree with ‘CEA’s ~official cause prio’?” I don’t really know what I was asking; we don’t really have an official cause prio (lots of people expressed something like “Do we have an official cause prio?” in the comments of this question). I expect that people interpreted “official cause prio” as “in practice, what CEA does,” although it’s possible that people were trying to guess “leadership’s” cause prio, or something like that. (I think it would have been useful to find out if people felt like they disagreed a lot.) I think I personally put “Maybe, not sure.” Anyway, here are the responses:

“Do you think a random group of CEA staff would stigmatize you for sharing any of your opinions on cause prioritization?” — 7 people are above neutral, which made me a bit worried. Most people think they wouldn’t get stigmatized:

“Do you feel pressure to present yourself as better informed about EA content than you feel?” — most people (21) feel this a decent amount:

“How informed on EA content do you think you are, relative to other CEA staff?”

What staff thought their cause prio was, relative to other CEA staff

[1] This is an OK response rate, but you might imagine that the people who didn’t respond have non-representative beliefs. E.g. they’re the folks who care less about cause prioritization, or who felt that it would take a long time to fill out a survey like this because their beliefs are so complex, etc.

[2] Here’s a combined view:

Thanks, I'm really encouraged by the diversity of thought here. There seems to be a range of beliefs (that aren't necessarily that tightly held) across the staff spectrum.

Definitely a bit less X-risk focused than I would have expected, although perhaps this is at least partially explained by the low response rate.

Thanks!

I would be pretty surprised if the people who didn't respond are significantly/systematically more x-risk-focused, for what it's worth.