By Spencer Greenberg and Amber Dawn Ace

This post is designed to stand alone, but it is also the third of five posts in my sequence of essays about my (Spencer's) life philosophy, Valuism. (Here are the first, second, fourth, and fifth parts.)

Sometimes, people take an important value – maybe their most important value – and decide to prioritize it above all other things. They neglect or ignore their other values in the process. In my experience, this often leaves people feeling unhappy. It also leads them to produce less total value (according to their own intrinsic values). I think people in the effective altruist community (i.e., EAs) are particularly prone to this mistake.

In the first post in this sequence, I introduce Valuism – my life philosophy – and offer some general arguments for its advantages. In this post, I talk about the interaction between Valuism and effective altruism. I argue that the way some EAs think about morality and value is (in my view) empirically false, potentially psychologically harmful, and (in some cases) incoherent.

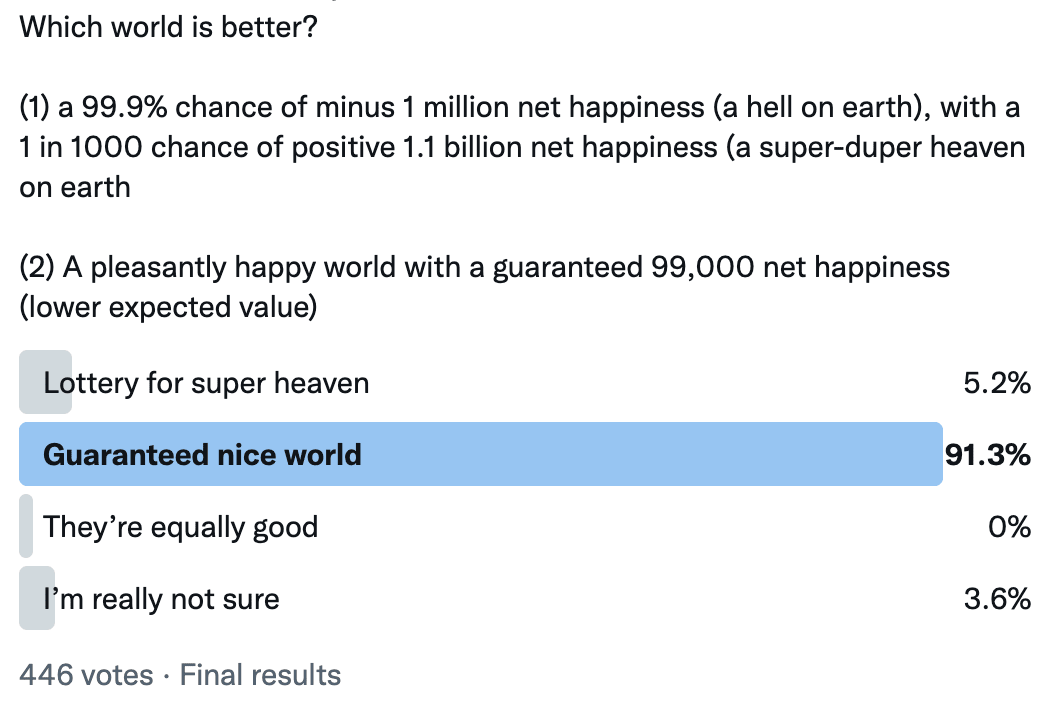

EAs want to improve others’ lives in the most effective way possible. Many EAs identify as hedonic utilitarians (even the ones who reject objective moral truth). They say that impartially maximizing utility among all conscious beings – by which they usually mean the sum of all happiness minus the sum of all suffering – is the only thing of value, or the only thing that they feel they should value. I think this view is not ideal, for three reasons.

1. I think (in one sense) it’s empirically false to say that "only utility is valuable"

Consider a person who claims that “only utility is valuable.”

If we interpret this as an empirical claim about the person’s own values – i.e., that the sum of happiness minus suffering for all conscious beings is the only thing that their brain assigns value to – I think that it’s very likely empirically false.

That is, I don’t think anyone’s brain assigns value only to maximizing utility, even if it’s a very important value of theirs. I can’t prove that literally nobody values only utility maximization, but I’d argue that human brains aren’t built to value only one thing. Nor would we expect evolution to converge on a purely utilitarian psychology, since evolution optimizes for survival and reproduction (a purely utilitarian brain would have been rapidly outcompeted by other brain types if such brains had existed 50,000 years ago).

I think that even the most hard-core hedonic utilitarians do psychologically value some non-altruistic things deep down – for example, their own pleasure (more than the pleasure of everyone else), their family and friends, and truth. However, in my opinion, they sometimes deny this to themselves or feel guilty about it. If you are convinced that your only intrinsic value is utility (in a hedonistic, non-negative-leaning utilitarian sense), you may find it instructive to take a look at these philosophical scenarios I assembled or check out the scenarios I give in this talk about values.

For instance, does your brain actually tell you it’s a good trade (in terms of your intrinsic values) to let a loved one of yours suffer terribly in order to create a mere 1% chance of sparing 101 strangers the same suffering? Does your brain actually tell you that equality doesn’t matter one iota (i.e., that it’s just as good for one person to have all the utility as for the same total to be spread more equally)? Does your brain actually value a world of microscopic, dumb orgasming micro-robots more than a world (of slightly less total happiness) where complex, intelligent, happy beings pursue their goals? Because taken at face value, hedonic utilitarianism doesn’t care about whether a person is your loved one or a stranger, doesn’t care about equality at all, and prefers microscopic orgasming robots to complex beings as long as the former are slightly happier. But, if you consider yourself a hedonic utilitarian, is that actually what your brain values?
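To make the first scenario concrete, here is a rough sketch of the arithmetic, under two simplifying assumptions made only for illustration: each person's suffering counts as the same amount, $S$, and outcomes are compared by impartial, risk-neutral expected hedonic utility.

```latex
\underbrace{0.01 \times 101 \times S}_{\text{expected suffering prevented}}
  \;-\; \underbrace{1 \times S}_{\text{suffering imposed on the loved one}}
  \;=\; 1.01\,S - S \;=\; 0.01\,S \;>\; 0
```

Because the difference is positive for any $S > 0$, this calculation favors the trade, even though the margin is tiny and the person who suffers is someone you love.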

2. It can be psychologically harmful to deny your intrinsic values

Additionally, I think the attitude that there is only one thing of value can lead to severe psychological burnout as people try to push away, minimize or deny their other intrinsic values and “selfish,” non-altruistic desires. I’ve seen this happen quite a few times. Here’s Tyler Alterman’s personal account of this if you’d like to see an example. And here’s a theory of how this burnout happens.

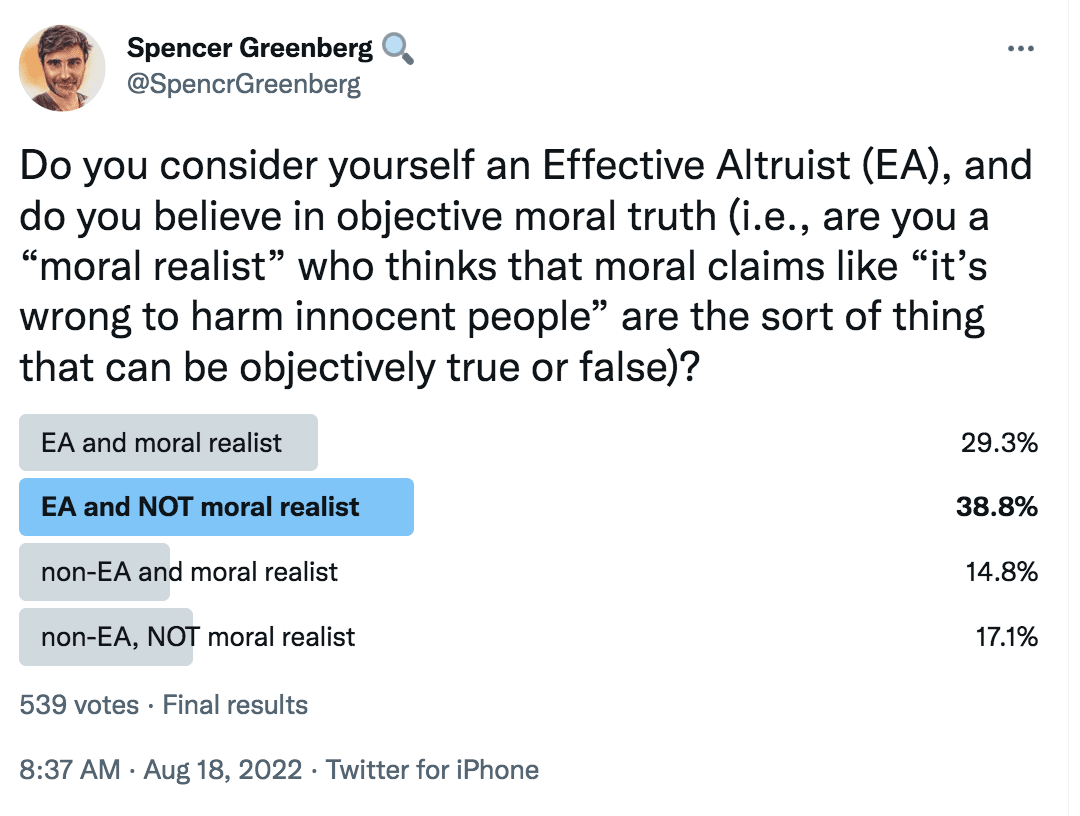

3. I think (in one sense) it’s incoherent to only value utility if you don't believe in moral realism

When coupled with a view that there is no objective moral truth, I think it is, in most cases, philosophically incoherent to claim that total hedonic utility is all that matters.

If you believe in objective moral truth, it may make sense to say, “I value many things, but I have a moral obligation to prioritize only some of them” (for example, you might be convinced by arguments that you are objectively morally obliged to promote utility impartially even though that’s not the only value you have).

However, many EAs, like me, don’t believe in objective moral truth. If you don’t think that things can be objectively right or wrong, it doesn’t make sense (I claim) to say that you “should” prioritize maximizing utility for all of humanity over other values – what does this “should” even mean? Well, there are some answers for what this “should” could mean that philosophers and lay people have proposed, but I find them pretty weak.

For a much more in-depth discussion of this point (including an analysis of different ways that EAs have responded to my critique of pairing utilitarianism with denial of objective moral truth), see this essay. It collects many different objections (from EAs and from some philosophers) and discusses them. So if you are interested in whether it is or isn't coherent to only value utility when you deny objective moral truth, and, moreover, whether EAs and philosophers have good arguments for doing so, please see that essay.

I find that while many (perhaps the majority of) EAs deny objective moral truth, many still talk and think as though there is objective moral truth.

I found it striking that, in my conversations with EAs about their moral beliefs, few had a clear explanation for how to combine a belief in utilitarianism with a lack of belief in objective moral truth, and the approaches they did put forward were usually quite different from each other (suggesting, at the very least, a lack of consensus on how to support such a perspective). Some philosophers I spoke to pointed to other ways one might defend such a position (mainly drawn from the philosophical literature), but I don't recall ever seeing these approaches used or referenced by non-philosopher EAs (so they don't seem to be doing much work in the beliefs of EAs who hold this view).

I suspect it would help many EAs if they took a more Valuist approach: rather than claiming to or aspiring to only value hedonic utility, they could accept that while they do intrinsically value this – very likely far more than the average person – they also have other intrinsic values, for example, truth (which I think is another very important psychological value for many EAs), their own happiness, and the happiness of their loved ones.

Valuism also avoids some of the most awkward bullets that EAs are sometimes tempted to bite. For instance, hedonic utilitarianism seems to imply that your own happiness and the happiness of your loved ones “shouldn’t” matter to you even a tiny bit more than the happiness of a stranger who is certain to be born 1,000,000 years from now. Valuism offers an explanation for why people who identify as hedonic utilitarians may feel a great deal of internal conflict about this – even if you value the happiness of all sentient beings a tremendous amount, you almost certainly have other intrinsic values too. That means that Valuism may help you avoid some of the awkward conundrums that arise from ethical monism (where you assume that there is only one thing of value).

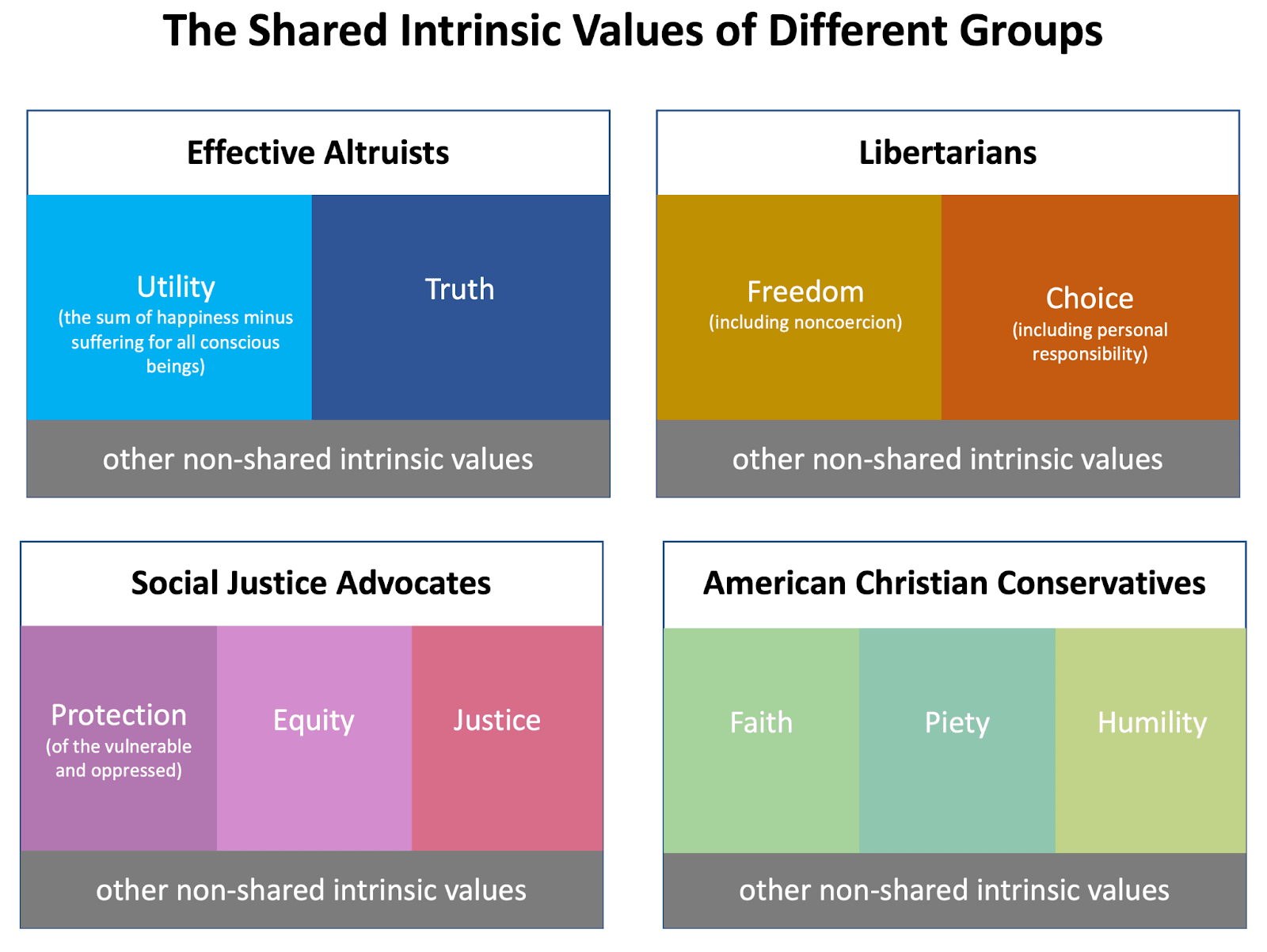

Valuism and the EA Community

From a Valuist perspective, I see the EA community as a group of people who share a primary intrinsic value of hedonic utility (i.e., reducing suffering and increasing happiness impartially) along with a strong secondary intrinsic value of truth-seeking. Oddly (from my point of view), EAs are very aware of their intrinsic value of impartial hedonic utility, but seem much less aware of their truth-seeking intrinsic value. On a number of occasions, I’ve seen mental gymnastics used to justify truth-seeking in terms of increasing hedonic utility when (I claim) a much more natural explanation is that truth-seeking is an intrinsic value (not just an instrumental value that leads to greater hedonic utility). This helps explain why many EAs are so averse to ever lying and so averse even to persuasive marketing.

Each individual EA has other intrinsic values beyond impartial utility and truth-seeking, but in my view, those two values help define EA and make it unique. This is also a big part of why this community resonates with me: those are my top two universal intrinsic values as well.

If more EAs adopted Valuism, I think that they would almost all continue to devote a large fraction of their time and energy toward improving the world effectively. Maximizing global hedonic utility (i.e., the sum of happiness minus suffering for conscious beings) is the strongest universal intrinsic value of most community members, so it would still play the largest role in determining their goals and actions, even after much reflection.

However, they would also feel more comfortable investing in their own happiness and the happiness of their loved ones at the same time, which I predict would make them happier and reduce burnout. Additionally (I claim), they’d accept that, like many effective altruists, they also have a strong intrinsic value of truth. They’d strike a balance between their various intrinsic values, and not endorse annihilating all their intrinsic values except for one.

Hi Spencer and Amber,

There's a pretty chunky literature on some of these issues in metaethics, e.g.:

Obviously it would be a high bar to require PhD-level training on these topics and a read through the whole literature before posting on the EA Forum, so I'm not suggesting that! But I think it would be useful to talk some of these ideas through with antirealist metaethicists because they have responses to a bunch of these criticisms. I know Spencer and I chatted about this once and probably we should chat again! I could also refer you to some other EA folks who would be good to chat to about this, probably over DM.

All of that said, I do think there are useful things about what you're doing here, especially e.g. part 2, and I do think that some antirealist utilitarianism is mistaken for broadly the reasons you say! And the philosophers definitely haven't gotten everything right, I actually think most metaethicists are confused. But especially claims like those made in part 3 have a lot of good responses in existing discussion.

ETA: Actually if you're still in NYC one person I'll nominate to chat with about this topic is Jeff Sebo.

Hi Tyler, thanks for your thoughts on this! Note that this post is not about the best philosophical objections, it's about what EAs actually believe. I have spoken to many EAs who say they are utilitarian but don't believe in objective moral truth (or think that objective moral truth is very unlikely) and what I'm responding to in this post is what those people say about what they believe and why. I have also spoken to Jeff Sebo about this!

In points 1 and 2 of this post, namely "1. I think (in one sense) it’s empirically false to say that 'only utility is valuable'" and "2. It can be psychologically harmful to deny your intrinsic values", I'm making a claim about human psychology, not about philosophy.

So it sounds like you're mainly responding to this point in my essay: "3. I think (in one sense) it’s incoherent to only value utility if you don't believe in moral realism"

That's totally fair, but please note that I actually solicited feedback on that point from tons of people, including some philosophers, and I wrote a whole essay just about that claim in particular which is linked above (of course, I wouldn't have expected you to have read it, but I'm just pointing that out). Here is that essay:

https://www.spencergreenberg.com/2022/08/tensions-between-moral-anti-realism-and-effective-altruism/

I will update the post slightly to make it clearer that I have a whole essay discussing objections to that point.

Note that at the bottom of that other essay I discuss every single reasonable-ish objection I've heard to that argument, including some from philosophers. Perhaps you have other objections not mentioned there, but I do delve into various objections and have sought a lot of feedback/criticism on that already!

I would be very happy to discuss this topic more with you, and hear your more detailed objections/points you think I am getting wrong - let me know if you'd like to do that!

Finally, I will note, though, that most of the objections you mention in your comment above are NOT the ones that the many EAs I've spoken to use to defend their beliefs, so even if they are strong arguments, I don't think they are doing work in why most EAs (who deny objective moral truth but say they are utilitarians) believe what they do.

I've only skimmed the essay but it looks pretty good! Many of the ideas I had in mind are covered here, and I respond very differently to this than to your post here.

I don't know what most EAs believe about ethics and metaethics, but I took this post to be about the truth, or desirability of these metaethical, ethical, and methodological positions, not whether they're better than what most EAs believe. And that's what I'm commenting on here.

Cool, thanks for checking it out! I'll update the post slightly to make it clearer that I'm talking about beliefs rather than the truth.

As you anticipate, I don’t find this very compelling because I’m a moral realist. It’s true that people can have preferences that run counter to hedonism, but I think those preferences are irrational. For example, someone might have a preference to sit and count blades of grass even though it doesn’t make her happy. I think her friends should do everything they can (within reason) to persuade her to see a movie she’d enjoy.

I also already feel “comfortable investing in [my] own happiness and the happiness of [my] loved ones at the same time” because I know that it reduces burnout and that I wasn’t born a perfect utilitarian and that needs to be taken into consideration.

A few provocative questions: What is your yardstick for measuring the effectiveness of your theory compared to other theories? How much work have you done to figure out how to falsify utilitarianism and consider alternatives? How do you deal with the objections to utilitarianism and the fact that there is no expert consensus on what moral theory is "right"?

I mean, do what floats your boat as long as you don't hurt other people (and beings) and behave in otherwise responsible ways (i.e., please don't become the next SBF), but I am always pretty surprised and confused when people voice very strong confidence in their moral theory. In particular, if you are a moral realist, it should be in your own interest not to treat ethics like a horse race or football match where you are rooting for the team you like best.

Thank you for the reply. As in mathematics and logic, rational intuition is ultimately my yardstick for determining the truth of a proposition. I think it self-evident that the good of any one individual is of no more importance than the good of any other and that a greater good should be preferred to a lesser good. As for what that good is, everything comes down to pleasure on reflection. The objections to this view fall prey to numerous biases (scope insensitivity, status quo bias), depend on knee-jerk emotional reactions, or rest on misunderstandings of the theory (for example, attacking naive as opposed to sophisticated utilitarianism). Some are even concerned with the practicality of the theory, which has no bearing on its truth.

There is no consensus in part because philosophers are under great pressure to publish. If Henry Sidgwick had figured most things out in his great 19th Century treatise The Methods of Ethics (the best book on ethics ever written, even according to many non-utilitarians), then that would rather spoil the fun. If you are interested in a painstaking attempt by a utilitarian to consider the alternatives, then have a read. It is extremely dense, but that is what's required. A good companion is the volume published nine years ago by Singer and Lazari-Radek.

Hurting other sentient beings is the antithesis of utilitarianism, as you know. Mr Bankman-Fried's alleged actions should serve as a warning against naive utilitarianism and are a reminder that commonly accepted negative duties should almost always be followed (on utilitarian grounds). We don't know whether these alleged actions were the product of his philosophical beliefs, or whether it had more to do with the pernicious influence of money and power. Regardless, that he went down such a career path in the first place was the result of his philosophical beliefs and we should therefore take some responsibility as a community.

But I'm far more concerned about avoiding the (in)actions of virtually everyone in recent history, who fail to do anything about the plight of hundreds of billions of sentient beings (human and non-human) or who actively exacerbate their suffering. Most of these people are under the pernicious influence of "common-sense morality", which tells them that they have few positive duties toward others. Others think that being or feeling "virtuous" is sufficient. A few are recognised as evildoers for violating the admirable negative duties of common-sense morality that most at least accept. Such evildoers have almost always subscribed to profoundly anti-utilitarian ideologies, whether it be fascism, Stalinism, racism or nationalism.

Call me naive but your argument doesn't go through for me. You write...

So your standard for adjudicating the "truth" of propositions is your "rational intuition". You think your position "self-evident" but what about others? How do you view their objections? Are they simply feeble attempts to pull you to the dark side or may they also be interpreted as evidence against the "self-evidence" of your intuition? Later you write...

How is your claim to "correctness" different from theirs? Is it not conceivable that you may also be "under the pernicious influence of a utilitarian morality"? How do you ground your theory in a way that precludes this possibility? If you claim to have the "right" definition and the "right" standard for judging ethical behavior, you had better continue to challenge and evaluate it so as to continuously produce evidence that maintains its warranted assertibility beyond a reasonable doubt, wouldn't you agree? Otherwise, what makes your belief "truer" than, for example, Catholic dogma?

P.S.: I have nothing against considering utilitarianism as an informative theory for guiding ethical behavior, I just think we should remain reasonable about the claims we make and curious and humble about the positions we advocate for. I don't know you personally or your positions in detail but based on the thrust of your comments, I felt it important to raise this point.

I don’t consider the intuitions of adherents to competing moral theories to be strong evidence against the detailed, painstaking process of reflection that I and other utilitarians have been through. I also think that utilitarianism best accommodates and explains our common-sense moral intuitions, as Sidgwick argued in detail. Therefore, there is not as much disagreement between the broad mass of people and utilitarians as there might seem to be at first glance. Those who have invented ‘rights’ and ‘virtues’ out of thin air have much more serious disagreements with common-sense morality, which is a problem for them.

If most people thought that an object can simultaneously be red and green all over, their intuitions here wouldn’t be strong evidence against the fact that this is self-evidently absurd. For many centuries, Europeans rejected the idea that you could work with negative numbers. In cultures where negative numbers were being used, I don’t think this disagreement would have been good evidence against the self-evidence of negative numbers being useful in mathematics.

I fully accept that others can say similar things to me. That is fine. To use the example from your other post, you can say that it’s self-evident that Alice should take the morphine; I will say that it would be self-evidently wrong of Alice to deprive Bob of such a special experience. All utilitarians can do is trust that, in time, reason will prevail. Pinker and Singer have both written about this. This is why we have been ahead of our time, while Kant’s views, for example, on various object-level issues are recognised as having been horribly wrong.

It is certainly conceivable that I am “under the pernicious influence of utilitarianism”, in which case I would by default become a nihilist and abandon any attempt to reduce the suffering of sentient beings.

You certainly lost me here. All I am asking for is humility regarding our ability to "know" things, in particular regarding ethics. Every part of your argument could have been made by Catholic dogmatists, who have likely engaged for much longer and deeper in painstaking reflection. For me that would be a worrying sign, but I certainly did not intend for this contemplation of our own fallibility to drive you into not caring about other sentient beings. I think the parent post makes a good case for caring about lots of things we value.

Catholics make empirical claims about the natural world. Logical and moral truths do not fit into that category, so I disagree with the comparison.

The parent post makes no case whatsoever for caring about the things we value! All it does is assert that we ought to value everything that we already care emotionally about. Why should we act on everything we care emotionally about? How do we know that everything we care about is worth acting on? More humility may be required in all quarters!

Don’t worry, I still aim to maximise the well-being of all sentient beings because I think the very nature of pleasure gives me strong reason to want to increase it and that there are no other facts about the universe which give me similar reasons for action. The table in front of me certainly doesn’t. “Virtues” and “rights” are man-made fictions, not facts. Conscious experiences in general seem like a better bet, but the ‘redness’ of an object also doesn’t give me reason to act. It is only valenced experiences which do. Hypothetically, though, were I to reject utilitarianism, I would by default become a nihilist precisely because I am humble about our ability to know things! I might still care about the suffering of sentient beings, but my caring about something is not a reason to act on it. Parfit is very good on this.

I think you are misrepresenting a few things here.

First, Catholics talk a lot about ethics. Please come up with a better excuse to brush away the critique I made. I am almost offended by the laziness of your argument.

Second, you are misrepresenting the post. It does not assert that we should "value everything that we already care emotionally about". It argues for reflecting about what values we actually hold dear and have good reason to hold dear. This stands in contrast to your position, which amounts to arguing for a premature closing of this process of reflection by deferring to the supremacy of welfare under all circumstances and for all time.

Besides those misrepresentations, I think there is value in discussing reasons for actions and reflecting about values, and my hope is you will stay open to this in the future. I personally feel drawn to a critical pragmatist perspective and I believe that at some point we could have a nice discussion about it. There are certainly other perspectives besides utilitarianism that are worth discussing. All I want to do is to encourage you to keep an open mind.

It wasn’t clear which aspect of Catholic dogma you were referring to. Catholic claims about ethics seem to crucially depend on a bunch of empirical claims that they make. Even so, I view such claims as just a subset of claims about ethics that depend on our intuitions.

As above, these conflicting intuitions can only be resolved through a process of reflection. I am glad that you support such a process. You seem disappointed that the result of this process has, for me, led to utilitarianism. This is not a “premature closing of this process” any more than your pluralist stance is a premature closing of this process. What we are both doing is going back and forth saying “please reflect harder”. I have sprinkled some reading recommendations throughout to facilitate this.

The post does not mention whether we have reasons to hold certain things dear. It actually rejects such a framing altogether, claiming that the idea that we “should” (in a reason-implying sense) hold certain things dear doesn’t make sense. This is tantamount to nihilism, in my view. The first two points, meanwhile, are psychological rather than normative claims. As Sidgwick stated, the point of philosophy is not to tell people what they do think, but what they ought to think.

I am always very happy to examine the plural goods that some say they value, but which I do not, and see whether convergence is possible.

I am only disappointed if you stop reflecting and questioning your position based on the situations you find yourself in and start to pursue it as dogma that cannot be questioned. I don't face the same concern, as I am committed to continuing my open-minded and open-ended quest to better understand what it means to do good in particular situations and to act accordingly. In that sense, I am neither "just" a value pluralist nor a monist, but agnostic as to what any particular situation may demand of me.

Just because one is a moral anti-realist doesn't mean one is automatically a nihilist. The post argues for Valuism and suggests there can be more than moral reasons for acting, such as biological or psychological reasons. One may even argue that these are primary. But I guess that's bound to become too long of a conversation for this thread. I tried to make my case and I hope we both got something out of it.

Being “agnostic” in all situations is itself a dogmatic position. It’s like claiming to be “agnostic” on every epistemic claim or belief. Sure, you can be, but some beliefs might be much more likely than others. I continue to consider the possibility that pleasure is not the only good; I just find it extremely unlikely. That could change.

I do not think biological and psychological “reasons” are actually reasons, but you’re right that this gets us into a separate meta-ethical discussion. Thank you for the discussion!

If you read what I have written, you will see that I am not taking a dogmatic position but simply advocate for staying open-minded when approaching a situation. I tried to describe that as trying to be "agnostic" about the outcome of engaging with a situation. It's not my goal to predict the outcome in advance but to work towards a satisfying resolution of the situation at hand. I would argue that this is the opposite of a dogmatic position but I acknowledge that my use of the term "agnostic" may have been confusing here.

Thank you as well, it was thought-provoking and helped me reflect on my own positions.

Thanks for sharing this post and pointing out some of the inconsistencies and confusions you see around you! I think being curious and inquisitive about such matters and engaging in open and constructive dialog is important and healthy for the community!

Interestingly, I actually made a related post just slightly earlier today, which was trying to spark some discussion around a thought experiment I came up with to highlight some similar concerns/observations. I think your post is much more fleshed out, so thank you for posting!

Executive summary: The author argues that Effective Altruists (EAs) should adopt Valuism, a philosophy recognizing multiple values, rather than focusing solely on utility, as this single-minded focus can lead to unhappiness and burnout, and may not align with individuals' actual values.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.