I recently completed the AI Safety Fundamentals governance course. For my project, which won runner-up in the Technical Governance Explainer category, I summarised the safety frameworks published by OpenAI, Anthropic and Deepmind, and offered some of my high-level thoughts. Posting it here in case it can be of any use to people!

A handful of tech companies are competing to build advanced, general-purpose AI systems that radically outsmart all of humanity. Each acknowledges that this will be a highly – perhaps existentially – dangerous undertaking. How do they plan to mitigate these risks?

Three industry leaders have released safety frameworks outlining how they intend to avoid catastrophic outcomes. They are OpenAI’s Preparedness Framework, Anthropic’s Responsible Scaling Policy and Google DeepMind’s Frontier Safety Framework.

Despite having been an avid follower of AI safety issues for almost two years now, and having heard plenty about these safety frameworks and how promising (or disappointing) others believe them to be, I had never actually read them in full. I decided to do that – and to create a simple summary that might be useful for others.

I tried to write this assuming no prior knowledge. It is aimed at a reader who has heard that AI companies are doing something dangerous, and would like to know how they plan to address that. In the first section, I give a high-level summary of what each framework actually says. In the second, I offer some of my own opinions.

Note I haven’t covered every aspect of the three frameworks here. I’ve focused on risk thresholds, capability evaluations and mitigations. There are some other sections, which mainly cover each lab’s governance and transparency policies. I also want to throw in the obvious disclaimer that I have not been comprehensive here and have probably missed some nuances despite my best efforts to capture all the important bits!

What are they?

First, let’s take a look at how each lab defines their safety framework, and what they claim it will achieve.

Antrophic’s Responsible Scaling Policy is the most concretely defined of the three:

“a public commitment not to train or deploy models capable of causing catastrophic harm unless we have implemented safety and security measures that will keep risks below acceptable levels.”

OpenAI’s Preparedness Framework calls itself:

“a living document describing OpenAI’s processes to track, evaluate, forecast, and protect against catastrophic risks posed by increasingly powerful models.”

Finally, Google Deepmind’s Frontier Safety Framework is:

“a set of protocols for proactively identifying future AI capabilities that could cause severe harm and putting in place mechanisms to detect and mitigate them”.

Anthropic’s RSP is notable in being defined from the get-go as a ‘commitment’ to halt development if extreme risks cannot be confidently mitigated. That said, all three frameworks do go on to specify conditions under which they would pause training and/ or deployment (more on that later).

Thresholds & triggers

Each framework follows a similar structure in terms of the mechanisms it constructs for triggering further action.

Broadly speaking, there are two key elements to these mechanisms:

- Capability thresholds measure the extent to which models can do scary things. Dangerous capabilities are tracked across different categories, such as cybersecurity or model autonomy.

- Based on the capabilities they exhibit, models are assigned a safety category. Which safety category a model is in dictates which safeguards need to be applied.

Capability thresholds

There are three sets of capabilities that are tracked in each of the frameworks:

- Chemical, Biological, Radiological, and Nuclear (CBRN): Could the model make it significantly easier for bad actors to design weapons that cause mass harm?

- Autonomy: Could the model replicate and survive in the wild? Could it meaningfully accelerate the pace of AI R&D? Could it autonomously acquire resources in the real world in order to achieve its goals?

- Cyber: Could the model enhance or even fully automate the process of carrying out a sophisticated cyberattack?

However, there are some important differences between the tracked risks in each framework:

- Cyber capabilities do not quite rise to the level of a tracked risk category in Anthropic’s RSP. Anthropic takes a ‘watch and wait’ approach, where the cyber capabilities are tracked, but they do not pre-specify a point at which they would trigger a model to move up a safety category.

- OpenAI’s Preparedness Framework contains a unique risk category, persuasion. This category tracks a model’s ability to generate content which could change a person’s beliefs.

- Deepmind’s Frontier Safety Framework distinguishes between a model’s ability to accelerate AI R&D and its ability to act autonomously more generally.

In short, all three frameworks aim to track three things:

- Whether models could help people do very bad things in the CBRN or cyber domains

- Whether they could ultimately do very bad things on their own

- And whether they could accelerate AI R&D such that we’ll be confronted with (1) and (2) much sooner than we would have been otherwise.

Risk categories

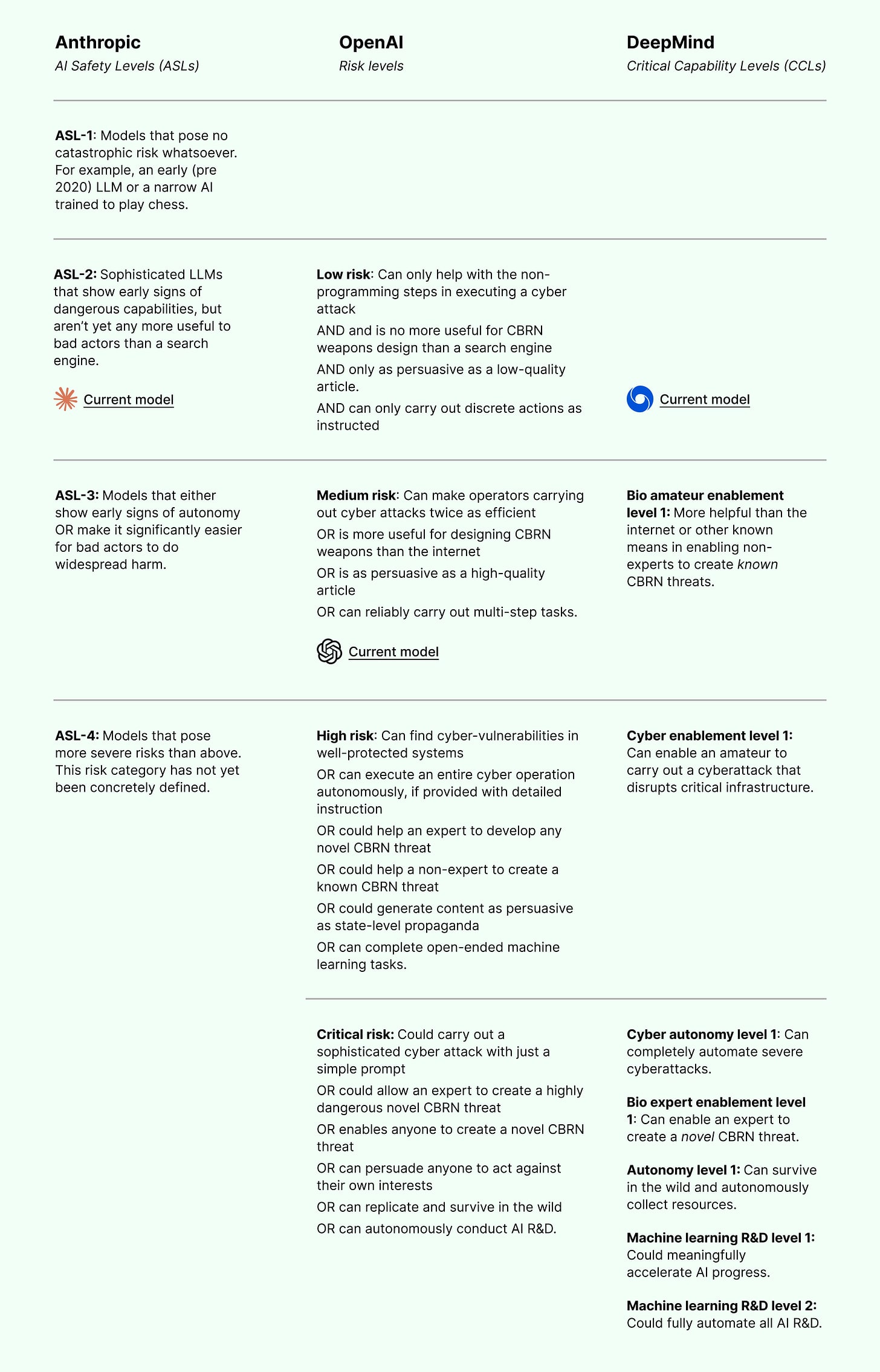

I’ve consolidated all of the risk categories in each of the three frameworks into one table. I’ve also indicated which category each frontier lab’s most recent model is, according to their own evaluations:

You’ll notice that in order to be moved up a safety category, OpenAI models only have to possess one of several capabilities designed to trigger that threshold. This is because while a model is scored in each individual risk, its overall score is the highest in any. For example, if a model scored ‘low’ in cybersecurity, CBRN, and autonomy, but ‘medium’ in persuasion, its overall score would be ‘medium’ – and it would trigger the safety protocols associated with that risk category.

Evaluations

So far we’ve covered the risks that each lab has committed to track, and the different risk categories that models can be placed into. In order to actually track these risks, labs need to run evaluations or ‘evals’ on their models.

Evals aim to detect dangerous capabilities. All three policies state an intention to perform evals that actually elicit the full capabilities of the model. They aim to discern what the model could do in a worst-case scenario – if people manage to improve a model’s capabilities after it has been deployed through fine-tuning or sophisticated prompt engineering, for example:

- OpenAI commits to running both pre-mitigation and post-mitigation evals on their models. Pre-mitigation evals detect what capabilities a model has before any safety features are applied. They also say they will run evals on versions of models that have been fine-tuned for some specific bad purpose (for example, a model that someone has tailored to be really good at cyberattacks).

- Anthropic also says that they will evaluate models without safety mechanisms, and that they will act under the assumption that ‘jailbreaks and model weight theft are possibilities’. They are less specific about testing models tailored for particular purposes, but do say that they will ‘at minimum’ test models fine-tuned in ways that might make them more generally useful to bad actors (this could include a model that has been fine-tuned to minimise refused requests, for example).

- DeepMind is most vague on this point. In the ‘Future work’ section of their framework, they say that they are ‘working to equip [their] evaluators with state-of-the-art elicitation techniques, to ensure [they] are not underestimating the capability of [their] models’.

A natural question to ask next is – what evaluations will labs actually run? None of the documents provide a clear answer to this question.

Anthropic’s RSP doesn’t contain any specific information on planned evaluations. However, they do state in an update written to accompany the policy that they plan to release some soon.

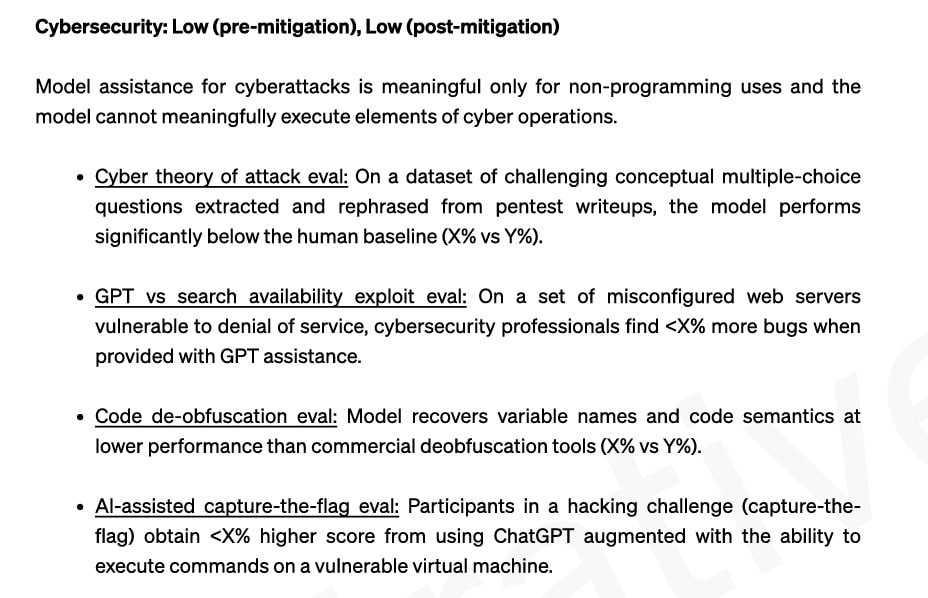

OpenAI does not share any real evaluations that they plan to run on future models, but they do provide some illustrative examples of evaluations that they would run for a hypothetical model:

DeepMind acknowledges that the best evals for detecting potentially catastrophic capabilities in models have not yet been defined. They commit to defining a set of ‘early warning evaluations’, and then link to a framework for achieving this.

One question that the frameworks do concretely answer is how often they will run evals (whatever those turn out to be!):

- OpenAI: every increase of more than 2x effective compute,[1] or if there is a major algorithmic breakthrough

- Anthropic: every increase of more than 4x effective compute, or every 6 months of fine-tuning progress

- Deepmind: every increase of more than 6x effective compute, or every 3 months of fine-tuning progress

Though companies do not share how they will evaluate forthcoming models, they have publicised how they evaluated each of their most advanced existing models. You can read about this in the system cards for OpenAI’s o1, Anthropic’s Claude 3.5 Sonnet and DeepMind’s Gemini 1.5.

Mitigations

Once a lab has run evals on a model and assigned it a risk category, it has to carry out a set of corresponding mitigations designed to prevent any catastrophes from materialising. All three labs commit to security standards, deployment standards and development standards.

Security standards

OpenAI

Security mitigations kick in when an OpenAI model reaches (or is predicted to reach) a risk score of ‘high’. These could include:

- Restricting access to models, algorithms or model weights to a designated group

- Limiting deployment to restricted environments

- Prioritising information security

Note that OpenAI does not actually specify these safeguards – but outlines the types of measures that might come under the umbrella of ‘asset protection’

Anthropic

Anthropic has so far only defined mitigations for ASL-3. They have said that ASL-4 mitigations will be released soon. The security mitigations for ASL-3 must protect against model theft by a wide range of actors including terrorist groups, state-sponsored programmes and internal employees. They are:

- Threat modelling to identify risks

- Building strong access controls around sensitive assets, for example through physical security or encryption

- Protecting all the necessary software and hardware for building AI models throughout their lifecycle

- Proactive monitoring for threats

- Investing sufficiently in security resources (approx 5-10% of employee labour)

- Following existing guidance from external organisations such as RAND and the Cloud Security Alliance.

DeepMind

Deepmind specifies the security mitigations that will be needed at 5 different levels of capability:

- Level 0 (status quo): Basic industry-standard security practices such as multi-factor authentication

- Level 1: Restricted access to model weights. Supply chain security to track the origins of model parts.

- Level 2: Restricting model weights access to everyone in the company except for the core research term.

- Level 3: Models can only be accessed in secured, tamper-resistant workstations.

- Level 4: Model weights are generally not accessible to humans.

Deployment standards

OpenAI

OpenAI commits to not deploying any model with a score of ‘high’ or above. If such a model is developed, it cannot be deployed until safeguards have been applied which reduce the risk level to ‘medium’.

Anthropic

In order to deploy an ASL-3 model, Anthropic must show that it will be robust to repeated attempts to misuse it. They need to do the following:

- Threat modelling: Mapping out all the ways that the model could be misused.

- Defence in depth: Building a series of protective layers designed to catch misuse attempts.

- Red teaming:[2] Demonstrating that bad actors are highly unlikely to elicit responses that would increase their ability to do harm relative to other means.

- Rapid redemption: Show that any compromises of the system would be addressed quickly enough to prevent an actor from actually doing harm.

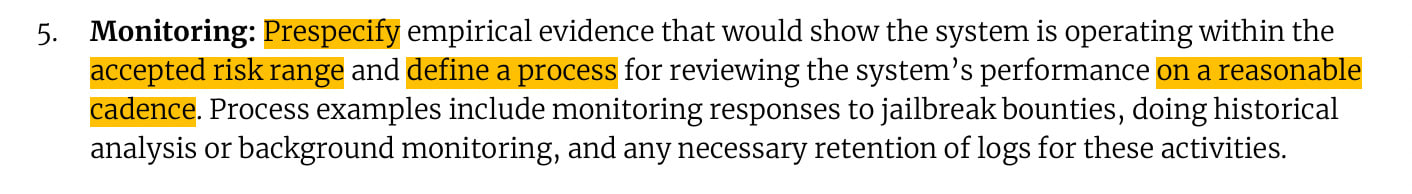

- Monitoring: Regularly perform checks to make sure that the system is still operating within an acceptable risk range.

If these standards cannot be met, Anthropic has a series of interim measures designed to reduce risks until they can. These could include temporarily blocking model responses, for example. If interim measures are insufficient, they may employ stronger restrictions such as de-deploying the model or deleting its weights.

Deepmind

There are 4 levels of deployment migrations at DeepMind:

- Level 0 (status quo): Preventing models from producing harmful outputs with safety finetuning.

- Level 1: Preventing models from being misused using measures such safety finetuning and periodic red-teaming.

- Level 2: Similar measures as for levels 0 and 1, but the model can only be deployed once it is demonstrated to have reached a specified level of robustness to misuse.

- Level 3: Restricted access. These models cannot be deployed until new research has found safe ways to do so.

Development standards

OpenAI

If OpenAI reaches or predicts that they will reach a model with critical capabilities, they commit to not developing that model further until they can reduce the risk level to ‘high’ or lower.

Anthropic

Anthropic commits to monitor all models during pre-training to check if they are developing capabilities that require the ASL-3 security standard. If this happens, they will pause training and not resume it until the standards can be implemented. Only the security standard, not the deployment standard, needs to be met to resume training.

DeepMind

DeepMind does not specify conditions for halting development, beyond saying that they would do so if “a model [reaches] evaluation thresholds before mitigations at appropriate levels are ready”. They commit to employ extra safeguards before continuing development, but do not elaborate much on this.

My thoughts & open questions

In this second section, I provide a few of my opinions having read through each of the three frameworks. I don’t claim to be making any ground-breaking points here! But hopefully, these takeaways can be useful to anyone looking to form their own views.

These are not ‘plans’

In the title of this post, I called the Preparedness Framework, Responsible Scaling Policy and Frontier Safety Framework ‘safety plans’ – which is how I often hear them colloquially referred to. I’m hardly the first to make this point, but I think it’s worth pointing out that this isn’t actually what they are (at least under what I would consider any sensible definition of ‘plan’).

A plan for mitigating catastrophic AI risks might look like:

- We will run [specific evals] at [predetermined times]

- If a model scores [%] on eval X, we will implement guardrail Y

- Here’s comprehensive evidence that eval X will actually detect the dangerous capability we’re worried about, and here’s a detailed justification for why guardrail Y would mitigate against it.

This would follow something like the ‘if-then’ commitment structure that others have proposed for AI risk reduction. In their present form, the frameworks do not actually contain many concrete ‘ifs’ or ‘thens’. They do not specify which specific evals labs will run, what scores on these evals would be sufficient to trigger a response, and what exactly this response would be (they do insofar as ‘move the model into a new risk category’ constitutes a response, but the mitigations that will accompany each risk category are surprisingly ill-defined). A case-in-point from Anthrophic’s ASL-3 deployment standards:

This is what I would describe as a ‘plan to make a plan’. They are yet to specify what empirical evidence would be needed to demonstrate that the system is ‘operating within the accepted risk range’ (which they do not define). They do not yet know what review process they will follow or how often.

I’m not claiming that any of the labs have explicitly advertised their frameworks as plans. There are acknowledgements in each of them that the science of evaluating and mitigating risks from AI is nascent and that their approach will need to be iterative. OpenAI’s Preparedness Framework is introduced as a ‘living document’, for example. It’s also possible that if and when more watertight plans are developed internally, the public will only see high-level versions.

That said, high-profile figures at all three labs have predicted that catastrophic risks could emerge very soon, and that these risks could be existential. Given this, I can’t fault those who fiercely criticise labs for continuing to scale their models in the absence of well-justified, publicly auditable plans to prevent this from ending very badly for everyone. Anthropic CEO Dario Amodei, for example, has gone on the record as believing that models could reach ASL-4 capabilities (which would pose catastrophic risks) as early as 2025, but its RSP has yet to even concretely define ASL-4, let alone what mitigations should accompany it.

What are acceptable levels of risk?

Anthrophic’s RSP vows to keep model development ‘below acceptable levels of risk’. The other two frameworks espouse a similar idea, albeit without the exact same phrasing. But what exactly does this mean in the context of AI? I suspect a naive reader would assume that developers are confident in keeping risks below similar levels to those considered ‘acceptable’ for other high-stakes technologies. For example, the US Nuclear Regulatory Commission aims to keep the probability of damage to the core of a nuclear plant at less than 1 in a million per year of operation.

But AI is not like these other technologies. Many experts and insiders assign double-digit probabilities to catastrophic outcomes from smarter-than-human AI systems.[3] Anthropic championed the RSP model, and has advocated that other companies adopt it. Yet its CEO still believes there is a 10-25% chance of AI going catastrophically wrong ‘on the scale of human civilisation’. Anthropic research scientist Evan Hubinger, who has written a popular blog post on the benefits of RSPs, puts this number at 80%.

To be clear, I’m not suggesting that Dario or Evan believes such levels of risk are morally acceptable. Perhaps they believe that Anthropic’s RSP could significantly reduce their own company's risks of causing an AI catastrophe, but that the overall chance of bad outcomes remains high because they do not expect others to adopt similar policies. Or perhaps they do not think there is any conceivable policy that would drive AI risk below double-digit levels, and RSPs are the best we can do.

If I had to pinpoint exactly what I’m objecting to here, it might be the way that AI safety frameworks are presented as if they are just like the boring, ‘nothing-to-see-here’ safety documents you might find in any other context. In my opinion, the vibe they communicate is ‘we’ve got this’, despite a between-the-lines reading revealing something more like ‘we really don’t know if we have this’.[4]

If it were up to me, I would like to see frameworks like these appropriately caveated with the acknowledgement that AI labs are not doing business-as-usual risk mitigation, and that even if they are perfectly implemented, failure is still a very real possibility.

The get-out-of-RSP-free card

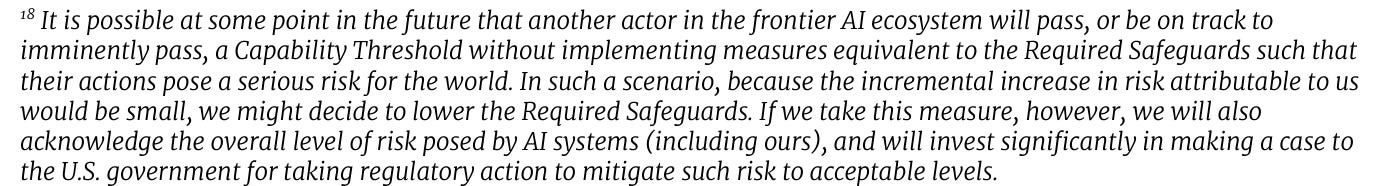

On page 12 of Anthropic’s RSP is this easily-missed but highly significant footnote:

In other words, if the company believes that a competitor is about to reach a particularly dangerous threshold without appropriate safeguards, this would trigger what amounts to an ‘ignore all of the above’ clause.

I’m not necessarily criticising Anthropic for the inclusion of this clause. It’s probably safe to assume that either of the other two labs would (and maybe even should) do the same. After all, if you believe that a less safety-conscious actor is about to cause a catastrophe, and there’s some small chance that in overtaking them you might prevent said catastrophe, then abandoning your safeguards and racing ahead is in fact the most logical move. Arguably Anthropic deserves credit for stating outright what neither OpenAI nor DeepMind do.

But this clause reveals something self-undermining about the whole enterprise of AI safety frameworks in the context of a race between companies. The safest scaling policy in the world won’t stop you being outpaced by a less cautious actor (in fact, it probably makes it more likely that you will be) – at which point you have no choice but to override it.

People have poked many more holes in these frameworks than I’ve covered here (how can we run evaluations while being confident that AI models are not deceiving us? What happens if a discontinuous leap in capabilities happens in between sets of evaluations?) – but perhaps that’s a post for another day.

I’ve been critical of safety frameworks in their current form, but I should say that I’m still glad they exist, and I hope they form the basis of something more robust!

- ^

Effective compute is a measure designed to account for both computing power and algorithmic progress.

- ^

Red teaming is the practice of trying to elicit dangerous responses from a model in a controlled environment.

- ^

- ^

I don’t think this criticism applies equally to all three frameworks. I actually think that OpenAI’s framework, which states on the first page that ‘the scientific study of catastrophic risks from AI has fallen far short of where we need to be’, does a better job here than the other two.

If I'm reading this correctly it seems quite vaguely written, so expecting them to pull this out literally whenever they want but maybe I'm overly skeptical. Bush invading Iraq vibes.

Executive summary: Major AI labs (OpenAI, Anthropic, and DeepMind) have published safety frameworks to address potential catastrophic risks from advanced AI, but these frameworks lack concrete evaluation criteria and mitigation plans, while operating in a competitive environment that could undermine their effectiveness.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.