The waste of human resources caused by poor selection procedures should pain the professional conscience of I–O psychologists.[1]

Short Summary: I think that effective altruist organizations can do hiring a lot better.[2] If you are involved in hiring, you should do these three things: avoid unstructured interviews, train your interviewers to do structured interviews, and have a clear idea of what your criteria are before you start to review and filter applicants. Feel free to jump to the “Easy steps you should implement” section if you don’t want to go through all the details.

Intro

If we view a hiring process as an attempt to predict which applicant will be successful in a role, then we want to be able to predict this as accurately as we can. That is what this post will be about: how we can increase the accuracy of our predictions for something as complex as human beings.[3] Whenever I write about predicting success in this post, it is about trying to predict whether (and to what extent) a job applicant would be successful if hired.

This post will be full of suggestions about hiring. Like all suggestions anyone gives you about how to do things, feel free to ignore aspects that you think aren’t applicable to your situation.

What this article is not, and epistemic status

This isn’t original research. This is mostly just me summarizing things I’ve read, with a dash of my own perspectives sprinkled in here and there. I think it should be quite clear from context which ideas are my own, but if you have any questions about that please do make a comment and I will clarify.

This also isn’t legal advice for employment law, for avoiding adverse impact, etc.

This isn’t an operational plan for how to implement a hiring system. While I have designed hiring systems in the past and most good hiring systems should make use of the techniques/tools described here, describing how to put these ideas into practice would be a separate post.

And of course, the standard caveat that these general recommendations might not fit the particular situation you face.

Although I asked a few strangers to review this, this is not a professionally edited piece of writing: all errors are my own.

My background

I ran a good deal of operations and a good deal of hiring (as well as some other HR functions) for a couple of small organizations (headcount 20-60), and I had some insight into the hiring practices of a larger organization (headcount of about 2500) when I worked as a director in the HR department there. I've read some books that are relevant to hiring; this includes books on mistakes/biases in decision making, books specifically about human resources, as well as general business books which have given me relevant tidbits.[4] I am wrapping up a graduate level course in Organizational Behavior[5] as part of my effort to earn a master's degree in management. I've recently become aware of the Scientist–Practitioner Gap (sometimes called the Research-Practice Gap), and I'm interested in it. I've discovered a few academic papers recently by following and exploring the sources cited in my textbook and in my professor's lectures, and Stubborn Reliance on Intuition and Subjectivity in Employee Selection has inspired me to write this post.

I want to emphasize that I am not an expert on personnel selection. I am a man who has a few years of work experience in operations and HR at small organizations, and who designed hiring processes for a couple of startup-y teams. Over the past few months I've read some relevant journal articles, over the past several years I've read a lot of relevant popular press articles and books, and I am about 1/12 of the way through a relevant master's degree.[6] I'd encourage you to view this not as an expert advising you on how to do something, but rather as advice from someone who is interested in this topic, is learning more about this topic, and wants to share some knowledge.

What the research says about hiring

How to do Interviews

Use Structured Interviews

I'm going to quote from my textbook, because I think it does a fairly good job of describing the problem with unstructured interviews.

The popular unstructured interview—short, casual, and made up of random questions—is simply not a very effective selection device. The data it gathers are typically biased and often only modestly related to future job performance... Without structure, interviewers tend to favor applicants who share their attitudes, give undue weight to negative information, and allow the order in which applicants are interviewed to influence their evaluations.

You want to avoid bias, right? You want to have something better than the unstructured interview, right? Fortunately, there is a simple solution readily available: use a structured interview. Again, quoting from my textbook:

To reduce such bias and improve the validity of interviews, managers should adopt a standardized set of questions, a uniform method of recording information, and standardized ratings of applicants’ qualifications. Training interviewers to focus on specific dimensions of job performance, practicing evaluation of sample applicants, and giving interviewers feedback on how well they were focused on job-relevant characteristics significantly improves the accuracy of their ratings.

One thing that I want to emphasize is that you need to have this all prepared before you interview the first applicant. Ideally, you should have clear criteria set before you start fielding applicants. If you value qualities/traits A, B, and C in applicants, you should define the minimum score on each of A, B, and C that an applicant needs in order to do the job well.

If you want to read about the research, you can start with the paper A Counterintuitive Hypothesis About Employment Interview Validity and Some Supporting Evidence and Is more structure really better? A comparison of frame‐of‐reference training and descriptively anchored rating scales to improve interviewers’ rating quality, but the short version is that structured employment interviews have higher validity for predicting job performance than do unstructured interviews.[7] There is a lot more research that I want to read about structured, unstructured, and behavioral interviews, but I haven't gone through all of it yet.

As a small note, I also recommend that you avoid asking brainteaser questions. Google was famous for these, but has since disavowed them.[8]

Use Behavioral interviews

Behavioral interview questions are a particularly effective type of interview question. I spent about four years working for a company at which a major part of the product/service was conducting behavioral interviews, where I conducted several hundred of them and served as operations manager. Once every few weeks I would fly to a different city and conduct a day or two’s worth of behavioral interviews. Although it has been a few years since I worked there, I do consider myself quite familiar with behavioral interviews.

Behavioral interviews are simple and easy: ask about past behavior.

- Instead of asking applicants what would you do in situation X? ask them tell me about a time you faced situation X.

- Instead of asking applicants how do you tend to deal with Y? ask them The last time you dealt with Y, how did you handle it?

- Rather than asking tell me about how to design Z, ask last time you designed Z, how did you do it?

My former colleagues and I used to joke that we could just stick a "tell me about a time when" at the beginning of any question to make it behavioral. The textbook definition of a behavioral interview question is to require "applicants to describe how they handled specific problems and situations in previous jobs, based on the assumption that past behavior offers the best predictor of future behavior."

Not all questions can be made behavioral. If you want to ask an applicant whether they would be willing to move to a new city, when they are able to start, or whether they have a particular certification, you should just ask those directly. But to the extent possible I encourage you to try to make many questions behavioral.

Edit: here is a list of behavioral questions that I put together, adapted from the US Department of Veterans Affairs Performance Based Interviewing. Feel free to use and adapt these as you see fit.

Note: I've updated my opinion regarding behavioral questions. While a 2014 meta-analysis suggests that situational questions have slightly lower criterion-validity than behavioral questions for high-complexity jobs, this does not mean that only behavioral questions should be used, as both situational questions and behavioral questions have good validity for most jobs. I haven't yet delved into the research about what counts as a "high-complexity job." This section on behavioral interviews should be viewed as mostly personal opinion rather than a summary of the research.

Applicant–organization fit

You might want to use an interview to evaluate how well a person would mesh with other people at your organization. People often describe this as cultural fit or as a cultural interview, but the academics seem to call it applicant–organization fit. While in theory I do think there is value in working with people that you enjoy working with, in practice I'm wary of this type of filtering, because it sounds like we are just using motivated reasoning to find a way to let our biases creep in. Paul Green (who taught my Organizational Behavior class) said that if he designed a good hiring system he would be happy to hire people without worrying about fit at all, instead just using an algorithm to accept or reject. I know that people tend to be resistant to outsourcing decisions to an algorithm,[9] but I have also read so much about how we tend to be good at deceiving ourselves that I am starting to give it more consideration. However, I haven't read much about this yet, so as of now I still don't have much to say on this topic. I’d love to hear some perspectives from other people, though.

I also think that people influenced by the culture of EA are particularly susceptible to a “hire people similar to me” bias. From what I’ve observed, EA organizations ask questions in interviews that have no relevance to an applicant’s ability to perform the job. These types of questions will create a more homogeneous workforce, but rather than diving into that issue more here, I’ll consider writing a separate post about it.

Use Work Sample Tests

Using work sample tests seems to already be very common within the culture of EA, so I won't spend a lot of time on them here. While accurately predicting applicant success is difficult, work sample tests seem to be one of the best predictors. I'll quote from Work Rules, by Laszlo Bock:

Unstructured interviews... can explain only 14% of an employee’s performance. The best predictor of how someone will perform in a job is a work sample test (29 percent).[10] This entails giving candidates a sample piece of work, similar to that which they would do in the job, and assessing their performance at it.

Thus, if the nature of the job makes it feasible to do a work sample test, you should have that as part of your hiring process.

Intelligence Tests & Personality Tests

Regarding personality tests, it is pretty widely accepted among researchers that conscientiousness is a predictor of work success across a wide variety of contexts,[11] but that the details of the job matter a lot for using personality to predict success. It is also well established that cognitive ability is quite predictive of work success in a wide variety of contexts, but I am concerned about the many biases involved in testing cognitive ability.[12]

I’m intrigued by this topic, and there is quite a bit of research on it. But I haven't read much of the research yet and I don't have any experience in using these types of tests in a hiring process. My starting point is that I am very wary of them in terms of the legal risks, in terms of ethical concerns, and in terms of whether or not they are actually effective in predicting success. Scientists/researchers and job applicants tend to have very divergent views about these assessments.[13] There is also a plethora of companies pushing pseudoscience “snake oil” solutions involving tests and a few buzzwords (AI, automate). Thus, rather than typing a few paragraphs of conjecture I’ll simply state that I am cautious about them, that I urge EA organizations to only implement them if you have very strong evidence that they are valid, and that I hope to write a different post about intelligence tests and personality tests after I'm more familiar with the research on their use in hiring.

Additional thoughts

I've put some ideas here that I like and that I think are helpful for thinking about hiring. However, as far as I am aware there isn't much scientific research to support these ideas. If you know of some research related to these ideas, please do let me know.

Tailoring the hiring process to the role

Like so many things in life, the details of the particular situation matter. Deciding how to design a hiring campaign will change a lot depending on the nature of the job and of the organization. It is hard to give clear advice in a piece of writing like this, because I don’t know what the specific context of your open positions is. One helpful step that is relatively easy is to think about which of these two scenarios your organization is in for the particular role you are hiring:

- It is easy for us to find people to do the job. We have too many qualified applicants, I don’t want to take the time to review them all, so I am going to filter out a bunch of them and then look at the ones that remain. I need a more strict hiring process. This gives a very low chance to make bad hires, but at the cost of missing out on good hires, thus you will get some false negatives.

- We need more qualified applicants. We are having trouble finding people who are able to do the job. I need to be more lenient in filtering out and eliminating candidates. This gives a very low chance to miss out on good hires, but at the cost of a higher probability of hiring some bad fits as well, thus you will get some false positives.

My rough impression is that most roles in EA fall into the first category (a strict hiring process, accepting of false negatives). One method I’ve used in the past to deal with this kind of situation is what I call an auto-filtering quiz.[14] Depending on how the respondent answers the questions in the quiz, the applicant will see either a rejection page or a link for the next step in the process (such as a link to upload a resume and a Calendly link to book an interview). The auto-filtering quiz can automate some very clear/strict filtering, allowing less time to be spent manually reviewing applications. The upfront cost is that the quiz would have to be designed for each distinct role, because the traits we want to filter for are different for each role.

As an example, for a position for a Senior Front End Developer only applicants that answer “yes” to all of the following three questions would progress to the next stage, and any applicant that does not answer “yes” to all three of these questions would be shown the rejection page:

- “Do you have three or more years of full-time work experience in a Front End role?”

- “Are you able to use HTML, CSS, and JavaScript?”

- “Do you have consistent and reliable internet access?”
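The routing logic behind a quiz like this is small. Here is a minimal sketch in Python, assuming the three example questions above; the class name, the question keys, and the two page names are hypothetical placeholders and not taken from any particular form tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningQuestion:
    key: str   # hypothetical short identifier for the question
    text: str  # wording shown to the applicant


# The three questions from the Senior Front End Developer example.
QUESTIONS = [
    ScreeningQuestion(
        "experience",
        "Do you have three or more years of full-time work experience in a Front End role?",
    ),
    ScreeningQuestion("skills", "Are you able to use HTML, CSS, and JavaScript?"),
    ScreeningQuestion("internet", "Do you have consistent and reliable internet access?"),
]

# Hypothetical page names; a real form tool would use URLs or page IDs.
NEXT_STEP_PAGE = "next-step"
REJECTION_PAGE = "rejection"


def route_applicant(answers: dict[str, str]) -> str:
    """Return the page an applicant should see after the quiz.

    Any answer that is missing or is not "yes" sends the applicant
    to the rejection page.
    """
    all_yes = all(
        answers.get(question.key, "").strip().lower() == "yes"
        for question in QUESTIONS
    )
    return NEXT_STEP_PAGE if all_yes else REJECTION_PAGE
```

Treating a skipped question as a "no" is a design choice in this sketch: it keeps the filter strict, which matches the first scenario (too many qualified applicants) rather than the second.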

I do think that such a quiz should be used with caution, as it can make the hiring process feel fairly impersonal for the applicant. Depending on what population you want to recruit from, some applicants may strongly prefer human contact to a quiz, so much so that when prompted to do the quiz they choose to drop out of the application process.[15] But I have found it to be quite effective in preventing me from manually reviewing dozens and dozens of applications that don’t meet minimum requirements. If you do use a quiz, I recommend only using it for initial screening, not for secondary or tertiary screening.

Irreducible Unpredictability

I like the idea of irreducible unpredictability that Scott Highhouse describes in Stubborn Reliance on Intuition and Subjectivity in Employee Selection. He writes that most of the variance in success is simply not predictable prior to employment. I think that he described it fairly well, so I'll quote him rather than putting it into my own words.

The business of assessment and selection involves considerable irreducible unpredictability; yet, many seem to believe that all failures in prediction are because of mistakes in the assessment process. Put another way, people seem to believe that, as long as the applicant is the right person for the job and the applicant is accurately assessed, success is certain. The ‘‘validity ceiling’’ has been a continually vexing problem for I–O psychology (see Campbell, 1990; Rundquist, 1969). Enormous resources and effort are focused on the quixotic quest for new and better predictors that will explain more and more variance in performance. This represents a refusal, by knowledgeable people, to recognize that many determinants of performance are not knowable at the time of hire. The notion that it is still possible to achieve large gains in the prediction of employee success reflects a failure to accept that there is no such thing as perfect prediction in this domain.

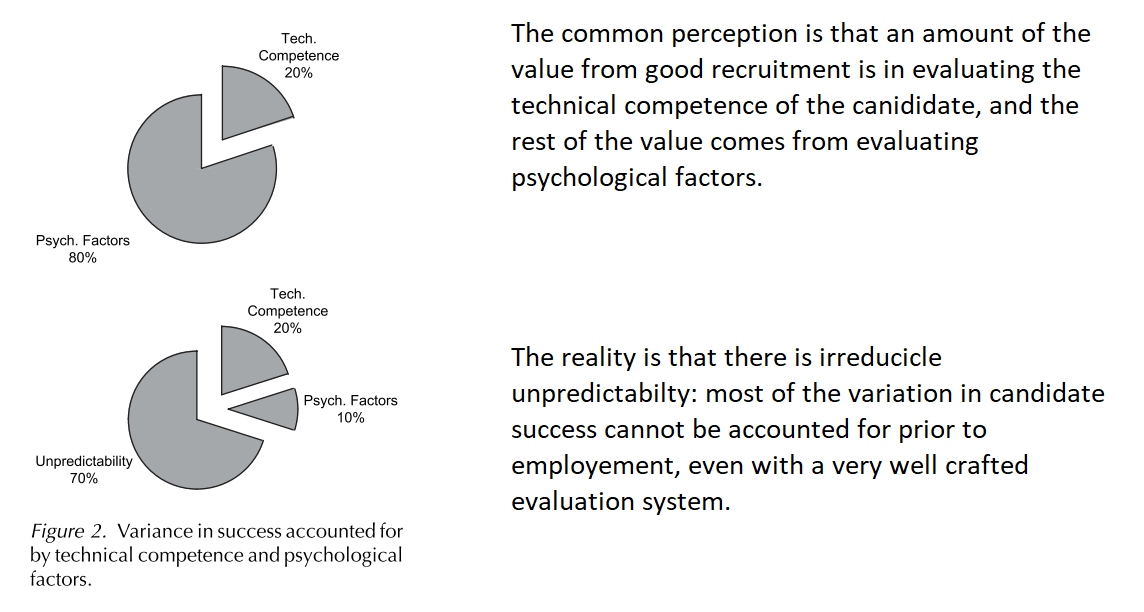

Scott Highhouse included the below Figure 2 in the paper which I think helps to solidify the idea in memory.

My own summary of the idea is that people think hiring can be done so well as to have a very high success rate, and refuse to believe that the process involves so much uncertainty. This meshes well with a lot of what I read regarding people having difficulty thinking probabilistically and people refusing to accept that high rates of failure are inherent to a particular task.

In a similar vein, here is my paraphrasing of the same idea from Personnel Selection: Adding Value Through People: In hiring, the very best methods of predicting performance only account for about 25% to 33% of the variance in performance. It may not be realistic to expect more. This is primarily because performance at work is influenced by other factors that are orthogonal to the individual (management, organizational climate, co‐workers, economic climate, the working environment).
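To make the 25% to 33% figure more concrete, it helps to translate it into correlations. The sketch below assumes that "variance accounted for" means the squared correlation between a predictor and job performance, which is the usual convention but is my assumption about how the book's figure was computed. The function names are made up for illustration.

```python
import math


def variance_explained(r: float) -> float:
    """Share of performance variance accounted for by a predictor with correlation r."""
    return r ** 2


def correlation_needed(share: float) -> float:
    """Correlation a predictor needs in order to account for a given share of variance."""
    return math.sqrt(share)


for share in (0.25, 0.33):
    r = correlation_needed(share)
    print(f"{share:.0%} of variance explained <-> correlation of about {r:.2f}")
# prints:
# 25% of variance explained <-> correlation of about 0.50
# 33% of variance explained <-> correlation of about 0.57
```

Under that assumption, even a predictor that correlates 0.5 with performance leaves three quarters of the variance unaccounted for.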

So you should be aware that even if you do everything optimally, using all the best tools and all the best processes, you will be able to predict less than 50% of the variability in performance. If I were more of a marketer, I would encourage people to adopt humble hiring: being aware that we are flawed and biased decision-makers, and that even when using all the right tools we generally make suboptimal decisions.

A holistic hiring process

This is an idea that I was introduced to by Paul Green. I had a vague idea of it previously, but I like how explicitly he described it.[16]

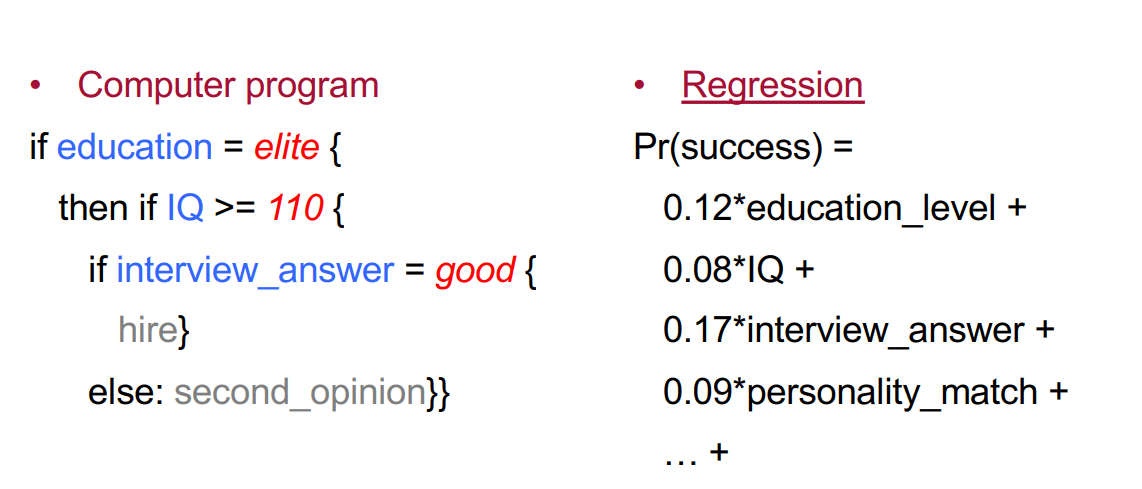

He described two approaches that one can use in hiring. The approach on the left is a linear if-else model, in which applicants are eliminated at each stage if they don't meet the requirement. After taking applicants through these stages, you are left with a pool of applicants who meet all your requirements. The approach on the right is what he describes as more like a regression model, in which you give weights to various traits depending on how important they are. I view a major benefit of the regression model as being that if an applicant is abnormally strong in one area, this can make up for a slightly weaker ability in another area. He suggests that using a holistic regression model rather than an if-else model will result in more successful hiring, although I haven't seen research about this yet.

Rather than a hiring process in which applicants are eliminated step by step for not meeting the standard A, or for not meeting standard B, Paul Green encourages a more holistic approach, in which various aspects are considered in order to evaluate the applicant.

The benefit of the if-else model is its simplicity: it is relatively easy to design and execute. It also has a relatively low chance of making a bad hire (assuming that the criteria you use to filter are good predictors of success). The downside of the if-else model is that it can be overly strict in filtering out and eliminating applicants, meaning that you will miss out on good hires (potentially the best hires). The benefit of the regression model is that you have a very low chance of missing out on a good hire. However, the cost is that it requires extra resources to design a more complicated hiring process.
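The difference between the two approaches can be sketched in a few lines of Python. Everything specific here is hypothetical: the traits, the 1-5 rating scale, the minimums, the weights, and the overall cutoff are made up for illustration, and the weights are chosen by hand rather than estimated with an actual regression.

```python
from typing import Mapping

# Hypothetical traits rated on a 1-5 scale, with made-up minimums and weights.
MINIMUMS = {"communication": 3, "spreadsheets": 3, "project_management": 3}
WEIGHTS = {"communication": 0.5, "spreadsheets": 0.3, "project_management": 0.2}
OVERALL_CUTOFF = 3.0


def passes_if_else(scores: Mapping[str, float]) -> bool:
    """If-else model: missing any single minimum eliminates the applicant."""
    return all(scores[trait] >= minimum for trait, minimum in MINIMUMS.items())


def weighted_score(scores: Mapping[str, float]) -> float:
    """Regression-style model: a weighted sum, so a strength can offset a weakness."""
    return sum(weight * scores[trait] for trait, weight in WEIGHTS.items())


def passes_weighted(scores: Mapping[str, float]) -> bool:
    """Accept when the weighted score reaches the overall cutoff."""
    return weighted_score(scores) >= OVERALL_CUTOFF


# An applicant who is very strong in one area and slightly weak in another.
applicant = {"communication": 5, "spreadsheets": 2, "project_management": 4}
print(passes_if_else(applicant))            # False: spreadsheets is below its minimum
print(round(weighted_score(applicant), 2))  # 3.9
print(passes_weighted(applicant))           # True: the strengths compensate
```

This applicant is exactly the kind of false negative the if-else model produces: eliminated on one criterion despite a strong overall profile.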

Get feedback from applicants

This is another idea that I first learned from Laszlo Bock’s book, Work Rules. Creating a survey to send out to every applicant that you interview is probably the easiest thing to do among all of the recommendations here. You can use the template that is provided at re:Work or you can make your own survey.

I’d encourage you to make it anonymous so that applicants are clear that this survey doesn’t influence whether or not they progress through the selection process. I’d also encourage you to send the link to both applicants that “pass” the interview as well as those that “fail” the interview. You want to better understand applicants’ experiences in the hiring process, regardless of whether they received a job offer. You can use this feedback to improve the hiring process.

Easy steps you should implement

Easy is a relative term.[17] Although these are some of the easier actions to take for improving your hiring process, they will still take effort to implement.

Use work sample tests

My impression is that this seems to be a common practice within EA culture, so I won't spend much time on this. If the nature of the job allows you to use a work sample test, I encourage you to use one.

Use structured interviews, avoid unstructured interviews

Unstructured interviews make it difficult to compare applicant A to applicant B, they allow biases to creep in, and they are not as predictive of success as structured interviews. Create a set of questions addressing the traits/qualities you want to evaluate in applicants, create a rubric, train your interviewers, and score each answer an applicant gives according to the rubric. If you need some help to start doing structured interviews, start with re:Work.
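As a rough illustration of what "score each answer according to the rubric" can look like once interviews are done, here is a minimal Python sketch. The question IDs, traits, 1-5 scale, and interviewer names are all hypothetical; the point is only that every applicant is rated on the same questions and the ratings are combined in the same way.

```python
from statistics import mean

# Hypothetical rubric: each question targets one trait and is rated 1-5
# against anchors written before the first interview.
QUESTION_TRAITS = {
    "q1": "communication",
    "q2": "attention_to_detail",
    "q3": "communication",
}


def score_applicant(ratings_by_interviewer: dict[str, dict[str, int]]) -> dict[str, float]:
    """Average the ratings for each trait across all interviewers and questions."""
    per_trait: dict[str, list[int]] = {}
    for ratings in ratings_by_interviewer.values():
        for question, rating in ratings.items():
            if not 1 <= rating <= 5:
                raise ValueError(f"rating for {question} must be between 1 and 5")
            per_trait.setdefault(QUESTION_TRAITS[question], []).append(rating)
    return {trait: mean(values) for trait, values in per_trait.items()}


# Two interviewers rate the same applicant on the same three questions.
ratings = {
    "interviewer_a": {"q1": 4, "q2": 3, "q3": 5},
    "interviewer_b": {"q1": 3, "q2": 3, "q3": 4},
}
print(score_applicant(ratings))
```

Because every applicant ends up with scores on the same traits, comparing applicant A to applicant B becomes a comparison of numbers rather than of impressions.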

Get feedback from applicants

You can use a survey tool such as Google Forms, SurveyMonkey, Airtable, or whatever other system you prefer. In order to improve the hiring process, you want to get feedback from the people involved in the hiring process.

Have a plan before you start the hiring campaign

This is a bit more vague, and is more of a personal opinion rather than a summary of research-supported best practices. This is also somewhat related to the idea of a holistic hiring process that I mentioned above.

I think that anybody running hiring should be really clear about what knowledge, skills, abilities and other characteristics (KSAOs) are actually important, and what level of each KSAO you need. You can figure this out by looking at the people who are already doing the job successfully and figuring out what KSAOs lead to their success. This is called job analysis, and while doing a full job analysis can be very tedious and time-consuming, sometimes a quick and dirty version is sufficient to get some benefits. As a simplistic example, rather than simply stating that a successful applicant needs “good Excel skills” you could instead specify that a successful applicant “is able to complete at least 6 out of the 10 tasks on our internal Excel test without referring to any resources.” Anything above 6 makes the applicant more competitive, but anything below 6 disqualifies him/her.[18]

Be clear about must haves, and nice to haves. When I was hiring a junior operations manager for a former employer, the only real must haves were good communication skills, basic computer literacy, and an optimization mindset. There were lots and lots of nice to haves (strong spreadsheet skills, experience with data analysis, familiarity with Asana and Google Sheets, excellent attention to detail, comfort giving and accepting feedback, experience managing projects and people, etc.). For that hiring campaign, we weren't considering applicants that only had the must haves; they needed the must haves plus a good combination of as many nice to haves as possible.[19]

I also think that you should decide in advance how to select among the qualified applicants. If you have multiple applicants that all pass your requirements and all seem like they would be good fits, but you only want to hire one person, which one should you hire? Should you hire the overall best one? The cheapest one that can do the job to an acceptable level of quality?[20] One that gives you the most bang for buck (maybe he costs 80% of the best applicant but can produce 95% of his workload)? The answer will vary depending on your specific circumstances, but I recommend that you consciously make the decision. This is something that I have opinions about, but have done very little reading about. Maybe I'll have more to say about this after reading more of the research on personnel selection.

Questions, concerns, and topics for further investigation

If you have answers for any of these items or relevant readings you can point me to (even just a nudge in the right direction), I'd love to receive your suggestions. It is possible that some of these are common knowledge among practitioners or among researchers, and perhaps I'll find answers to some of these questions during the coming months as I continue to work my way through my to-read list.

- Certainly there is research on personnel selection at non-profit or impact-focused organizations, and I just haven't read this research yet, right?

- For some jobs, it is easy to create work sample tests, but for a lot of knowledge work jobs it is hard to think up what a good work sample test could be. I'd love to see some kind of a work sample test example book, in which example work samples for many different jobs are written out. Does something like this exist?

- How do you create good structured interviews? The gap between "this is more effective than unstructured interviews" and "here are the details of how to implement this" seems fairly large. Are there resources that go more in-depth than re:Work?

- How can an organization effectively use intelligence tests & personality tests in hiring, while avoiding/minimizing legal risks? I know that many are rubbish, but are they all varying degrees of rubbish, or are some of them noticeably better than the rest? How good is Wonderlic? The gap between "this can help you select better applicants" and "here are the details of how to implement this" seems fairly large. What research has been done in the past two decades? Are there any intelligence tests that aren't rife with cultural bias? I plan to explore this, but if you have suggestions I'd love to hear them. As of now, I would like to read Assessment, Measurement, and Prediction for Personnel Decisions, and also to explore papers related to and papers citing The validity and utility of selection methods in personnel psychology: Practical and theoretical implications of 85 years of research findings.

- I'm considering reading Essentials of Personnel Assessment and Selection or Personnel Selection: Adding Value Through People - A Changing Picture in order to learn more of the research on hiring. Are there any better textbooks that you can recommend to me?

Sources and further reading

- Organizational Behavior, by Stephen P. Robbins & Timothy A. Judge. This is the textbook for my Organizational Behavior class.

- Work Rules!: Insights from Inside Google That Will Transform How You Live and Lead. I first read this book in 2017 and I really enjoyed it. It was one of the things that really opened my eyes to how impactful good design of an organization's systems/processes can be. I got a lot of good ideas about how to run an organization in general, and there are some good concepts specifically related to hiring in Chapter 3 through Chapter 5. It isn't peer reviewed, but it does cite sources so you can track down the research if you want. If you are brand new to HR work or to hiring and you want something that is more easily digestible than an academic paper or a textbook, then I recommend this book.

- Stubborn Reliance on Intuition and Subjectivity in Employee Selection, an academic paper written by Scott Highhouse which got me thinking about why so many organizations have such bad hiring processes, and which gave me the inspiration to write this post.

- ^

This is a quote from the paper Stubborn Reliance on Intuition and Subjectivity in Employee Selection, written by Scott Highhouse.

- ^

Actually, I think that most organizations (both EA and non-EA) do hiring fairly poorly and could do a lot better.

- ^

I want to emphasize the complexity. I used to think of social sciences as more fuzzy than the hard sciences, but now I simply view them as having more variables. The simple example I think of is dropping a rock. If I am a physicist and I want to know what will happen to the sand when I drop the rock, then I can figure that out by running some trials. But what if the shape of the rock is different each trial? What if the size of the sand grains varies each time? What if the rock's relationship with her mother and how much sleep she got last night and her genetics all affect how fast it falls? I think of social sciences sort of like that: theoretically we could isolate and control all the variables, but realistically it isn't feasible. I wish I could just do randomized controlled trials, having each applicant do the job one hundred times in one hundred different identical universes, and then see which applicant was successful in the highest number of trials. But as one of my favorite quotes in all of the social sciences says, "god gave all the easy problems to the physicists."

- ^

My partner tells me that I read an impressive number of books each year and that I should brag about it, but I haven't yet found a way to do that without making myself cringe due to awkward boasting. Thus, I'll just leave it at "quite a few." However, if you want recommendations for books on a particular topic I am happy to share recommendations.

- ^

Organizational Behavior has a lot of overlap with I/O Psychology, and I generally view them as almost the same field. However, I am quite new to both of them and I don't feel qualified to give a good description of how they differ, nor of the exact borders of each field.

- ^

I am required to take twelve courses to get a full degree and I am just finishing up my first course, so we'll see how far I get. Doing a degree as a working adult while maintaining all the other aspects of life (household, job, relationships, etc.) feels very different from being a full-time student living on campus.

- ^

- ^

These are questions such as: “Your client is a paper manufacturer that is considering building a second plant. Should they?” or “Estimate how many gas stations there are in Manhattan” or “How many golf balls would fit inside a 747?” There’s no correlation between fluid intelligence (which is predictive of job performance) and insight problems like brainteasers. (excerpted from Work Rules, by Laszlo Bock)

- ^

You can read more about this topic in the paper Overcoming Algorithm Aversion: People Will Use Imperfect Algorithms If They Can (Even Slightly) Modify Them

- ^

The data that Laszlo Bock refers to here comes from The validity and utility of selection methods in personnel psychology: Practical and theoretical implications of 85 years of research findings.

- ^

Although there is at least one paper that suggests there is a negative relationship between conscientiousness and managerial performance. This is something I want to look into more.

- ^

If you want a quick description of the biases, this excerpt from Work Rules is a quick overview: "The problem, however, is that most standardized tests of this type discriminate against non-white, non-male test takers (at least in the United States). The SAT consistently underpredicts how women and non-whites will perform in college. Phyllis Rosser’s 1989 study of the SAT compared high school girls and boys of comparable ability and college performance, and found that the girls scored lower on the SAT than the boys. Reasons why include the test format (there is no gender gap on Advanced Placement tests, which use short answers and essays instead of multiple choice); test scoring (boys are more likely to guess after eliminating one possible answer, which improves their scores); and even the content of questions (“females did better on SAT questions about relationships, aesthetics, and the humanities, while males did better on questions about sports, the physical sciences, and business”). These kinds of studies have been repeated multiple times, and while standardized tests of this sort have gotten better, they are still not great."

- ^

Some interesting comments and discussions here.

- ^

Here is an example from a company I used to work for. They’ve changed it since I was there, but it still serves as a decent demonstration of a simple auto-filtering survey.

- ^

A Chinese company I did some consulting for described to me how non-Chinese applicants are fairly comfortable filling out a form to submit their information, but Chinese applicants don't want to fill out a form to submit their info; Chinese applicants want to talk to an HR person via a phone call for a “quick interview.”

- ^

To my knowledge, Paul Green doesn't use the word "holistic" to describe this. The use of the word "holistic" to describe this is my own choice.

- ^

Easy in the way that learning Spanish or German is easy for a monolingual English speaker: It still takes a lot of effort, but far less than learning Arabic or Mandarin or Basque.

- ^

Note that this is nothing more than a simplistic example. In reality I wouldn’t recommend rejecting an applicant simply because she/he doesn’t know how to use INDEX MATCH in Excel. Skills that can be learned to a satisfactory level of performance in less than an hour or that can be easily referenced should, in my opinion, generally not be used to filter applicants out.

- ^

I think that the analogy of classes for a degree in an American university is helpful here. In order to graduate with a particular major you have to take some specific classes within that major/department. You also have to take some electives, but you get to choose which electives you take from a list of approved classes. I was looking for somebody who has all the core classes, plus a good combination of electives.

- ^

The idea that the resource (in this context the resource is a person) able to complete the task to a sufficient quality should do the task is described by Manager Tools as Managerial Economics 101.

Thanks for writing this! I've personally struggled to apply academic research to my hiring, and now roughly find myself in the position of the people "Stubborn Reliance" is criticizing, i.e. I am aware of academic research but believe it doesn't apply to me (at least not in any useful way). I would be interested to hear more motivation/explanation about why hiring managers should take these results seriously.

Two small examples of why I think the literature is hard to apply:

GMA Tests

If you take hiring literature seriously, my impression is that the thing you would most take away is that you should use GMA tests. It repeatedly shows up as the most predictive test, often by a substantial margin.[1]

But even you, in this article arguing that people should use academic research, suggest not using GMA tests. I happen to think you are right that GMA tests aren't super useful, but the fact that the primary finding of a field is dismissed in a couple of sentences seems worthy of note.

Structured versus unstructured interviews

The thing which primarily caused me to update against using academic hiring research is the question of structured versus unstructured interviews. Schmidt and Hunter 1998, which I understand to be the granddaddy of hiring predictors meta-analyses, found that structured interviews were substantially better than unstructured ones. But the 2016 update found that they were actually almost identical – as I understand it, this update is because of a change in statistical technique (the handling of range restriction).

Regardless of whether structured or unstructured interviews are actually better, the fact that the result you get from academic literature depends on a fairly esoteric statistics question highlights how difficult it is to extract meaning from this research.[2]
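To make the range restriction point concrete, here is a minimal sketch of the textbook correction for direct range restriction (often called Thorndike Case II). It is only an illustration of why the choice matters: it is not the procedure used in the 2016 update, and the observed correlation and the standard deviation ratios below are invented numbers, not figures from any paper.

```python
import math


def correct_for_range_restriction(r_restricted: float, u: float) -> float:
    """Textbook correction for direct range restriction (Thorndike Case II).

    r_restricted: correlation observed among the people who were hired.
    u: SD of the predictor in the applicant pool divided by its SD among
       the hired group (u >= 1 when hiring narrowed the range).
    """
    numerator = r_restricted * u
    denominator = math.sqrt(1 + r_restricted**2 * (u**2 - 1))
    return numerator / denominator


# Hypothetical observed validity; the same data give different "true"
# validities depending on how much restriction the analyst assumes.
observed_r = 0.25
for u in (1.0, 1.5, 2.0):
    corrected = correct_for_range_restriction(observed_r, u)
    print(f"u = {u:.1f} -> corrected r = {corrected:.3f}")
```

With no restriction (u = 1) the estimate is unchanged, and it grows as the assumed restriction grows, so two analysts who disagree only about u can report noticeably different validities from the same observed correlation.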

My impression is that this is true of psychology more broadly: you can't just read an abstract which says "structured > unstructured" and conclude something useful; you have to really dig into the statistical methods and data sources, and oftentimes you even need to request materials which weren't made public to actually get a sense of what's going on.

I'm not trying to criticize organizational psychologists – reality just is very complicated. But I do think this means that the average hiring manager – or even the average hiring manager who is statistically literate – can't really get much useful information from these kinds of academic reviews.

E.g. Schmidt and Hunter 1998: "The most well-known conclusion from this research is that for hiring employees without previous experience in the job the most valid predictor of future performance and learning is general mental ability ([GMA], i.e., intelligence or general cognitive ability; Hunter & Hunter, 1984; Ree & Earles, 1992)"

I briefly skimmed the papers you cited in favor of structured interviews. I couldn't immediately tell how they were handling range restriction; no doubt a better statistician than myself could figure this out easily, but I think it proves my point that it's quite hard for the average hiring manager to make sense of the academic literature.

Thanks, to me this comment is a large update away from the value of structured interviews.

As someone else who casually reads literature on hiring assessment, I am also confused/not convinced by OP's dismissals re: GMA tests.

I think that "dismissal" is a bit of a mischaracterization. I can try to explain my current stance on GMA tests a little more. All of the research I've read that links GMA to work performance uses data from the 1950s to the 1980s, and I haven't seen what tool/test they use to measure GMA. So I think that my concerns are mainly two factors: First, I haven't yet read anything to suggest that the tools/tests used really do measure GMA. They might end up measuring household wealth or knowledge of particular arithmetic conventions, as GMAT seems to do. I don't know, because I haven't looked at the details of these older studies. Second, my rough impression is that psychological tests/tools from that era were mainly implemented by and tested on a sample of people that doesn't seem particularly representative of humanity as a whole, or even of the USA as a whole.

My current stance isn't that GMA is useless, but that there are a few obstacles that I'd like to see overcome before I recommend it. I also have only a vague knowledge of the legal risks, thus I want to encourage caution for any organization trying to implement GMA as part of hiring criteria.

If you have recommended readings that you would be willing to share, especially ones that could help me clarify the current concerns, I'd be happy to see them.

For anyone who is curious, I have a bit of an update. (low priority, feel free to skip with no hard feelings)

It has puzzled me that a finding not supported by theory[1] didn't get more press in the psychology world. It appears to have made almost no impact. I would expect that either A) there would be a bunch of articles citing it and claiming that we should change how we do hiring, or B) there would be some articles refuting the findings. I recently got the chance to ask some Industrial Organizational Psychologists what is going on here. Here is my little summary and excerpts of their answers:

My takeaway is that (roughly speaking) I didn't have enough domain knowledge and context to properly place and weigh that paper (thus supporting the claim that "average hiring manager can't really get much useful information from these kinds of academic reviews").

The finding that unstructured interviews have similar predictive validity to structured interviews, originally published as Rethinking the validity of interviews for employment decision making, and later cited in the 2016 working paper.

This and the other quotes are almost direct quotes, but I edited them to remove some profanity and correct grammar. People were really worked up about the issues with this paper, but I don't think that kind of harsh language has a place on the EA Forum.

Interesting, thanks for the follow-up Joseph! It makes sense that other meta-analyses would find different outcomes.

TLDR: I agree with you. It is complicated and ambiguous and I wish it was more clear-cut.

Regarding GMA Tests, my loosely held opinion at the moment is that I think there is a big difference between 1) GMA being a valid predictor, and 2) having a practical way to use GMA in a hiring process. All the journal articles seem to point toward #1, but what I really want is #2. I suppose we could simply require that all applicants do a test from Wonderlic/GMAT/SAT, but I'm wary of the legal risks and the biases, two topics about which I lack the knowledge to give any confident recommendations. That is roughly why my advice is "only use these if you have really done your research to make sure it works in your situation."

I'm still exploring the area, and haven't yet found anything that gives me confidence, but I'm assuming there have to be solutions other than "just pay Wonderlic to do it."

I strongly agree with you. I'll echo a previous idea I wrote about: the gap between "this is valid" and "here are the details of how to implement this" seems fairly large. If I were a researcher I assume I'd have mentors and more senior researchers that I could bounce ideas off of, or who could point me in the right direction, but learning about these topics as an individual without that kind of structure is strange: I mostly just search on Google Scholar and use forums to ask more experienced people.

Thanks for the thoughtful response!

My anecdotal experience with GMA tests is that hiring processes already use proxies for GMA (education, standardized test scores, work experience, etc.) so the marginal benefit of doing a bona fide GMA test is relatively low.

It would be cool to have a better sense of when these tests are useful though, and an easy way to implement them in those circumstances.

Regarding structured versus unstructured interviews, I was just introduced to the 2016 update yesterday and I skimmed through it. I, too, was very surprised to see that there was so little difference [Edit: difference between structured interviews and unstructured interviews]. While I want to be wary of over-updating from a single paper, I do want to read the Rethinking the validity of interviews for employment decision making paper so that I can look at the details. Thanks for sharing this info.

While I'm familiar with literature on hiring, particularly unstructured interviews, I think EA organizations should give serious consideration to the possibility that they can do better than average. In particular, the literature is correlational, not causal, with major selection biases, and is certainly not as broadly applicable as authors claim.

From Cowen and Gross's book Talent, which I think captures the point I'm trying to make well:

> Most importantly, many of the research studies pessimistic about interviewing focus on unstructured interviews performed by relatively unskilled interviewers for relatively uninteresting, entry-level jobs. You can do better. Even if it were true that interviews do not on average improve candidate selection, that is a statement about averages, not about what is possible. You still would have the power, if properly talented and intellectually equipped, to beat the market averages. In fact, the worse a job the world as a whole is at doing interviews, the more reason to believe there are highly talented candidates just waiting to be found by you.

The fact that EA organizations are looking for specific, unusual qualities, and the fact that EAs are generally smarter and more perceptive than the average hiring committee are both strong reasons to think that EA can beat the average results from research that tells only a partial story.

I think you are right. Like many things related to organizational behavior, I often think "in the kingdom of the blind the one-eyed man is king." So many organizations do such a poor job with hiring, even if we choose to merely adopt some evidence-based practices it can seem very impressive in comparison.

Great post. I have had multiple conversations lately about people's disappointing experiences with some EA orgs' hiring processes, so this is a timely contribution to the Forum.

A lot of your recommendations (structured interviews, work samples, carefully selecting interview questions for relevance to the role) are in line with my prior understanding of good hiring practices. I am less convinced, however, that behavioural interviewing is clearly better than asking about hypothetical scenarios. I can see arguments both for and against them but am unsure about which one is the net winner.

For instance, behavioural interviews may be favourable because past behaviour tends to be a strong predictor of future behaviour and because they ask about actual behaviour, not idealised situations and behavioural intentions. On the other hand, they may introduce noise, as some capable applicants will not have very good examples of "past situation X" (and vice versa), and they may introduce bias because they judge applicants based on their past behaviour, not their current capabilities.

What are your top arguments in favour of behavioural interviews? Are you aware of any high-quality studies on this topic? Your section on behavioural interviews doesn't have any references...

I agree with you completely. I think the main problem with evaluating someone based on the last time they had to do X is that perhaps this person has never had to do X (even though they are capable of doing X well).

I was always told that an applicant is less likely to make up an answer if we ask about past behavior than if we ask about how he or she would act in a future hypothetical scenario, but I actually don't have any sources for that. As a result of you asking this question I poked around a few PDFs that I have on my to-read list and I've updated my beliefs a bit: I used to strongly recommend behavioral interview questions, but now I'm going to lessen the strength of that recommendation and only moderately recommend them. I'm really glad that you brought this up, because now my view of reality is ever-so-slightly more accurate. Thank you. I'll add a sentence in the body of the post to clarify.

According to The Structured Employment Interview: Narrative and Quantitative Review of the Research Literature, it turns out that:

I will provide a caveat by saying that there seem to be different ups and downs according to different research. Regarding biases in interviews, according to one journal article, when applicants of different racial groups apply, behavioral interviews seem to have lower group differences than situational interviews: the average of the differences between the two groups was .10 with behavioral interviews and .20 with situational interviews (this is from a paper called Racial group differences in employment interview evaluations). Although there are many ways to interpret this, my rough interpretation would be that behavioral questions allow less bias to creep in. However, this is only a single journal article, and I have to admit that I am not as well-read on behavioral interviews as I would like.

Thank you for a very thoughtful reply! (and for sharing those papers :))

Thank you for the very in depth post! I've had a lot of conversations about the subject myself over the past several months and considered writing a similarly themed post, but it's always nice to find that some very talented people have already done a fantastic job carefully considering the topic and organizing the ideas into a coherent piece :)

On that note, I'm currently conducting a thesis on effective hiring / selection methods in social-mission startups with the hopes of creating a free toolkit to help facilitate recruitment in EA (and other impact-driven) orgs. If you have any bandwidth I'd love to learn more about your experience regarding the talent ecosystem in EA and see if I could better tailor my project to help address some of the gaps/opportunities you've identified.

For a counterpoint to much of this:

"avoid unstructured interviews, train your interviewers to do structured interviews"

I suggest reading Tyler Cowen / Daniel Gross's Talent. They argue structured interviews might be fine for low-skill, standard jobs, but that for "creative spark", high-impact hires and/or talent, the meta/challenging/quirky interview that they describe is superior.

They argue that most of the academic research focused on low-skill, standard jobs, so it does not apply to "elite" or any creative-spark type of job.

There is much, much more, and I would summarise more if you are interested. It pretty much runs counter to many of your points, but it might be aimed at a different thing. That said, I think many EA hires are probably of the Cowen/Gross type.

You might be right in your points, but I found Cowen/Gross interesting. Be well and thanks for writing.

Thanks for mentioning Tyler Cowen / Daniel Gross's Talent.

I'm upvoting this and commenting so that it gets high in the comments and so that there is an increased possibility of readers seeing it. While I haven't yet read the book Talent, it is on my to-read list and it seems very relevant. (It is now a few ranks higher up on my to-read list as a result of more than one person mentioning it in the comments on this post!) I also want to read it so that I can evaluate their arguments myself and judge how much I should update my opinions on hiring as a result. At face value, I do think that a lot of the research on hiring has been done on roles that are fairly standardized. That makes it hard to apply some of this research if you are hiring one operations manager at a small company, and the nature of the operations manager job is also very fluid.

As a more general thought, one of the challenges/frustrations I feel with a lot of social science, and especially a lot of evidence-based management is that there seems to be so much variation depending on context, such as one thing working well for low-skill jobs and another thing working well for more complex jobs. I really wish that there were fewer complicating and confounding factors in this kind of stuff. 🤦♂️

Brilliant post. I have been reading about and dabbling in evidence-led HR for some time, and on my to-do list was writing this exact post for the forum (although I suspect mine would've likely been of a significantly lower quality, so I'm not mad at all that you beat me to it)

I didn't see you cite it, but I assume you've come across Schmidt's 2016 update to his '85 years' paper? See here.

I wasn't aware of that China-specific example you outline in the footnote, so it's a nice reminder that cross-cultural considerations are important and often surprising. However, that aside, in general I sense that it's a win-win if applicants drop out of the process because they don't like the initial quiz-based filtering process. Self-selection of this kind saves time on both ends. While candidates might think it impersonal, I have a strong prior against using that as a reason to change the initial screening to something less predictive like CV screening.

Would be curious to see this list, for my own purposes :)

I often hear people asking for resources like this. Like you, I suspect that the significant effort investment required in closing the implementation gap is what keeps some people from implementing more predictive selection methods.

I'm not sure what your MO is, but I'd be keen to collaborate on future posts you're thinking of writing or even putting together some resources for the EA community on some of the above (e.g. example work samples, index of General Mental Ability tests, etc). Won't be offended if you prefer to work solo; we can always stay in touch to ensure we're not both going to publish the same thing at the same time :)

I'd be open to that. I don't have any posts like that on my docket at the moment, but I'll keep it in mind. If nothing else, I'd be happy for you to share a Google Doc link with me and I can use suggesting mode to make edits on a draft of yours.

I haven't come across it, but this is just the kind of thing I was hoping someone would refer me to! Thank you very much. I've had many concerns about The Validity and Utility of Selection Methods in Personnel Psychology: Practical and Theoretical Implications of 85 Years of Research Findings, mainly about the data used, and I am very happy to see an update.

Very good post. Would love to see more summaries of research on hiring but also what makes employees happy, and similar topics. A question about work samples: What are your thoughts, and what is the research, on using real (paid) work instead of work samples? Meaning, identifying some existing task that actually needs to be done, rather than coming up with an "artificial" one.

There is a lot of research on that (and on closely related topics, such as motivation, sense of belonging, citizenship behavior, etc.), and I have only barely scratched the surface of it in my own readings. One important thing to keep in mind is that the variations (different people, different roles, different cultural contexts, different company cultures, etc.) really do matter, so what tends to make a particular group of employees happy in one situation might not tell us much about what tends to make another group of employees happy in a very similar situation. Some of the research confirms what many people suspect to be true: having clearly established goals helps, having a psychologically safe environment helps, etc. Some of the research shows results that are somewhat surprising: money actually does tend to be a pretty good motivator, and when you ask people what they want and then give it to them they tend to be less happy than people for whom you make a decision.

I suspect there are whole textbooks on the topic of what tends to make employees happy, and I'd love to learn more about this research area. I have lots of loosely held opinions, but I'm familiar with very little of the research.

One framework that I am familiar with that I find helpful is the job characteristics theory, which roughly states that five different things tend to make people happy in their jobs: autonomy, a sense of completion, variety in the work, feedback from the job, and a sense of contribution. You can look into the details of it more, but an interesting and simple exercise is to try reading through The Work Design Questionnaire while thinking about your own job.

Thank you for an excellent reply. I've for a long time found the "mastery, autonomy, purpose" concept useful and think of it as true – for lack of a better word. That these three aspects determine drive/motivation/happiness to a large extent, in a work context.

I've read only a little research on work samples, and I unfortunately haven't yet read anything about being paid for work samples. Thus, I'm only able to share my own perspectives. My personal perspectives on work samples are roughly that:

It should probably not even be called a work sample under the circumstances I describe, but rather just work.

For example, if I'm hiring a communicator, I could ask them to spend two hours on improving the text of a web page. That could be a typical actual work task at some point, but this "work sample" also creates immediate value. If the improvements are good, they could be published regardless of whether that person is hired or not. This is also why you would pay an applicant for those two hours.

A very simplified example, but I hope the point comes across. And like you mention, for some types of work such isolated tasks are much more prevalent.

Thanks for this post! I am actively working on improving hiring for EA, especially to support longtermist projects, and appreciate this summary of some key best practices.

I’m currently focusing particularly on roles that are more common, such as personal assistants, where there is a high probability of replicability of the hiring process. The challenge is more on the end of “it is easy for us to find people to do the job”, where there is a strong need for filtering. This might be simplified (in some of the ways you mention, like strong parameters or a quick quiz) or outsourced to an org (like what I’m starting, possibly). While there are unlikely to be many "really-not-a-fit" candidates, the challenge seems to be sorting the "okay" from the "exceptional" and ensuring good work style and workplace culture alignment between the hire and their manager.

I currently have some of the same “topics for further investigation” written down as possible interventions to experiment with. For example, is there a need/demand for interviewing training, or a bank of work sample questions to make hiring processes easier for organizations and higher quality? The creation of these processes, especially without relevant examples to work from, is challenging and time-consuming from scratch!

I’d be interested to talk with you more about your experience and see if there’s an opportunity to collaborate on developing these kinds of things in the next few months.

As far as interviewing training and a bank of questions go, I strongly recommend training interviewers. One relevant anecdote comes from the author of Work Rules!, who mentions how at Google they created a bank of interview questions for interviewers to use, with each question intended to inquire about a particular trait. Interviewer compliance with the structure is hard, as interviewers tend to want to do their own thing, but at Google they designed a system in which the interviewer could choose questions from a set presented to them, and thus the interviewer still felt as if they were choosing which of the questions to ask the applicant. While they had a computer programmed system with lots of automation, it wouldn't be too hard to put together a spreadsheet like this with a bunch of questions corresponding to different traits.[1]
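For anyone who would rather script it than maintain a spreadsheet, here is a rough sketch of the same idea: a bank of questions grouped by trait, from which a small set is offered to the interviewer for each trait being assessed. The trait names, the question wording, and the `offer_questions` helper are all hypothetical placeholders of my own; they are not Google's questions or the contents of the spreadsheet mentioned above.

```python
import random

# Hypothetical question bank: trait -> behavioral questions for that trait.
QUESTION_BANK = {
    "teamwork": [
        "Tell me about a time you disagreed with a teammate.",
        "Describe a project where you depended on someone else's work.",
        "Tell me about a time you helped a colleague who was struggling.",
    ],
    "planning": [
        "Describe a time you had to juggle several deadlines.",
        "Tell me about a plan of yours that had to change midway.",
        "Describe how you prepared for a large, unfamiliar task.",
    ],
}


def offer_questions(bank, traits, per_trait=2, seed=None):
    """Return a few candidate questions per trait for the interviewer to pick from."""
    rng = random.Random(seed)  # a seed makes the offered set reproducible
    offered = {}
    for trait in traits:
        questions = bank[trait]
        count = min(per_trait, len(questions))
        offered[trait] = rng.sample(questions, count)
    return offered


if __name__ == "__main__":
    for trait, questions in offer_questions(QUESTION_BANK, ["teamwork", "planning"]).items():
        print(trait)
        for question in questions:
            print("  -", question)
```

The interviewer still chooses which of the offered questions to ask, but every question they can choose is tied to a trait the role actually requires, which is the part that keeps the interview structured.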

Regarding sorting the "okay" from the "exceptional," I found the idea of this Programmer Competency Matrix helpful (I think it is originally from Sijin Joseph). While I've never run a hiring campaign for a programmer, I think that this template/format provides a good example of a fairly simple way to differentiate the levels of programmers, personal assistants, or any other role. If you want to get a bit more granular than a binary accept or reject, then building a little matrix like this could be quite helpful for differentiating between applicants.
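As a rough illustration of what such a matrix could look like outside of a spreadsheet, here is a small sketch for a personal assistant role. The dimensions, the level descriptions, and the `summarize` helper are invented for the example; they are not taken from the Programmer Competency Matrix, and summing the levels is just one simple way to compare applicants.

```python
# Hypothetical matrix: each dimension lists descriptors from level 0 to level 3.
MATRIX = {
    "scheduling": [
        "Misses or double-books meetings",
        "Keeps a calendar accurate when asked",
        "Anticipates conflicts and resolves them",
        "Redesigns the schedule around the manager's priorities",
    ],
    "written_communication": [
        "Messages need rewriting before sending",
        "Clear messages with occasional errors",
        "Clear, concise, and correct messages",
        "Drafts in the manager's voice with no edits needed",
    ],
    "discretion": [
        "Shares confidential details carelessly",
        "Keeps confidences when reminded",
        "Reliably handles sensitive information",
        "Proactively flags confidentiality risks",
    ],
}


def summarize(ratings, matrix=MATRIX):
    """Validate one applicant's level per dimension and summarize the result."""
    if set(ratings) != set(matrix):
        raise ValueError("ratings must cover exactly the matrix dimensions")
    for dimension, level in ratings.items():
        if not 0 <= level < len(matrix[dimension]):
            raise ValueError(f"level out of range for {dimension}")
    weakest = min(ratings, key=ratings.get)
    return {
        "total": sum(ratings.values()),
        "max_total": sum(len(levels) - 1 for levels in matrix.values()),
        "weakest_dimension": weakest,
    }


if __name__ == "__main__":
    print(summarize({"scheduling": 3, "written_communication": 2, "discretion": 1}))
```

Reporting the weakest dimension alongside the total keeps an "exceptional" score in one area from hiding a serious gap in another.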

I'd be happy to lend a hand or share my perspectives on hiring-related efforts at any point.

I copied and adapted these questions a few years ago, but I don't remember clearly where I got them from. I think it was some kind of a US government "office of personnel" type resource, but I don't recall the specific details. EDIT: I figured out where I got them from. Work Rules! referred to US Department of Veterans Affairs, which has Sample PBI Questions, from which I copied and pasted most of that spreadsheet.