Thanks to Isaia Gisler, Isabel Dahlgren and Dawn Drescher for feedback on this post.

Many people new to AI safety find the field hard to navigate. This has been my experience: there seem to be countless orgs, and many different kinds of work that one can pursue. To make matters worse, the two main paths to impact (technical alignment research and governance) are increasingly competitive,[1] and bottlenecks are likely to exist elsewhere.[2]

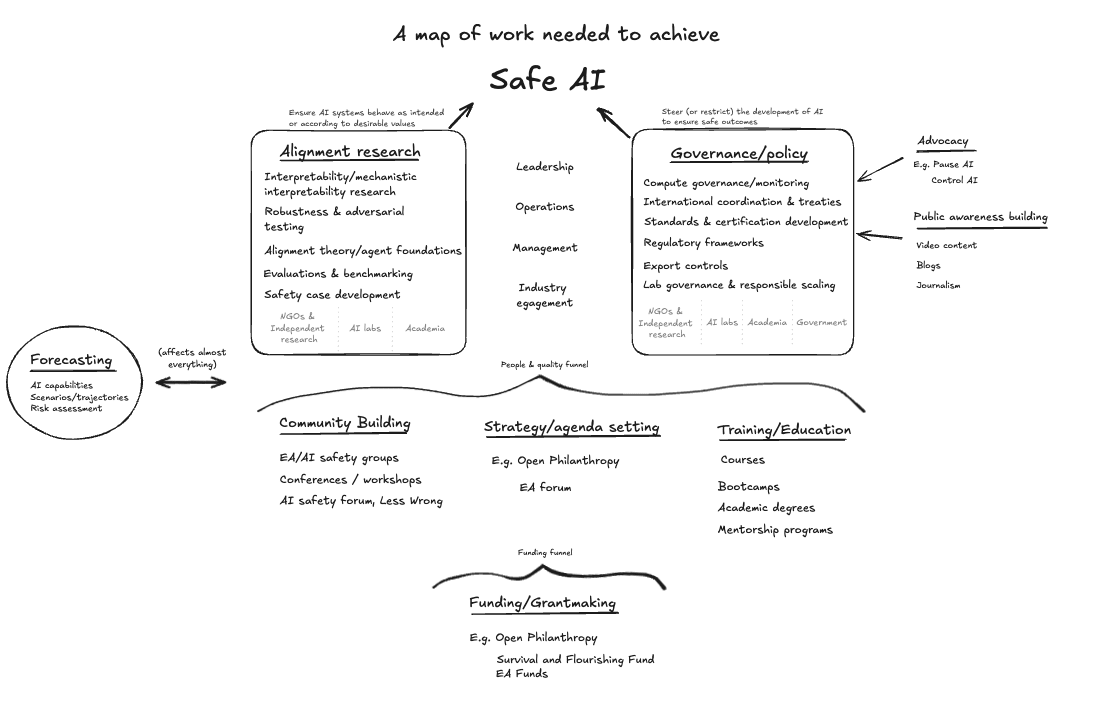

For this reason, gaining a bird's-eye view of the AI safety landscape may be important. There is already some good work in this direction: for example, this summary of current work in AI governance, and this map of different organizations in the space. But as far as I'm aware, there is no single overview of all the work being done (or that should be done) to achieve safe AI, and of how the different efforts link together. To fill this gap, I've tried to map the movement below. I hope that this map will help newcomers better understand the field, and that it can be used to identify bottlenecks or missing pieces (although I have not attempted to do so here).

You can view the map at higher magnification, and customise it for your own use, here.

Disclaimer: I am no expert on AI safety! This is based on 6-12 months of familiarizing myself with the topic and feedback from a few people with greater expertise. I expect it to be incomplete and am very happy to receive suggestions for improvement.

[1]

[2] Some bottlenecks that people have pointed to include:

- a shortage of mentors (1, 2);

- a shortage of organizations; and

- a shortage of people with leadership, management, policy and communication skills.