Crossposted from my blog.

When I started this blog in high school, I did not imagine that I would cause The Daily Show to do an episode about shrimp, containing the following dialogue:

> Andres: I was working in investment banking. My wife was helping refugees, and I saw how meaningful her work was. And I decided to do the same.
>
> Ronny: Oh, so you're helping refugees?
>
> Andres: Well, not quite. I'm helping shrimp.

(It would be a crazy rug pull if, in fact, this did not happen and the dialogue was just pulled out of thin air.)

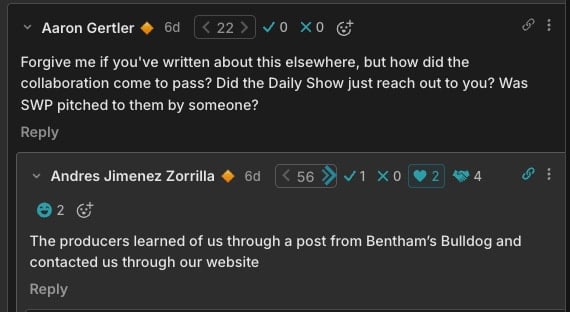

But just a few years after my blog was born, some Daily Show producer came across it. They read my essay on shrimp and thought it would make a good Daily Show episode. Thus, the Daily Show shrimp episode was born.

I especially love that they bring on an EA critic who is expected to criticize shrimp welfare (Ronny primes her with the declaration “fuck these shrimp”) but even she is on board with the shrimp welfare project. Her reaction to the shrimp welfare project is “hey, that’s great!”

In the Bible story of Balaam and Balak, Balak, King of Moab, is peeved at the Israelites. So he tries to get Balaam, a prophet, to curse the Israelites. Balaam isn’t really on board, but he goes along with it. However, when he tries to curse the Israelites, he ends up blessing them instead, on the grounds that “I must do whatever the Lord says.”

This was basically what happened on the Daily Show.

They tried to curse shrimp welfare, but they actually ended up blessing it! Rumor has it that behind the scenes, Ronny Chieng declared, “What have you done to me? I brought you to curse my enemies, but you have done nothing but bless them!” But the EA critic replied, “Must I not speak what the Lord puts in my mouth?”

By the end, Chieng was on board with shrimp welfare! There’s not a person in the episode who agrees with the failed shrimp torture apologia of Very Failed Substacker Lyman Shrimp. (I choked up a bit at the closing song about shrimp for s

Alexander Hamilton