Hi, we are Jonas, Echo, Joel, and Caleb, and we are incubating explore policy, a policy simulation sandbox for anticipating future policy developments. [cross-posted here]

Introduction

Complex systems are composed of a large number of parts that interact in a non-simple manner.

“In such systems, the whole is more than the sum of its parts… given the properties of the parts and the laws of their interaction, it is not a trivial matter to infer the properties of the whole.” — Herbert A. Simon, The Architecture of Complexity (1962).

Intelligence, on the other hand, is a species-spanning concept. AI systems share many properties of complex systems, such as nonlinear growth, unpredictable scaling and emergence, feedback loops, cascading effects, and tail risks; therefore, policymakers need to take into account the complexity underlying such systems (Kolt et al., 2025).

Background: The Limitations of Current AI Forecasting Paradigms in Complex Systems

Current AI forecasts offer probabilistic timelines for technological milestones and statistical risk assessments, but they do not provide a comprehensive view of the future for informed policymaking. Reinforcement learning and prompt engineering approaches provide modest gains for specialised tasks like forecasting (Prompt Engineering Assessments, RL for Forecasting); AI systems can also support human forecasters and improve their accuracy (AI-Augmented Predictions: LLM Assistants Improve Human Forecasting Accuracy), and can even teach themselves to predict better (Teach Themselves to Better Predict the Future). Karnofsky argues that such forecasts are most valuable when they are: (1) short-horizon, (2) on topics with good feedback loops, and (3) expressed as probability distributions rather than point estimates.

We generally have subjective storylines about AI geopolitics and timelines, e.g., China might smuggle compute from the US (AI 2027). What-if scenarios are often considered: what if the underlying assumptions change, China acts benignly, and another unexpected actor becomes the primary threat? In anchoring too heavily to one scenario, have we sidelined other plausible futures and failed to prepare for them?

While we have strong models for statistics and mathematical estimation, they offer limited insight into the shape of future society under AI. Will broader access to knowledge lead to greater social mobility, or will a concentration of computational power deepen inequality? How will AI reshape the labour market? What will economic transformation look like in 3-5 years? Many of the more profound questions surrounding transformative AI remain unanswered, and attempts to forecast its timeline face a substantial "burden of proof".

Intelligence Itself Defies Easy Prediction

Moravec's paradox describes the observation that tasks that are easy for humans, like perception, general reasoning skills, and motor skills, are surprisingly difficult for AI and robotics, while tasks that are hard for humans, like mathematics, are relatively easy for machines. This counterintuitive phenomenon highlights a gap in our understanding of intelligence and how it's achieved by different systems. (Moravec’s paradox and its implications | Epoch AI). As John McCarthy noted, “AI was harder than we thought,” and Marvin Minsky said this is because “easy things are hard”. Our limited understanding of the nature and complexity of intelligence itself limits how we think about intelligence in other systems (Melanie Mitchell). AI systems struggle to reason within complex systems due to nonlinear dynamics, emergent behaviors, feedback loops, scalability, and limited adaptability.

A. The Linearity Fallacy: When Math Meets Complex Reality

One of the most intriguing aspects of AI forecasting involves the tension between mathematical precision and social complexity. Many current approaches naturally gravitate toward linear models—input more compute and data, observe predictable increases in AI capability, and extrapolate toward superintelligence. Mathematical elegance is appealing, but it encounters challenges when we consider how social systems respond to change. Yuen Yuen Ang proposes an adaptive political economy, a paradigm that does not impose artificial assumptions of mechanical and linear properties on complex adaptive social systems.

When we introduce a new element into an ecosystem, the system doesn't simply absorb the change and continue along its previous trajectory. Instead, it adapts, evolves, and often finds entirely new equilibrium points that weren't predictable from the original conditions.
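A toy sketch can make the contrast concrete. Both functions below apply the same yearly displacement pressure from AI; the second adds one assumed feedback loop in which unemployment above a threshold triggers policy and organizational adaptation that absorbs part of the excess. Every parameter (the 2% yearly pressure, the 10% threshold, the 50% response) is a hypothetical illustration, not an estimate.

```python
def linear_projection(years: int, start: float = 0.05, pressure: float = 0.02) -> list[float]:
    """Linear view: unemployment rises by a fixed step each year (capped at 100%)."""
    return [min(1.0, start + pressure * t) for t in range(years)]


def adaptive_projection(years: int, start: float = 0.05, pressure: float = 0.02,
                        threshold: float = 0.10, response: float = 0.5) -> list[float]:
    """Adaptive view: the same yearly pressure, but once unemployment exceeds
    `threshold`, new policies and new forms of work absorb a fraction
    `response` of the excess. All parameters are hypothetical."""
    u = start
    path = []
    for _ in range(years):
        path.append(u)
        u += pressure                        # displacement pressure from AI
        if u > threshold:
            u -= response * (u - threshold)  # feedback: the system adapts
    return path


linear = linear_projection(30)
adaptive = adaptive_projection(30)
print(f"year 29: linear {linear[-1]:.2f}, adaptive {adaptive[-1]:.3f}")
```

In this toy, the adaptive path levels off near 12% (its fixed point, threshold - pressure + pressure / response) while the linear projection reaches 63% by year 29. The numbers are meaningless; the point is that a single feedback loop changes the shape of the trajectory, not just its slope.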

Consider the thoughtful economic modeling attempted at the Threshold 2030 conference, where leading economists worked to understand AI's potential economic impacts. Their analysis systematically examined how AI might replace human workers across different capability levels, with unemployment rising predictably as AI abilities expanded. Yet the analysis reveals a limitation. The models primarily considered how existing economic structures would respond to AI capabilities, but gave less attention to how those very structures might transform in response to the technology. For instance, rather than simply displacing traditional employees, AI might enable the emergence of platform micro-economies where individuals become AI-empowered micro-entrepreneurs, creating entirely new forms of economic organization that don't rely on traditional hiring relationships.

When we examine how social systems respond to major disruptions—whether economic, technological, or cultural—we often see cascading adaptive responses. Rising unemployment triggers political pressure for new policies, educational system adaptations, novel forms of economic organization, and shifting cultural expectations about work itself.

B. Surface-Level Correlation Models: Confusing Symptoms for Causes

The Attribution Problem

Some attempts to measure AI's economic impact rely on correlational studies: tracking job changes in sectors with high AI adoption, analyzing wage patterns in "AI-exposed" occupations, or measuring productivity shifts following AI deployment. While these studies provide valuable data, they share a methodological weakness: correlation alone cannot establish that AI is what is changing the job market.

The Confounding Variables Challenge

The complexity deepens when we consider the multiple factors affecting any economic indicator simultaneously. Changes in employment patterns could result from:

- Economic cycles and hiring freezes unrelated to AI

- Demographic trends, such as declining birth rates that shrink the supply of young workers

- Educational shifts toward different fields, driven by cultural changes

Simple time-series analyses cannot disentangle these interconnected influences. Even if we observe the predicted correlational patterns, we cannot confidently attribute them to AI rather than to these numerous confounding factors.

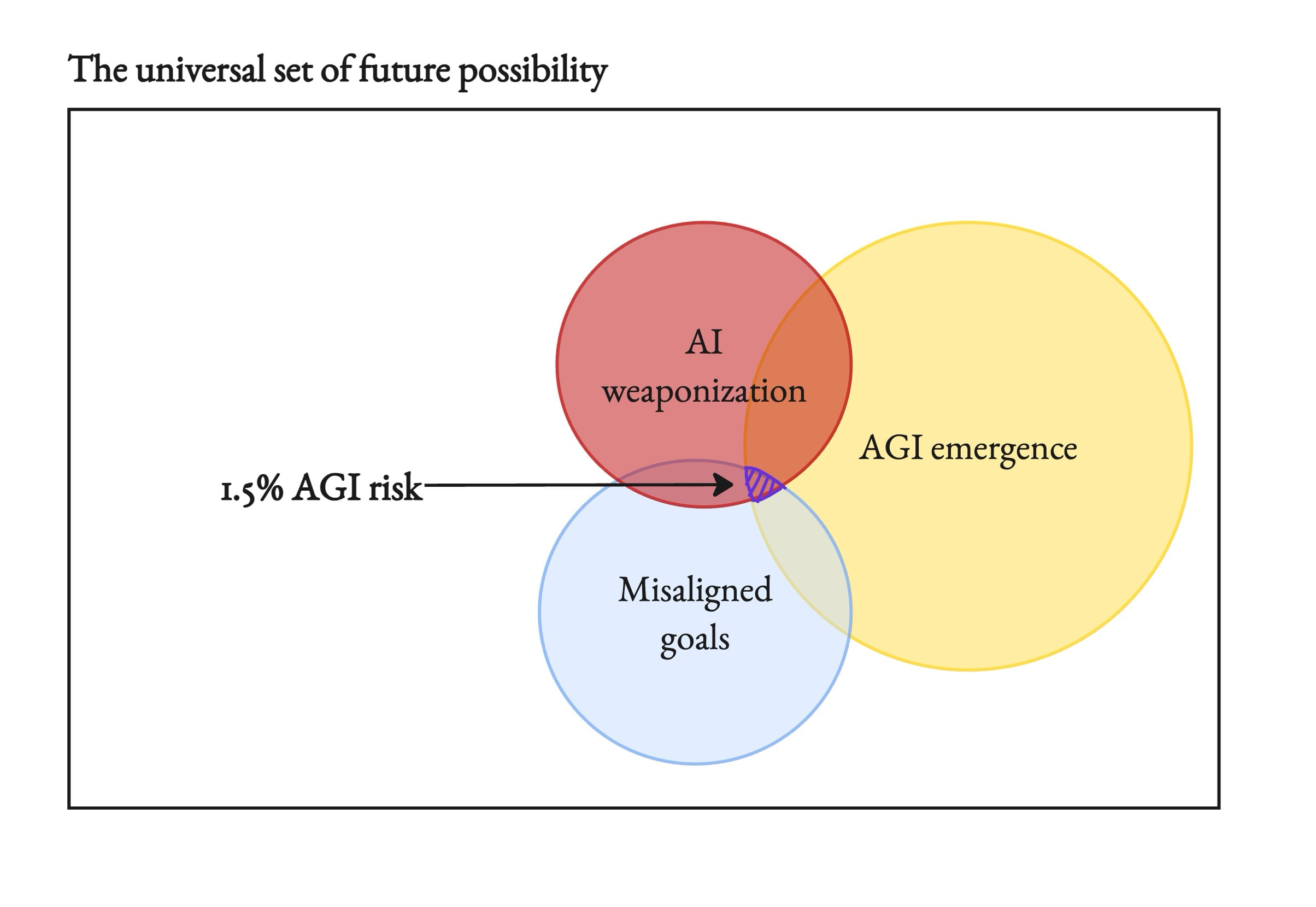

C. Abstract Risk Metrics: Numbers Without Structure

The Interpretability Crisis

Claims like "1.6% chance of catastrophic AGI" or "1.6% risk of AGI catastrophe" have limited explanatory power when their meaning is blurry.

Does a "1.6% risk" mean:

- AGI has an intrinsic 1.6% probability of randomly becoming malevolent?

- There's a 1.6% chance that a specific sequence of preconditions will align to create a catastrophe?

- Some weighted combination of different causal pathways that collectively sum to 1.6%?

Without understanding the conditional structure, these numbers provide no actionable guidance for prevention or preparation.
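To see why the conditional structure matters, consider two hypothetical risk models that produce the same 1.6% headline. The sketch assumes preconditions within a pathway are independent (so their probabilities multiply) and that pathways do not overlap (so their risks add); all numbers are invented.

```python
from math import prod


def pathway_risk(pathways: dict[str, list[float]]) -> float:
    """Total risk: each pathway needs ALL its preconditions (independent,
    so probabilities multiply); pathways are assumed not to overlap, so they add."""
    return sum(prod(conditions) for conditions in pathways.values())


# Two hypothetical structures behind the same headline number.
single_chain = {"chain": [0.2, 0.4, 0.2]}                     # one route, three preconditions
two_routes = {"route_a": [0.1, 0.1], "route_b": [0.3, 0.02]}  # two separate routes

print(f"{pathway_risk(single_chain):.3f} vs {pathway_risk(two_routes):.3f}")  # 0.016 vs 0.016
```

Both print 0.016, yet they call for different responses: in the single chain, halving any one precondition halves the total, while in the two-route model, fully blocking route A still leaves 0.6% of risk through route B.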

The Missing Conditional Logic

Effective risk assessment requires understanding the specific preconditions that enable different outcomes. Instead of asking "What's the probability of AGI catastrophe?" we also need to ask:

- What specific conditions would need to align for catastrophic outcomes? How likely are those preconditions to occur simultaneously?

- What early warning indicators would signal increasing risk?

- Which preconditions can we influence to reduce overall risk?

This conditional approach transforms abstract risk metrics into actionable frameworks for prevention and preparation.
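The third question, which preconditions to influence, can be approached as a simple leverage calculation: halve each precondition's probability in turn and measure how much total risk falls. The sketch assumes independent preconditions that multiply within a pathway and pathway risks that add (a rare-event approximation); the precondition names and probabilities are hypothetical.

```python
from math import prod

# Hypothetical preconditions and their probabilities (illustrative only).
CONDITIONS = {
    "weak_oversight": 0.4,
    "rapid_scaling": 0.5,
    "misaligned_goals": 0.1,
    "compute_concentration": 0.2,
}
# Each hypothetical pathway to catastrophe requires all of its preconditions.
PATHWAYS = [
    ["weak_oversight", "rapid_scaling", "misaligned_goals"],
    ["weak_oversight", "compute_concentration", "misaligned_goals"],
]


def total_risk(probs: dict[str, float]) -> float:
    return sum(prod(probs[c] for c in path) for path in PATHWAYS)


def leverage(probs: dict[str, float], factor: float = 0.5) -> dict[str, float]:
    """Risk reduction from scaling each precondition's probability by `factor`,
    sorted from most to least leverage."""
    base = total_risk(probs)
    gains = {name: base - total_risk({**probs, name: p * factor}) for name, p in probs.items()}
    return dict(sorted(gains.items(), key=lambda kv: kv[1], reverse=True))


for name, gain in leverage(CONDITIONS).items():
    print(f"{name:>22}: -{gain:.4f}")
```

Here the two shared preconditions (weak oversight and misaligned goals) each cut total risk in half when halved, while the pathway-specific ones remove less. That ranking of intervention points is exactly what a single headline percentage cannot provide.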

D. Intuition-Based Reasoning: Trends Masquerading as Analysis

The Trendline Extrapolation Problem

A common error is the assumption of smooth scaling: the belief that current AI scaling trends (compute, algorithms, and data) will continue along their current trendlines through multiple orders of magnitude. This ignores several challenges:

- Technical barriers: Hardware limitations, power constraints, and data quality bottlenecks

- Economic constraints: The assumption of unlimited capital availability for trillion-dollar investments

- Physical limits: Energy requirements and material constraints on chip manufacturing
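One way to see why early data cannot settle the question: an unconstrained exponential and a logistic curve with the same starting point and initial growth rate are almost indistinguishable at first and diverge only as the ceiling binds. In this sketch the ceiling `cap` is a hypothetical stand-in for combined power, capital, and fabrication limits, and all quantities are in arbitrary units.

```python
import math


def exponential(t: float, x0: float = 1.0, rate: float = 0.5) -> float:
    """Trendline extrapolation: growth continues at the same rate forever."""
    return x0 * math.exp(rate * t)


def logistic(t: float, x0: float = 1.0, rate: float = 0.5, cap: float = 1000.0) -> float:
    """Same starting point and early growth rate, but bounded by `cap`."""
    return cap / (1 + (cap / x0 - 1) * math.exp(-rate * t))


for t in (0, 4, 10, 20, 30):
    print(f"t={t:>2}  exponential={exponential(t):>12.1f}  logistic={logistic(t):>7.1f}")
```

Through t = 4 the two curves differ by less than 1%, so a fit to early observations cannot tell them apart; by t = 30 the extrapolation overshoots the bounded curve by more than three orders of magnitude.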

The Intuition-Dependence Problem in Political-Contingent Models

Some contemporary AI forecasting increasingly replaces empirical analysis with speculative political narratives, conditioning technological predictions on intuitive assumptions about political leadership decisions rather than on observable evidence.

This approach violates core forecasting principles: it replaces data-driven modeling with narrative speculation, conflating plausible stories with probabilistic analysis. While political factors influence technological development, transforming intuitive political assessments into deterministic technological forecasts lacks empirical validation of causal mechanisms.

Most critically, such frameworks fail to provide the robust scenario planning essential for evidence-based policy. By privileging single intuitive pathways over comprehensive contingency analysis, they offer limited utility for decision-makers who require methodologically sound assessments across multiple possible futures.

The Unfalsifiable Prediction Problem

Intuition-based forecasts often present speculative scenarios as inevitable outcomes rather than probabilistic possibilities. Claims like "we will have superintelligence by decade's end" are presented as certainties rather than conditional forecasts that can be evaluated and updated as new evidence emerges.

Effective predictions must be specific, time-bound, and falsifiable. They should specify the conditions under which they would be proven wrong and provide mechanisms for updating beliefs as new information becomes available.
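In practice, a falsifiable forecast can be recorded as a specific claim with a resolution date and a stated probability, then scored once it resolves. The sketch uses the Brier score, a standard scoring rule chosen here for illustration (the post does not prescribe one); the claims are placeholders.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Forecast:
    claim: str                      # specific, checkable statement
    resolves_on: date               # time-bound: when it will be judged
    probability: float              # stated probability that the claim comes true
    outcome: Optional[bool] = None  # set at resolution; None means unresolved


def brier_score(forecasts: list[Forecast]) -> float:
    """Mean squared gap between stated probability and outcome (0 = perfect;
    always answering 0.5 scores 0.25). Unresolved forecasts are skipped."""
    resolved = [f for f in forecasts if f.outcome is not None]
    if not resolved:
        raise ValueError("no resolved forecasts to score")
    return sum((f.probability - float(f.outcome)) ** 2 for f in resolved) / len(resolved)


log = [
    Forecast("Hypothetical claim A", date(2026, 1, 1), 0.8, outcome=True),
    Forecast("Hypothetical claim B", date(2026, 6, 1), 0.3, outcome=False),
    Forecast("Hypothetical claim C", date(2030, 1, 1), 0.6),  # not yet resolved
]
print(f"Brier score: {brier_score(log):.3f}")  # 0.065
```

Claim C stays unscored until its date passes; recording the probability in advance is what makes it possible to check, and later update, the forecaster's beliefs.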

What We Need Instead

The limitations of current forecasting paradigms point toward several essential requirements for more robust approaches:

Stakeholder-Centered Analysis: Rather than treating AI development as a purely technical process, we need detailed modeling of how different groups (researchers, companies, governments, workers, and consumers) will respond to AI capabilities and attempt to shape AI development to serve their interests. Getting this right is essential for a fair representation of possible futures.

Conditional Scenario Modeling: Instead of abstract risk percentages, we need a clear specification of the preconditions required for different outcomes, analysis of how likely those preconditions are to align, and identification of intervention points where different stakeholders can influence trajectories. We need to understand the situations (scenarios) we are trying to improve.

Dynamic Feedback Modeling: Forecasting approaches must account for how social systems adapt and respond to technological change, creating feedback loops that alter the original conditions and assumptions. Models need to be continually checked against real-world observations to stay accurate.

Multi-Scale Integration: We need frameworks that can integrate technical progress, institutional responses, cultural adaptation, and economic restructuring across different timescales and levels of social organization.

What Kind of Forecasting Satisfies the Requirements Above?

Stakeholder-Centered Analysis → Agent-Based Simulation

- Model humans, not just trends: Recent research shows that LLM agents grounded in interviews with 1,052 individuals predicted those participants' responses on the General Social Survey with 85% normalized accuracy, i.e., 85% as accurately as the participants reproduced their own answers when retested two weeks later.

- Behavioral consistency implies scalability: Even without perfect mental models of human cognition, these agents act in human‑like ways, grounded in the interview data. A thousand agents can simulate a tiny town; scaling to 10,000 or 100,000 agents could surface richer emergent dynamics of complex social systems.
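The 85% figure is a ratio rather than a raw hit rate, as we understand the study: the agents' accuracy at reproducing a participant's answers is divided by how consistently that participant reproduced their own answers two weeks later. The counts below are hypothetical and chosen only to show the arithmetic.

```python
def normalized_accuracy(agent_matches: int, retest_matches: int, n_questions: int) -> float:
    """Agent accuracy relative to the participant's own test-retest consistency."""
    agent_accuracy = agent_matches / n_questions    # agent vs. participant's first answers
    retest_accuracy = retest_matches / n_questions  # participant's retest vs. first answers
    return agent_accuracy / retest_accuracy


# Hypothetical participant: the agent matches 68 of 100 answers, while the person
# matches 80 of 100 of their own earlier answers when retested.
print(f"{normalized_accuracy(68, 80, 100):.2f}")  # 0.85
```

So a raw agent accuracy of 68% becomes 85% normalized accuracy when the person is only 80% consistent with themselves; the normalization reflects that an agent cannot reasonably be expected to beat human self-consistency.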

By integrating these AI agents into forecasting simulations, we can:

- Represent diverse agent types—policymakers, corporations, workers, marginalized communities—with empirical motivations and belief structures.

- Simulate inter-agent interactions in evolving scenarios, allowing emergent macro-patterns to arise naturally rather than being imposed.

- Avoid overfitting to expert assumptions by drawing on real-world interviews, enabling grounded policy design that reflects actual stakeholder incentives and perceptions.
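A minimal sketch of the idea, with simple rule-based agents standing in for interview-grounded LLM agents: each agent has a type with its own base propensity to adopt an AI tool, plus a peer-influence term from a small random network. The types, propensities, network size, and influence strength are all hypothetical. Nothing in the code prescribes an overall adoption curve; the aggregate trajectory emerges from individual decisions.

```python
import random
from collections import Counter

# Hypothetical per-step adoption propensities by agent type.
BASE_PROPENSITY = {"worker": 0.01, "firm": 0.05, "policymaker": 0.005}
TYPE_WEIGHTS = [0.80, 0.15, 0.05]  # share of each type in the population


def run_abm(n_agents: int = 300, steps: int = 40, influence: float = 0.3,
            k_neighbors: int = 6, seed: int = 1):
    rng = random.Random(seed)
    types = rng.choices(list(BASE_PROPENSITY), weights=TYPE_WEIGHTS, k=n_agents)
    # Each agent watches k random other agents (a simple directed random network).
    neighbors = [rng.sample([j for j in range(n_agents) if j != i], k_neighbors)
                 for i in range(n_agents)]
    adopted = [False] * n_agents
    history = []
    for _ in range(steps):
        previous = adopted[:]  # synchronous update: agents react to the last step
        for i in range(n_agents):
            if previous[i]:
                continue
            peer_share = sum(previous[j] for j in neighbors[i]) / k_neighbors
            if rng.random() < BASE_PROPENSITY[types[i]] + influence * peer_share:
                adopted[i] = True
        history.append(sum(adopted) / n_agents)
    adopters_by_type = Counter(t for t, a in zip(types, adopted) if a)
    return history, adopters_by_type


history, by_type = run_abm()
print([round(h, 2) for h in history[::5]], dict(by_type))
```

Because peer influence compounds, adoption typically accelerates once early adopters seed the network, an S-shaped pattern that is not written anywhere in the rules. Replacing the fixed propensities with agents conditioned on interview data is where the approach described above would go beyond this toy.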

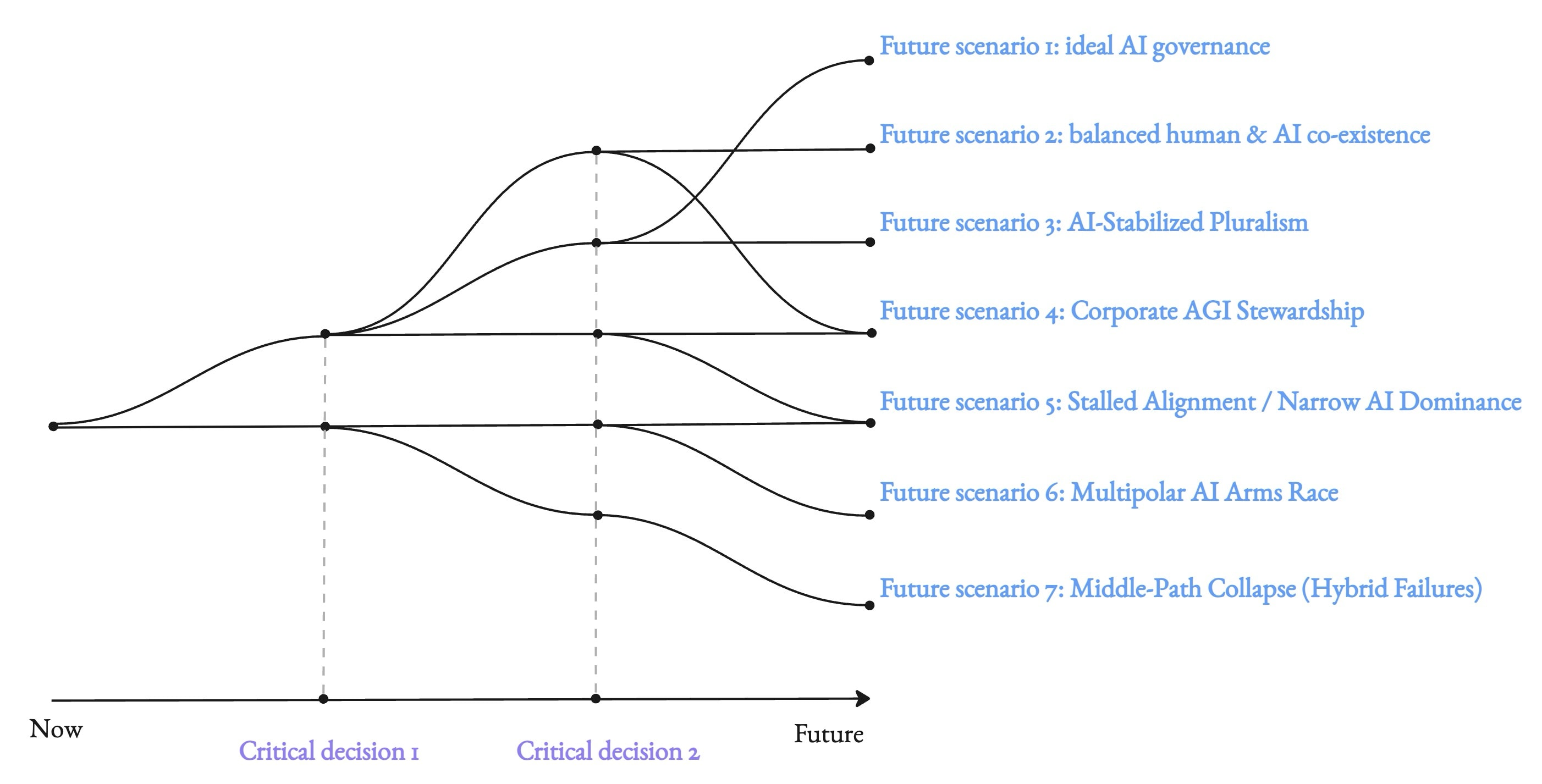

Conditional Scenario Modeling → Causal Pathway Analysis

Forecasts need to do more than offer probabilities; they must surface the conditions that make certain futures more or less likely. The future does not unfold along a single line; it branches like a tree, with each fork representing a decision point, a contingent event, or a structural condition.

- Map precondition chains: Instead of simply positing that “AI centralization will lead to surveillance states,” we specify the dependency path: e.g., [increased compute access + weak data protection + monopoly incentives → mass surveillance].

- Design for structural uncertainty: These models do not rely on precise probabilities. Instead, they offer clusters of plausible development paths, each with identifiable preconditions and signals.

- Reject paths by rejecting conditions: This approach turns forecasting into intervention planning. If a dangerous scenario requires a specific set of events, we can focus policy on disrupting that causal chain.
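Precondition chains can be encoded directly as sets, which turns "reject paths by rejecting conditions" into a set operation. Only the surveillance chain comes from the text above; the other outcomes and their precondition names are hypothetical placeholders. The second function also reports side effects, since removing a shared precondition can block desirable outcomes too.

```python
# Each outcome requires ALL of its preconditions (hypothetical scenario map).
SCENARIOS: dict[str, set[str]] = {
    "mass_surveillance": {"increased_compute_access", "weak_data_protection", "monopoly_incentives"},
    "open_competitive_ecosystem": {"increased_compute_access", "strong_antitrust"},
    "stagnation": {"compute_shortage"},
}


def reachable(present: set[str]) -> list[str]:
    """Outcomes whose preconditions are all present in the current world state."""
    return sorted(name for name, needs in SCENARIOS.items() if needs <= present)


def blocking_options(target: str) -> dict[str, list[str]]:
    """For each precondition of `target`: every outcome that removing it would rule out."""
    return {cond: sorted(name for name, needs in SCENARIOS.items() if cond in needs)
            for cond in sorted(SCENARIOS[target])}


world = {"increased_compute_access", "weak_data_protection", "monopoly_incentives", "strong_antitrust"}
print(reachable(world))
print(reachable(world - {"weak_data_protection"}))
print(blocking_options("mass_surveillance"))
```

Removing weak data protection rules out the surveillance path with no collateral loss, whereas restricting compute access would also close off the open, competitive path. Surfacing that kind of trade-off is what makes the analysis useful for intervention planning.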

Scenario-Based Policy Testing → Integrated Policy Sandboxes

Forecasting should not just describe what might happen; it should actively simulate how different policies would change what happens.

- Test interventions in context: Inject Universal Basic Income, data localization laws, corporate taxation models, or decentralized identity systems into scenario simulations. Observe which actors adapt, resist, or collapse under different conditions.

- Design low-cost policy experiments: Instead of testing high-stakes policies in the real world, these simulated sandboxes allow for agile exploration of long-term consequences.

- Reveal second to nth-order effects: Good policy modeling doesn’t just show immediate impact. It helps surface the knock-on effects years down the line, where real consequences play out.
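A policy sandbox, in its simplest form, runs the same model with and without an intervention and compares trajectories, including variables the policy does not target directly. The sketch below uses a hypothetical three-state labour model (employed, short-term unemployed, long-term unemployed) and a generic retraining-style boost to re-employment as a stand-in for the interventions listed above; all rates are illustrative.

```python
def simulate(years: int = 10, displacement: float = 0.05, reemploy_short: float = 0.5,
             reemploy_long: float = 0.1, policy_start=None, policy_boost: float = 0.2):
    """Yearly flows between employed (e), short-term (s) and long-term (l) unemployed.
    Shares always sum to 1. The policy raises both re-employment rates from `policy_start`."""
    e, s, l = 1.0, 0.0, 0.0
    path = []
    for year in range(years):
        active = policy_start is not None and year >= policy_start
        rs = min(1.0, reemploy_short + (policy_boost if active else 0.0))
        rl = min(1.0, reemploy_long + (policy_boost if active else 0.0))
        displaced, back_from_s, back_from_l = displacement * e, rs * s, rl * l
        e, s, l = (e - displaced + back_from_s + back_from_l,  # AI displaces, some return
                   displaced,                                   # newly displaced this year
                   l - back_from_l + (s - back_from_s))         # those not rehired become long-term
        path.append({"year": year, "employed": e, "short_term": s, "long_term": l})
    return path


baseline = simulate()
with_policy = simulate(policy_start=2)
for b, p in zip(baseline[::3], with_policy[::3]):
    print(f"year {b['year']}: long-term unemployed {b['long_term']:.3f} -> {p['long_term']:.3f}")
```

The intervention targets re-employment, but the clearest difference appears in the long-term unemployment pool, a second-order effect that accumulates over several years in the baseline run.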

Outcome-Optimized Simulation: Goal-Directed Forecasting

Traditional simulations explore open-ended possibilities but can be computationally intensive and unfocused. Optimizing for a specific variable or end-state—treating it as a "target function" to minimize or maximize—efficiently probes high-stakes scenarios. This uses AI techniques like reinforcement learning (agents maximize target-aligned rewards) or backward induction (reverse-engineering from outcomes). For example, define an objective like "AGI takeover probability >90%" and steer simulations to reveal pathways and sensitivities.
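A minimal sketch of goal-directed search, assuming a toy simulator: hill climbing (a simple heuristic search used here as a stand-in for the reinforcement learning or genetic algorithms mentioned) adjusts hypothetical scenario parameters to maximize the compute share held by the largest actor, then checks whether a target of more than 50% is reached. The dynamics, parameter names, and bounds are all invented for illustration.

```python
import random

# Hypothetical scenario parameters and their allowed ranges.
BOUNDS = {"scaling_rate": (0.0, 0.5), "alliance_rate": (0.0, 1.0), "regulation": (0.0, 1.0)}


def compute_share(params: dict[str, float], steps: int = 20) -> float:
    """Toy stand-in for a full simulation: share of compute held by the largest actor.
    Logistic-style growth, damped by regulation; growth stays <= 1 within BOUNDS."""
    share = 0.1
    growth = (params["scaling_rate"] + 0.5 * params["alliance_rate"]) * (1 - params["regulation"])
    for _ in range(steps):
        share += growth * share * (1 - share)
    return share


def hill_climb(objective, iters: int = 500, step: float = 0.1, seed: int = 0):
    """Keep a random perturbation of the parameters only if it raises the objective."""
    rng = random.Random(seed)
    best = {k: rng.uniform(lo, hi) for k, (lo, hi) in BOUNDS.items()}
    best_score = objective(best)
    for _ in range(iters):
        candidate = {k: min(hi, max(lo, best[k] + rng.gauss(0, step)))
                     for k, (lo, hi) in BOUNDS.items()}
        score = objective(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


params, share = hill_climb(compute_share)
print({k: round(v, 2) for k, v in params.items()}, f"share={share:.2f}", "target reached:", share > 0.5)
```

The parameters the search settles on are the interesting output: they describe one route to the target, which a full simulator could then examine in detail and audit.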

- Reduced computational demands through focused exploration: Optimizing prunes irrelevant branches via heuristic search or gradient-based methods, enabling large-scale (e.g., 100,000 agents) and multi-timescale forecasting on limited hardware, prioritizing depth for risks like AI-driven inequality.

- Probing "what-if" questions to uncover hidden pathways: Frame queries as optimization problems, e.g., "What leads to AGI takeover?" Define the target (e.g., AI controlling >50% global compute), then iteratively adjust variables (agent behaviors, policies) via loss functions or genetic algorithms, exposing unexpected routes like corporate alliances under weak regulations + rapid scaling.

- Enhanced risk assessment for worst-case scenarios: Optimize towards queried outcomes (e.g., catastrophic misalignment) to identify risk factors and quantify robustness to perturbations.

- Built-in safeguards against misuse: Targeted requests enable auditing; flag malicious queries (e.g., harmful exploits) via pattern matching or ethical filters, embedding civic oversight in "foresight infrastructure."

- Sensitivity and robustness analysis: Optimize across variables (e.g., minimize inequality or maximize safety), testing how changes (e.g., +10% compute efficiency) affect outcomes in multiple runs.
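Sensitivity analysis over multiple runs can be sketched with common random numbers: each seed is used for both the baseline and the perturbed run, so simulation noise largely cancels and the measured difference reflects the parameter change. The model here is a hypothetical, deliberately linear "inequality index"; with a real simulator the effects would be nonlinear and the spread across seeds would carry information.

```python
import random
import statistics


def inequality_index(params: dict[str, float], rng: random.Random) -> float:
    """Hypothetical noisy model: inequality rises with compute efficiency, falls with redistribution."""
    noise = rng.gauss(0, 0.05)
    return 0.3 + 0.2 * params["compute_efficiency"] - 0.25 * params["redistribution"] + noise


def sensitivity(model, base: dict[str, float], runs: int = 200, bump: float = 0.10):
    """Mean and spread of the outcome change when each parameter is raised by `bump` (+10%)."""
    effects = {}
    for name in base:
        bumped = {**base, name: base[name] * (1 + bump)}
        diffs = [model(bumped, random.Random(seed)) - model(base, random.Random(seed))
                 for seed in range(runs)]
        effects[name] = (statistics.mean(diffs), statistics.stdev(diffs))
    return effects


base = {"compute_efficiency": 1.0, "redistribution": 0.5}
for name, (mean, spread) in sensitivity(inequality_index, base).items():
    print(f"+10% {name}: mean change {mean:+.4f} (sd {spread:.4f})")
```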

Multi-Timescale Forecasting: See Both the Trees and the Forest

- Short-term (1–5 years): Focus on technical deployment rates, early labor displacement patterns, and regulatory bottlenecks.

- Mid-term (5–10 years): Simulate systemic adaptations—changes in education systems, social trust dynamics, corporate consolidation risks, and early signs of policy feedback.

- Long-term (10–30 years): Model civilizational transformations, shifts in the meaning of labor, democratic reconfiguration, and new geopolitical alignments triggered by AI-based power asymmetries.

Ultimately, what we need is not just better forecasting tools, but foresight infrastructure: civic, institutional, and epistemic frameworks that can absorb, adapt to, and iterate on predictive models in response to real-world signals.

What does an ideal simulation output look like?

1. Exploratory Analysis Mode: Multi-Pathway Visualization

An interactive dashboard enabling real-time parameter manipulation and comprehensive scenario exploration:

- Narrative Branching Architecture
  - Expandable tree visualization presenting self-contained storylines
  - Node-based divergence points revealing causal mechanisms (e.g., "stronger data protections → surveillance prevention")
  - Multi-timescale event mapping (short/mid/long-term consequences)

- Stakeholder Impact Assessment
  - Quantified benefit-harm matrices across population segments
  - Equity scoring metrics with distributional analysis
  - Public-access trade-off documentation for transparency

- Visualization Infrastructure
  - Interactive charts and heatmaps for societal change modeling
  - Export capabilities for policy integration (APIs, reports)
  - Collaborative annotation systems for iterative refinement
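The expandable storyline tree could rest on a simple recursive data structure: each node is a divergence point tagged with its time horizon and a probability conditional on its parent, and every root-to-leaf path is one self-contained storyline. The schema and the example numbers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ScenarioNode:
    """One divergence point in an expandable storyline tree (hypothetical schema)."""
    label: str                  # e.g. "stronger data protections"
    horizon: str                # "short", "mid" or "long" term
    probability: float = 1.0    # conditional on reaching the parent node
    children: list["ScenarioNode"] = field(default_factory=list)

    def add(self, child: "ScenarioNode") -> "ScenarioNode":
        self.children.append(child)
        return child


def storylines(node: ScenarioNode, prefix: tuple = (), p: float = 1.0):
    """Yield each root-to-leaf storyline with its joint probability."""
    path = prefix + (node.label,)
    p *= node.probability
    if not node.children:
        yield path, p
    for child in node.children:
        yield from storylines(child, path, p)


root = ScenarioNode("baseline", "short")
strong = root.add(ScenarioNode("stronger data protections", "mid", 0.6))
strong.add(ScenarioNode("surveillance prevented", "long", 0.9))
strong.add(ScenarioNode("protections eroded", "long", 0.1))
root.add(ScenarioNode("weak data protections", "mid", 0.4))

for path, p in storylines(root):
    print(f"{p:.2f}  " + " -> ".join(path))
```

Keeping probabilities conditional on the parent means a node can be expanded or re-weighted in the dashboard without touching the rest of the tree.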

2. Outcome Optimization Mode: Directed Scenario Analysis

A game-theoretic interface for testing specific future conditions:

- Dynamic Policy Simulation
  - Baseline-to-outcome pathway generation through variable adjustment
  - Inflection point identification with causal attribution
  - Real-time feedback on decision consequences

- Risk Assessment Framework
  - Automated impact audits with stakeholder-specific outcomes
  - Benefit flow visualization through Sankey diagrams
  - Mandatory ethical flagging for high-harm scenarios
  - Public disclosure requirements for accountability

- Interactive Analysis Tools
  - Mid-simulation intervention testing capabilities
  - Robustness scoring metrics
  - Multiplayer policy gaming for collaborative exploration
  - Integration with decision-support systems for prescriptive outputs

Both modes emphasize reproducibility through searchable databases and ensure outputs transition from predictive to prescriptive utility for evidence-based policy formulation.

We are close to simulations with realistic social reactions, and we could build this

Perfect prediction of chaotic social systems may be impossible, but we can replicate their essential dynamics. Instead of forecasting exact outcomes, we can build simulations that capture social complexity.

The Corrupted Blood Incident: An Accidental Epidemic Laboratory

In September 2005, World of Warcraft inadvertently created a natural experiment in epidemic dynamics when a programming error enabled disease spread beyond intended boundaries. The outbreak affected millions of players, with transmission vectors including asymptomatic pet carriers and NPC infection reservoirs, achieving reproductive rates of 10² per hour in urban centers.

Methodological Insights from Emergent Behaviors

The incident revealed critical limitations of traditional SIR models, which assume uniform population mixing and fail to capture behavioral heterogeneity:

- Adaptive responses: Players spontaneously developed altruistic intervention (healers rushing to infected areas), voluntary quarantine, and deliberate disease spreading

- Network effects: Non-uniform social structures and resurrection mechanics created transmission dynamics unpredictable from initial conditions

- Behavioral undermining: Individual choices systematically undermined optimal epidemic control strategies

These emergent properties demonstrate why mathematical models alone cannot capture complex social dynamics—a limitation directly applicable to AI impact forecasting.
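The difference can be sketched in a few lines. The first function is a discrete-time SIR model in which everyone mixes uniformly; the second keeps the SIR transmission and recovery rates for ordinary agents (contacts × per-contact transmission = beta) but gives some agents behaviors loosely modeled on the incident: "cautious" agents self-quarantine while infected, and a few "spreaders" make five times as many contacts. The behavior shares and multipliers are hypothetical; the sketch only lets you compare how behavioral assumptions that the SIR equations cannot express reshape the epidemic curve.

```python
import random


def sir(beta: float = 0.3, gamma: float = 0.1, i0: float = 0.01, days: int = 160) -> list[float]:
    """Homogeneous-mixing SIR: returns the infected share each day."""
    s, i, r = 1 - i0, i0, 0.0
    infected = []
    for _ in range(days):
        new_infections, recoveries = beta * s * i, gamma * i
        s, i, r = s - new_infections, i + new_infections - recoveries, r + recoveries
        infected.append(i)
    return infected


def agent_epidemic(n: int = 2000, days: int = 160, contacts: int = 3, p_transmit: float = 0.1,
                   gamma: float = 0.1, cautious_share: float = 0.3, spreader_share: float = 0.02,
                   seed: int = 0) -> list[float]:
    """Agent-based version with heterogeneous (hypothetical) behaviors."""
    rng = random.Random(seed)
    kinds = rng.choices(["normal", "cautious", "spreader"],
                        weights=[1 - cautious_share - spreader_share, cautious_share, spreader_share],
                        k=n)
    state = ["S"] * n
    for idx in rng.sample(range(n), max(1, n // 100)):  # start with 1% infected
        state[idx] = "I"
    infected_share = []
    for _ in range(days):
        infectious = [a for a in range(n) if state[a] == "I"]
        for a in infectious:
            if kinds[a] == "cautious":
                continue  # voluntary quarantine: no contacts while infected
            k = contacts * 5 if kinds[a] == "spreader" else contacts
            for b in rng.sample(range(n), k):
                if state[b] == "S" and rng.random() < p_transmit:
                    state[b] = "I"
        for a in infectious:
            if rng.random() < gamma:
                state[a] = "R"
        infected_share.append(state.count("I") / n)
    return infected_share


homogeneous, heterogeneous = sir(), agent_epidemic()
print(f"peak infected: SIR {max(homogeneous):.2f}, agent-based {max(heterogeneous):.2f}")
```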

Implications for AI Impact Simulation

The Corrupted Blood incident validates agent-based modeling for AI scenarios through three parallels:

- Both contexts feature behavioral unpredictability where individual responses (altruism, sabotage, adaptation) aggregate into system-level outcomes impossible to deduce from initial specifications.

- Both demonstrate stakeholder heterogeneity where diverse agent types respond differently to systemic changes, creating feedback loops.

- Both demonstrate feasibility at scale, with millions of concurrent agents generating authentic social dynamics under controlled, observable conditions.

This natural experiment establishes that comprehensive AI impact simulation—incorporating behavioral diversity, emergent properties, and multi-scale dynamics—is not merely theoretically sound but empirically validated through demonstrated complex system replication.

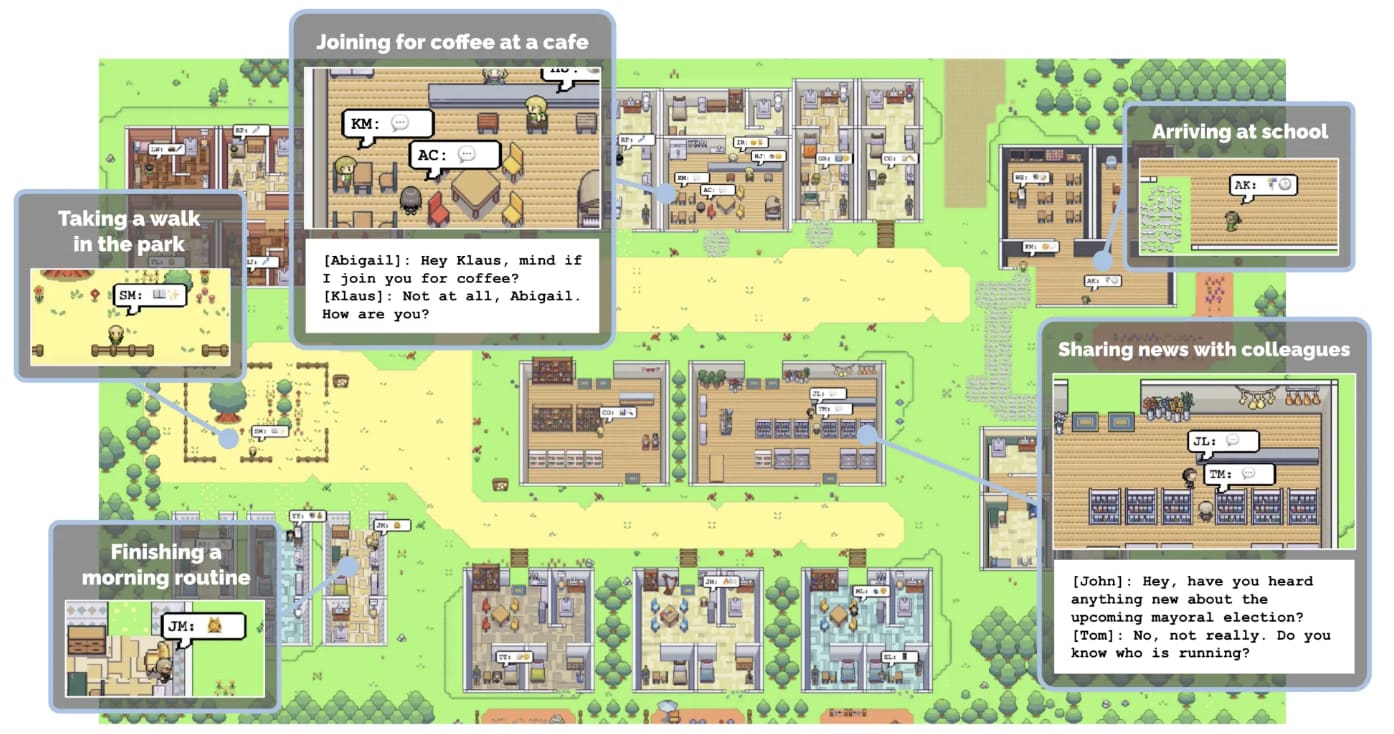

Stanford's Generative Agents: Technical Validation of Social Simulation

The Stanford research directly validates the technical feasibility of the stakeholder-centered, agent-based simulation approach advocated for AI impact modeling. In their Smallville environment, 25 agents demonstrated sophisticated emergent behaviors that would be impossible to predict from individual agent specifications alone.

- Information cascades: Sam's mayoral candidacy spread from one agent to eight (32%) through natural conversation networks, while Isabella's Valentine's Day party information reached thirteen agents (52%)

- Relationship evolution: Network density increased from 0.167 to 0.74 over two simulation days as agents formed new connections based on shared interests and interactions

- Coordinated emergence: Isabella's party planning involved autonomous invitation spreading, decoration coordination, and actual attendance by five agents—all emerging from a single initial intent
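The percentages above follow from simple ratios over the 25-agent population, and network density is observed ties divided by possible ties. The sketch assumes undirected ties (n(n-1)/2 possible pairs); the study's exact definition may differ, so the edge counts in the comments are only illustrative.

```python
from itertools import combinations


def density(n_nodes: int, edges: set[frozenset[int]]) -> float:
    """Undirected network density: observed ties / possible ties n(n-1)/2."""
    return len(edges) / (n_nodes * (n_nodes - 1) / 2)


def reach(informed: int, population: int) -> float:
    """Share of the population that a piece of information reached."""
    return informed / population


N = 25
all_pairs = [frozenset(p) for p in combinations(range(N), 2)]  # 300 possible ties
print(f"{reach(8, N):.0%} and {reach(13, N):.0%}")              # 32% and 52%
# Under the undirected assumption, density 0.167 is about 50 ties and 0.74 about 222.
print(f"{density(N, set(all_pairs[:50])):.3f}, {density(N, set(all_pairs[:222])):.2f}")
```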

Technical scalability indicators:

- Agents successfully maintained behavioral consistency across extended timeframes while adapting to new circumstances

- Complex multi-agent coordination (party planning, election discussions) emerged without explicit programming

- The system demonstrated robustness with 25 simultaneous agents, suggesting scalability to larger populations necessary for comprehensive AI impact modeling

Connection to Non-Linear Social Dynamics

While the epidemic showed emergent crisis responses in existing virtual worlds, the Generative Agents research shows that sophisticated social behaviors can be deliberately architected and scaled.

Convergent evidence for social modeling:

- Behavioral authenticity: Both systems captured authentic human responses—crisis altruism and voluntary quarantine in World of Warcraft, relationship formation and information sharing in Smallville

- Unpredictable emergence: Neither system could predict specific outcomes from initial conditions—epidemic spread patterns versus party coordination success

- Cascading social effects: Individual decisions created system-wide changes through social networks rather than linear cause-effect relationships

Together, these examples establish that comprehensive AI impact simulation—incorporating stakeholder diversity, conditional scenario modeling, and multi-timescale dynamics—is not only theoretically sound but technically achievable with current computational resources.

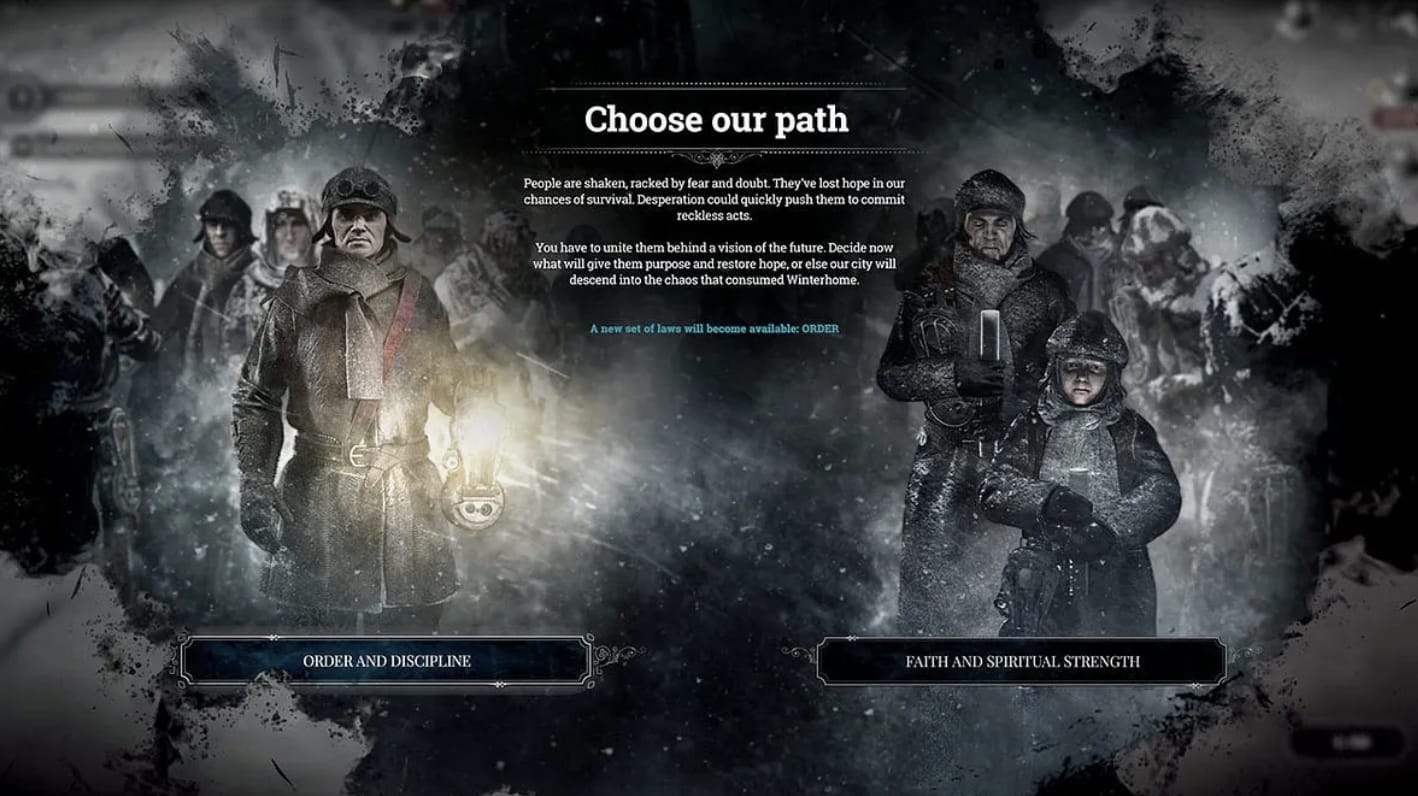

Frostpunk: Critical Decisions and Branching Societal Outcomes

Frostpunk, a city-building survival game set in a volcanic winter apocalypse, presents another compelling example of realistic social simulation under crisis conditions. Unlike the Corrupted Blood incident, which demonstrated emergent agent-based behaviors, Frostpunk operates through programmed decision trees and scripted responses. However, it is an excellent showcase of how critical decisions create branching pathways and diverse societal outcomes, a crucial element for understanding AI impact scenarios.

Critical decision-making mechanisms include:

- Policy choices: Legislation on labor, healthcare, and emergency measures with cascading societal effects

- Resource trade-offs: Immediate survival needs versus long-term sustainability investments

- Governance paths: Authoritarian versus spiritual approaches that fundamentally reshape social structures

- Moral boundaries: Individual decisions that accumulate into permanent shifts in societal values

These decisions create branching pathways across temporal scales, illustrating multi-timescale forecasting principles essential for AI impact modeling:

- Short-term impacts: Resource allocation affects immediate survival rates and social tension

- Mid-term adaptations: Early policy choices evolve into broader governance and economic systems

- Long-term outcomes: Survival strategies determine whether societies maintain democratic structures or evolve into authoritarian regimes

Conditional pathway modeling in practice: The game exemplifies how catastrophic outcomes aren't random but result from aligned preconditions: low resources + harsh laws + ignored warnings = societal revolt. Conversely, strategic interventions at key decision points—investing in hope-boosting infrastructure during stable periods—can steer trajectories toward positive equilibria.

These dynamics directly parallel AI deployment scenarios where immediate technical decisions (short-term), regulatory adaptations (mid-term), and civilizational value shifts (long-term) interact to determine societal outcomes, validating the importance of multi-timescale simulation frameworks for robust AI impact forecasting.

Limitations and risks of the forecasting system

- Limited "learning" and development in AI agents: Unlike humans, who genuinely learn from experiences, adapt creatively, and evolve personal worldviews over time, AI agents rely on predefined prompts, static interview data, and algorithmic updates. They simulate adaptation through reflections and planning, but lack intrinsic motivation or consciousness. In long-term scenarios (10-30 years), this could underrepresent emergent properties in the complex system, such as cultural paradigm shifts or grassroots innovations, leading to overly rigid or repetitive simulations that miss "black swan" societal transformations.

- Scalability and representational gaps in emergent properties: While scaling to 10,000-100,000 agents is theoretically possible, current systems struggle with the combinatorial explosion of interactions over extended timescales. Long-term emergent phenomena, such as evolving norms around AI ethics or intergenerational value changes, might not manifest authentically.

- Data and bias inheritance: Interviews alone may not capture the full complexity of human personalities and cultural backgrounds needed for fair diversity, and agents inherit whatever biases the interview data contains.

Beyond technical limits, simulations carry societal risks if misused or misinterpreted.

- Misuse for agendas or malicious ends: Outputs could be exploited to craft harmful narratives—e.g., corporations downplaying risks or actors probing AGI pathways for acceleration. If simulations reveal ways to benefit one group while harming others (e.g., nudging reality toward inequality), this could lead to real-world manipulation without oversight.

- Spread of misinformation or false certainty: Compelling "stories" might be mistaken for predictions, fueling panic or overreactions; cherry-picked analyses could mislead, eroding trust and creating echo chambers.

- Data collection and privacy risks: Scraping social media without consent invades privacy and creates unauthorized digital twins, as criticized in public forums, potentially exposing individuals to harms like biased representations or data breaches.

- Resource and access inequalities: High-compute tools favor elites, concentrating narrative power.

Potential solutions (we are still figuring out more and better ones; help us):

- Constrain malicious requests and commercial exploitation:
  - Require public disclosure of all multi-timescale forecasts and optimization outputs, alerting everyone to potential consequences and enabling monitoring of bad actors.
  - For specific queries, systems should flag and reject malicious requests (similar to LLM harm filters), backed by ethical audits and governance boards to prevent exploitation.
  - Limit commercial use through regulations that prohibit profit-driven applications (e.g., banning insurance companies from using simulations to maximize revenue at the expense of overall medical benefits), ensuring outputs prioritize societal welfare over monopoly gains.
  - By prioritizing steerability in these sandboxes, we can simulate and refine mitigations, such as ethical filters and public oversight, to ensure simulations guide positive outcomes.

- Ethical data collection:
  - Use anonymous interviews (e.g., secure, consent-based platforms without identifiable information) to build profiles. Implement binding contracts for data security, including encryption, limited retention, and third-party audits.
  - As an alternative, invite participants to anonymous gameplay (e.g., Minecraft sessions), recording preferences non-invasively and voluntarily to capture authentic behaviors while respecting privacy and improving representation.

- Promote public interest: Adopt open-source frameworks and subsidized cloud access for non-profits and civic groups, with international standards to democratize the tools.

- Equity and inclusivity:
  - Mandate diverse data sourcing (e.g., quotas for underrepresented groups in interviews) and bias audits in agent design.
  - Ensure simulations highlight impacts on marginalized communities.

Dynamic sandboxes emphasize steerability, letting us test mitigations like policy interventions before reality does, turning potential hopelessness into proactive control.

A few examples to put everything together: how does the simulation provide insights?

Note: the cases below are not from real simulations. They are meant to show how simulation could provide insight.

Example 1: LLM Deployment in a 500-Person Community

Initial Conditions:

- Population: 500 agents

- Technology: Open-source LLM hub (50% productivity boost for compatible tasks)

- Occupation Distribution: 60% non-digital, 30% white-collar digital, 10% entrepreneurs/freelancers

Temporal Evolution of Community Impacts:

Finding: Initial inequality levels function as amplifiers—AI deployment either reduces disparities (low-inequality baseline) or accelerates stratification (high-inequality baseline), with divergence observable within 12 months and becoming irreversible by year 3.

- Policy leverage points: Introducing training/upskilling within 3–6 months mitigates polarization between tech-savvy and non-tech-savvy populations.

- Demographic vulnerability: Older or less connected agents disproportionately face job displacement; without inclusive infrastructure, the simulation forecasts long-term inequity.

- Labor substitution vs. augmentation: The dominant employer mindset—whether to augment or replace staff—drastically alters system-wide outcomes.

- Emergent behavior: Freelance “AI micro-entrepreneurship” wasn't explicitly coded but emerged from agents responding to job loss and market signals.
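The labor substitution point can be illustrated with back-of-the-envelope arithmetic. The hypothetical rule below assumes that employers who choose replacement hold output constant and cut staff, while augmenting employers keep everyone and take the gain as extra output. It is a static toy, not an agent-based model, and it deliberately does not script micro-entrepreneurship, which in the scenario above is meant to emerge rather than be coded.

```python
import math


def displaced_workers(staff: int, boost: float, replace_share: float) -> int:
    """Toy estimate of displacement under a hypothetical substitution rule.

    staff:         digital workers whose tasks the LLM can boost
    boost:         productivity gain per worker (0.5 means +50%)
    replace_share: fraction of that staff whose employers choose replacement

    Replacing employers hold output constant and shed the surplus workers;
    augmenting employers keep everyone and take the gain as extra output,
    so they contribute no displacement.
    """
    replaced_pool = round(staff * replace_share)
    # Workers needed for the same output once each produces (1 + boost) units.
    retained = math.ceil(replaced_pool / (1.0 + boost))
    return replaced_pool - retained


for share in (0.0, 0.5, 1.0):
    print(share, displaced_workers(staff=150, boost=0.5, replace_share=share))
```

For the 150 white-collar digital workers in this example and a 50% boost, replacement shares of 0, 0.5 and 1.0 give 0, 25 and 50 displaced workers, so employer mindset alone spans the range from no displacement to a third of the digital workforce.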

| Timeframe | Economic Effects | Social Dynamics | Emergent Behaviors |
|---|---|---|---|
| Short-term (0-6 months) | • White-collar efficiency gains • Reduced overtime, questioning of staffing needs • Initial job displacement (1-2 positions) | • Shift in leisure patterns • Early adopters vs. traditional workers divide | • YouTube tutorial searches for "ChatGPT monetization" • Substack newsletter launches • AI-powered startup ideation |
| Mid-term (6-18 months) | • Economic stratification emerges • 30% clerical staff reduction • 3 firms eliminate junior analyst roles | • Growing demand for upskilling programs • "AI-enabled" vs "AI-displaced" tensions • Changed work-life patterns | • Micro-entrepreneurship proliferation • Formation of AI service guilds • Gig economy expansion |
| Long-term (2-5 years) | Positive Path: • Public LLM co-working center • AI wage adjustments • Narrowed digital divide Negative Path: • Wage suppression • Black market "prompt labor" • Rising inequality | Positive Path: • Community cohesion • Inclusive growth Negative Path: • Social fragmentation • Informal labor markets | • Adaptive economic reorganization • Novel employment categories • Community-driven solutions (or lack thereof) |

Example 2: Comparative Analysis of 5,000-Person Societies

Initial societal situation:

| Dimension | Society A (Low Inequality) | Society B (High Inequality) |
|---|---|---|
| Wealth Distribution | Gini ≈ 0.25-0.30; strong middle class | Gini ≈ 0.50-0.60; top 10% owns 70% of wealth |
| Institutional Framework | • Universal public services • Participatory governance • Cooperative ecosystem | • Weak public services • Low institutional trust • Corporate dominance |
| Digital Access | Near-universal broadband | 20% with high-speed access |
| AI Deployment Model | Public infrastructure + co-op pools | Corporate/elite concentration |
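The wealth rows above are stated as Gini coefficients. For reference, G is the mean absolute difference between all pairs of agents divided by twice the mean, G = Σ_i Σ_j |x_i − x_j| / (2n²x̄), which a simulation would recompute from agent wealth at each step. A minimal Python version, using the equivalent sorted-rank form and assuming non-negative wealth:

```python
def gini(wealth: list[float]) -> float:
    """Gini coefficient of non-negative wealth values.

    Uses the sorted-rank form G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n,
    with x sorted ascending and i = 1..n, which equals the pairwise definition.
    """
    xs = sorted(wealth)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0  # convention: no wealth means no measurable inequality
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2.0 * weighted / (n * total) - (n + 1) / n


print(gini([1, 1, 1, 1]))  # 0.0 (perfect equality)
stylized_b = [70.0] + [30.0 / 9] * 9  # one agent of ten holds 70% of wealth
print(round(gini(stylized_b), 3))  # 0.6
```

As a sanity check, equal wealth gives 0, and a stylized 10-agent population in which the top 10% holds 70% of wealth and the rest is split evenly gives 0.6, the top of Society B's range.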

Divergent Trajectories by Timeframe

| Period | Society A Outcomes | Society B Outcomes | Divergence Metrics (A vs B) |
|---|---|---|---|
| Year 0-1 | • Universal AI adoption • Subsidized training • Cross-sector productivity gains | • Elite-concentrated adoption • Limited bottom-50% access • Corporate efficiency focus | Adoption Gap: 85% vs 35%; Training Access: 100% vs 15% |
| Year 1-3 | • Work week: 32-35 hrs • Cooperative AI platforms • Guild formation | • Mass displacement • AI rentier class emergence • Local business failures | Employment Impact: +5% vs -15%; New Ventures: +12% vs -8% |
| Year 3-5 | • Decreased inequality • Enhanced civic participation • Local AI innovation | • Intensified stratification • Social instability • "AI populism" movements | Gini Change: -0.05 vs +0.12; Social Cohesion Index: +18% vs -32% |

Policy Implications: Initial institutional conditions determine whether AI becomes an equalizing force or an accelerant of inequality. The divergence window occurs within 12 months, with trajectories becoming structurally locked by year 3.

- Policy leverage points: Universal access provision within months 0-6 prevents elite capture. Augmentation incentives implemented before month 12 shape employer behavior toward workforce enhancement rather than replacement.

- Institutional prerequisites: Strong public services and high social trust enable collective AI benefits. Weak institutions cannot counteract market concentration tendencies, leading to extractive dynamics.

- Economic transformation patterns: Society A demonstrates "inclusive innovation" with cooperatives and guilds. Society B exhibits "extractive consolidation" with rentier dynamics and monopolization.

- Emergent political economy: In Society A, AI tools strengthen participatory democracy. Society B experiences democratic erosion as concentrated economic power translates into political influence, spawning populist backlash movements.
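Claims like "divergence within 12 months" and "locked by year 3" need an operational definition before they can be read off simulation output. One possible definition, sketched below with hypothetical function names and threshold: divergence starts in the first month the gap between two societies' metric (for example, Gini change from baseline) exceeds the threshold, and a trajectory counts as locked in if the gap stays above the threshold in every month after a given point.

```python
def first_divergence_month(series_a, series_b, threshold):
    """First month index where |a - b| exceeds threshold, or None if never."""
    for month, (a, b) in enumerate(zip(series_a, series_b)):
        if abs(a - b) > threshold:
            return month
    return None


def locked_in(series_a, series_b, threshold, from_month):
    """True if the gap exceeds threshold in every month from from_month on."""
    gaps = [abs(a - b) for a, b in zip(series_a, series_b)][from_month:]
    return bool(gaps) and all(g > threshold for g in gaps)


# Purely synthetic inputs: Gini change from baseline over 60 months.
society_a = [-0.001 * m for m in range(60)]
society_b = [0.002 * m for m in range(60)]
print(first_divergence_month(society_a, society_b, threshold=0.02))  # 7
print(locked_in(society_a, society_b, threshold=0.02, from_month=36))  # True
```

The synthetic series are made up and only exercise the functions. In practice the threshold choice drives the answer, so it would be worth reporting results across several thresholds and random seeds.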

I hope these examples give you a more intuitive sense of how simulation could help with policy making.

Next Steps

We are developing agent-based simulations that address the limitations of current AI forecasting outlined in this post by modeling dynamic social responses and stakeholder interactions.

- Benchmarks and Historical Validation: We are creating benchmarks from historical examples, such as the Corrupted Blood incident and nuclear discontinuities, to test whether our simulations can replicate complex social dynamics, including emergent behaviors and non-linear adaptations.

- Prototype Simulation Scale: We will simulate a virtual town of 200-400 interview-grounded agents over several years to explore AI's socioeconomic effects and how they interact with factors such as initial inequality levels and ownership models (e.g., centralized corporate vs. distributed co-ops or public AI labs). Key areas include:

- Labor market disruptions: Job displacement, augmentation, and polarization.

- New economic sectors: Rise of AI-enabled entrepreneurship, such as prompt engineers, AI tutors, and augmented gig work.

- Wage inequality and negotiation: Increased returns to capital and IP owners, emergence of "super individuals" who leverage AI to outperform teams, and evolving employer-employee dynamics.

- Regular Updates on Our Findings:

- Regularly update the EA Forum and broader community through posts, disseminating insights via formal channels (e.g., reports and academic papers) and informal ones (e.g., blogs).

- Organize dialogues/roundtables to reveal policy insights, highlight social complexities, and foster collaborative discussions on mitigation strategies.
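A sketch of how the prototype's scenario space might be enumerated: each combination of baseline inequality and ownership model is run under several random seeds, so that differences between scenarios can be separated from run-to-run noise. The labels, the `ScenarioConfig` class, the 5-year horizon and the three seeds are assumptions for illustration.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical labels for the factors named in the plan.
INEQUALITY_LEVELS = ("low", "high")
OWNERSHIP_MODELS = ("centralized_corporate", "distributed_coops", "public_ai_lab")


@dataclass(frozen=True)
class ScenarioConfig:
    inequality: str
    ownership: str
    n_agents: int
    years: int
    seed: int


def scenario_grid(n_agents: int = 300, years: int = 5, seeds=range(3)) -> list[ScenarioConfig]:
    """One config per (inequality, ownership, seed) combination; each would be
    a separate simulation run, so seeds expose run-to-run variance."""
    if not 200 <= n_agents <= 400:
        # Hypothetical guard matching the planned 200-400 agent town.
        raise ValueError("prototype town is planned at 200-400 agents")
    return [
        ScenarioConfig(inequality, ownership, n_agents, years, seed)
        for inequality, ownership, seed in product(INEQUALITY_LEVELS, OWNERSHIP_MODELS, seeds)
    ]


grid = scenario_grid()
print(len(grid))  # 18
```

`scenario_grid()` returns 18 configurations (2 inequality levels × 3 ownership models × 3 seeds). Freezing the dataclass keeps each run's configuration immutable and hashable, which makes it easy to key results by scenario.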

These simulations aim to generate comprehensive scenarios detailing stakeholder impacts (e.g., benefits to IP owners via value extraction mechanisms, harms to displaced workers through reduced bargaining power) alongside holistic mitigation strategies (e.g., redistributive tax policies if ownership-driven inequality emerges, or upskilling programs to counter polarization).
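As a toy example of evaluating one such mitigation, the sketch below applies a hypothetical flat tax on wealth whose revenue is returned as an equal lump sum to every agent, and measures the Gini coefficient before and after. Because this rule preserves mean wealth and shrinks every pairwise difference by (1 - rate), it scales the Gini coefficient by exactly (1 - rate). A real policy module would also need to model behavioral responses, which this ignores.

```python
def gini(wealth: list[float]) -> float:
    """Gini coefficient of non-negative wealth (sorted-rank formula)."""
    xs = sorted(wealth)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2.0 * weighted / (n * total) - (n + 1) / n


def flat_tax_with_rebate(wealth: list[float], rate: float) -> list[float]:
    """Hypothetical rule: tax all wealth at `rate`, rebate revenue equally."""
    rebate = rate * sum(wealth) / len(wealth)
    return [(1.0 - rate) * w + rebate for w in wealth]


before = [10.0, 10.0, 10.0, 70.0]
after = flat_tax_with_rebate(before, rate=0.5)
print(round(gini(before), 3), round(gini(after), 3))  # 0.45 0.225
```

For wealth [10, 10, 10, 70] and a 50% rate, the Gini coefficient halves from 0.45 to 0.225 while total wealth is unchanged.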

If our approach to agent-based simulation of complex systems resonates with you, please connect with us. Please also let us know if you think AI simulation cannot meaningfully capture complex systems.

Your feedback is highly appreciated and will enable iterative improvements toward more robust, actionable tools for equitable AI integration.

Contact:

Jonas Kgomo: jonaskgmoo@gmail.com

Echo Huang: echohuang42@gmail.com

Know an organisation or researcher who cares about AI forecasting and scenario planning? Please connect us.
