TLDR: This 6 million dollar Technical Support Unit grant doesn’t seem to fit GiveWell’s ethos and mission, and I don’t think the grant has high expected value.

Disclaimer: Despite my concerns I still think this grant is likely better than 80% of Global Health grants out there. GiveWell are my favourite donor, and given how much thought, research, and passion goes into every grant they give, I’m quite likely to be wrong here!

What makes GiveWell Special?

I love to tell people what makes GiveWell special. I giddily share how they rigorously select the most cost-effective charities with the best evidence-base. GiveWell charities almost certainly save lives at low cost – you can bank on it. There’s almost no other org in the world where you can be pretty sure every few thousand dollars donated be savin’ dem lives.

So GiveWell gives you certainty – at least as much as possible.

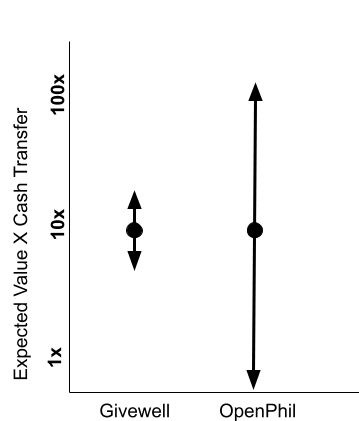

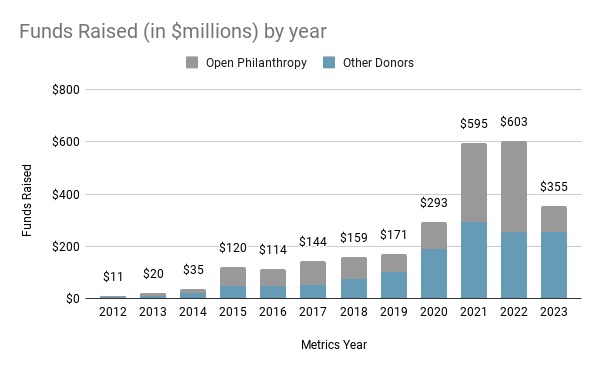

However this grant supports a high-risk intervention with a poor evidence base. There are decent arguments for moonshot grants which try and shift the needle high up in a health system, but this “meta-level”, “weak evidence”, “hits-based” approach feels more Open-Phil than GiveWell[1]. If a friend asks me to justify the last 10 grants GiveWell made based on their mission and process, I’ll grin and gladly explain. I couldn’t explain this one.

Although I prefer GiveWell’s “nearly sure” approach[2], it could be healthy to have two organisations with different roles in the EA global health ecosystem: GiveWell backing sure things, and OpenPhil making bets.

GiveWell vs. OpenPhil Funding Approach

What is the grant?

The grant is a joint venture with OpenPhil[3] which gives 6 million dollars to two generalist “BINGOs”[4] (CHAI and PATH), to provide technical support to low-income African countries. This might help them shift their health budgets from less effective causes to more effective causes, and find efficient ways to cut costs without losing impact in these leaner times. Teams of 3-5 local experts will be embedded in government ministries for 12-18 months.

In rough order of importance, I list 6 reasons why I’m dubious whether this grant fits GiveWell’s mission, and also why I’m dubious whether the grant makes sense.

1. The TSU Evidence Base is Minimal

Before this grant, I wouldn’t have thought “technical support units” which support government health ministries would have been on GiveWell’s radar, because the evidence base is scanty at best. Technical support to health ministries has been a go-to development approach for a long time. Since the 1950s, richer governments and NGOs have spent billions on technical support for low-income governments. One review described a whopping 13 different TSU models, including those of CHAI, Save the Children and the World Bank. All try to improve health policy and fund allocation by embedding TSUs within government ministries, yet we have little evidence that they make a difference. There are a handful of before-and-after studies with positive results which focus on specific healthcare priorities such as malnutrition, data management, and maternal and child health. This meagre data, however, is very low quality, especially in proportion to the large amounts of money spent.

A 2001 review stated “In general, there is scant evidence on the effectiveness of TA (Technical Assistance) and how it may contribute to improve health outcomes”. One meta-analysis of different technical support units showed very little peer-reviewed research on the practice in low-income countries. They correctly stated “Considering its important role in global health, more rigorous evaluations of TA efforts should be given high priority.” Despite perhaps billions of dollars spent, and thousands of technical support programs, there isn’t clear evidence that TSUs work, nor a wealth of stories and reports which indicate that TSUs might be a cost-effective way of improving healthcare delivery.

Backing a low-evidence approach with 6 million dollars doesn’t seem to fit GiveWell’s evidence-based funding model.

2. Dubious Theory of Change

From the GiveWell podcast on the topic: “it is in the region of a few million dollars of government expenditure would have to be shifted to be 20, 30% more cost effective, 20, 30% cheaper for this to look like a good use of funding.” And so it became apparent quite quickly that there's a lot of upside here and that this does seem like a reasonably sensible use of GiveWell funding.

When it comes to advocacy/technical assistance at the highest level, there is always an enticing carrot of huge potential cost-effectiveness. If we can shift policy, or change fund allocations even a tiny bit, then almost any grant will probably be worth it. Of course if we can move a few tens of millions of dollars from bad uses to good uses, the expected value looks great.

The real question is whether changing anything at all is realistic. As with any attempt to influence power at the highest level, tractability is everything.

CE charity “The Center for Effective Aid Policy” shut down last year after judging that moving developed countries’ aid budgets to more effective areas was more difficult than expected. An organisation isn’t a good bet just because making a tiny shift in government spending would have huge impact. We need specific and clear reasons why huge government machineries with low budgets and too many priorities might concretely change what they are doing.

I wasn’t convinced by the podcast examples such as data consolidation and dashboards. I don’t believe most government officials in low-income health ministries care much about evidence and data – certainly not in Uganda where I live. Enormous political negotiation and complex machinery are needed – data and evidence rarely come into it. I might be more convinced by a TSU theory of change that leaned into political wrangling or building elite coalitions for change, rather than generating and presenting better data.

Comments like this seem naive: “it could be the case that the TSUs help the ministry to develop a tool to track real time expenditure across programs and enable them to better shift resources to reduce the underspend of existing budgets.”

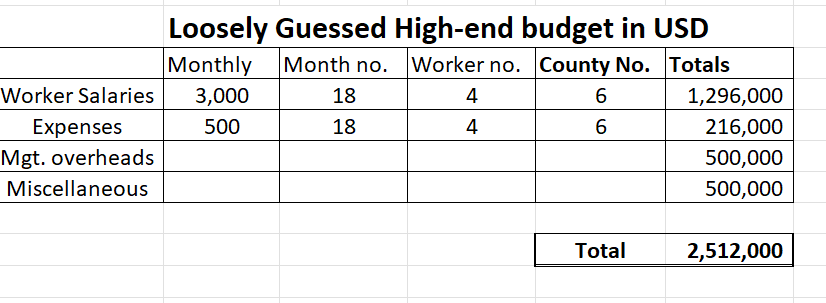

3. High Project Budget

I don’t understand how 24 people doing 8 months of work here cost 6 million dollars. Even with a very high annual salary of $36,000 in these low income countries (5x what our OneDay Health management team gets)[5], I can’t explain why this program could cost more than say 2.5 million dollars.

I could well be missing something important which explains the higher budget, but here’s my quick high-end budget BOTEC, based on some of the highest numbers I can imagine.
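As a rough cross-check on the salary arithmetic only (a sketch, not the BOTEC itself), the snippet below uses the figures quoted in this post: 24 staff, a $36,000 annual salary, the 6 million dollar grant and the 2.5 million dollar ceiling. It runs both the 8 months stated above and the 18-month upper end of the placement length from the grant description. The function names are my own, and it deliberately makes no assumption about what non-salary costs should be; it only reports how many times the salary bill each budget figure represents.

```python
def salary_cost(staff: int, annual_salary: float, months: float) -> float:
    """Total salary bill for `staff` people paid `annual_salary` for `months` months."""
    return staff * annual_salary * months / 12


def implied_multiplier(budget: float, staff: int, annual_salary: float, months: float) -> float:
    """How many times the salary bill a given budget represents."""
    return budget / salary_cost(staff, annual_salary, months)


# Figures taken from the post.
GRANT = 6_000_000        # total grant in USD
CEILING = 2_500_000      # the most the author can account for, in USD
STAFF = 24
ANNUAL_SALARY = 36_000   # deliberately high-end salary, in USD

# 8 months is the duration stated in this section;
# 18 months is the upper end of the 12-18 month placements described earlier.
for months in (8, 18):
    salaries = salary_cost(STAFF, ANNUAL_SALARY, months)
    grant_x = implied_multiplier(GRANT, STAFF, ANNUAL_SALARY, months)
    ceiling_x = implied_multiplier(CEILING, STAFF, ANNUAL_SALARY, months)
    print(f"{months} months: salaries ${salaries:,.0f}; "
          f"grant is {grant_x:.2f}x salaries; ceiling is {ceiling_x:.2f}x salaries")
```

Even at 18 months on a very high salary, the salary bill comes to roughly 1.3 million dollars, so the full grant is about 4.6 times salaries while a 2.5 million dollar budget would already be nearly double them. Whatever explains the gap would have to sit in non-salary costs.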

4. Missed RCT Opportunity

Edited to: “Missed rigorous study opportunity” instead? I was wrong about an RCT being possible here; thanks for the corrections below from a couple of commenters.

I was disappointed that the possibility of an RCT or other rigorous prospective analysis wasn’t considered in such a poorly researched area. Why not randomize the 6 proposed countries and provide TSUs to 3 of them? A good, productive study here could be cheap to follow up, as you could monitor outcomes through routinely collected government data. Although you might only be able to capture perhaps 30-50% (rough guess) of the benefit of the program through this, I think it would be possible.

Possible outcome measures (based on podcast)

1. Change in allocation of Grants/Government budgets to poorer districts from richer ones

2. Change in allocation of Donor money to cost-effective programming

3. Changes in supply chain management over the study period

5. No CEA and grant writeup

From GiveWell: “All of the research supporting our funding recommendations is free and publicly available.” Although I understand there is perceived urgency here so this might be an exception, I would have appreciated at least some of GiveWell’s normal grant process here, with at least an attempted CEA and short write-up. Of course through the podcast and forum post, they have still been more transparent than most orgs in their position.

6. Why is this considered urgent post USAID cuts?

From GiveWell's podcast “But we know there are lots of other organizations, as I mentioned before, who are providing technical support like this to governments. We didn't do a really comprehensive investigation into other organizations as part of this work because of the need to move quickly……

“I think what the TSUs hopefully will do is help intensify that support at a time when it's really needed.”

This seems like the kind of intervention where it wouldn’t make a big difference to wait another 3-6 months to do deeper analysis and publish a CEA. This doesn’t involve kids whose malnutrition treatment has been cut, or an important RCT which will be stopped with millions of dollars of work wasted unless emergency funding comes in.

There’s even a reasonable argument that post USAID cuts, government budgets might be harder to shift, because the remaining money will support fixed costs that can’t be shifted, like highly paid ministry staff, medications, and primary through tertiary services. Technical assistance might be more beneficial when more money is sloshing around. See the “decreasing budgets limit maneuvrability” by Matthias.

Again I’d like to stress that this is an isolated criticism based on limited information, and there's a high chance I'm way off the mark. I have huge respect for the ability of both GiveWell and OpenPhil’s staff to make good decisions in allocating funds. I did share this with GiveWell, but only gave them 24 hours to comment, which isn't really enough time. They responded extremely graciously and I hope they will find the time to comment.

Super keen to hear your thoughts and criticisms of the criticism as well ;).

When I read that description I infer "make the best decision we can under uncertainty", not "only make decisions with a decent standard of evidence or to gather more evidence". It's a reasonable position to think that the TSU grant is a bad idea or that it would be unreasonable to expect it to be a good idea without further evidence, but I feel like GiveWell are pretty clear that they're fine with making high risk grants, and in this case they seem to think these TSUs will be high expected value.