Lorenzo Buonanno🔸

Bio

Software Developer at Giving What We Can, trying to make giving significantly and effectively a social norm.

Posts 12

Comments 677

Topic contributions 7

my first post on LessWrong was scrapped because they identified it as AI written

I'm surprised to read this. Can you check your post on https://www.pangram.com/?

https://benefficienza.it/ (spelled with two Fs) has a lot of material on effective giving in Italian, in case it's useful, although nothing on Catholicism as far as I'm aware.

Some EA articles were translated here: https://altruismoefficace.it/blog

And the EA handbook was translated a few years ago here: https://forum.effectivealtruism.org/users/ea-italy (I don't know if it has changed much since then)

There was also this article in the major Italian Catholic newspaper after the FTX scandals, which was not entirely negative, but still mostly skeptical.

But I’m not sure how fruitful it is for all of us to have a vibes-based conversation about the possible merits of this campaign.

I think promoting good norms and making them more "common knowledge" is one of the few ways that EA Forum conversations can maybe be useful.

As in, I think it's good that "everyone knows that everyone knows" that we should have a strong bias to be collaborative towards other projects with similar goals, and these threads can help a bit with that.

(To be clear, my sense is that FarmKind is already well aware of this and that this is a collaborative campaign, especially after reading their comment. I mean this for the EA Forum community of readers as a whole.)

Edit: new comment from FarmKind

Thank you for sharing this. I'm personally very surprised to see this campaign from FarmKind after reading "With friends like these" from Lewis Bollard and "professionalization has happened, differences have been put aside to focus on higher goals and the drama overall has gone down a lot" from Joey Savoie.

I would have expected the ideal way to promote donations to animal welfare charities to be less antagonistic towards vegan-adjacent people.

@Vasco Grilo🔸 given that your name is on the https://www.forgetveganuary.com/ campaign and you're active on this forum, I'm curious what you think about this. Were you informed?

Edit: they will remove that section from the page

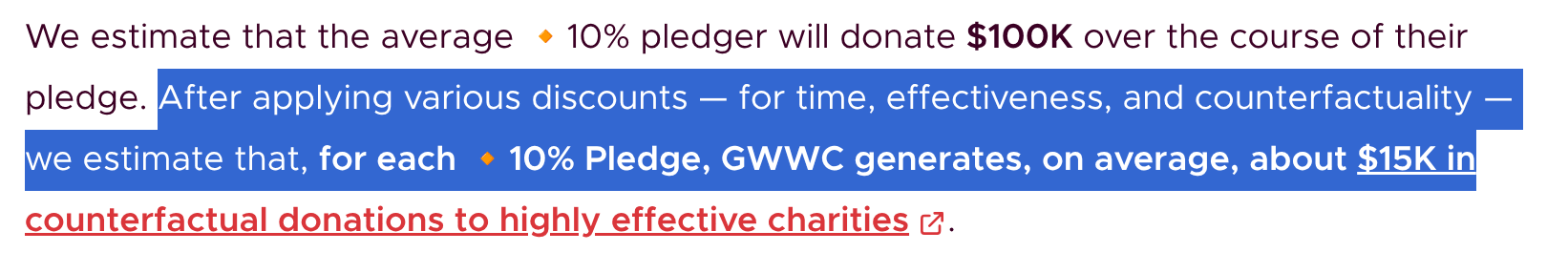

My understanding is that $47k is the estimated time-discounted average lifetime high-impact donations from a 10% pledger. It does not discount for the fact that many pledgers (especially the largest donors, who give much more than 10%) would have donated significantly with or without a 10% pledge, so only a fraction of that is counterfactually due to the existence of the 10% pledge and pledge advocacy (whether by GWWC or by others).

Giving What We Can conservatively values the lifetime value of a 🔸 10% Pledge at $100K USD (inflation adjusted to 2024)

Quick note that the number on the GWWC website is about one order of magnitude lower.

But of course these are averages, and the people you inspire could give significantly more or less, or significantly more or less counterfactually.

will downvote myself for spreading false info, and wasting people's time here.

That seems excessive; it was a reasonable question. I would let other readers decide whether it should be upvoted or downvoted.

But I am surprised you didn't Google "Against Malaria Foundation Crypto" or something like that, since it seems faster than asking here.

Thanks for sharing! I'd have guessed they would be using something at least as good as Pangram, but maybe it has too many false negatives for them, or it was rejected for other reasons and the wrong rejection message was shown.

As a former forum moderator I can sympathize with them, not a fun job!