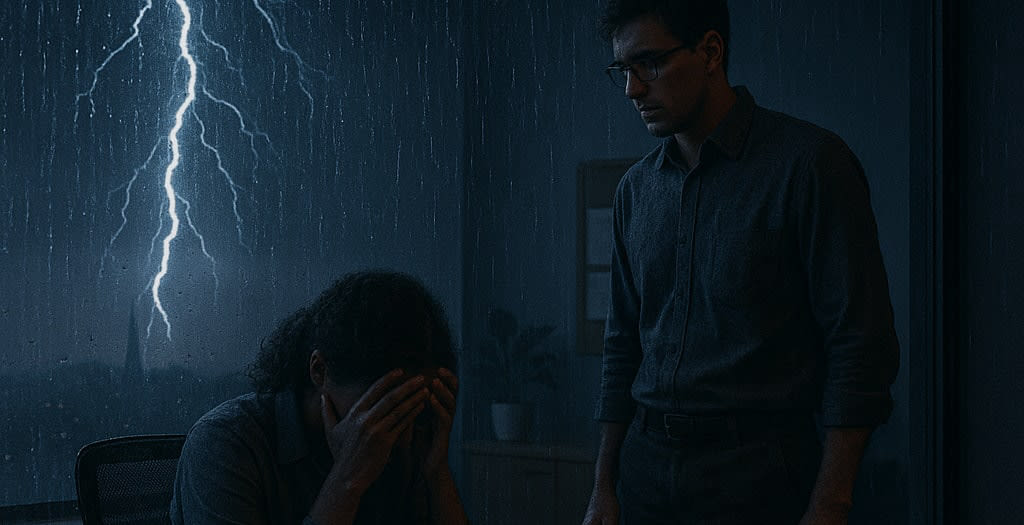

In her office nestled in the corner of constellation, Amina is checking in with her employee Diego about his project – a new AI alignment technique. It’s 8pm and the setting sun is filling Amina’s office with a red glow. Diego is clearly running a sleep deficit, and 60% of Amina's mind is on a paper deadline next week.

1: Hopelessness

Diego: I’m feeling quite hopeless about the whole thing.

Amina: About the project? I think it’s going well, no?

Diego: No, I mean the whole thing. AI safety. It’s so hard for me to imagine the work I’m doing actually moving the p(doom) needle.

Amina: This happens to a lot of people in the bay. There are too many doomers around here! Personally I think the default outcome is that we won’t get paperclipped, we’re just addressing a tail risk.

Diego: That’s not really what I mean. It’s not about how likely doom is… I’m saying that I’m hopeless about some alignment technique actually leading to safe AGI. There are just so many forces fighting against us. The races, the e/accs, the OpenAI shareholders…

Amina: Oh man, sounds like you’re spending too much time on twitter. Maybe it’ll help for us to explicitly go through the path-to-impact of this work again?

Diego: Yea that would be helpful! Remind me what the point of all this is again.

Amina: So, first, we show that this alignment method works.

Diego: I still have this voice in the back of my head that alignment is impossible, but for the sake of argument let’s assume it works. Go on.

Amina: Maybe it’s impossible. But the stakes are too high, we have to try.

Diego: One of my housemates has that tattooed on her lower back.

Amina’s brain short-circuits for a moment, before she shakes her head and brings herself back into the room.

Amina: R-right… Anyway, if we can show that this alignment method works, Anthropic might use it in their next model. We have enough connections with them that we can make sure they know about it. If that next model happens to be one who can, say, make a million on the stock exchange or run a company, then we will have made it less likely that some agent based on that model is going to, like, kill everyone.

Diego: But what about the other models? Would GPT and Gemini and Grok and DeepSeek and HyperTalk and FizzTask and BloobCode use our alignment technique too?

Amina: Well…

Diego: And what about all the other open-source models that are inevitably going to crop up over the next couple of years?

Amina: Eeeh

Diego: In a world where our technique is needed to prevent an AI from killing everyone, even if we get our alignment technique into the first, second, or third AGI system, if the fourth doesn’t use our technique, it could kill everyone. Right?

Amina: You know it’s more complicated than that. If there are already a bunch of other more-or-equally powerful aligned agents roaming around, they will stop the first unaligned one from paperclipping everyone.

Diego: Sure, maybe if we align the first ten AGIs, they can all prevent the eleventh AGI from paperclipping everyone.

But can we really align the first handful of AGIs, given the way the world looks now? At first I had the impression that OpenAI cared about safety at least to an extent. But look at them now. They’ve been largely captured by the interests of their investors, and they’re being forced to race other companies and China. They aren’t just a bunch of idealistic nerds who can devote their energy to making AGI safe anymore.

Amina: We always knew race dynamics would become a thing.

Diego: And doing research on alignment doesn’t stop those dynamics.

Amina: Yes, alignment doesn’t solve anything. That’s why we have our friends in AI governance, right? If they can get legislation passed to prevent AI companies from deploying unaligned AGI, we’ll be in a much better position.

Diego: And how do you think that whole thing is going?

Amina: Oh cheer up. Just because SB 1047 failed doesn’t mean we should just give up and start building bunkers.

Diego: But don’t you get the feeling that the political system is just too slow, too stubborn, too fucked to deal with a problem as complex as making sure AI is safe? Don’t you get the feeling that we’re swimming against the tide in trying to regulate AI?

Amina: Sure. Maybe it’s impossible. But the stakes are too high, we have to try.

Diego: Oh, I also have another friend I met at burning man who has that tattooed on his chest.

Amina: You have a strange taste in friends…

Diego: Anyway, it all feels pretty impossible to me. And that’s even before we start thinking about China. And even if all AGI models end up aligned, can we really allow that much power to be in everybody’s hands? Are humans aligned?

Amina: That’s going to happen no matter what Diego. Technology will continue to improve, the power of the individual will continue to grow.

Diego gets up from his seat and stares out the window at the view over to San Francisco.

Diego: You know, when I first heard about the alignment problem I was so hyped. I’d been looking for some way to improve the world. But the world seemed so confusing, it was so hard for me to know what to do. But the argument for AI safety is so powerful, and the alignment problem is such a clean, clear technical problem to solve. It took me back to my undergrad days, when I could forget the complexity of the world and just sit and work through those maths problems. All I needed to know was there on the page, all the variables and all the equations. There wasn’t this anxious feeling of mystery, that there might be something else important to the problem that hasn’t been explicitly stated.

Amina: You need to state a problem clearly to have any chance of solving it. Decoupling a problem from its context is what has allowed science and engineering to make such huge progress. You can’t split the atom, go to the moon, or build the internet while you’re still thinking about the societal context of the atoms or rockets or wires.

Diego: Yea, I guess this is what originally attracted me to effective altruism. Approaching altruism like a science. Splitting altruism up into a list of problems and working out which are most important. We can’t fix the whole world, but we can work out what’s most important to fix…

But… is altruism really amenable to decoupling? Is it in the same class as building rockets and splitting atoms? I haven’t seen any principled argument about why we should expect this.

Amina: But this decouply engineering mindset has helped build a number of highly effective charities, like LEEP!

Diego: Ok, so it works for getting lead out of kid’s lungs, but does it work for, like, making singularity go well? It seems unclear to me.

I agree that the arguments for AI risk are strong. Maybe we’re all going to get paperclipped. But can we solve the problem by zooming in on AI development, or do we need to consider the bigger picture, the context?

Amina: AI development is what might kill us right, so it’s what we should be focusing on.

Diego: Well, is it the AI that’ll kill us, or the user, or the engineers, or the company, or the shareholders who pressured a faster release? The race dynamics themselves, or Moloch? There’s a long causal chain, and a number of places one could intervene to stop that chain.

Amina: But aligning the first AGI, with technical work and legislation, is where we can actually do something. We can’t fix the whole system. You can’t slay Moloch, Diego.

Diego raised his eyebrows and locked eyes with Amina

Amina: You can’t slay Moloch, Diego!

Diego: I thought you bay area people are meant to be ambitious!

Amina rolls her eyes

Diego: Perhaps we can come up with some alignment techniques that can align the first AGI. Maybe we can pass some laws that force companies to use these techniques, so that all AGIs are aligned and we don’t get paperclipped. But then technology is going to be moving very fast, and there are a whole bunch of other things that can go wrong.

Amina: What do you mean?

Diego: There will be other powerful technologies that bring new x-risks. When anyone can engineer a pandemic, will we have to intervene on that too? When anyone can detonate a nuclear bomb, will we be able to prevent everyone from doing it? I suppose we could avoid this with a world government who surveils everyone to prevent them detonating, but I don’t think I want to live in that world either.

Amina: We’ll just have to cross those bridges when we come to them.

Diego: Will we be able to keep up this whack-a-mole indefinitely? Can EA just keep solving these problems as they come up? If you roll the dice over and over again, eventually you’re going to lose, and we’re all going to die.

Amina: Oh, I guess you’re alluding to the vulnerable world hypothesis. Well, whether or not it’s true, this whack-a-mole is all we can do.

Diego: Is it though? Just continue treating the symptoms? Or can we try to treat the underlying cause. Wouldn’t that be more effective?

Amina: By slaying Moloch? Sure, it would be effective, if it’s possible.

Diego: I’m not interested in slaying Moloch.

Amina: Huh? Then what are you talking about?

Diego: I don’t think we can slay Moloch. But Moloch isn’t the underlying cause, so we don’t have to.

Amina: You’ve lost me now. What is this mysterious deeper cause underlying everything?

Diego: I’m glad you asked.

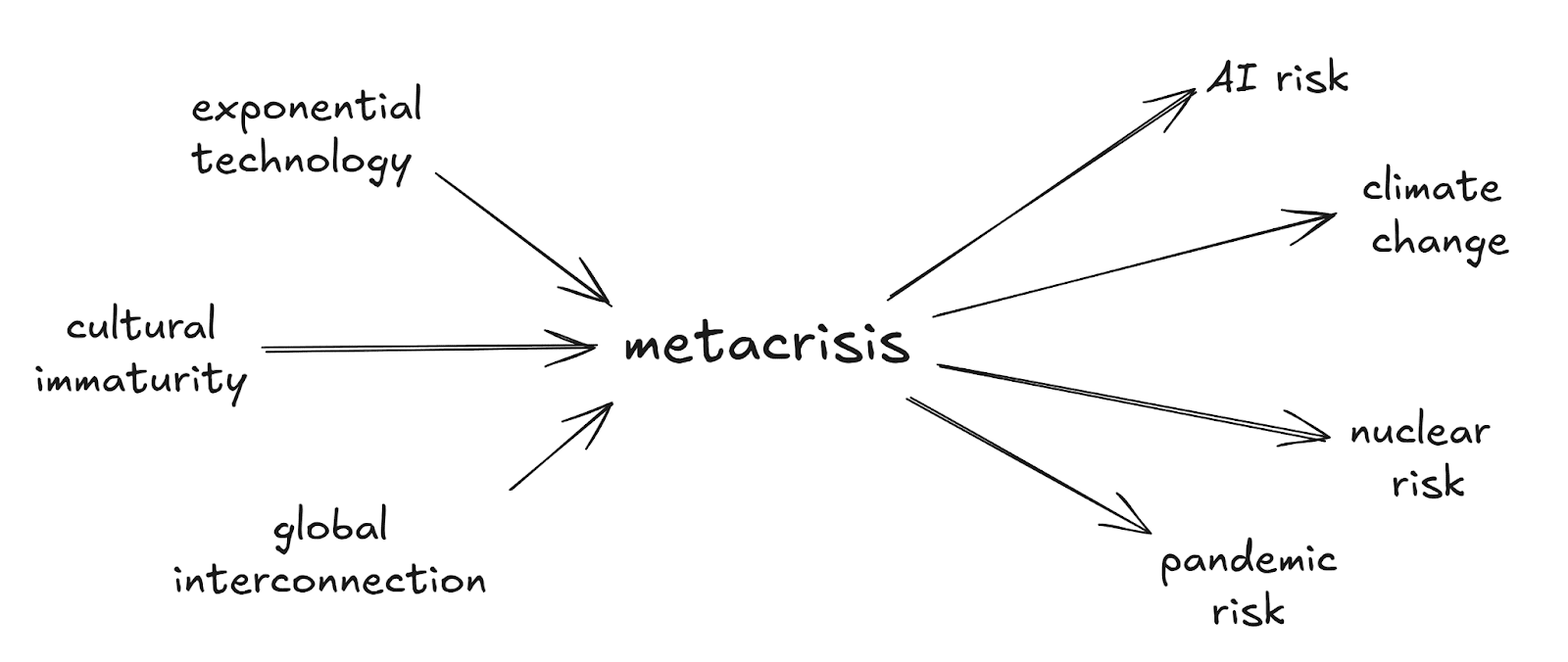

2: The metacrisis movement

Diego strokes his chin for a while and formulates a way to explain his recently updated worldview, gained from his new group of weird, non-EA friends.

Diego: I have a question for you. Are EAs and rationalists the only people who care about x-risk?

Amina: Uuh… maybe? We’re at least the ones who’ve thought most about it.

Diego: Unclear! There’s actually a different cluster of nerds, outside of EA, who care deeply about x-risk. I think of them a little bit like a parallel movement to EA, but they have quite different ways of making sense of the world, built upon a different language.

Amina: Do they have a name?

Diego: Well, not really. Their community isn’t as centralized as EA. Or maybe it’s actually a number of overlapping communities. I’ve heard them called the liminal, metamodern, game B, or integral movement. But I usually call them the metacrisis movement. There’s a handful of small orgs devoted to spreading the movement and making progress on what they see as the world’s most important problems - life itself, perspectiva, civilisation research institute, and others. I think of them as more pre-paradigmatic than EA, there’s less actually doing stuff and more clarifying of problems.

Amina searches some of the buzzwords Diego threw at her on her computer and scans a couple of pages. She sniggers a little, then sighs in frustration.

Amina: This all feels impenetrable to me. They use a bunch of jargon and a lot of it sounds floaty, vague or mental.

Diego: Yea I get that too. It reminds me of how I felt the first time I encountered EA.

Amina: Point taken. So I guess you can help me out in understanding what these weirdos are banging on about. How is – what’s the wording you used – how they make sense of the world – different to how EAs make sense of the world?

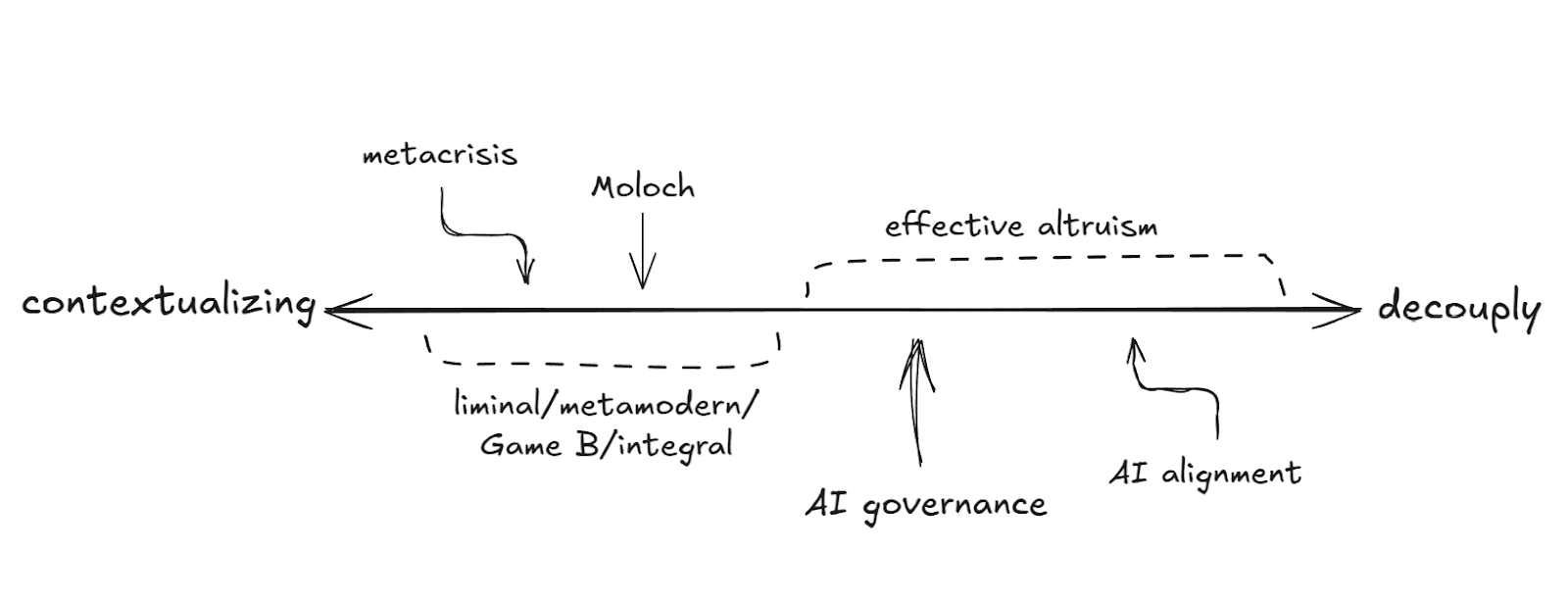

Diego: I see the main way the metacrisis folk are different is that they’re less decouply than EAs. While EAs like to decouple the problem they’re thinking about from its context, metacrisis people are more prone to emphasise the context the problem lives in – be that institutional, societal, cultural or whatever. They’re systems thinkers, they pay more attention to interconnectedness and complexity.

Amina: I’m feeling a bit tense about this going in some political direction. Societal context… are they just leftists? Is the metacrisis just “evil capitalism”?

Diego: Try to suspend judgement until you’ve engaged with the ideas!

Amina: Fair enough. Go on.

Diego: The metacrisis crowd take systems change seriously as a way to improve the world, unlike much of EA. EAs are marginal thinkers, metacrisis folk are systems thinkers.

Amina: Systems change… I can get behind some kinds of systems change, like getting laws passed. But if you’re talking about slaying Moloch, that’s more like changing the structure of governments, or the structure of the world. Deep systems change. That smells intractable to me.

Diego: Is it? Are there principled reasons to think that?

Amina: I did a fermi estimate some years ago…

Diego: And where did the numbers you plugged into that fermi estimate come from?

Amina looks sheepishly at her shoes

Diego: Did they come out of your ass?

Amina: …they came out of my ass. But I have a strong intuition against deep systems change!

Diego: That’s fair enough! EA tends to attract people who have the intuition that deep systems change is intractable. I can’t prove that intuition wrong. But the metacrisis people don’t share that intuition.

Amina: Hmm…

Diego: Look, I don’t want to talk you out of your EA ways. I obviously still have loads of respect for EA and it’s doing a lot right. All I’m asking is for you to entertain a different perspective.

Amina: Ok, that’s a reasonable request. Please continue. What is this metacrisis thing?

Diego: Ok, I’ll tell you. But to explain, let’s go back to the start of our conversation. If AI doom happens, what will cause it?

Amina sighs again and rings her hands in frustration.

Amina: It would be caused by an AI company deploying an unaligned AGI.

Diego: And what causes that?

Amina: Well, lots of things.

Diego: Let’s imagine it’s OpenAI, for example. What would cause OpenAI to deploy an unaligned AGI?

Amina: Race dynamics, I guess.

Diego: And what causes race dynamics?

Amina: I guess that’s a collective action problem. The competition to be the first to get to AGI means all the players need to sacrifice all other values, like not contributing to x-risk.

Diego: Right, Moloch. That’s part of the story for sure. But what causes Moloch?

Amina: Eh… nothing causes Moloch. It’s just game theory. It’s just human nature…

Diego: Is it though? The metacrisis people say that that is part of the problem - this view that rivalry is just human nature, that zero-sum games are the only possible games to play. Those beliefs help keep Moloch alive.

But anyway, Moloch isn’t the whole story. Moloch doesn’t explain why all these different parties want AGI so damn much.

Amina: …because it’s the ultimate technology right? Because it’ll bring whoever controls it almost infinite wealth, and accelerate technology leading to material wealth for all?

Deigo: That’s another part of the problem.

Amina: Huh?

Diego: This deification of technology, this assumption that better technology is always good. And this emphasis on growing material wealth, at the expense of everything else.

Amina: I guess the e/accs would say that only technologies that benefit us will be developed, because of the rational market.

Diego: There’s problem number three. A belief that the market will do what’s right, because humans are rational agents.

Amina: Well, I mean, it’s approximately true, right–

Diego: But not true all the time. Part of the problem is that faith in the market is a background assumption that’s rarely questioned.

Amina: Rarely questioned?

Diego: Ok, it's questioned by some groups. But does it get questioned where it matters, in silicon valley, or in the US government? Not really for the former and not at all for the latter.

Amina: Ok. So I’m hearing that there’s just a whole mess of things causing AI risk. Beliefs that human nature is fundamentally rivalrous, beliefs that technology and material wealth is always good, and belief that markets will solve everything. So the problem is everybody’s beliefs?

Diego: Kindof-but it’s deeper than beliefs. These are more like background assumptions that colour everyone’s perceptions. At least with explicitly stated beliefs we can examine their validity, but this is something deeper.

Amina: Right, so like not explicit beliefs but implicit ways we make sense of the world, or something like that?

Diego: Yea. I’d say the problem is the cultural paradigm we’re in. The set of implicit assumptions, the language, the shared symbols, society’s operating system.

Amina: Does this cultural paradigm have a name? I’ve not done my homework on cultural paradigms…

Diego: Yes, the cultural paradigm of today, basically, is modernity. We’ve been in modernity ever since the enlightenment. The enlightenment created a new emphasis on reason, individualism, scientific progress, and material wealth.

Amina: That all sounds pretty good to me. I guess these metacrisis dweebs think science, technology and the economy are the root of all evil, and that we should go back to being hunter-gatherers?

Diego: No, they don’t think that. They’re very aware that modernity has given us extraordinary growth, innovation and prosperity. Our current cultural paradigm, powered by science and economic growth, has globally doubled life expectancy, halved child mortality, and cut extreme poverty by an order of magnitude. Nobody can deny that these are all great things.

Amina: Ok, I wasn't expecting that. So what’s the problem with modernity? Why does the culture need to change?

Diego: The problem is that we’re racing towards fucking AI doom Amina!

Amina: Oh yea, that thing.

Diego: Modernity isn’t perfect. It’s given us loads of nice things, but at invisible costs.

All that nice prosperity has negative externalities that our culture ignores: climate change, ecological collapse, various mental health crises, and existential risk.

We need to move to the next cultural paradigm.

Amina: Wait, isn’t the next cultural paradigm postmodernity? I’m not into that. The postmodernists seem to think that there is no objective reality. They reject any idea of human progress. If the world goes postmodern, we’d all quit are jobs and just get high. That’s not going to solve anything.

Diego: Yea, I think postmodernism is an overcorrection to the problems of modernism. We need something that both sees the value in technological and material progress, but isn’t so manically attached to that progress that it’s willing to destroy the world to pursue it.

Amina: And what does that look like?

Diego: We don’t really know yet. Well, some people have some ideas. I can tell you about them if you want–

Amina: I have a call soon. And you still haven’t told me what the metacrisis is!

Diego: Oh, sure, sorry I keep going down tangents. I’ve teased you enough, let me tell you what the metacrisis is.

3: The root cause of existential risk

Diego leaps out of his chair and draws a causal diagram on a whiteboard.

Diego: The metacrisis is the root cause of anthropogenic existential risk. It’s the fact that as technology becomes more powerful, and the world becomes more interconnected, it’s becoming easier and easier for us to destroy ourselves. But our culture, the implicit assumptions, symbols, sense-making tools and values of society, is not mature enough to steward this new-found power.

Amina: I’ve heard people say things like this before. But it’s very abstract…

Diego: Yea, it’s the most zoomed-out, big picture view of x-risk. It’s the reason we live in the hinge of history. We have the power to destroy ourselves, and our society might not be mature enough to steward that power. We can solve AI alignment, we can pass laws, we can prevent a nuclear war or the next pandemic. But technology is going to continue progressing exponentially, and if we continue playing the game we’re currently playing, we’ll find new ways to destroy ourselves.

Amina: But how do you know that we’ll always find a new way to destroy ourselves?

Diego: Because so far, all civilisations destroy themselves eventually. Human history is basically a story of civilisations forming, being stable for a short time, and collapsing. The Babylonians, the Romans, the Mongols, the Ottomans. They all fell. But when they fell it wasn’t the end of humanity, because they were just local collapses. Today, we’ve formed a global, highly interconnected and technologically advanced civilisation. In expectation, this one will fall too. And when this one falls, the whole world falls, and the fall could be the end of humanity.

Thunder claps and torrential rain starts to pour outside the office

Amina: Fucking hell. And people call us doomers. You guys need to cheer up.

Diego: We also believe we can fix it though!

Amina: Right. How? We can’t stop technological progress.

Diego: So you have to help catalyze the next cultural paradigm.

Amina: And how the hell do you do that?

Diego: Well, it’s not clear how yet. The metacrisis movement is in a pre-paradigmatic stage. They’re still trying to clarify the problem, get really clear on the shape of the metacrisis. Then it could become clear what we need to do.

Amina: I sense loads of ifs there. If a promising, talented person devoted their career to clarifying the metacrisis, that would feel like-

Diego: Like a big bet?

Amina: Yes.

Diego: You don’t like taking bets?

Amina: Fine, yes, god damn it, I love taking bets. Who am I kidding?

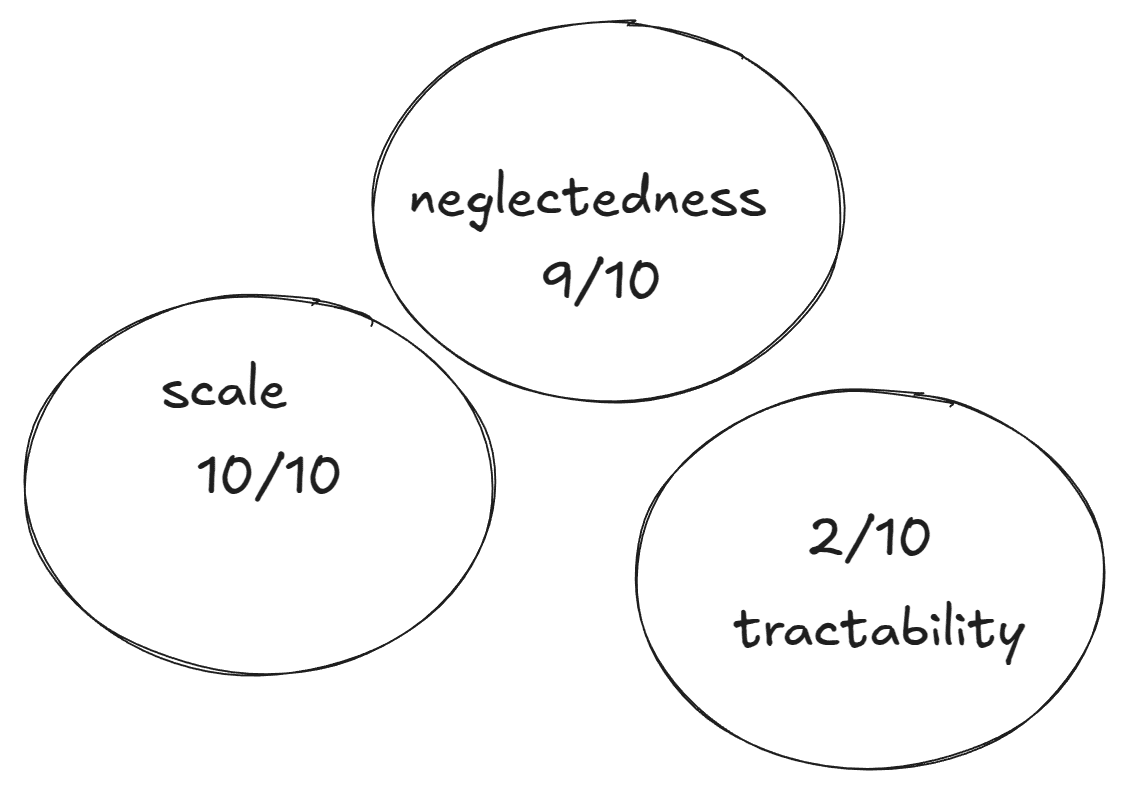

Diego: How about we throw the scale/neglectedness/tractability framework at this?

Amina: Yea let’s do that.

Diego draws three circles on the whiteboard, with “scale”, “neglectedness” and “tractability” in each circle. Diego points at the “scale” circle and stares intensely at Amina

Amina: Yes fine, the scale is big.

Diego: Bigger than any other problem EAs think about, arguably! Let’s give it 10/10 scale.

Amina: Fine, it scores well on scale. And for neglectedness…

Diego: Also scores well. Nobody’s ever even heard of the damn thing.

Amina: But you said there are these orgs that are working on it right?

Diego: They’re tiny. I haven’t run the numbers but I feel kinda confident that there’s 10x less people explicitly working on the metacrisis than AI alignment, for example.

Amina: Seems like a complicated claim, but fine, I’ll trust that you’re roughly right.

Diego: Let’s give it 9/10.

Amina: Fine, scores well on neglectedness. But tractability!

Diego: Yea, tractability it scores poorly on. Do you think it scores better or worse than wild animal suffering?

Amina: Oh, eh... Not sure actually.

Diego: I’m not sure either. But it’s more tractable than you might think at first glance. I don’t know if you’ve noticed, but there’s a big appetite for change in the air, in the west at least. The world might be at a tipping point, and at tipping points, small groups can have big effects by nudging things in the right direction.

Amina remained silently skeptical.

Diego: Let’s score it 2/10 on tractability.

Diego: I think if an intervention that “solves the metacrisis”, in other words, makes the world marginally less metacrisis-y, is possible, it would be a very effective intervention. Instead of having to repeatedly go against the tide of the system of incentives we currently live in to deal with x-risks one-by-one like whack-a-mole, we would be going straight to the root cause, solving all the x-risks at once. Solve the disease, not the symptoms.

Amina: That’s great and all Diego. I see where you’re coming from. But…

Amina grabs Diego by the shoulders and shakes him

Amina: WE DON’T HAVE TIME TO FIX SOCIETY, DIEGO! Didn’t you read AI 2027? AGI is coming in the next couple of years! And after AGI comes, everything will change. It’s all well-and-good treating the cause rather than the symptoms, but if someone comes into the hospital with a heart attack, do you put them on a low-fat diet, or do you just give them the fucking defibrillator?

Diego: Yea I get that. But I have three responses.

Amina: Ooo look at you. Three whole responses.

Diego: Yes, three responses. Firstly, I put a chunky credence on AGI taking way longer to come than all you bay area nerds think, so it makes sense for some people to be preparing for that scenario. Secondly, cultural change can happen faster than you think. It’s actually happening very fast right now. Do you understand skibidi toilet?

Amina: What the hell is skibidi toilet?

Diego: Exactly. And that brings me to my third point. Exponential technology can speed up cultural change. Hell, the internet might have created more culture, memes, and new languages, than the rest of history.

Amina looks skeptically at Diego

Diego: Ok, maybe I’m exaggerating, but you get my point. And AGI is going to keep making things change faster and faster. We could leverage AI to catalyse the kind of cultural evolution we need.

Amina: Ok fine. It doesn’t seem like a total waste of time for some people to be thinking about this.

Diego: That’s nice of you to say.

Amina: But I’m nowhere near convinced that the metacrisis is the most important problem to work on, or that I should quit my job doing AI safety to work on it.

Diego: I’m not trying to convince you to quit your job!

Amina: So what are you trying to convince me of?

Diego: Maybe I’m trying to convince you that the metacrisis frame should be a part of the EA portfolio. Some people in EA should be working on clarifying the metacrisis and understanding how to mitigate it. EAs and rationalists are the ones who take arguments seriously, right? Even if they’re weird or crazy-sounding? Well, I think EAs should take this argument seriously. I think the argument is strong enough that some promising EAs near the start of their careers could have a big impact making progress on the metacrisis.

Amina: Well, I don’t feel convinced of that yet, based on what you’ve said so far.

Diego: Yea, I’ve only really scratched the surface with what I’ve said so far. Maybe we’d need a longer conversation and get deeper into the nitty gritty of the metacrisis frame and how it fits into the EA frame.

Amina: Fair enough. But… There's something else that’s bothering me. It feels kinda strange to think about this metacrisis thing as a cause area in itself, since it lives on a different level of abstraction to the rest of the cause areas.

Diego pauses and thinks for a moment.

Diego: Yea… Maybe it isn’t that useful to view it as a cause area now that I come to think of it. Or, see it as a cause area in the same way as “global priorities research” is a cause area. Like, a meta cause area.

Hmm.. Maybe what I really want to argue is that EAs should be aware of the metacrisis framing and use it as a part of their epistemic toolkit. Maybe this means they can make better and wiser decisions. And maybe this will lead to some cause areas being de-prioritised and others being created.

Amina: And why should we include the metacrisis frame in the EA epistemic toolkit?

Diego: Because EA is decouply, so much so that there’s a chance they’re making a mistake by only prioritising problems that seem promising under a decouply world view. So, for a more robust portfolio, we should diversify how we make sense of the world.

Amina: Worldview diversification… I guess I’m generally pro-that.

Diego: Yea! Foxes are better forecasters than hedgehogs. Here, let me draw something else on the whiteboard.

Diego: When thinking about some problem, like AI safety, where should we draw the boundaries of the system we study? Should it just be around the walls of the OpenAI offices? Should it be AI development more broadly? Should it include the government, or the economy, or the cultural currents underneath? If you include too much, you might just get confused paralized by the complexity of it all. But if your view is too narrow, you might miss the points of highest leverage and ignore the effects you’re having on the rest of the system.

Amina: Hmm… yea, it doesn’t seem like there’s an obvious answer.

Diego: Exactly. To decouple or not decouple? We don’t know. So we should support people working on every point on this spectrum. The alignment people, the governance people, and the metacrisis people. Hedge hedge hedge.

Cleaner: Neither of you have a clue about anything.

Amina and Diego's heads turn to the open door of Amina’s office, to see the cleaner in the corridor who has been listening to the majority of their conversation.

Cleaner: you EA people and you metacrisis people think you've worked everything out, but you're both stuck in your own echo chambers, insulated from the real world.

I'm going to pre-register my prediction that you are both 99% wrong about everything.

Diego: But we're wrong in different ways right! So if we integrate our perspectives we'll only be 98% wrong?

Amina: That's not how it works-

Cleaner: Your wrongness is correlated.

Diego: Ok, 98.5% wrong then?

Amina closes the door as politely as possible.

Amina: Ok. Maybe the metacrisis is a useful frame. Maybe EAs should think more about it.

Amina stares out the window again. The rain has stopped, the clouds have cleared, and she can see the lights of the skyscrapers across the bay.

Amina: It still just feels so impossible to fix this metacrisis thing.

Diego: Maybe it's impossible. But the stakes are too high, we have to try.

Wanna read more about the metacrisis (and related ideas)?

Thanks to Guillaume Corlouer, Indra S. Gesink, Toby Jolly, Jack Koch, and Gemma Paterson for feedback on drafts.

I liked this comment and thought it raised a bunch of interesting points, thanks for writing it.

> Putting this author aside, it seems like many of the folk who talk about this stuff are merely engaging in self-absorbed obscurantism.

I had a bit of a negative reaction to this comment - it seems a bit uncharitable to me