Empirical evidence suggests that, if AI automates AI research, feedback loops could overcome diminishing returns, significantly accelerating AI progress.

Summary

AI companies are increasingly using AI systems to accelerate AI research and development. These systems assist with tasks like writing code, analyzing research papers, and generating training data. While current systems struggle with longer and less well-defined tasks, future systems may be able to independently handle the entire AI development cycle – from formulating research questions and designing experiments, to implementing, testing, and refining new AI systems.

Some analysts have argued that such systems, which we call AI Systems for AI R&D Automation (ASARA), would represent a critical threshold in AI development. The hypothesis is that ASARA would trigger a runaway feedback loop: ASARA would quickly develop more advanced AI, which would itself develop even more advanced AI, resulting in extremely fast AI progress – an “intelligence explosion.”

Skeptics of an intelligence explosion often focus on hardware limitations – would AI systems be able to build better computer chips fast enough to drive such rapid progress? However, there’s another possibility: AI systems could become dramatically more capable just by finding software improvements that significantly boost performance on existing hardware. This could happen through improvements in neural network architectures, AI training methods, data, scaffolding around AI systems, and so on. We call this scenario a software intelligence explosion (SIE). This type of advancement could be especially rapid, since it wouldn’t be limited by physical manufacturing constraints. Such a rapid advancement could outpace society’s capacity to prepare and adapt.

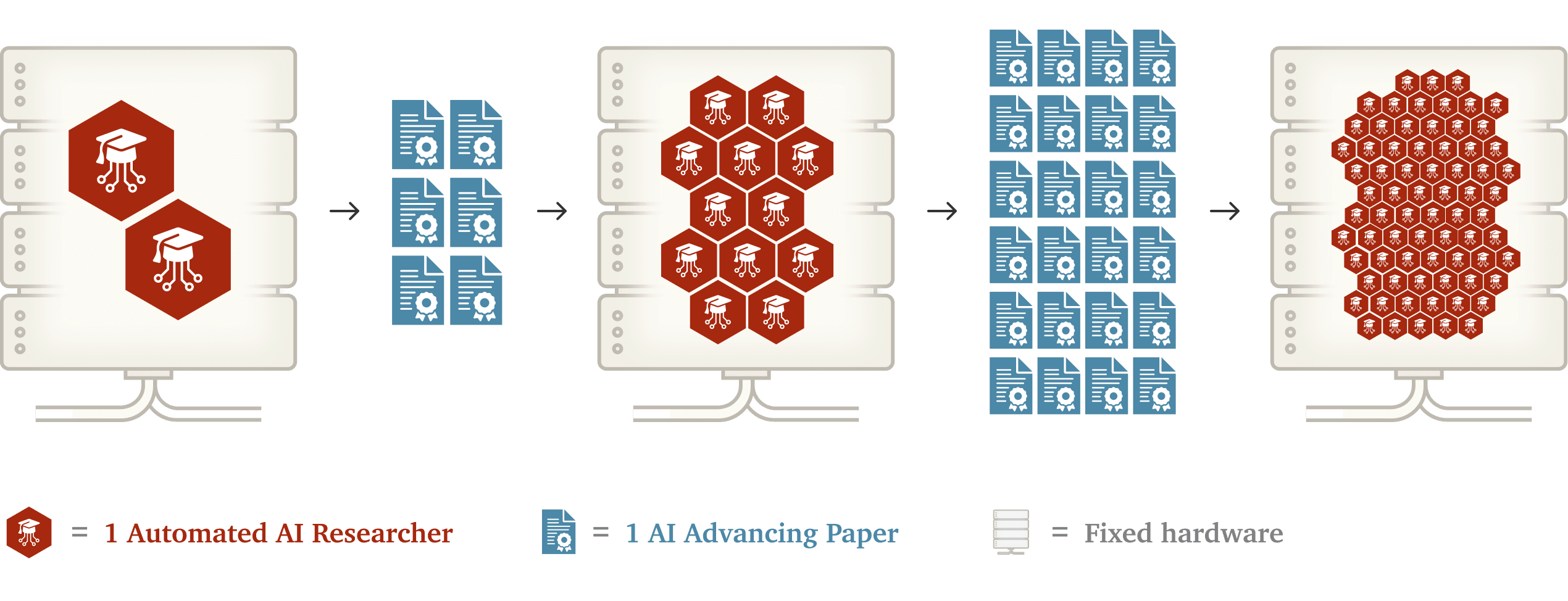

In this report, we examine whether ASARA would lead to an SIE. First, we argue that shortly after ASARA is developed, it will be possible to run orders of magnitude more automated AI researchers than the current number of leading human AI researchers. As a result, the pace of AI progress will be much faster than it is today.

Second, we use a simple economic model of technological progress to analyze whether AI progress would accelerate even further. Our analysis focuses primarily on two countervailing forces. Pushing towards an SIE is the positive feedback loop from increasingly powerful AI systems performing AI R&D. On the other hand, improvements to AI software face diminishing returns from lower hanging fruit being picked first – a force that pushes against an SIE.

To calibrate our model, we turn to empirical data on (a) the rate of recent AI software progress (by drawing on evidence from multiple domains in machine learning and computer science) and (b) the growing research efforts needed to sustain this progress (proxied by the number of human researchers in the field). We find that (a) likely outstrips (b) – i.e., AI software is improving at a rate that likely outpaces the growth rate of research effort needed to achieve these software improvements. In our model, this finding implies that the positive feedback loop of AI improving AI software is powerful enough to overcome diminishing returns to research effort, causing AI progress to accelerate further and resulting in an SIE.
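The comparison between (a) and (b) can be illustrated with a toy growth law. This is a minimal sketch under stated assumptions, not necessarily the exact model used in the report: software level `A` grows as `dA/dt = k * L**lam * A**(1 - beta)`, where `beta` stands for diminishing returns, and after full automation research effort `L` is assumed to scale with `A` itself. The names `r`, `lam`, `beta`, and `k` are illustrative; `r = lam / beta` plays the role of "rate of software progress relative to growth in research effort."

```python
import math

def doubling_times(r, n_doublings=5, beta=1.0, k=1.0):
    """Time taken by each successive doubling of the software level A.

    Toy law of motion (an assumption for illustration only):
        dA/dt = k * L**lam * A**(1 - beta),  with L = A after full automation,
    where beta > 0 captures diminishing returns and lam = r * beta.
    """
    lam = r * beta
    e = lam - beta  # net exponent: growth rate (dA/dt) / A = k * A**e
    times = []
    for n in range(n_doublings):
        a0 = 2.0 ** n  # level at the start of the n-th doubling (A starts at 1)
        if abs(e) < 1e-12:
            # Feedback exactly offsets diminishing returns: plain exponential growth.
            t = math.log(2) / k
        else:
            # Closed form of the integral of dA / (k * A**(1 + e)) from a0 to 2 * a0.
            t = a0 ** (-e) * (1 - 2.0 ** (-e)) / (e * k)
        times.append(t)
    return times

# Compare a regime where progress lags effort (r < 1), matches it (r = 1),
# and outstrips it (r > 1).
for r in (0.5, 1.0, 2.0):
    print(r, [round(t, 3) for t in doubling_times(r)])
```

In this sketch, each doubling takes less time than the last when `r > 1` (the feedback loop wins and progress accelerates), the same time when `r = 1`, and more time when `r < 1` (diminishing returns win). The sketch ignores the compute and training-time bottlenecks.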

If such an SIE occurs, the first AI systems capable of fully automating AI development could potentially create dramatically more advanced AI systems within months, even with fixed computing power.

We examine two major obstacles that could prevent an SIE: (1) the fixed amount of computing power limits how many AI experiments can be run in parallel, and (2) training each new generation of AI system could take months. While these bottlenecks will slow AI progress, we find that plausible workarounds exist which may allow for an SIE nonetheless. For example, algorithmic improvements have historically increased the efficiency of AI experiments and training runs, suggesting that training runs and experiments could be progressively sped up, enabling AI progress to continually accelerate despite these obstacles.

Finally, because such a dramatic acceleration in AI progress would exacerbate risks from AI, we discuss potential mitigations. These mitigations include monitoring for early signs of an SIE and implementing robust technical safeguards before automating AI R&D.

My expectation is that software without humans in the loop evaluating it will fall prey to Goodhart's law and overfit to the metrics/measures given.

Daniel, you provide good evidence that we will experience a period of SIE. Still, I think we can make a second argument that this period of SIE will come to an end. Perhaps it even points towards a second way to assess the consequences of an SIE.

My notion of asymptotic performance is easiest to see on a much simpler problem. Consider the task of doing parallel multiplication in silicon. Over the years we have definitely improved multiplication performance in speed and chip area (for a fixed lithography tech level). I expect that if the speed of human innovation had somehow been proportional to the current multiplication speed, then we would have seen a period of SIE for chip multipliers. Still, as our designs approached the (unknown) asymptotic limit of multiplication performance in our chip design, this explosion would have leveled off again.

In the same way, if we fix the task of running an AI agent capable of ASARA and fix the HW, then there must exist an asymptotically best design that is theoretically possible. From this it follows that the period of SIE must stop as designs approach this asymptote.

This raises an interesting secondary question: How many multiples exist between our first ASARA system and the asymptotically best one? If that is 10x, that implies a certain profile for SIE; if it is 10,000x, then it is a very different profile for SIE. In the end it might be this multiple, rather than the velocity of SIE, that has greater sway over its societal outcome.

Thoughts on this?

--Dan

We agree there is some limit. We discuss this in the report (from footnote 26):

Determining how high this limit is above the first ASARA systems is a very difficult question. That said, we think there are reasons to suspect the limit is far above the first ASARA systems:

It’s an interesting hypothesis. I think one way in which an SIE can be encouraged is through AI- and data-enabled financial / risk modelling of any given R&D project.

I was writing on this yesterday, serendipitously!

AI financial risk quantification might significantly improve the accuracy of priors or other probabilistic model variables that evaluate any given R&D IP for a market. This means we might well be on the cusp of a gradual transition to an economy that is increasingly (one day entirely?) R&D-focused, on the assumption that AIs or AI-enabled R&D are more likely to perform competitively, for either direct or indirect reasons. (I think the psychological component of AI augmenting the way people think about problems, or acting as a copilot to solve them, is still an open area for approach…)