The current US administration is attempting an authoritarian takeover. This takes years and might not be successful. My Manifold question puts an attempt to seize power if they lose legitimate elections at 30% (n=37). I put it much higher.[1]

Not only is this concerning by itself, it also incentivizes them to achieve a decisive strategic advantage over pro-democracy factions via superintelligence. As a consequence, they may be willing to rush and cut corners on safety.

Crucially, this relies on them believing superintelligence can be achieved before a transfer of power.

I don't know how much the belief in superintelligence has spread into the administration. I don't think Trump is 'AGI-pilled' yet, but maybe JD Vance is? He made an accelerationist speech. Making them more AGI-pilled and advocating for nationalization (like Aschenbrenner did last year) could be very dangerous.

[1] So far, my pessimism about US Democracy has put me in #2 on the Manifold topic, with a big lead over other traders. I'm not a Superforecaster though.

The forum likes to catastrophize Trump but I need to point out a few things for the sake of accuracy since this is very misleading and highly upvoted.

- The current administration has done many things that I find horrible, but I don't see any evidence of an authoritarian takeover. Being hyperbolic isn't helpful.

- Your Manifold question is horribly biased because you are the author and made it very biased. First, there is your bias in how you will resolve the question. Second, the wording of the question comes off as incredibly biased. For example, saying that Bush v Gore counts as a coup or "Anything that makes the person they try to put in power illegitimate in my judgment,". Your judgment is doing a lot of heavy lifting there.

- I think it's important to quantify this supposed incentive. Needless to say, I think it's very low.

I don't think it matters much, but I was Manifold's #1 trader until I stopped trading, and I'm fairly well regarded as a forecaster.

> The forum likes to catastrophize Trump but I need to point out a few things for the sake of accuracy since this is very misleading and highly upvoted.
>
> - The current administration has done many things that I find horrible, but I don't see any evidence of an authoritarian takeover. Being hyperbolic isn't helpful.

I think this statement is highly misleading. First, I think compared to most other fora and groups, this Forum is decidedly not catastrophizing Trump.

Second, if you don't see "any evidence of an authoritarian takeover" then you are clearly not paying very much attention.

I think there is a fair debate to be had about how seriously to take various signs of authoritarianism on the part of the Administration, but "seeing no evidence of it" is not really consistent with the signals one does readily find when paying attention, such as:

- an attack on the independence of the judiciary and law firms, complaining about the fact that courts exercise their legitimate powers

- flirting with the idea of being in defiance of court orders

- talking about a third term

- praising Putin, Orban, and other authoritarians

- undermining due process

For the record, by authoritarian takeover I mean a gradual process aiming for a situation like Hungary (which they've frequently cited as their inspiration and something to aspire to). Given that Trump tried to orchestrate a coup the last time he was in office, I don't think it's a hyperbolic claim to say he's trying again this time. I'm also not making any claims about the likelihood of success.

> is only getting the upvotes (at the time I posted, it was all upvotes and "agree" reacts), because of the forum's political bias.

I think this is very uncharitable to other Forum users. (Unless you meant "is getting only upvotes [..]")

We’re probably already violating Forum rules by discussing partisan politics, but I’m curious to hear how you view Trump’s claim that he is “not joking” about a third term. Is this:

- A lie (it cannot be hyperbole as the claim he made was very specifically framed)

- Legal under the constitution, because he would do it via running for Vice President and having the elected President resign, and anything technically legal is not an ‘authoritarian takeover’

- Illegal under the constitution, but he would legally amend the constitution to remove term limits

- Something else?

And then, for whichever you believe, could you explain how it isn’t an authoritarian takeover?

(I chose this example because it’s relatively clear-cut, but we could point to Trump v. United States, the refusal to follow court orders related to deportations, instructing the AG not to prosecute companies for unbanning TikTok, the attempts from his surrogates to buy votes, freezing funding for agencies established by acts of Congress, bombing Yemen without seeking approval from Congress, kidnapping and holding legal residents without due process, etc.; I just think those have greyer areas)

I think 1, 3, and 4 are all possible.

Trump and crew spout millions of lies. It's very common at this point. If you get worked up about every one of these, you're going to lose your mind.

Look, I'm not happy about this Trump stuff either. It's incredibly destabilizing for many reasons. But you are going to lose focus on important things if you get swept up into the daily Trump news. If you are focused on AI safety or animal welfare or poverty or whatever it may be, your most effective thing will almost certainly be focusing on something else.

I'm starting a discussion group on Signal to explore and understand the democratic backsliding of the US at ‘gears-level’. We will avoid simply discussing the latest outrageous thing in the news, unless that news is relevant to democratic backsliding.

Example questions:

- “how far will SCOTUS support Trump's executive overreach?”

- “what happens if Trump commands the military to support electoral fraud?”

- "how does this interact with potentially short AGI timelines?”

- "what would an authoritarian successor to Trump look like?"

- "are there any neglected, tractable

A lot of post-AGI predictions are more like 1920s predicting flying cars (technically feasible, maximally desirable if no other constraints, same thing as current system but better) instead of predicting EasyJet: crammed low-cost airlines (physical constraints imposing economic constraints, shaped by iterative regulation, different from current system)

I just learned that Lawrence Lessig, the lawyer who is/was representing Daniel Kokotajlo and other OpenAI employees, supported and encouraged electors to be faithless and vote against Trump in 2016.

He wrote an opinion piece in the Washington Post (archived) and offered free legal support. The faithless elector story was covered by Politico, and was also supported by Mark Ruffalo (the actor who recently supported SB-1047).

I think this was clearly an attempt to steal an election and would discourage anyone from working with him.

I expect someone to eventually sue AGI companies for endangering humanity, and I hope that Lessig won't be involved.

I don't understand why so many are disagreeing with this quick take, and would be curious to know whether it's on normative or empirical grounds, and if so where exactly the disagreement lies. (I personally neither agree nor disagree as I don't know enough about it.)

From some quick searching, Lessig's best defence against accusations that he tried to steal an election seems to be that he wanted to resolve a constitutional uncertainty. E.g.,: "In a statement released after the opinion was announced, Lessig said that 'regardless of the outcome, it was critical to resolve this question before it created a constitutional crisis'. He continued: 'Obviously, we don’t believe the court has interpreted the Constitution correctly. But we are happy that we have achieved our primary objective -- this uncertainty has been removed. That is progress.'"

But it sure seems like the timing and nature of that effort (post-election, specifically targeting Trump electors) suggest some political motivation rather than purely constitutional concerns. As best as I can tell, it's in the same general category of efforts as Giuliani's effort to overturn the 2020 election, though importantly different in that Giuliani (a) had the support and close collaboration of the incumbent, (b) seemed to actually commit crimes doing so, and (c) did not respect court decisions the way Lessig did.

Ray Dalio is giving out free $50 donation vouchers: tisbest.org/rg/ray-dalio/

Still worked just a few minutes ago

Common prevalence estimates are often wrong. Example: snakebites and my experience reading Long Covid literature.

Both institutions like the WHO and the academic literature appear to be incentivized to exaggerate. I think the Global Burden of Disease might be a more reliable source, but I have not looked into it.

I advise everyone using prevalence estimates to treat them with some skepticism and look up the source.

Not that we can do much about it, but I find the idea of Trump being president in a time that we're getting closer and closer to AGI pretty terrifying.

A second Trump term is going to have a lot more craziness and far fewer checks on his power, and I expect it would have significant effects on the global trajectory of AI.

Really interesting initiative to develop ethanol analogs. If successful, replacing ethanol with a less harmful substance could really have a big effect on global health. The CSO of the company (GABA Labs) is prof. David Nutt, a prominent figure in drug science.

I like that the regulatory pathway might be different from most recreational drugs, which would be very hard to get de-scheduled.

I'm pretty skeptical that GABAergic substances are really going to cut it, because I expect them to have pretty different effects to alcohol. We already have those (L-theanine, saffron, kava, kratom) and they aren't used widely. But who knows, maybe that's just because ethanol-containing drinks have received a lot of optimization in terms of taste, marketing, and production efficiency.

It also seems like finding a good compound by modifying ethanol would be hard, because it's not a great lead compound in terms of toxicity (I expect).

AI vs. AI non-cooperation incentives

This idea had been floating in my head for a bit. Maybe someone else has made it (Bostrom? Schulman?), but if so I don't recall.

Humans have stronger incentives to cooperate with humans than AIs have with other AIs. Or at least, here are some incentives working against AI-AI cooperation.

When humans dominate other humans, there is only a limited ability to control them or otherwise extract value, in the modern world. Occupying a country is costly. The dominating party cannot take the brains of the dominated party and run i...

There is a natural alliance that I haven't seen happen, but both are in my network: pandemic preparedness and covid-caution. Both want clean indoor air.

The latter group of citizens is a very mixed group, with both very reasonable people and unreasonable 'doomers'. Some people have good reason to remain cautious around COVID: immunocompromised people & their household, or people with a chronic illness, especially my network of people with Long Covid, who frequently (~20%) worsen from a new COVID case.

But these concerned citizens want clean air, and are willing to take action to make that happen. Given that the riskiest pathogens tend to also be airborne like SARS-CoV-2, this would be a big win for pandemic preparedness.

Specifically, I believe both communities are aware of the policy objectives below and are already motivated to achieve them:

1) Air quality standards (CO2, PM2.5) in public spaces.

Schools are especially promising from both perspectives, given that parents are motivated to protect their children & children are the biggest spreaders of airborne diseases. Belgium has already adopted regulations (although very weak, it's a good start), showing that this i...

I am very concerned about the future of US democracy and rule of law and its intersection with US dominance in AI. On my Manifold question, forecasters (n=100) estimate a 37% chance that the US will no longer be a liberal democracy by the start of 2029 [edit: as defined by V-DEM political scientists].

Project 2025 is an authoritarian playbook, including steps like 50,000 political appointees (there are ~4,000 appointable positions, of which ~1,000 change in a normal presidency). Trump's chances of winning are significantly above 50%, and even if he loses, Republic...

> On my Manifold question, forecasters (n=100) estimate a 37% that the US will no longer be a liberal democracy by the start of 2029.

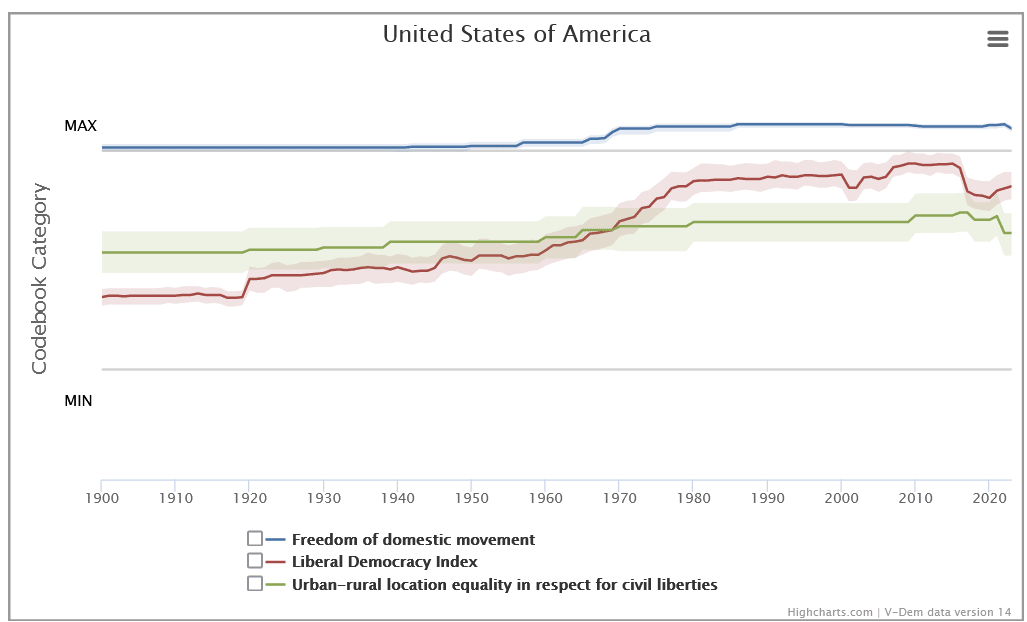

Your question is about V-Dem's ratings for the US, but these have a lot of problems, so I think they don't shine nearly as much light on the underlying reality as you suggest. Your Manifold question isn't really about the US being a liberal democracy, it's about V-Dem's evaluation. The methodology is not particularly scientific - it's basically just what some random academics say. In particular, this leaves it quite vulnerable to the biases of academic political scientists.

For example, if we look at the US:

A couple of things jump out at me here:

- The election of Trump is a huge outlier, giving a major reduction to 'Liberal Democracy'.

- This impact occurs immediately despite the fact that he didn't actually pass that many laws or make that many changes in 2017; I think this is more about perception than reality.

- The impact appears to be larger than the abolition of slavery, the passage of the civil rights act, the second world war, conscription or female suffrage. This seems very implausible to me.

- Freedom of domestic movement increased in 2020, despite the introdu

Chevron deference is a legal doctrine that limits the ability of courts to overrule federal agencies. It's increasingly being challenged, and may be narrowed or even overturned this year. https://www.eenews.net/articles/chevron-doctrine-not-dead-yet/

This would greatly limit the ability of, for example, a new regulatory agency on AI Governance to function effectively.

More:

- This argues it would lead to regulatory chaos, and not simply deregulation: https://www.nrdc.org/stories/what-happens-if-supreme-court-ends-chevron-deference

- This describes the Koch network influence on Clarence Thomas. The Kochs are behind the upcoming challenge to Chevron: https://www.propublica.org/article/clarence-thomas-secretly-attended-koch-brothers-donor-events-scotus

I'm very skeptical of this. Chevron deference didn't even exist until 1984, and the US had some pretty effective regulatory agencies before then. Similarly, many states have rejected the idea of Chevron deference (e.g. Delaware) and I am not aware of any strong evidence that they have suffered 'chaos'.

In some ways it might be an improvement from the perspective of safety regulation: getting rid of Chevron would reduce the ability of future, less safety-cautious administrations to relax the rules without the approval of Congress. To the extent you are worried about regulatory capture, you should think that Chevron is a risk. I think the main crux is whether you expect Congress or the Regulators to have a better security mindset, which seems like it could go either way.

In general the ProPublica link seems more like a hatchet job than a serious attempt to understand the issue.

I am concerned about the H5N1 situation in dairy cows and have written an overview document to which I occasionally add new learnings (new to me or new to the world). I also set up a WhatsApp community that anyone is welcome to join for discussion & sharing news.

In brief:

- I believe there are quite a few (~50-250) humans infected recently, but no sustained human-to-human transmission

- I estimate the Infection Fatality Rate to be substantially lower than the ALERT team does (theirs is 63% that CFR >= 10%), something like 80% CI = 0.1 - 5.0

- The government's response

Given how bird flu is progressing (spread in many cows, virologists believing rumors that humans are getting infected but no human-to-human spread yet), this would be a good time to start a protest movement for biosafety/against factory farming in the US.

Monoclonal antibodies can be as effective as vaccines. If they can be given intramuscularly and have a long half-life (like Evusheld, ~2 months), they can act as a prophylactic that needs a booster once or twice a year.

They are probably neglected as a method to combat pandemics.

Their efficacy is easier to evaluate in the lab, because they generally don't rely on people's immune system.
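The booster-interval claim above can be sanity-checked with a back-of-the-envelope decay calculation. This is only a minimal sketch: it assumes simple first-order (exponential) elimination and a 60-day half-life as a stand-in for "~2 months", and the function names are made up for illustration. It says nothing about what concentration is actually protective.

```python
import math


def fraction_remaining(days: float, half_life_days: float = 60.0) -> float:
    """Fraction of the initial antibody level left after `days`,
    assuming first-order (exponential) elimination."""
    return 0.5 ** (days / half_life_days)


def days_until_fraction(target_fraction: float, half_life_days: float = 60.0) -> float:
    """Days until the level has dropped to `target_fraction` of the initial level."""
    return half_life_days * math.log2(1.0 / target_fraction)


if __name__ == "__main__":
    # Compare a twice-a-year and a once-a-year booster schedule.
    for label, days in (("6 months", 180), ("12 months", 360)):
        print(f"{label}: {fraction_remaining(days):.1%} of the initial level remains")
```

Under these assumptions, three half-lives pass in six months and six in a year, so whether one or two boosters per year is enough depends on how far above the protective level the initial dose starts.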

This is a small write-up of when I applied for a PhD in Risk Analysis 1.5 years ago. I can elaborate in the comments!

I believed doing a PhD in risk analysis would teach me a lot of useful skills to apply to existential risks, and it might allow me to directly work on important topics. I worked as a Research Associate on the qualitative side of systemic risk for half a year. I ended up not doing the PhD because I could not find a suitable place, nor do I think pure research is the best fit for me. However, I still believe more EAs should study something along the lines of risk analysis, and it's an especially valuable career path for people with an engineering background.

Why I think risk analysis is useful:

EA researchers rely a lot on quantification, but use a limited range of methods (simple Excel sheets or Guesstimate models). My impression is also that most EAs don't understand these methods enough to judge when they are useful or not (my past self included). Risk analysis expands this toolkit tremendously, and teaches stuff like the proper use of priors, underlying assumptions of different models, and common mistakes in risk models.
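To make the point about underlying assumptions concrete, here is a minimal Monte Carlo sketch of the kind of model a Guesstimate sheet encodes. Everything in it is an illustrative assumption, not taken from any real analysis: the lognormal shapes, the medians and spreads, and the function name `simulate_cost_per_outcome`.

```python
import math
import random
import statistics


def simulate_cost_per_outcome(n_samples: int = 20_000, seed: int = 0) -> list[float]:
    """Sample cost per outcome = cost / effect, with both inputs uncertain.

    Assumed (made-up) inputs:
      cost   ~ lognormal, median 1000, sigma 0.5
      effect ~ lognormal, median 10,   sigma 1.0
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        cost = rng.lognormvariate(math.log(1000), 0.5)
        effect = rng.lognormvariate(math.log(10), 1.0)
        samples.append(cost / effect)
    return samples


if __name__ == "__main__":
    samples = simulate_cost_per_outcome()
    cuts = statistics.quantiles(samples, n=20)  # 5%, 10%, ..., 95% cut points
    print(f"median: {statistics.median(samples):.0f}")
    print(f"mean:   {statistics.fmean(samples):.0f}")
    print(f"90% interval: {cuts[0]:.0f} to {cuts[-1]:.0f}")
```

With heavy-tailed inputs like these, the mean sits well above the median, so a single "best guess" cell in a spreadsheet can hide most of the story. Choosing the distribution shape is itself a modelling assumption, which is exactly the kind of judgement the paragraph above is about.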

The field of Risk Analysis

Risk analysis is...

Update to my Long Covid report: https://forum.effectivealtruism.org/posts/njgRDx5cKtSM8JubL/long-covid-mass-disability-and-broad-societal-consequences#We_should_expect_many_more_cases_

[UPDATE NOV 2022: turns out the forecast was wrong: incidence (new cases) is decreasing, severity of new cases is decreasing, and significant numbers of people are recovering in the <1 year category. I now expect prevalence to stagnate or decrease for a while, and then slowly grow over the next few years.]

I still believe the other sections to be roughly correct, i...

I have a concept of paradigm error that I find helpful.

A paradigm error is the error of approaching a problem through the wrong, or an unhelpful, paradigm. For example, to try to quantify the cost-effectiveness of a long-termism intervention when there is deep uncertainty.

Paradigm errors are hard to recognise, because we evaluate solutions from our own paradigm. They are best uncovered by people outside of our direct network. However, it is more difficult to productively communicate with people from different paradigms as they use different language.

It is...

I'm predicting a 10-25% probability that Russia will use a weapon of mass destruction (likely nuclear) before 2024. This is based on only a few hours of thinking about it with little background knowledge.

Russian pro-war propagandists are hinting at the use of nuclear weapons, according to the latest episode of the BBC podcast Ukrainecast, "What will Putin do next?": https://podcastaddict.com/episode/145068892

There's a general sense that, in light of recent losses, something needs to change. My limited understanding sees 4 op...

Large study: Every reinfection with COVID increases risk of death, acquiring other diseases, and long covid.

https://twitter.com/dgurdasani1/status/1539237795226689539?s=20&t=eM_x9l1_lFKqQNFexS6FEA

We are going to see a lot more issues with COVID still, including massive amounts of long COVID.

This will affect economies worldwide, as well as EAs personally.

Ah sorry I'm not going to do that, mix of reasons. Thanks for offering it though :)

Here's an argument I made in 2018 during my philosophy studies:

A lot of animal welfare work is technically "long-termist" in the sense that it's not about helping already existing beings. Farmed chickens, shrimp, and pigs only live for a couple of months, farmed fish for a few years. People's work typically takes longer to impact animal welfare.

For most people, this is no reason to not work on animal welfare. It may be unclear whether creating new creatures with net-positive welfare is good, but only the most hardcore presentists would argue against preventing and reducing the suffering of future beings.

But once you accept the moral goodness of that, there's little to morally distinguish the suffering from chickens in the near-future from the astronomic amounts of suffering that Artificial Superintelligence can do to humans, other animals, and potential digital beings. It could even lead to the spread of factory farming across the universe! (Though I consider that unlikely)

The distinction comes in at the empirical uncertainty/speculativeness of reducing s-risk. But I'm not sure if that uncertainty is treated the same as uncertainty about shrimp or insect welfare.

I suspect many people instead work on effective animal advocacy because that's where their emotional affinity lies and it's become part of their identity, because they don't like acting on theoretical philosophical grounds, and they feel discomfort imagining the reaction of their social environment if they were to work on AI/s-risk. I understand this, and I love people for doing so much to make the world better. But I don't think it's philosophically robust.

You make a lot of good points - thank you for the elaborate response.

I do think you're being a little unfair and picking only the worst examples. Most people don't make millions working on AI safety, and not everything has backfired. AI x-risk is a common topic at AI companies, they've signed the CAIS statement that it should be a global priority, technical AI safety has a talent pipeline and is a small but increasingly credible field, to name a few. I don't think "this is a tricky field to make a robustly positive impact so as a careful person...