TLDR: We need more AI Risk-themed events, so I outlined four such events with different strategies. These are: online conferences, one-day summits, three-day conferences and high-profile events for building bridges between various communities.

End of tldr:

I have just written a post critiquing Stuart Russell’s AI Safety and Ethics conference - I think it’s only fair that I share my take on what more impactful AI risk events could look like.

Conferences are a brilliant, if not essential, way of generating focus, community and consensus. In a field as young and fast-moving as AI safety, that’s all the more the case. Sadly, I feel that too few of these events are happening at the moment.

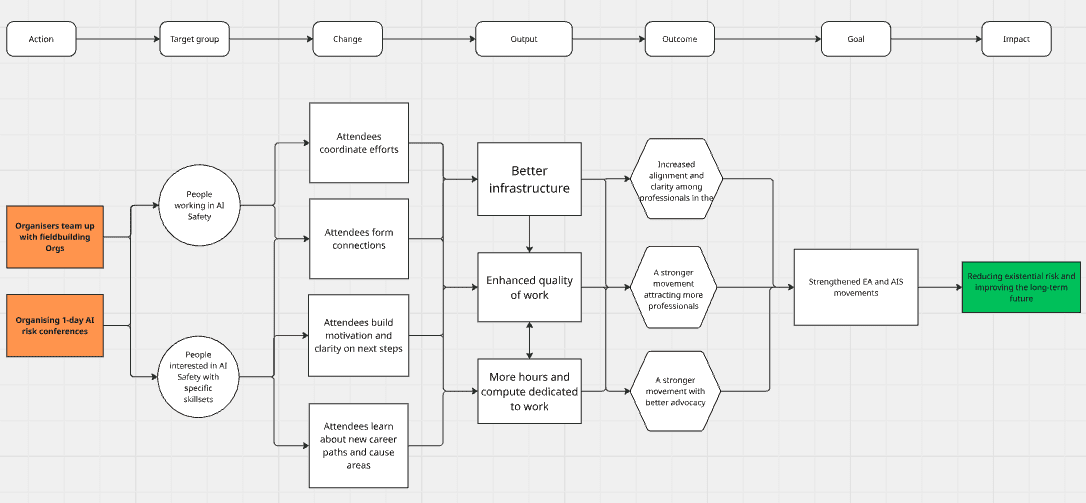

To anchor the value of such events, I propose a general Theory of Change (TOC) for AI Safety conferences. Events like these can lead attendees to:

- Form connections

- Coordinate efforts

- Learn about career paths and cause areas

- Build motivation and clarity on next steps

These changes increase the hours and resources dedicated to AI Safety work, improving infrastructure and the quality of contributions. Over time, this leads to:

- Greater alignment within the professional community

- Stronger advocacy and external legitimacy

- A more attractive and resilient movement

Ultimately, we’re reinforcing pathways that create a stronger movement, powered by the connections and insight fostered by these events. See the graph shown below here.


I will now outline different types of events that might emulate this process, with the fourth event type going even further.

Disclaimer: While I haven’t yet run a large-scale conference, I’ve attended many and consulted with experienced organizers to ground these suggestions in practical know-how.

An online conference

Why online?

Online events are cost-effective, accessible, and scalable.

Currently, the only[1] multi-day online programming on offer in the AI Safety movement is Apart Research’s hackathons. These hackathons are great, but have some limitations compared to online conferences. They mainly attract a technical crowd and offer limited networking opportunities compared to events with a dedicated networking app.

A well-facilitated online conference can bridge this gap.

Target audience

Existing AI Safety community: Especially those already familiar with EAGx or similar formats. These attendees know how to use platforms like Swapcard and will benefit from dedicated AI Safety branding.

Interested newcomers: Individuals not yet engaged with EA or rationalist communities, but interested in AI Safety. These folks may be unfamiliar with the logistics of effective networking and may require nudging and onboarding support. Organisers can team up with relevant fieldbuilders to scout promising talent profiles from this group.

Function

I see the purpose of this conference as twofold:

- Bring interested people into the movement

There is a lot of latent talent that is interested in reducing AI risk, but hasn’t yet made a connection to the movement. An online conference can be a low-bar and cost-effective way of exposing 100s, or even 1000s of people to AI Safety and adjacent topics. These people would have the opportunity to attend high-quality talks on risks from AI, receive advice on how to get involved, and attend meetups[2] and meetings.

- Supporting the existing AIS community in networking

Large chunks of the AI Safety community have already been to an EAG(x) conference. They know their way around Swapcard and understand that booking 1-1 meetings is how they maximise the value they get from the event. But having an explicitly AI risk-branded conference would allow us to expand the scope of the conference to those who are deeply engaged with AI Safety, but aren’t familiar with or might be put off by the EA brand.

Outreach Strategies

1) The existing AI Safety community

Simply put, the AI safety community can be divided into two groups: those who are familiar with EA and those who are not.[3]

The former group is rather easy to invite, as you can use the same avenues that people use to promote EAG(x) conferences. But the second group, having engaged in AIS without being part of the EA and rationalist communities, might be trickier to reach.

One will actually need to make an effort.

The low-hanging fruit here is going to fieldbuilding organisations that work with and advise people outside of the EA and rationalist movements, such as Talos, Arkose, Successif, HIP, 80k[4], Heron, Network for Emerging Technology, LASR, etc.

Through PIBBSS, you could also tap into academia. Their past fellows would likely share it with their colleagues. Someone has taken the time to scan ethics journals for AI alignment publications, which is also great. Arkose has a long list of AI researchers who have published in top AI journals.

2) People interested in AI Safety

Given that online events are really cost-effective to scale, it would be a missed opportunity not to use digital marketing to attract people to the conference with paid advertisements. You can target whoever you want, wherever they are. Your ad budget will also go much further in developing countries, so if you think it’s particularly important to expose talent to AI Safety in those parts of the world, you can do so quite cost-effectively.

Note that people who are going to be coming from ads will, on average, be less active during the conference compared to “the familiars”. Because of this, active outreach by fieldbuilders is particularly important, as well as getting this group onto a monthly newsletter.

One-day AI risk summits

Format and Purpose

In the same way that we can borrow many of the best practices from EAGxVirtual for online conferences, we can rely on CEA’s new event series called EA Summits. These are one-day events that bring together the existing local community and also serve as a low-bar introduction for people interested in the movement. They are great for people in the early stages of their career, who can gain awareness, motivation, and initial connections.

Target Audience

I see the target audience for this type of event as fairly similar to what I outlined above for online conferences, except that you would want to focus your outreach effort on the city where the event is going to be held.

Logistics

I would not invest in providing stipends for travel, as people are less likely to want to attend from far away anyway for a one-day event. You should also be more than able to fill the 50-150 attendee cap just from the organising city if you do your outreach right. It’s also worth flagging that this event can be put on by newer organisers, which can be a great stepping stone for making even more ambitious events happen in the future.

3-day AI Safety conferences

Multi-day and regional

By now, you probably know where I’m going with this. It’s time to look for inspiration at EAG(x) conferences. These longer conferences offer more opportunity for in-depth networking and advanced topics, and they allow for a higher selection bar. I would have this type of conference aimed at people from a given region within the existing AIS community[5].

The reason for this is that a longer event will have higher costs per attendee, so you want to invest this money (including travel stipends) in people who are actively working in AI Safety or have already decided that they want to transition their career. These events can thus catalyse deeper collaboration and regional networks.

Building Bridges for Beneficial AI Conference

Disclaimer: The draft of this section has gotten some reasonable pushback, so I no longer endorse the exact vision that’s written below. To save time, however, I didn’t change the main text, but included the feedback at the end. I hope this section is still useful to give a sense of what an ambitious conference might look like!

Now it’s time to think big. To my knowledge, there hasn’t been any conference with a similar format to what I’m going to outline; the closest thing is Stuart Russell’s annual IASEAI (pronounced 'eye-see-eye') conference, which would have come closer still with better-facilitated networking and a broader target audience. I also want to just go out and say that this is a high-stakes conference to put on, and should be organised by an experienced team.

Target Audience

As the title suggests, this event would focus on bringing together 4 groups of people: those from the AI Safety, AI Security and AI Ethics movements. The 4th target audience is those whom the previous three groups try to influence, namely, policymakers.

Outreach

I have already outlined how to invite the AI Safety crowd, but this time I would employ a higher bar for attendance: those who already work in AI Safety and whom you can also trust not to scare away politicians[6].

I expect AI ethicists to be relatively easy to reach, too. First, I would just ask the IASEAI organisers how they did it for their conference, also reaching out to near-term risk-focused think-tanks and the more professional advocacy groups. You can check professors from local universities doing such work, and ask them to come and invite their network. Maybe you can explore online professional communities.

I know much less about the AI Security crowd, and I expect them to be harder to reach. You can contact companies, academia, use LinkedIn. If you have contacts at the government, you can try to get people from national security (not limited to AI-folk) to attend.

As for policymakers, it is best if you already have a network within this group, or at least know people from think-tanks who trust you and are willing to invite their treasured contacts. Interestingly, I suspect you can use carefully targeted social media ads for this group as well, as it’s possible to limit such advertisements to a ~1 km radius, allowing you to roughly target sets of buildings where such people work.

A challenge for organisers to consider regards a fifth crowd I didn’t mention and personally would not gear the conference towards - people working at frontier AI companies, who also don’t happen to fall into one of the first three categories mentioned above. After all, this is the crowd whose work we are trying to mitigate the harms of, right? This is something the organisers should really think hard about.

I would probably bite the bullet and not invite this crowd, as I wouldn’t want their lobbyists to decrease the amount of time the first three crowds can talk to policymakers. Lobbyists from AI companies probably have a much easier time talking to policymakers outside of such conferences compared to the other groups I mentioned. Having said that, I would still feel quite weird about rejecting a high-profile person’s application from a leading AI company, so take what I’m saying with a grain of salt.

Content

As for content, you want something that is introductory to accommodate policymakers who have little context, introducing them to risks from AI and how to mitigate them through governance. It could also include joint panels of experts from these groups and programming that focuses on building a collaborative atmosphere.

Location

In terms of location, Brussels seems like a natural fit for the type of collaborative event I’m proposing, and to a lesser extent, London and Berlin too. In Paris, you would likely want to frame such a project as more of an AI Security event due to the influence Yann LeCun and Mistral have had on the politicians and culture around AI Safety.[7] I know less about the American context, but I think such an event could work in liberal places like California. You absolutely don’t want to do this in Washington. Instead, you should have an event there that focuses on AI Security so as not to put off Republicans, especially since JD Vance has recently mushed together AI Safety & Ethics with wokeness.

Feedback and caveats

As I mentioned, the draft I sent to some experienced event organisers has received some pushback that I roughly agree with. These were the main points:

- The event is trying to do too much at the same time. It should have one specific goal instead of trying to do bridge-building as well as influencing policymakers.

- Some thought it would be more impactful to influence this group via private, closed-door events with a trusted group, not a big conference.

- There is some worry about trying to bring the safety and ethics crowds together, as they historically have had animosity between them.

Conclusion

We have seen that there is a lot of fertile ground for organising various events. I think these types of events can and should be put on in any important location, such as NYC, Washington, SF, Amsterdam, Germany, Paris, etc. By default, I think you shouldn’t wait around for others to organise them, and assume they won’t happen unless you create them yourself. The different events require different amounts of experience, so based on where you are in your career, you should choose accordingly. Of course, you should still coordinate with other organisations.

Big thank you to Ollie and Ivan for reviewing this draft.

- ^

It would be unfair to omit VAISU/MAISU from here; however, they focus on people who are already involved with AI Safety.

- ^

The platform called Icebreakers is really great for this, though it is more on the expensive side. EAG(x)Virtual crews had a lot of success using it to facilitate connections in a non-awkward manner.

- ^

See this post on the Relationship between EA Community and AI safety.

- ^

My understanding is that in previous years they have been focusing on advising people with relatively high context, but I suspect that this has been changing, especially with their recent pivot.

- ^

Having said that, I would make exceptions for experienced professionals new to the field who have particularly neglected skillsets, such as those with a background in infosecurity or policy.

- ^

Here I’m thinking of the crowds that can come across as too alarmist and/or less professional. This is a weakly held take though, and I appreciate that there is a nuanced debate to be had around this topic about wanting to widen the Overton window.

- ^

Though one could argue this is exactly why you should have an explicitly AIS-themed conference!
