There are many things in EA community-building that the Centre for Effective Altruism is not doing. We think some of these things could be impactful if well-executed, even though we don't have the resources to take them on. Therefore, we want to let people know what we're not doing, so that they have a better sense of how neglected those areas are.

To see more about what we are doing, read our plans for 2021 and our summary of our long-term focus.

Things we're not actively focused on

We are not actively focusing on:

- Reaching new mid- or late-career professionals

- Reaching or advising high-net-worth donors

- Fundraising in general

- Cause-specific work (such as community building specifically for effective animal advocacy, AI safety, biosecurity, etc.)

- Career advising

- Research, except about the EA community

- Content creation

- Donor coordination

- Supporting other organizations

- Supporting promising individuals

By “not actively focusing on”, I mean that some of our work will occasionally touch on or facilitate some of the above (e.g. if groups run career fellowships, or city groups do outreach to mid-career professionals), but our main efforts will be spent on other goals.

One caveat to the sections below: our community health team sometimes advises people who are working in these areas, even though the team doesn't do the object-level work itself. For example, they will sometimes advise on projects related to policy (even though none of them work on policy).

Reaching new mid- or late-career professionals

As mentioned in our 2021 plans, we intend to focus our efforts to bring new people into the community on students (especially at top universities) and young professionals. We intend to work to retain mid- and late-career professionals who are already highly engaged in EA, but we do not plan to work to recruit more mid- or late-career people.

Reaching or advising high-net-worth donors

We haven't done this for a while, but other EA-aligned organizations are working in this area, including Longview Philanthropy and Effective Giving.

Fundraising in general

Not focusing on fundraising is a change for us; we used to run EA Funds and Giving What We Can. These projects have now spun out of CEA, and we hope that this will give both these projects and CEA a clearer focus.

Cause-specific work (such as community building specifically for effective animal advocacy, AI safety, biosecurity, etc.)

As part of our work with local groups, we may work with group leaders to support cause-specific fellowships, workshops, or 1:1 content. However, we do not have any other plans in this area.

Career advising

As part of our work with local groups, we may work with group leaders to support career fellowships, workshops, or 1:1 content. And at our events, we try to match people with mentors who can advise them on their careers. We do not have any other plans in this area.

80,000 Hours clarified what they are and aren’t doing in this post.

Research, except about the EA community

We haven't had full-time research staff since ~2017, although we did support the CEA summer research fellowship in 2018 and 2019. We’ll continue to work with Rethink Priorities on the EA Survey, and to do other research that informs our own work.

We’ll also continue to run the EA Forum and EA Global, which are venues where researchers can share and discuss their ideas. We believe that supporting these discussions ties in with our goals of recruiting students and young professionals and keeping existing community members up to speed with EA ideas.

Content creation

We curate content when doing so supports productive discussion spaces (e.g. inviting speakers to events, developing curricula for fellowships run by groups). We occasionally write content on community health issues. We also try to incentivize the creation of quality content by giving speakers a platform and giving out prizes on the EA Forum. Aside from this, we don’t have plans to create more content.

Donor coordination

As mentioned above, we have now spun out EA Funds, which has some products (e.g. the Funds and the donor lottery) that help with donor coordination.

Supporting other organizations

We do some work to support organizations as they work through internal conflicts and HR issues. We also provide operational support to 80,000 Hours, Forethought Foundation, EA Funds, Giving What We Can, and a longtermist project incubator. We also occasionally share ideas and resources — related to culture, epistemics, and diversity, equity, and inclusion — with other organizations. Other than this, we don’t plan to work in this space.

Supporting promising individuals

The groups team provides support and advice to group organizers, and the community health team provides support to people who experience problems within the community. We also run several programs for matching people up with mentors (e.g. as part of EA Global).

We do not plan to financially support individuals. Individuals can apply for financial support from EA Funds and other sources.

Conclusion

A theme in the above is that I view CEA as one organization helping to grow and support the EA community, not the sole organization which determines the community’s future. I think that this isn’t a real change: the EA community’s development was always influenced by a coalition of organizations. But I do think that CEA has sometimes aimed to determine the community’s future, or represented itself as doing so. I think this was often a mistake.

Starting a project in one of these areas

CEA has limited resources. In order to focus on some things, we need to deprioritize others.

We hope the information above encourages others to start projects in these areas. However, there are two things to consider before starting such a project.

First, we think that additional high-quality work in the areas listed above will probably be valuable, but we haven’t carefully considered this, and some projects within an area are probably much more promising than others. We recommend carefully considering which areas and approaches to pursue.

Second, we think there’s potential to cause harm in the areas above (e.g. by harming the reputation of the effective altruism movement, or poisoning the well for future efforts). However, if you take care to reflect on potential paths to harm and adjust based on critical feedback, we think you’re likely to avoid causing harm. (For more on avoiding harm, see resources by Claire Zabel, 80,000 Hours, and Max Dalton and Jonas Vollmer.)

Finally, even if CEA is doing something, it doesn’t mean no one else should be doing it. There can be benefits from multiple groups working in the same area.

If you’d like to start something related to EA community building, let us know by emailing Joan Gass; we can let you know what else is going on in the area. Since we’re focused on our core projects, we probably won’t be able to provide much help, but we can let you know about our plans and about any other similar projects we’re aware of. Additionally, the Effective Altruism Infrastructure Fund at EA Funds might be able to provide funding and/or advice.

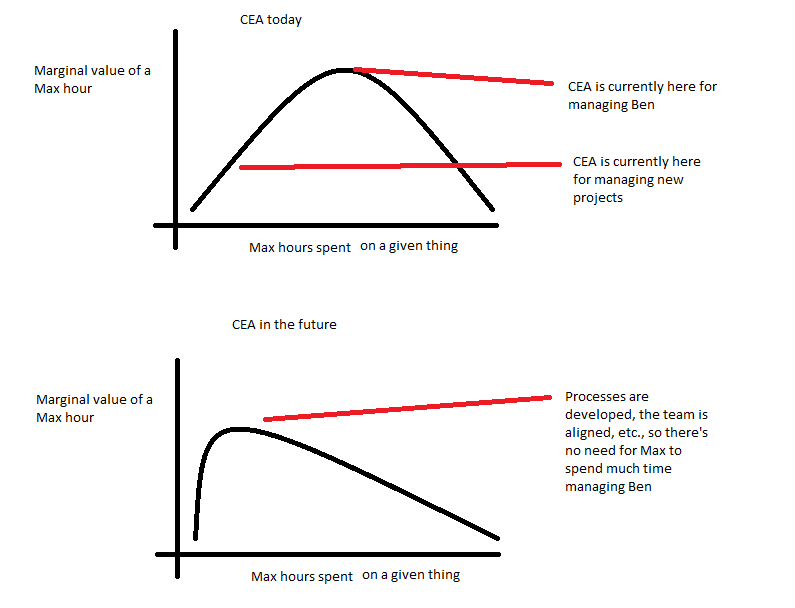

Hi Max,

Thanks for clarifying your reasoning here.

Again, if you think CEA shouldn’t expand, my guess is that it shouldn’t.

I respect your opinion a lot here and am really thankful for your work.

I think this is a messy issue. I spent a few hours trying to clarify my thoughts. I imagine what’s really necessary is broader discussion and research into expectations and models of the expansion of EA work, but of course that’s a lot of work. Note that I'm not particularly concerned with CEA becoming big; I'm more concerned with us aiming for some organizations to be fairly large.

Feel free to ignore this or just not respond. I hope it might provide information on a perspective, but I’m not looking for any response or to cause controversy.

What is organization centrality?

This is a complex topic, in part because the concept of “organizations” is a slippery one. I imagine what really matters is something like “coordination ability,” which typically requires some kind of centralization of power. My impression is that there’s a lot of overlap in donors and advisors around the groups you mention. If a few people call all the top-level shots (like funding decisions), then “one big organization” isn’t that different from a bunch of small ones. I appreciate the point about operations sharing; I’m sure some organizations have had subprojects that shared fewer resources than what you described. It’s possible to be very decentralized within an organization (think of a research lab with distinct product owners) and to be very centralized within a collection of organizations.

Ideally, I’d imagine that decisions about coordination centralization would be quite separate from decisions about formal nonprofit structure. You’re already sharing operations in an unconventional way. I could imagine cases where it would make sense to have many nonprofits under a single ownership (even if this ownership is not legally binding), perhaps to help with targeted fundraising or to spread out legal liability. I know many people and companies own several sub-LLCs or similar entities; I could see this being the main case.

-> If CEA is vetting which projects get made and expand, and hosts community health and other resources, then it’s not *that* much different from formally bringing these projects under its wing. I imagine that finding a structure in which CEA continues to offer organizational and coordination services as the base of organizations grows will be pretty tricky.

Again, what I would like to see is lots of “coordination ability,” and I expect that this could go further with centralized power that has the capacity to act on it. (I could imagine funders who technically have authority but don’t have the time to do much that’s useful with it.) If CEA (or another group) were able to be a dominant decision maker, and perhaps grow that influence over time, that could represent centralized control of power.

What can we learn from the past?

I’ve heard the histories of CEA and 80,000 Hours used as evidence in this way before. I agree with much of what you said here, but am unsure about the interpretations. What’s described is a very small sample, and we could learn different kinds of lessons from it.

Most of the non-EA organizations that I could point to as having important influence in my life are much bigger than 20 people. I’m very happy that Apple, Google, the Bill & Melinda Gates Foundation, OpenAI, DeepMind, the Electronic Frontier Foundation, universities, the Good Food Institute, and similar organizations exist.

It’s definitely possible to have too many goals, but that’s relative to size and existing ability. It wouldn’t have made sense for Apple to start out making watches and speakers, but it got there eventually, and is now doing a pretty good job at it (in my opinion). So I agree that CEA seems to have over-applied itself, but don’t think that means it shouldn’t be aiming to grow later on.

Many companies have had periods where they’ve diversified too quickly and suffered: Apple, famously, before Jobs came back; Amazon, apparently, after the dot-com bubble; arguably Google with Google X; the list goes on and on. But I’m happy these companies eventually fixed their mistakes and continued to expand.

“Many Small EA Orgs”

I like the idea of having lots of organizations, but I also like the idea of having at least some really big organizations. The Good Food Institute now seems to have a huge team despite being created just a few years ago, and it seems to be taking on correspondingly big projects.

I’m happy that only a few groups coordinate political campaigns; those seem pretty messy. True, the DNC in the US might have serious problems, but I think the answer would be a separate large group, not hundreds of tiny ones.

I’m also positive about 80,000 Hours, but I feel like we should be hoping for at least some organizations (like The Good Food Institute) to have much better outcomes. 80,000 Hours took quite some time to get to where it is today (I think it started around 2012?), and it is still rather small in the scheme of things. They have around 14 full-time employees; they seem quite productive, but not 2-5 orders of magnitude more productive than other organizations. GiveWell seems much more successful: not only did they grow a lot, but they also convinced a billionaire couple to help them spin off a separate entity that is now hugely important.

The costs of organizational growth vs. new organizations

Trust of key figures

It seems much more challenging to me to find people I would trust as nonprofit founders than people I would trust as nonprofit product managers. Currently we have limited availability of senior EA leaders, so it seems particularly important to select people for positions of power who already understand what these leaders consider to be valuable and dangerous. If a big problem happens, it seems much easier to remove a PM than a nonprofit executive director or similar.

Ease

Founding requires a lot of challenging tasks like hiring, operations, and fundraising, which many people aren’t well suited to. I’m founding a nonprofit now, and I've had to learn how to set one up and maintain it, which has been a major distraction. I’d be happier at this stage making a department inside a group that would do those things for me, even if I had to pay a fee.

It seems great that CEA did operations for a few other groups, but my impression is that you’re not intending to do that for many of the new groups you are referring to.

One related issue is that it can be quite hard for small organizations to attract talent. They typically have weak brands and little reputation. In situations where these organizations are actually strong (which should be many), having them be part of a bigger organization, even in brand alone, seems like a pretty clear win. On the flip side, if some projects will be controversial or done poorly, it can be useful to keep them outside a bigger organization (so they don't bring it down).

Failure tolerance

Not having a “single point of failure” sounds nice in theory, but it seems to me that the funders are the main thing that matters, and they are fairly coordinated (and should be). If they go bad, then no amount of reorganization will help us much. If they’re able to do a decent job, then they should help select leadership of big organizations that could do a good job, and/or help spin off decent subgroups in the case of emergencies.

I think generally effort going into “making sure things go well” is better than effort going into “making sure that disasters won’t be too terrible”; and that’s better achieved by focusing on sizable organizations.

Failure tolerance could also be worse in a distributed system; I expect it to be much easier to fire or replace a PM than to kick out a founder or move them around.

Expectations of growth

One question might be how ambitious we are regarding the growth of meta and longtermist efforts. I could imagine a world where we’re 100x the size 20 years from now, with a few very large organizations, but it’s hard to imagine how we could manage that many people with only tiny organizations.

TLDR

My read of your posts is that you are currently aiming for / expecting a future of EA meta where there are a bunch of very small (<20-person) organizations. This seems quite unusual compared to other similar movements I’m aware of. Very unusual actions often require much stronger cases than usual ones, and I don’t yet see such a case. The benefits of having at least a few very powerful meta organizations seem greater than the costs.

I’m thankful for whatever work you decide to pursue, and I strongly encourage trying things out, like encouraging many small groups. I mainly wouldn’t want us to over-commit to any strategy like that, though, and I would also like to encourage more reconsideration, especially as new evidence emerges.