There are many things in EA community-building that the Centre for Effective Altruism is not doing. We think some of these things could be impactful if well-executed, even though we don't have the resources to take them on. Therefore, we want to let people know what we're not doing, so that they have a better sense of how neglected those areas are.

For more about what we are doing, see our plans for 2021 and our summary of our long-term focus.

Things we're not actively focused on

We are not actively focusing on:

- Reaching new mid- or late-career professionals

- Reaching or advising high-net-worth donors

- Fundraising in general

- Cause-specific work (such as community building specifically for effective animal advocacy, AI safety, biosecurity, etc.)

- Career advising

- Research, except about the EA community

- Content creation

- Donor coordination

- Supporting other organizations

- Supporting promising individuals

By “not actively focusing on”, I mean that some of our work will occasionally touch on or facilitate some of the above (e.g. if groups run career fellowships, or city groups do outreach to mid-career professionals), but our main efforts will be spent on other goals.

One caveat to what follows: our community health team sometimes advises people who work in the areas below, although the team does not do the object-level work itself. For example, team members sometimes advise on policy-related projects, even though none of them work on policy.

Reaching new mid- or late-career professionals

As mentioned in our 2021 plans, when bringing new people into the community we intend to focus on students (especially at top universities) and young professionals. We intend to work to retain mid- and late-career professionals who are already highly engaged in EA, but we do not plan to recruit more mid- or late-career people.

Reaching or advising high-net-worth donors

We haven't done this for a while, but other EA-aligned organizations are working in this area, including Longview Philanthropy and Effective Giving.

Fundraising in general

Not focusing on fundraising is a change for us; we used to run EA Funds and Giving What We Can. These projects have now spun out of CEA, and we hope that this will give both these projects and CEA a clearer focus.

Cause-specific work (such as community building specifically for effective animal advocacy, AI safety, biosecurity, etc.)

As part of our work with local groups, we may work with group leaders to support cause-specific fellowships, workshops, or 1:1 content. However, we do not have any other plans in this area.

Career advising

As part of our work with local groups, we may work with group leaders to support career fellowships, workshops, or 1:1 content. And at our events, we try to match people with mentors who can advise them on their careers. We do not have any other plans in this area.

80,000 Hours clarified what they are and aren’t doing in this post.

Research, except about the EA community

We haven't had full-time research staff since ~2017, although we did support the CEA summer research fellowship in 2018 and 2019. We’ll continue to work with Rethink Priorities on the EA Survey, and to do other research that informs our own work.

We’ll also continue to run the EA Forum and EA Global, which are venues where researchers can share and discuss their ideas. We believe that supporting these discussions ties in with our goals of recruiting students and young professionals and keeping existing community members up to speed with EA ideas.

Content creation

We curate content when doing so supports productive discussion spaces (e.g. inviting speakers to events, developing curricula for fellowships run by groups). We occasionally write content on community health issues. We also try to incentivize the creation of quality content by giving speakers a platform and giving out prizes on the EA Forum. Aside from this, we don’t have plans to create more content.

Donor coordination

As mentioned above, we have now spun out EA Funds, which has some products (e.g. the Funds and the donor lottery) that help with donor coordination.

Supporting other organizations

We do some work to support organizations as they work through internal conflicts and HR issues. We also provide operational support to 80,000 Hours, Forethought Foundation, EA Funds, Giving What We Can, and a longtermist project incubator. We also occasionally share ideas and resources — related to culture, epistemics, and diversity, equity, and inclusion — with other organizations. Other than this, we don’t plan to work in this space.

Supporting promising individuals

The groups team provides support and advice to group organizers, and the community health team provides support to people who experience problems within the community. We also run several programs for matching people up with mentors (e.g. as part of EA Global).

We do not plan to financially support individuals. Individuals can apply for financial support from EA Funds and other sources.

Conclusion

A theme running through the above is that I view CEA as one organization helping to grow and support the EA community, not the sole organization that determines the community’s future. I don’t think this is a real change: the EA community’s development has always been influenced by a coalition of organizations. But I do think CEA has sometimes aimed to determine the community’s future, or represented itself as doing so, and I think this was often a mistake.

Starting a project in one of these areas

CEA has limited resources. In order to focus on some things, we need to deprioritize others.

We hope the information above encourages others to start projects in these areas. However, there are two things to consider before starting such a project.

First, we think that additional high-quality work in the areas listed above will probably be valuable, but we haven’t carefully considered this, and some projects within an area are probably much more promising than others. We recommend carefully considering which areas and approaches to pursue.

Second, we think there’s potential to cause harm in the areas above (e.g. by harming the reputation of the effective altruism movement, or poisoning the well for future efforts). However, if you take care to reflect on potential paths to harm and adjust based on critical feedback, we think you’re likely to avoid causing harm. (For more on avoiding harm, see the resources by Claire Zabel, 80,000 Hours, and Max Dalton and Jonas Vollmer.)

Finally, even if CEA is doing something, it doesn’t mean no one else should be doing it. There can be benefits from multiple groups working in the same area.

If you’d like to start something related to EA community building, let us know by emailing Joan Gass; we can let you know what else is going on in the area. Since we’re focused on our core projects, we probably won’t be able to provide much help, but we can let you know about our plans and about any other similar projects we’re aware of. Additionally, the Effective Altruism Infrastructure Fund at EA Funds might be able to provide funding and/or advice.

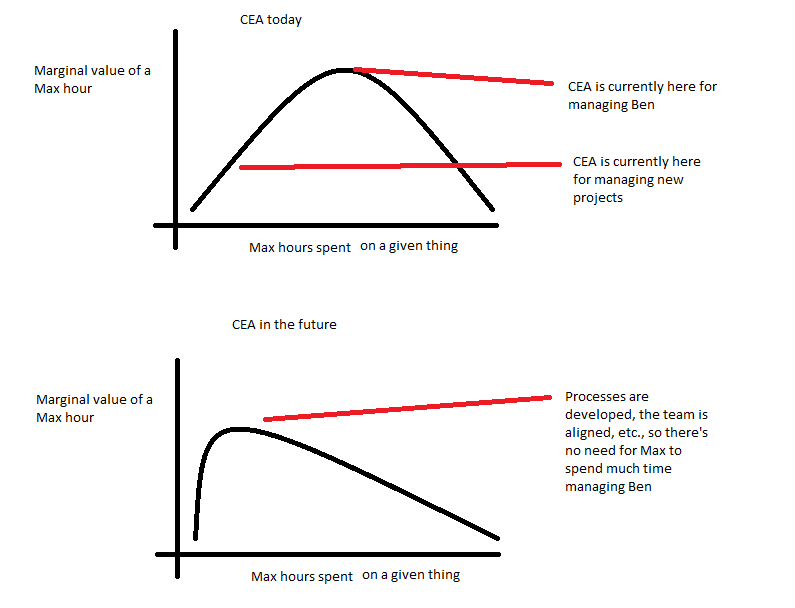

Thanks for the diagrams and explanation!

When I see the diagrams, I think of them as "low-overhead roles" vs. "high-overhead roles", where low-overhead roles reach their peak marginal value much earlier than high-overhead roles. If one is interested in scaling work, and assuming that also requires scaling labor, then scalable strategies would be ones with many low-overhead roles, similar to your second diagram of "CEA in the Future".

That said, my main point above wasn't that CEA should definitely grow, but that if CEA is having trouble growing, is hesitant to grow, or finds growth non-ideal, I would expect the strategy of "encouraging a bunch of new external nonprofits" to be limited in potential.

If CEA thinks it could help police new nonprofits, that would also take up Max's time (or that of someone in a similar role). The management time comes from the same place; it's just used in different ways, and ideally less of it would be needed.

In the back of my mind, I'm thinking that OpenPhil theoretically has access to $10B+, and hypothetically much of this could go toward promoting EA or EA-related principles, but right now there's a big bottleneck here. I could imagine it making sense to be fairly okay with wasting a good deal of money, and with trying quite unusual things, in order to get expansion to work somehow.

With CEA and related organizations in particular, I'm a bit worried that not all of the value of taking in good people is visible. For example, if an org takes in someone promising, trains them up for two years, and then they leave for another org, that could be a huge positive externality, but I'd bet funders would overlook it. I've seen this happen before. Right now it seems like there are a bunch of rather young EAs who could really use some training, but there are relatively few job openings, in part because existing orgs are quite hesitant to expand.

I imagine this could become an incredibly long conversation, and you definitely have a lot more inside knowledge than I do. I'd like to do more investigation myself to better understand the main constraints on EA growth; we'll see about this.

One area where we could make tractable progress is forecasting movement growth and related questions. I don't have specific questions in mind at the moment, but if you ever have ideas, do let me know, and we could see about developing them into questions on Metaculus or a similar platform. I imagine a shared understanding of total EA movement growth could help a fair bit and make conversations like this more straightforward.