Summary

The goal of this post is to analyze the growth of the technical and non-technical AI safety fields in terms of the number of organizations and number of FTEs working in these fields.

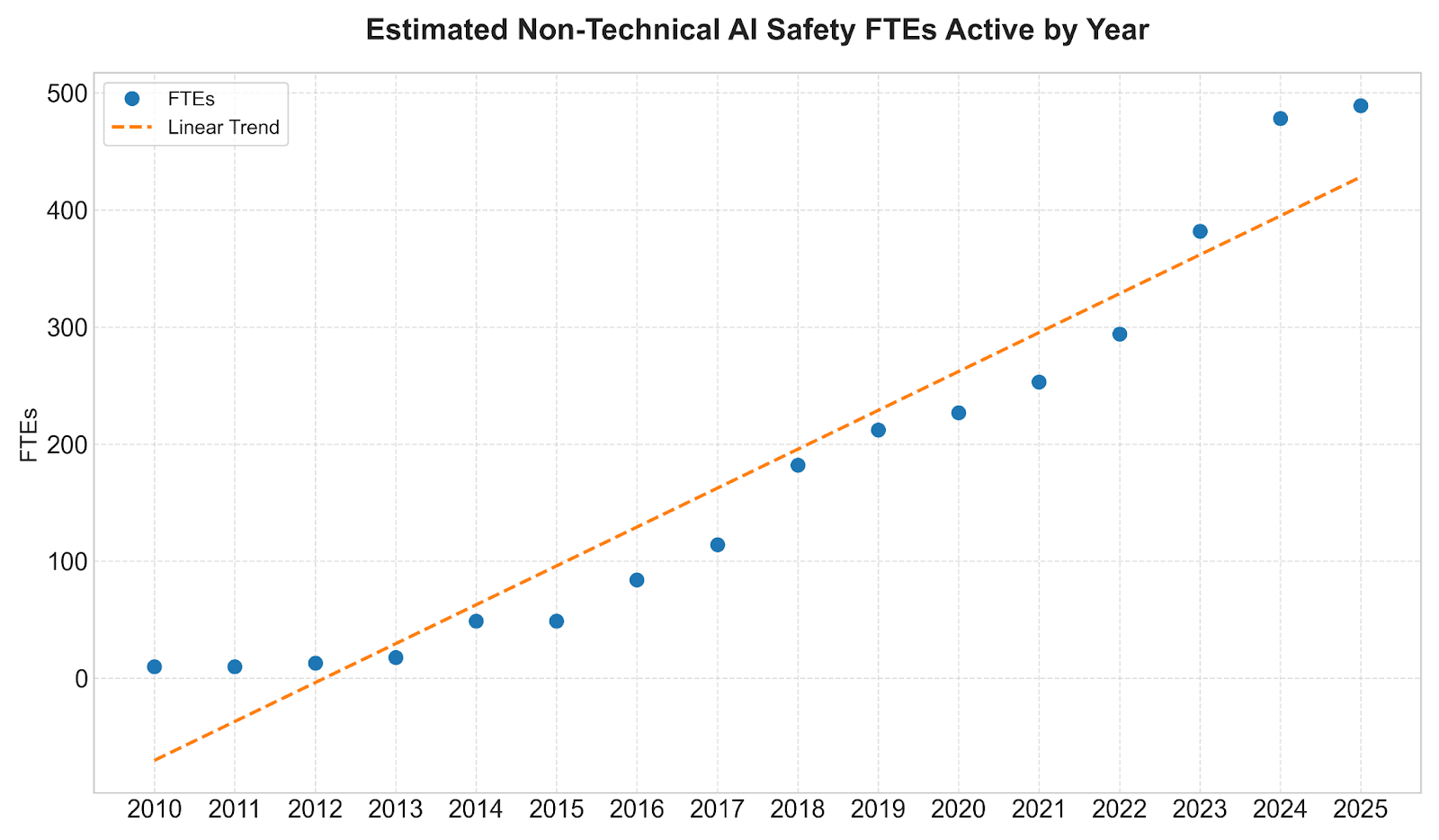

In 2022, I estimated that there were about 300 FTEs (full-time equivalents) working in the field of technical AI safety research and 100 on non-technical AI safety work (400 in total).

Based on updated data and estimates from 2025, I estimate that there are now approximately 600 FTEs working on technical AI safety and 500 FTEs working on non-technical AI safety (1100 in total).

Note that this post is an updated version of my old 2022 post Estimating the Current and Future Number of AI Safety Researchers.

Technical AI safety field growth analysis

The first step in analyzing the growth of the technical AI safety field was to create a spreadsheet listing the names of known technical AI safety organizations, when they were founded, and an estimated number of FTEs for each organization. The technical AI safety dataset contains 70 organizations working on technical AI safety and a total of 645 FTEs working at them (68 active organizations and 620 active FTEs in 2025).

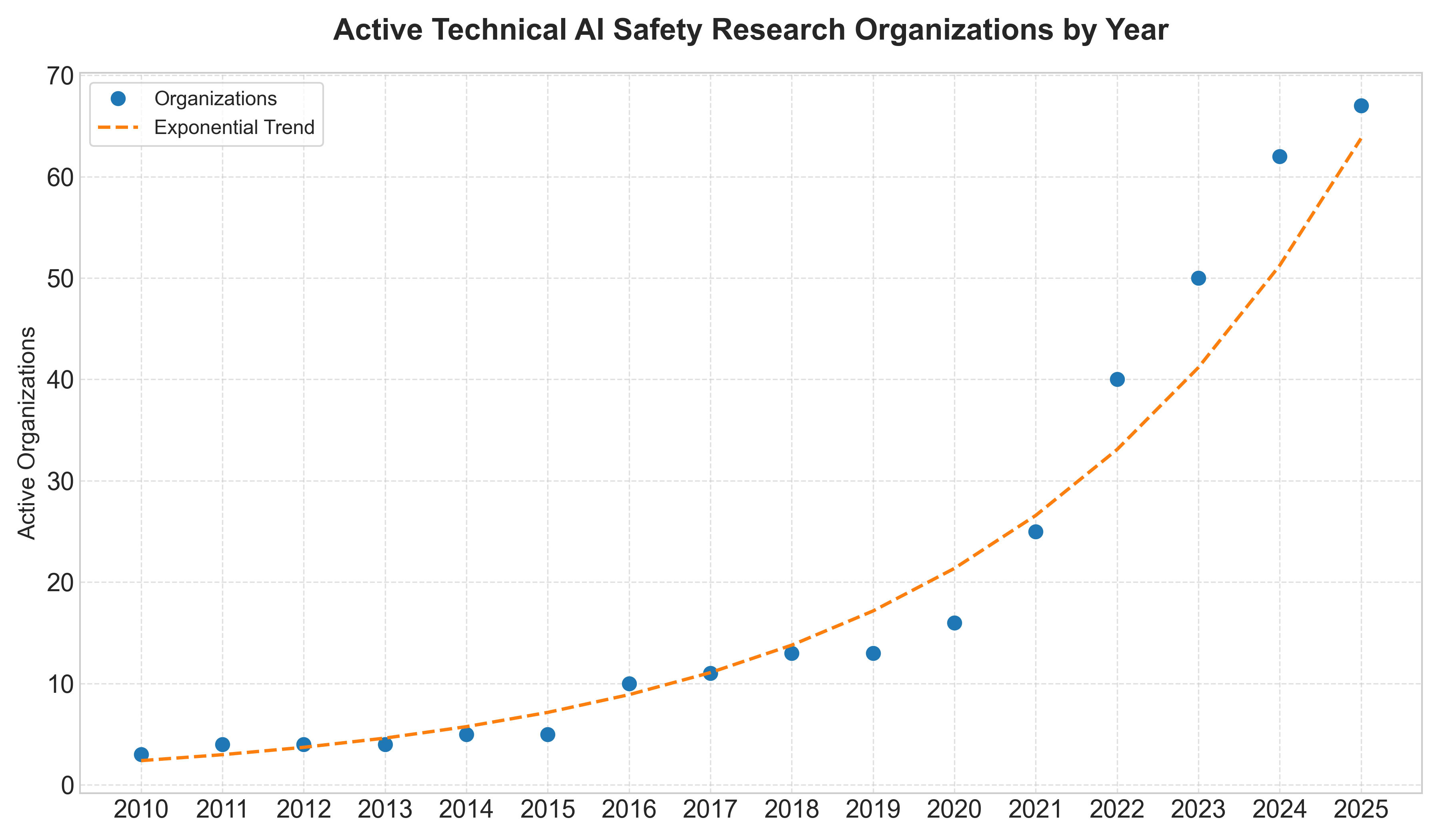

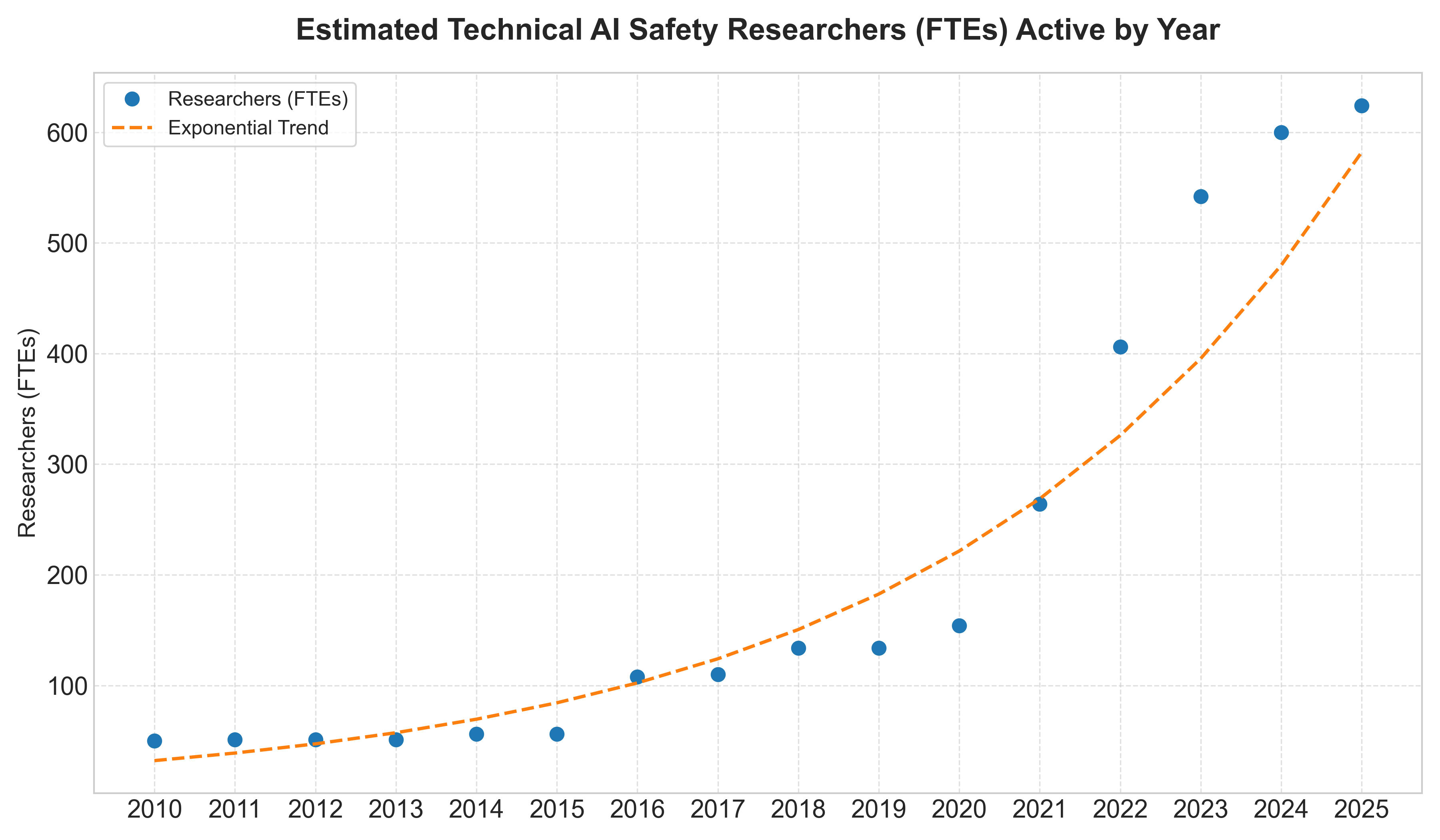

Then I created two scatter plots showing the number of technical AI safety research organizations and FTEs working at them respectively. On each graph, the x-axis is the years from 2010 to 2025 and the y-axis is the number of active organizations or estimated number of total FTEs working at those organizations. I also created models to fit the scatter plots. For the technical AI safety organizations and FTE graphs, I found that an exponential model fit the data best.
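The yearly counts behind these scatter plots can be sketched roughly as follows. This is a minimal illustration and not necessarily what the Colab notebook does: the `Org` record, the `is_active` rule (an organization stops counting in its year of closure), and the use of a single constant FTE estimate for every active year are all assumptions. The five rows are taken from the technical organizations table.

```python
from typing import NamedTuple, Optional


class Org(NamedTuple):
    name: str
    founded: int
    closed: Optional[int]  # None if the organization is still active
    ftes: int


# A handful of rows from the technical organizations table, for illustration.
ORGS = [
    Org("Machine Intelligence Research Institute (MIRI)", 2000, 2024, 10),
    Org("Future of Humanity Institute (FHI)", 2005, 2024, 10),
    Org("Google DeepMind", 2010, None, 30),
    Org("Anthropic", 2021, None, 40),
    Org("METR", 2023, None, 31),
]


def is_active(org: Org, year: int) -> bool:
    # Assumption: an organization is no longer counted in its year of closure.
    return org.founded <= year and (org.closed is None or year < org.closed)


def yearly_totals(orgs, start, end):
    """Map each year to (number of active organizations, total FTEs)."""
    totals = {}
    for year in range(start, end + 1):
        active = [o for o in orgs if is_active(o, year)]
        # Assumption: each organization's FTE estimate is constant over time.
        totals[year] = (len(active), sum(o.ftes for o in active))
    return totals


totals = yearly_totals(ORGS, 2010, 2025)
for year in (2010, 2021, 2023, 2025):
    print(year, totals[year])
```

Because the dataset only records one FTE estimate per organization, a count like this attributes today's headcount to earlier years, which is worth keeping in mind when reading the FTE graph.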

Both graphs show relatively slow growth from 2010 to 2020, followed by rapid growth in the number of technical AI safety organizations and FTEs that begins around 2020 and continues to the present (2025).

The exponential models describe a 24% annual growth rate in the number of technical AI safety organizations and 21% growth rate in the number of technical AI safety FTEs.
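To make the link between an exponential model and an annual growth rate concrete, here is a small sketch that fits `value ≈ a * (1 + r) ** (year - first_year)` by ordinary least squares on the logarithm of the values. The helper `fit_exponential` and the synthetic series are hypothetical, and the fitting procedure in the notebook may differ (for example, nonlinear least squares on the raw counts).

```python
import math


def fit_exponential(years, values):
    """Least-squares fit of log(values) against years.

    Returns (a, r) such that values ≈ a * (1 + r) ** (year - years[0]).
    """
    xs = [y - years[0] for y in years]
    ys = [math.log(v) for v in values]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    # exp(slope) is the year-over-year multiplier, so subtract 1 for the rate.
    return math.exp(intercept), math.exp(slope) - 1


# Synthetic data (not the real dataset): 5 organizations in 2010 growing 24% per year.
years = list(range(2010, 2026))
synthetic = [5 * 1.24 ** (y - 2010) for y in years]

a, r = fit_exponential(years, synthetic)
print(f"starting value: {a:.2f}, annual growth rate: {r:.1%}")
```

On real data the fit will not be exact, and fitting in log space weights early, small counts more heavily than fitting the raw counts would.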

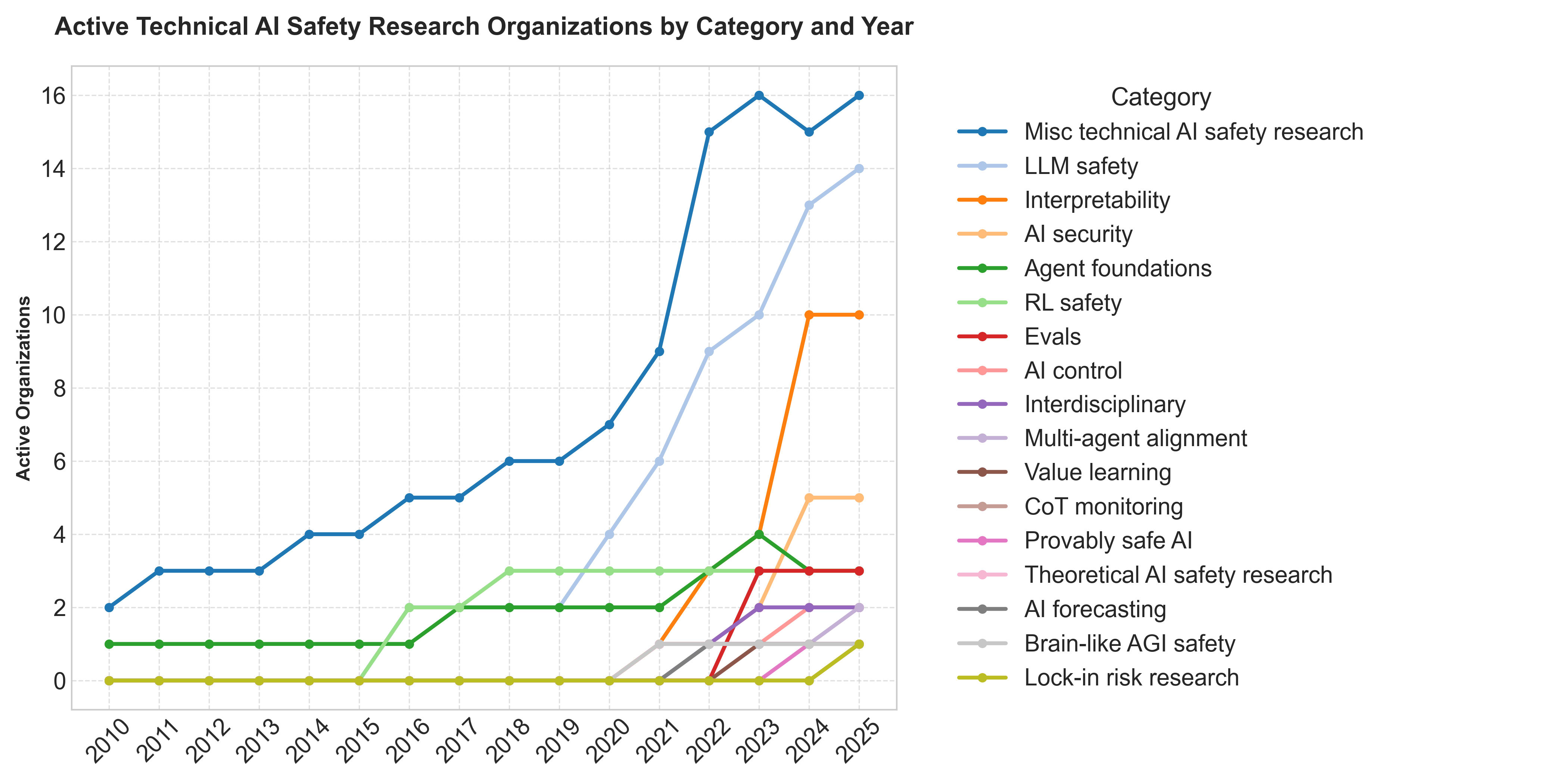

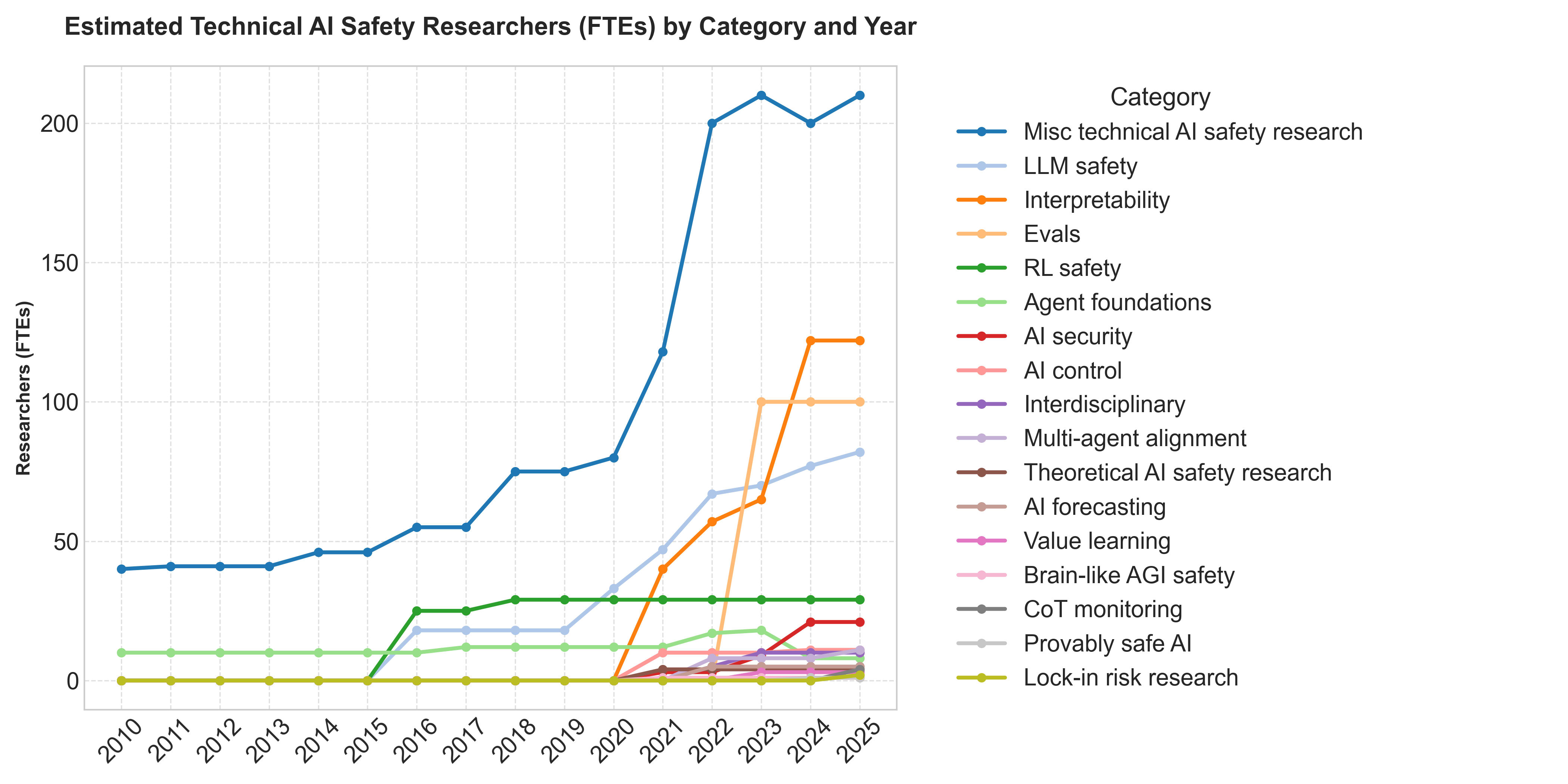

I also created graphs showing the number of technical AI safety organizations and FTEs by category. The top three categories by number of organizations and FTEs are Misc technical AI safety research, LLM safety, and interpretability.

Misc technical AI safety research is a broad category. It mostly consists of empirical AI safety research that is not purely focused on LLM safety, such as scalable oversight, adversarial robustness, and jailbreaks, as well as research that spans several areas and is difficult to put into a single category.

Non-technical AI safety field growth analysis

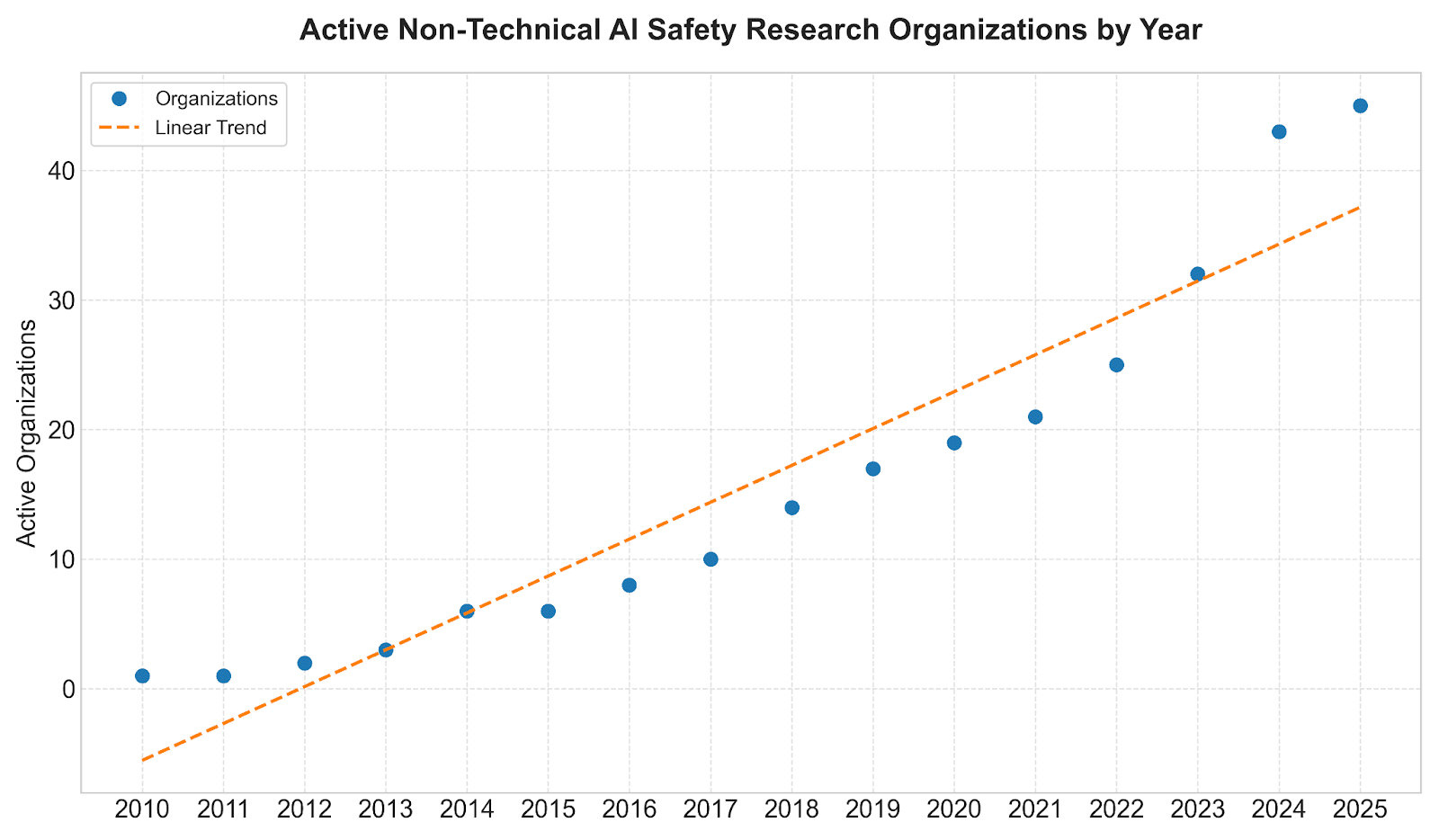

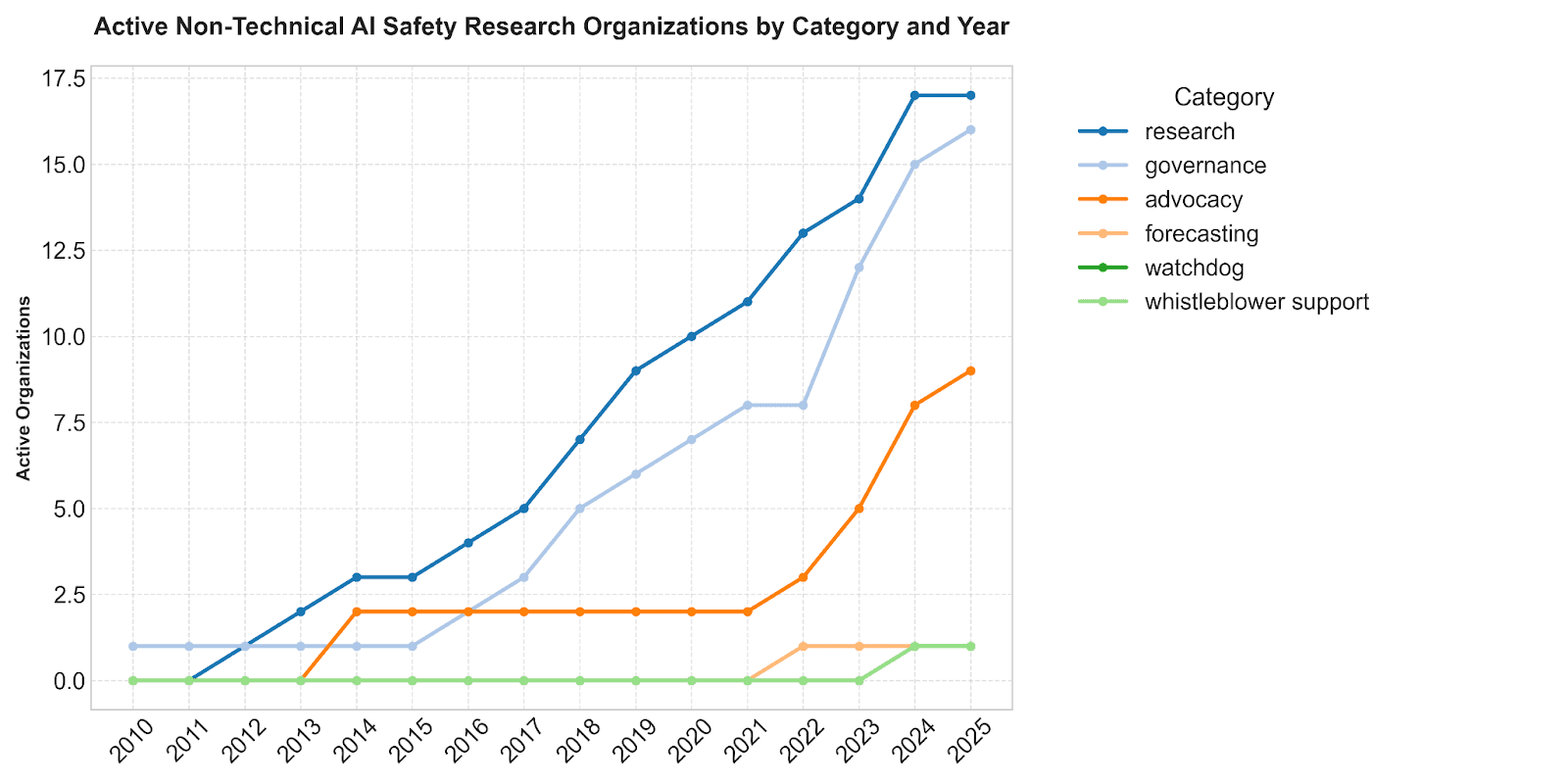

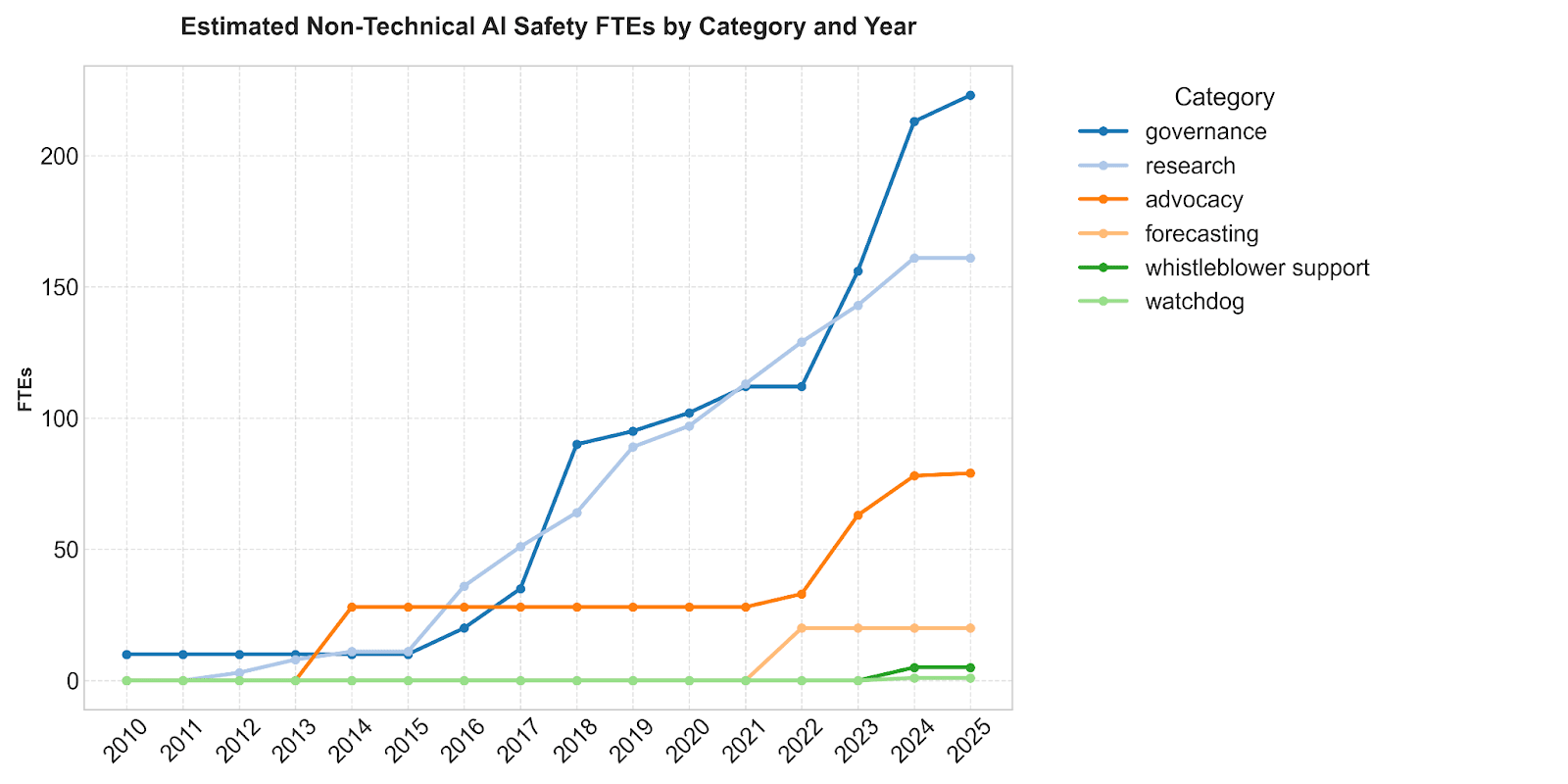

I also applied the same analysis to a dataset of non-technical AI safety organizations. The non-technical AI safety landscape, which includes fields like AI policy, governance, and advocacy, has also expanded significantly. The non-technical AI safety dataset contains 45 organizations working on non-technical AI safety and a total of 489 FTEs working at them.

The graphs plotting the growth of the non-technical AI safety field show an acceleration in the rate of growth around 2023, though a linear model fits the data well over the full 2010 to 2025 period.

In the previous post from 2022, I counted 45 researchers on Google Scholar with the AI governance tag. There are now over 300 researchers with the AI governance tag, evidence that the field has grown.

I also created graphs showing the number of non-technical AI safety organizations and FTEs by category.

Acknowledgements

Thanks to Ryan Kidd from SERI MATS for sharing data on AI safety organizations which was useful for writing this post.

Appendix

- A Colab notebook for reproducing the graphs in this post can be found here.

- Technical AI safety organizations spreadsheet in Google Sheets.

- Non-Technical AI safety organizations spreadsheet in Google Sheets.

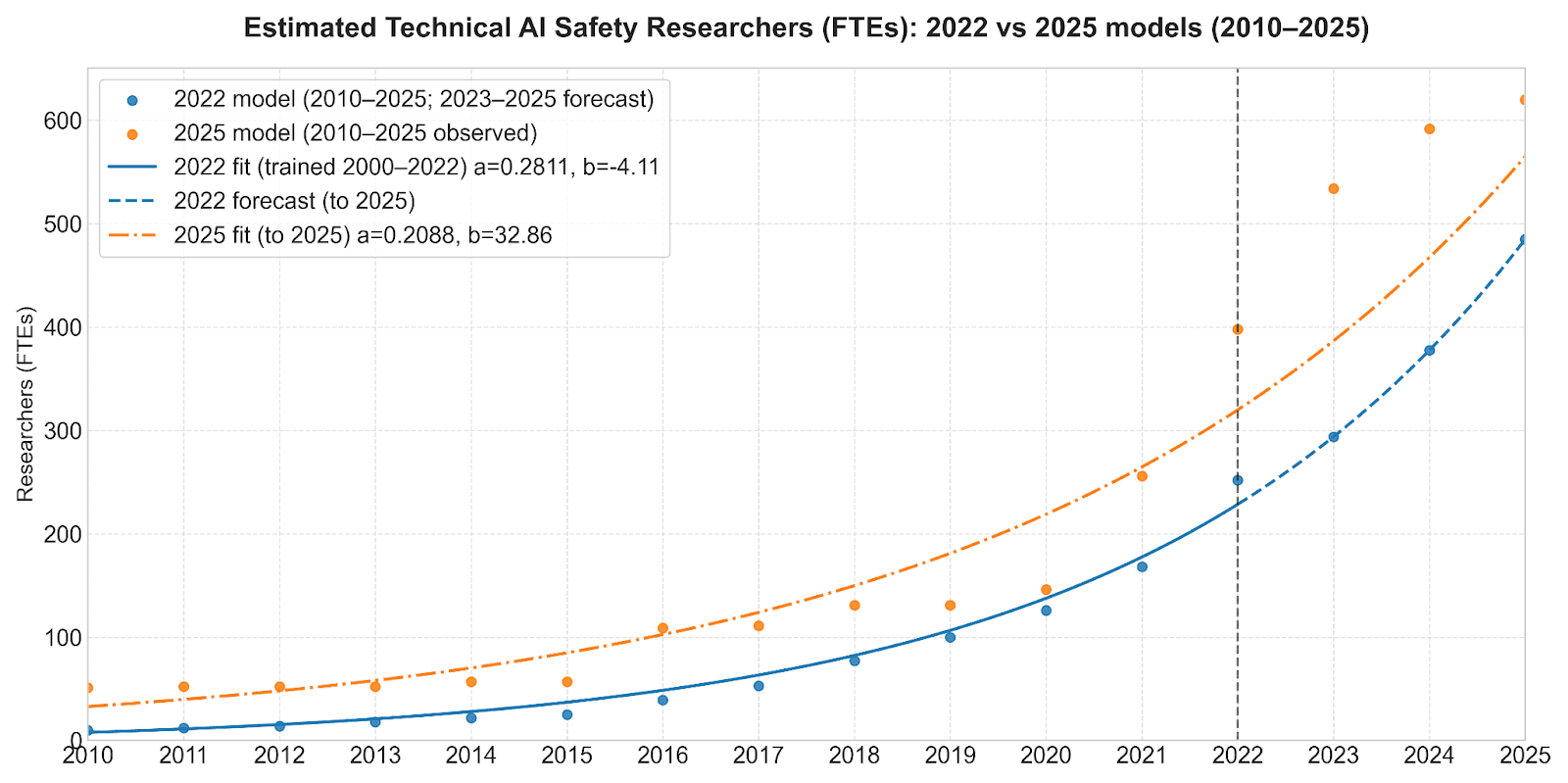

Old and new dataset and model comparison

The following graph compares the old dataset and model from the 2022 post Estimating the Current and Future Number of AI Safety Researchers with the updated dataset and model.

The old model is the blue line and the new model is the orange line.

The old model predicts a value of 484 active technical FTEs in 2025 and the true value is 620. The percentage error between the predicted and true value is 22%.
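The 22% figure is consistent with measuring the error relative to the true value, as this short check shows (the variable names are illustrative):

```python
predicted = 484  # old model's prediction for active technical FTEs in 2025
actual = 620     # active technical FTEs in the updated 2025 dataset

# Error relative to the true value, as a percentage.
pct_error = abs(actual - predicted) / actual * 100
print(f"{pct_error:.1f}%")
```

Dividing by the predicted value instead would give a larger figure (about 28%), so the choice of denominator matters when quoting this number.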

Technical AI safety organizations table

| Name | Founded | Year of Closure | Category | FTEs |
| --- | --- | --- | --- | --- |
| Machine Intelligence Research Institute (MIRI) | 2000 | 2024 | Agent foundations | 10 |
| Future of Humanity Institute (FHI) | 2005 | 2024 | Misc technical AI safety research | 10 |
| Google DeepMind | 2010 | | Misc technical AI safety research | 30 |
| GoodAI | 2014 | | Misc technical AI safety research | 5 |
| Jacob Steinhardt research group | 2016 | | Misc technical AI safety research | 9 |
| David Krueger (Cambridge) | 2016 | | RL safety | 15 |
| Center for Human-Compatible AI | 2016 | | RL safety | 10 |
| OpenAI | 2016 | | LLM safety | 15 |
| Truthful AI (Owain Evans) | 2016 | | LLM safety | 3 |
| CORAL | 2017 | | Agent foundations | 2 |
| Scott Niekum (University of Massachusetts Amherst) | 2018 | | RL safety | 4 |
| Eleuther AI | 2020 | | LLM safety | 5 |
| NYU He He research group | 2021 | | LLM safety | 4 |
| MIT Algorithmic Alignment Group (Dylan Hadfield-Menell) | 2021 | | LLM safety | 10 |
| Anthropic | 2021 | | Interpretability | 40 |
| Redwood Research | 2021 | | AI control | 10 |
| Alignment Research Center (ARC) | 2021 | | Theoretical AI safety research | 4 |
| Lakera | 2021 | | AI security | 3 |
| SERI MATS | 2021 | | Misc technical AI safety research | 20 |
| Constellation | 2021 | | Misc technical AI safety research | 18 |
| NYU Alignment Research Group (Sam Bowman) | 2022 | 2024 | LLM safety | 5 |
| Center for AI Safety (CAIS) | 2022 | | Misc technical AI safety research | 5 |
| Fund for Alignment Research (FAR) | 2022 | | Misc technical AI safety research | 15 |
| Conjecture | 2022 | | Misc technical AI safety research | 10 |
| Aligned AI | 2022 | | Misc technical AI safety research | 2 |
| Apart Research | 2022 | | Misc technical AI safety research | 10 |
| Epoch AI | 2022 | | AI forecasting | 5 |
| AI Safety Student Team (Harvard) | 2022 | | LLM safety | 5 |
| Tegmark Group | 2022 | | Interpretability | 5 |
| David Bau Interpretability Group | 2022 | | Interpretability | 12 |
| Apart Research | 2022 | | Misc technical AI safety research | 40 |
| Dovetail Research | 2022 | | Agent foundations | 5 |
| PIBBSS | 2022 | | Interdisciplinary | 5 |
| METR | 2023 | | Evals | 31 |
| Apollo Research | 2023 | | Evals | 19 |
| Timaeus | 2023 | | Interpretability | 8 |
| London Initiative for AI Safety (LISA) and related programs | 2023 | | Misc technical AI safety research | 10 |
| Cadenza Labs | 2023 | | LLM safety | 3 |
| Realm Labs | 2023 | | AI security | 6 |
| ACS | 2023 | | Interdisciplinary | 5 |
| Meaning Alignment Institute | 2023 | | Value learning | 3 |
| Orthogonal | 2023 | | Agent foundations | 1 |
| AI Security Institute (AISI) | 2023 | | Evals | 50 |
| Shi Feng research group (George Washington University) | 2024 | | LLM safety | 3 |
| Virtue AI | 2024 | | AI security | 3 |
| Goodfire | 2024 | | Interpretability | 29 |
| Gray Swan AI | 2024 | | AI security | 3 |
| Transluce | 2024 | | Interpretability | 15 |
| Guide Labs | 2024 | | Interpretability | 4 |
| Aether research | 2024 | | LLM safety | 3 |
| Simplex | 2024 | | Interpretability | 2 |
| Contramont Research | 2024 | | LLM safety | 3 |
| Tilde | 2024 | | Interpretability | 5 |
| Palisade Research | 2024 | | AI security | 6 |
| Luthien | 2024 | | AI control | 1 |
| ARIA | 2024 | | Provably safe AI | 1 |
| CaML | 2024 | | LLM safety | 3 |
| Decode Research | 2024 | | Interpretability | 2 |
| Meta superintelligence alignment and safety | 2025 | | LLM safety | 5 |
| LawZero | 2025 | | Misc technical AI safety research | 10 |
| Geodesic | 2025 | | CoT monitoring | 4 |
| Sharon Li (University of Wisconsin Madison) | 2020 | | LLM safety | 10 |
| Yaodong Yang (Peking University) | 2022 | | LLM safety | 10 |
| Dawn Song | 2020 | | Misc technical AI safety research | 5 |
| Vincent Conitzer | 2022 | | Multi-agent alignment | 8 |
| Stanford Center for AI Safety | 2018 | | Misc technical AI safety research | 20 |
| Formation Research | 2025 | | Lock-in risk research | 2 |
| Stephen Byrnes | 2021 | | Brain-like AGI safety | 1 |
| Roman Yampolskiy | 2011 | | Misc technical AI safety research | 1 |
| Softmax | 2025 | | Multi-agent alignment | 3 |
| Total (70 organizations) | | | | 645 |

Non-technical AI safety organizations table

| Name | Founded | Category | FTEs |
| --- | --- | --- | --- |
| Centre for Security and Emerging Technology (CSET) | 2019 | research | 20 |
| Epoch AI | 2022 | forecasting | 20 |
| Centre for Governance of AI (GovAI) | 2018 | governance | 40 |
| Leverhulme Centre for the Future of Intelligence | 2016 | research | 25 |
| Center for the Study of Existential Risk (CSER) | 2012 | research | 3 |
| OpenAI | 2016 | governance | 10 |
| DeepMind | 2010 | governance | 10 |
| Future of Life Institute | 2014 | advocacy | 10 |
| Center on Long-Term Risk | 2013 | research | 5 |
| Open Philanthropy | 2017 | research | 15 |
| Rethink Priorities | 2018 | research | 5 |
| UK AI Security Institute (AISI) | 2023 | governance | 25 |
| European AI Office | 2024 | governance | 50 |
| Ada Lovelace Institute | 2018 | governance | 15 |
| AI Now Institute | 2017 | governance | 15 |
| The Future Society (TFS) | 2014 | advocacy | 18 |
| Centre for Long-Term Resilience (CLTR) | 2019 | governance | 5 |
| Stanford Institute for Human-Centered AI (HAI) | 2019 | research | 5 |
| Pause AI | 2023 | advocacy | 20 |
| Simon Institute for Longterm Governance | 2021 | governance | 10 |
| AI Policy Institute | 2023 | governance | 1 |
| The AI Whistleblower Initiative | 2024 | whistleblower support | 5 |
| Machine Intelligence Research Institute | 2024 | advocacy | 5 |
| Beijing Institute of AI Safety and Governance | 2024 | governance | 5 |
| ControlAI | 2023 | advocacy | 10 |
| International Association for Safe and Ethical AI | 2024 | research | 3 |
| International AI Governance Alliance | 2025 | advocacy | 1 |
| Center for AI Standards and Innovation (U.S. AI Safety Institute) | 2023 | governance | 10 |
| China AI Safety and Development Association | 2025 | governance | 10 |
| Transformative Futures Institute | 2022 | research | 4 |
| AI Futures Project | 2024 | advocacy | 5 |
| AI Lab Watch | 2024 | watchdog | 1 |
| Center for Long-Term Artificial Intelligence | 2022 | research | 12 |
| SaferAI | 2023 | research | 14 |
| AI Objectives Institute | 2021 | research | 16 |
| Concordia AI | 2020 | research | 8 |
| CARMA | 2024 | research | 10 |
| Encode AI | 2020 | governance | 7 |
| Safe AI Forum (SAIF) | 2023 | governance | 8 |
| Forethought Foundation | 2018 | research | 8 |
| AI Impacts | 2014 | research | 3 |
| Cosmos Institute | 2024 | research | 5 |
| AI Standards Labs | 2024 | governance | 2 |
| Center for AI Safety | 2022 | advocacy | 5 |
| CeSIA | 2024 | advocacy | 5 |
| Total (45 organizations) | | | 489 |

I think understanding the growth of the field is very important and I appreciate the work you're doing. However, I have some concerns about the methodology:

It seems to me that this is really "the number of people working at AI safety organizations", which I think significantly underestimates the number of people working on AI safety. Lots of AI safety work is being done by organizations that don't explicitly brand themselves as AI safety. I can directly attest this for technical safety in academia (which is my area), but I expect the same applies to other sectors. There's also some overcounting since not every employee of an AI safety organization is working on AI safety, but I expect the undercounting to dominate.

To be clear, I think "the number of people working at AI safety organizations" is still a useful number to have, but I think it's important to be clear that that's what you're measuring.

I appreciate that both of these problems may be pretty difficult to solve, and I think this analysis is useful even without solving these problems. But I think the post as written provides an inaccurate impression of the field. Although not a complete solution, I think reframing this as "the number of people working at AI safety organizations" would help significantly.

Thanks for your feedback Ben.

I totally agree with point 1, and you're right that this post is really estimating the total number of people who work at AI safety organizations and then using this number as a proxy for estimating the size of the field. As you said, there are a lot of people who aren't completely focused on AI safety but still make significant contributions to the field. For example, an AI researcher might consider themselves to be an "LLM researcher" and split their time between non-AI safety work like evaluating models on benchmarks and AI safety work like new alignment methods. Such a researcher would not be counted in this post.

I might add an "other" category to the estimate to avoid this form of undercounting.

Regarding point 2, I collected the list of organizations and estimated the number of FTEs at each using a mixture of Google Search and Gemini Deep Research. The lists are my attempt to find as many AI safety organizations as possible, though of course I may be missing a few. If you can think of any that aren't in the list, I would appreciate it if you shared them so that I can add them.

Thanks for the response Stephen. To clarify point 1, I'm also saying that there may be researchers who are more or less completely focused on AI safety but simply don't brand themselves that way and don't belong to an AI safety organization.

For point 2, I think the data collection methodology should be disclosed in the post. I would also be interested to know if you used Gemini Deep Research to help you identify relevant organizations but then verified them yourself (including number of employees), or if you used Gemini's estimates for the number of employees as given.

Re missing organizations: like I said, I think looking through Chinese research institutes is a good place to start. There's also a bunch of "Responsible AI"-branded initiatives in the US (e.g., https://www.cmu.edu/block-center/responsible-ai) which should possibly be included, depending on your definition of "AI safety". (I think the post would also benefit from including the guidelines you used to determine what counts as AI safety.)

Thanks for the hard work!

I used Gemini Deep Research to discover organizations and then manually visited their websites to create estimates.

Thank you for doing this analysis!

Would you say this analysis is limited to safety from misalignment related risks, or any (potentially catastrophic) risks from AI, including misuse, gradual disempowerment, etc.?

The technical AI safety organizations cover a variety of areas including AI alignment, AI security, interpretability, and evals. Most FTEs work on empirical AI safety topics like LLM alignment, jailbreaks, and robustness, which address a variety of risks including misalignment and misuse.

Useful data and analysis thanks, though I'd note that from a TAI/AI risk-focused perspective I would expect the non-safety figures to overcount for some of these orgs. E.g. CFI (where I work) is in there at 25 FTE, but that covers a very broad range of AI governance/ethics/humanities topics, where only a subset (maybe a quarter?) would be specifically relevant to TAI governance (specifically a big chunk of Kinds of Intelligence that mainly does technical evaluation/benchmarking work, but advises policy on the basis of this, and the AI:FAR group). I would expect similar with some of the other 'broader' groups e.g. Ada.

Also in both categories I don't follow the rationale for including GDM but not the other frontier companies with safety/governance teams e.g. Anthropic, OpenAI, xAI (admittedly more minimal). I can see a rationale for including all or none of them.

Thanks for your feedback Sean.

Estimating the number of FTEs at the non-technical organizations is not straightforward since often only a fraction of the individuals are focused on AI safety. For each organization I guessed what fraction of the total FTEs were focused on AI safety though I may have overestimated in some cases (e.g. in the case of CFI I can decrease my estimate).

Also I'll include more frontier labs in the list of non-technical organizations.

That's useful data, thanks!

How confident are you that an exponential is a good fit here? The 2025 datapoint makes the Research org & FTE curves look more like S-curves to me.

Thanks for the insightful observation! I think the graph starts to flatten out in 2025 simply because it's only September of 2025, so not all of the organizations that will be founded in 2025 are included in the dataset yet.

Very useful work, thanks!

I was wondering what the inclusion/exclusion criteria were for selecting the AI Safety organisations. Looking at the list of non-technical orgs, I was surprised that Good Ancestors, Pour Demain, or Centre for Future Generations, to name a few, were not included.

Agreed it's super useful. I think it's probably significantly underestimating the size of the field though, as I think there are dozens of orgs doing at least some work on AI safety not listed here.

I included organizations I was able to find that are focused on or making a significant contribution to AGI safety research or non-technical work like governance and advocacy. Regarding the organizations you listed, I never came across them during my search and I will work on including them now.