Disclaimer

I currently have a streak of around 400 days on Manifold Markets (though lately I only spend a minute or two a day on it) and have no particular vendetta against it. I also use Metaculus. I’m reasonably well-ranked on both but have not been paid by either platform, ignoring a few Manifold donations. I have not attended any Manifest. I think Manifold has value as a weird form of social media, but I think it’s important to be clear that this is what it is, and not a manifestation of collective EA or rationalist consciousness, or an effective attempt to improve the world in its current form.

Overview of Manifold

Manifold is a prediction market website where people can put virtual money (called “mana”) into bets on outcomes. There are several key features of this: 1. You’re rewarded with virtual money both for participating and for predicting well, though you can also pay to get more. 2. You can spend this mana to ask questions, which you will generally vet and resolve yourself (allowing many more questions than on comparable sites). Moderators can reverse unjustified decisions but it’s usually self-governed. Until recently, you could also donate mana to real charities, but this has stopped; now only a few “prize” questions provide a more exclusive currency that can be donated, and most questions produce unredeemable mana.

How might it claim to improve the world?

There are two ways in which Manifold could be improving the world. It could either make good predictions (which would be intrinsically valuable for improving policy or making wealth) or it could donate money to charities. Until recently, the latter looked quite reasonable: the company appeared to be rewarding predictive power with the ability to donate money to charities. The counterfactuality of these donations is questionable, however, since the money for them came from EA-aligned grants, and most of it goes to very mainstream EA charities. Manifold has a revenue stream from people buying mana, but this is less than $10k/month, some of which isn’t really revenue (since it will ultimately be converted to donations), and presumably this doesn’t cover the staff costs. The founders appear to believe that eventually they will get paid enough money to run markets for other organisations, in which case the donations would be counterfactual. But this relies on the markets producing good predictions.

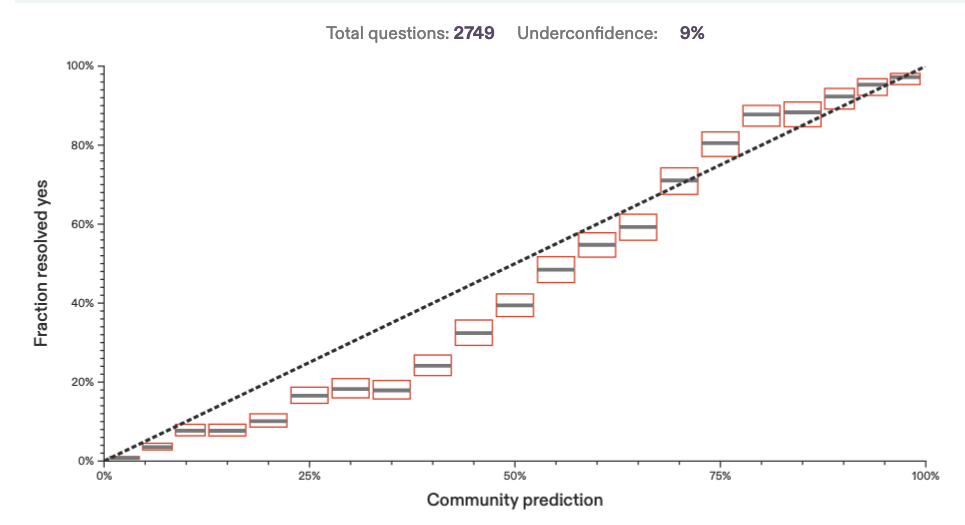

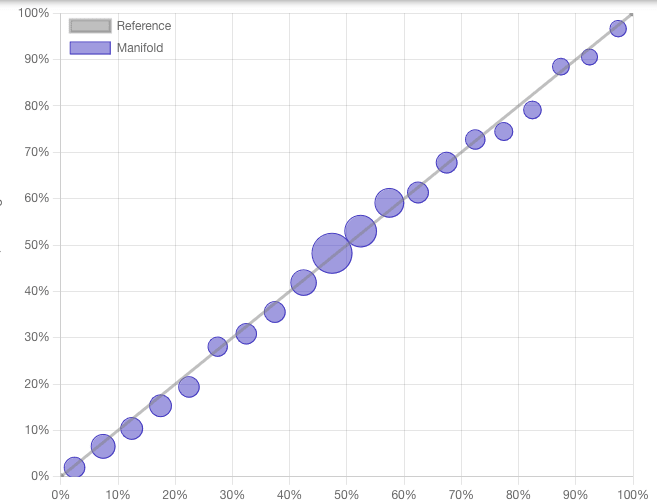

Sadly, Manifold does not produce particularly good predictions. In last year’s ACX contest, it performed worse than simply averaging predictions from the same number of people who took part in each market. Its calibration, while good by human standards, has a clear systematic bias towards predicting things will happen when they don’t (Yes bias). By contrast, rival firm Metaculus has no easily-corrected bias and seems to perform better at making predictions on the same questions (including in the ACX contest). Metaculus’ self-measured Brier score is 0.111, compared to Manifold’s 0.168 (lower is better, and this is quite a lot lower, though they are not answering all the same questions). Metaculus doesn’t publish the number of monthly active users like Manifold does, but the numbers of site visits the two sites receive are comparable (slightly higher for Metaculus by one measure, lower by another), so it doesn’t seem like the prediction difference can be explained by user numbers alone.
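For readers unfamiliar with the Brier score quoted above, here is a minimal sketch of how it is computed for binary questions. The forecasts and outcomes are made-up illustrative numbers, not data from either site, and the function name is my own.

```python
def brier_score(forecasts, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need equal-length, non-empty inputs")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical data: four questions, two resolved Yes (1) and two resolved No (0).
outcomes = [1, 1, 0, 0]
yes_biased = [0.8, 0.8, 0.6, 0.6]  # leans Yes even on questions that resolve No
unbiased = [0.8, 0.8, 0.2, 0.2]

print(brier_score(yes_biased, outcomes))
print(brier_score(unbiased, outcomes))
```

Always answering 50% scores 0.25, so both sites' published figures are well below the "know nothing" baseline; the comparison between them still depends on which questions each site was scored on.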

Can the predictive power be improved?

Some of the problems with Manifold, like the systematic Yes bias, can be algorithmically fixed by potential users. Others are more intrinsic to the medium. Many questions resolve based on extensive discussions about exactly how to categorise reality, meaning that subtle clarifications by the author can result in huge swings in probability. The market mechanism of Manifold produces an information premium that incentivises people to act quickly on information, meaning that questions also swing wildly based on rumours.
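As a rough illustration of what "algorithmically fixed" could mean for a systematic Yes bias, the sketch below fits a single constant shift in log-odds space from resolved questions and applies it to new market probabilities. This is one assumed approach among many, the function names are hypothetical, and the numbers are invented; it is not a description of anything Manifold or its users actually run.

```python
import math


def logit(p):
    return math.log(p / (1 - p))


def sigmoid(x):
    return 1 / (1 + math.exp(-x))


def shifted(p, shift):
    """Move a probability p (strictly between 0 and 1) down by `shift` in log-odds."""
    return sigmoid(logit(p) - shift)


def fit_shift(forecasts, outcomes, lo=-5.0, hi=5.0, iters=60):
    """Bisection for the shift that makes the mean adjusted forecast
    equal the observed fraction of Yes resolutions."""
    target = sum(outcomes) / len(outcomes)
    for _ in range(iters):
        mid = (lo + hi) / 2
        mean_adj = sum(shifted(p, mid) for p in forecasts) / len(forecasts)
        if mean_adj > target:
            lo = mid  # still too Yes-heavy: a larger shift is needed
        else:
            hi = mid
    return (lo + hi) / 2


# Hypothetical history: markets sat at 60% but only half resolved Yes.
history_p = [0.6] * 10
history_y = [1] * 5 + [0] * 5
shift = fit_shift(history_p, history_y)
print(shifted(0.6, shift))  # corrected reading of a new 60% market
```

A single global shift only corrects the average bias; if the bias varies by probability range or question type, a per-bin correction would be the natural next step.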

The idea behind giving people mana for predictions is that wealth should accumulate with predictive prowess, resulting in people who have historically predicted better being able to weight their opinions more strongly. However, in practice Manifold has many avenues for people incapable of making correct predictions to get mana. It:

1. Wants bad predictors to pay money to keep playing

2. Hands out mana for asking engaging questions (which are not at all the same as useful questions)

3. Has many weird meta markets where people with lots of money can typically make more mana without predicting anything other than the spending of the superwealthy (“whalebait”)

4. Allows personal markets, where people can earn mana by doing tasks they set for themselves. This is an officially endorsed form of insider trading, which is generally accepted on the site.

5. Lets people gamble, go into negative equity, then burn their accounts and make new ones if bets go badly. The use of “puppet accounts” to manipulate markets in this or more complex ways is actively fought but still happens, and several of the all-time highest earners have turned out to use them to generate wealth.

6. Tends to leave markets open until after the answer to a question is publicly known and the answer is posted in the comments, so as well as large mana rewards for reading the news fast, simply reacting to posts on the site itself can produce a reliable profit

The first of these is entirely structural to their business model. While in principle the others could be “fixed” (if you consider them bugs) and some have lately become smaller (e.g. you can no longer bet markets outside the 1-99% range, reducing profit from publicly known events), several have proven rather robust. This is quite apart from insider trading (which arguably makes the markets more accurate, even if it rewards people unfairly) and pump-and-dump schemes, which are hard to fix in any market system.

Framed as a fun pastime, having tricks to make mana without making predictions is fine, and this could drive up engagement. But these are all signs that the accuracy of the predictions (as opposed to news-reading) is not a highly ranked goal of the site. And it’s important to highlight the tradeoff between attracting idiots who will pay to play, as on gambling sites, and attracting companies who will pay to get good results. The assumption that idiots are distributed across opinions and so their biases will cancel each other out is ill-founded, particularly if a site has poor diversity.

By comparison, Metaculus simply applies an algorithm to weight people’s opinions based on past performance. There are obviously systematic differences between who uses which website, but a study indicated that under ideal conditions, a non-market approach to aggregating opinions was more successful than a prediction market. Prediction markets may be better than just doing a poll (for short-term predictions – Manifold can argue for its calibration over longer times via its loan system, which is plausible but this hasn’t been demonstrated), but worse than measures that can actually look at who is saying what. This would indicate that even without the quirky mana sources and time-dynamics, we wouldn’t expect Manifold to produce the best predictions. You can also see market failures explicitly in a handful of long-term markets (often about AI extinction risk, which cannot possibly pay out to humans) where a small number of very rich people have pegged the value of the market at the levels that they want it, in spite of this being essentially guaranteed to lose mana. Arguably this is the opposite criticism to the free-market criticisms - humans being willing to lose currency to do what they perceive as the right thing to highlight AI extinction risk - but it still represents an oligarchic market failure resulting in biased predictions. Unless prediction markets are really very large indeed and have no form of oligarchy, the neoliberal assumption that we can ignore who is putting up the currency is invalid.
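To make the contrast with a market concrete, here is a minimal sketch of aggregating forecasts by track record rather than by bankroll. The inverse-Brier weighting is an assumption chosen for simplicity; it is not Metaculus's actual algorithm, and the users and numbers are invented.

```python
def weighted_forecast(forecasts, past_brier, eps=1e-6):
    """Average forecasts, weighting each user by 1 / (past Brier score).

    forecasts:  {user: probability for the current question}
    past_brier: {user: historical Brier score, lower is better}
    eps avoids division by zero for a (hypothetical) perfect record.
    """
    weights = {u: 1.0 / (past_brier[u] + eps) for u in forecasts}
    total = sum(weights.values())
    return sum(weights[u] * forecasts[u] for u in forecasts) / total


# Hypothetical question with two forecasters who disagree.
forecasts = {"alice": 0.9, "bob": 0.1}
past_brier = {"alice": 0.1, "bob": 0.3}  # alice has the better record
print(weighted_forecast(forecasts, past_brier))
```

The point of the sketch is that the weights depend only on who has been right before, whereas in a market the effective weight is whatever currency someone is willing to commit, however they obtained it.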

This creates interesting possibilities to improve predictions. One, Manifold could have taxes to reduce opinion inequality. Two, Manifold could report the market values for predictions but (for a price) give you the better probabilities calculated by an appropriate algorithm, which is basically guaranteed to be better (it can always degenerate to “just use the market value” if that’s genuinely best). Why hasn’t it done this already? Well, there are probably several reasons, but one that I’m highlighting here is the link to neoliberal ideology. This is not to say that users of the site are all neoliberal, though they probably are more so than average. I mean that the “contrarian” ideologues who were present at the controversial Manifest24, and whom the co-founders of Manifold clearly find most interesting, are consistently from a right-wing, market-trusting perspective, and this creates material blind spots. In spite of having many tens of talks on how to make predictions at this event, people don’t seem to point out that one of the host organisations is making very basic mistakes in how they go about their main job.

The harm of platforming bigots is manifold: firstly, that the people they hate are directly harmed; secondly that they will most likely stay away, reducing your diversity and thus the variety of perspectives and potential insight present; thirdly, that you will be perceived as bigoted, harming you socially and further reducing the diversity of the event. These problems have been extensively discussed elsewhere, and I see no value in discussing them again here. The issue that hasn't been discussed is how the people who feel excluded are those who are most used to critiquing power inequality, and if they still engage with your platform at all, will focus on discussing your bigotry, rather than other structural issues.

I'm a frequent Manifold user.

While I like criticism of Manifold, I think these criticisms miss the mark, and are mostly false or outdated.

The best criticism here is the link to the ACX article. I'd still defend Manifold: the ACX predictions occurred very early in the site's life, and most of the current top traders either didn't use the site at all or didn't have enough capital to move markets, so one would expect it to be less accurate. But we did perform badly.

You compare Metaculus's "Metaculus prediction" calibration graph to Manifold's calibration. Metaculus has two probabilities for each question: a "Community Prediction", a simple aggregation of forecasts, and a more complicated Metaculus Prediction that weights by track record. The Metaculus Prediction is not public while markets are open, and I can't see it for any of the Metaculus markets I'm interested in. So, when comparing the utility of the two sites, the Community Prediction is the right comparison, and it's as bad as or worse than Manifold's.

Manifold's hosted calibration graph covers markets through all of Manifold's history. I'd expect Manifold to do better recently. Using https://calibration.city/, using the 'market midpoint' and starting from Jul 2023, Manifold's calibration looks great (later dates seem noisy but unbiased, the other mode is buggy). I also recalculated time-weighted calibration myself over the past months of Manifold, and didn't see deviation from y=x other than noise. (It does replicate Manifold's old bias on all past data.) Manifold's site shows the old bias, but we've improved!
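For anyone wanting to run a similar check, here is a rough sketch of one way a time-weighted calibration table could be computed. It is not necessarily the calculation described above: the input format (per-market lists of probability and duration segments) and the function name are assumptions, and the data is invented.

```python
from collections import defaultdict


def time_weighted_calibration(markets, n_bins=10):
    """Return {bin index: time-weighted fraction that resolved Yes}.

    markets: list of (segments, outcome), where segments is a list of
    (probability, duration) pairs describing how long the market sat at
    each probability, and outcome is 1 for Yes or 0 for No.
    """
    weight = defaultdict(float)
    yes_weight = defaultdict(float)
    for segments, outcome in markets:
        for prob, duration in segments:
            b = min(int(prob * n_bins), n_bins - 1)  # prob == 1.0 goes in the top bin
            weight[b] += duration
            yes_weight[b] += duration * outcome
    return {b: yes_weight[b] / weight[b] for b in sorted(weight) if weight[b] > 0}


# Hypothetical markets: durations are in days.
markets = [
    ([(0.75, 2.0)], 1),               # sat at 75% for two days, resolved Yes
    ([(0.75, 1.0), (0.25, 1.0)], 0),  # a day at 75%, a day at 25%, resolved No
]
print(time_weighted_calibration(markets))
```

A well-calibrated site should show each bin's value close to the probabilities in that bin (the y=x line); restricting `markets` to a date range is how one would compare recent performance against all-time performance.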

Manifold's overall Brier score is not comparable to Metaculus's. "they are not answering all the same questions" is an issue: the distribution of user-generated questions is different from that of highly curated questions. Manifold also has a much longer tail of less-traded questions that you'd expect to score worse even if the question distributions were the same.

The analysis of Brier scores on comparable questions comes from early in Manifold's life. From the post: "Metaculus, on average had a much higher number of forecasters", and from a comment: "Some of the markets in this dataset probably only ever got single-digit number of trades and were obviously mispriced". This shouldn't generalize to comparisons between Metaculus and Manifold today, with comparable numbers of users.

"Many questions resolve based on extensive discussions about exactly how to categorise reality, meaning that subtle clarifications by the author can result in huge swings in probability". This does happen; one of the tradeoffs with user-created markets is that the criteria are less detailed, but it's not common enough to significantly affect profits for the top traders. In my rough estimation, around 1 in 20 big Manifold questions have criteria issues, and many of those resolve N/A. As a comparison, 3 out of the 50 Bridgewater Metaculus competition questions had to be annulled due to bad criteria.

The study is interesting, but the experiment has significant differences from Manifold and I don't get from the paper that prediction polls are overall superior to markets.

You name "many avenues for people incapable of making correct predictions to get Mana". After the pivot, in Manifold's current state, all but ~1.5 of these are no longer relevant.

"2. Hands out mana for asking engaging questions (which are not at all the same as useful questions)" - In the past, Manifold awarded generous creator bonuses and market liquidity for traders, inflating the mana supply. Post-pivot, creators fund liquidity pools themselves and only earn mana through roughly 1.5-3% fees on their markets, so market creation is now a mana sink for creators (and, on net, for traders, since Manifold takes fees as well). (There remains a partner program to pay selected creators, but that pays out in USD, is smaller, and partners are generally not converting that to mana.)

"3. Has many weird meta markets where people with lots of money can typically make more mana without predicting anything other than the spending of the superwealthy (“whalebait”)" - Also true in the past, with the Whales vs Minnows incident (https://news.manifold.markets/p/isaac-kings-whales-vs-minnows-and) as the apex, making half the profit of the top trader at the time. (Whalebait skill is correlated with forecasting skill; that trader remains #3 now that whalebait profit isn't counted.) But after that, Manifold started hiding whalebait, and today large whalebait markets are effectively dead.

"4. Allows personal markets, where people can earn mana by doing tasks they set for themselves. This is an officially endorsed form of insider trading, insider trading being generally accepted." - Post-pivot, creators fund the liquidity pools for personal markets and trading on markets no longer prints mana via bonuses (it's negative sum due to fees), so you can't earn mana like that.

"5. Lets people gamble, go into negative equity, then burn their accounts and make new ones if bets go badly" - With the pivot, Manifold has stopped giving out loans, which was what allowed people to go into negative equity.

"6. Tends to leave markets open until after the answer to a question is publicly known and the answer is posted in the comments, so as well as large mana rewards for reading the news fast, simply reacting to posts on the site itself can produce a reliable profit" - I traded on this in the past, but it's 10x less effective now that market creators fund their own liquidity pools, so they now usually close markets before the answer is known to recover some of their money (prize markets do that too). Trading on news still happens though, and is probably inevitable with prediction markets.

"1. Wants bad predictors to pay money to keep playing" is a problem, and is an issue with any prediction market involving real money. Real money has benefits, though: as prediction markets scale, it incentivizes professional prediction. Bad traders incentivize good traders to price markets well.

> But these are all signs that the accuracy of the predictions (as opposed to news-reading) is not a highly ranked goal of the site

As I see it, the fact that we did make all of these changes is a sign that prediction accuracy is a top goal!

The counterfactuality of the charity program was an issue, but post-pivot that's not relevant anymore as you can just withdraw prizepoints as USD and then donate.

I do have concerns about the utility of prediction markets (and forecasting generally). Pushing information into probabilities might remove most of the value that a richer written exchange would have. Prediction markets incentivize smart people to keep information other than their bets private. But this post missed the mark, I think.

I probably got one, maybe a few, things wrong here, but the general point stands.

By my count, you have implicitly agreed that all of 1-6 used to be issues, but 2-4 are currently not issues and 5 now needs the phrase "negative equity" deleted. I'm still making mana by reading the news, so I don't see that you've halved that claim. You're right that whalebait is less profitable, and I now need to actually search for free mana to find the free mana markets. The fact that I can still do this and then throw all my savings into it means that we should expect exponential growth of mana at some risk-free rate (depending on the saturation of t...