Current takeaways from the 2024 US election <> forecasting community.

First section in Forecasting newsletter: US elections, posting here because it has some overlap with EA.

- Polymarket beat legacy institutions at processing information, in real time and in general. It was just much faster at calling states, and more confident earlier on the correct outcome.

- The OG prediction markets community, the community which has been betting on politics and increasing their bankroll since PredictIt, was on the wrong side of 50% (1, 2, 3, 4, 5). It was the democratic, open-to-all nature of Polymarket, and in particular the Frenchman who was convinced that mainstream polls were pretty tortured and bet ~$45M, that moved it to the right side of 50/50.

- Polls seem like a garbage-in, garbage-out kind of situation these days. How do you get a representative sample? The answer is maybe that you don't.

- Polymarket will live. They were useful to the Trump campaign, which has a much warmer perspective on crypto. The federal government isn't going to prosecute them, nor bettors. Regulatory agencies, like the CFTC and the SEC, which have taken such a prominent role in recent editions of this newsletter, don't really matt

I don't have time to write a detailed response now (might later), but wanted to flag that I either disagree or "agree denotatively but object connotatively" with most of these. I disagree most strongly with #3: the polls were quite good this year. National and swing state polling averages were only wrong by 1% in terms of Trump's vote share, or in other words 2% in terms of margin of victory. This means that polls provided a really large amount of information.
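As a quick sanity check on the share-versus-margin arithmetic, here is a minimal sketch. The vote shares are made-up placeholders, not actual polling or election figures, and it assumes a two-candidate race where the two shares sum to 100.

```python
def margin(share_a: float) -> float:
    """Margin of victory in points for candidate A, assuming a two-way race
    where the two shares sum to 100 (an illustrative simplification)."""
    return share_a - (100.0 - share_a)

# Hypothetical numbers, for illustration only.
polled_share = 49.0
actual_share = 50.0

# Error measured on one candidate's vote share.
share_error = actual_share - polled_share
# Error measured on the margin: every point one candidate gains,
# the other loses, so the margin moves by twice as much.
margin_error = margin(actual_share) - margin(polled_share)

print(share_error, margin_error)
```

With third-party votes in the mix the factor of two is only approximate, but the direction of the point is the same.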

(I do think that Selzer's polls in particular are overrated, and I will try to articulate that case more carefully if I get around to a longer response.)

Oh cool, Scott Alexander just said almost exactly what I wanted to say about your #2 in his latest blog post: https://www.astralcodexten.com/p/congrats-to-polymarket-but-i-still

Prompted by a different forum:

...as a small case study, the Effective Altruism forum has been impoverished over the last few years by not being lenient with valuable contributors when they had a bad day.

In a few cases, I later learnt that some longstanding user had a mental health breakdown/psychotic break/bipolar something or other. To some extent this is an arbitrary category, and you can interpret going outside normality through the lens of mental health, or through the lens of "this person chose to behave inappropriately". Still, my sense is that leniency would have been a better move when people go off the rails.

In particular, the best move seems to me a combination of:

- In the short term, when a valued member is behaving uncharacteristically badly, stop them from posting

- Follow up a week or a few weeks later to see how the person is doing

Two factors here are:

- There is going to be some overlap in that people with propensity for some mental health disorders might be more creative, better able to see things from weird angles, better able to make conceptual connections.

- In a longstanding online community, people grow to care about others. If a friend goes off the rails, there is the question of how to stop them from causing harm to others, but there is also the question of how to help them be ok, and the second one can just dominate sometimes.

I'm surprised by this. I don't feel like the Forum bans people for a long time for first offences?

Reasons why upvotes on the EA forum and LW don't correlate that well with impact.

- More easily accessible content, or more introductory material gets upvoted more.

- Material which gets shared more widely gets upvoted more.

- Content which is more prone to bikeshedding gets upvoted more.

- Posts which are beautifully written are more upvoted.

- Posts written by better known authors are more upvoted (once you've seen this, you can't unsee).

- The time at which a post is published affects how many upvotes it gets.

- Other random factors, such as whether other strong posts are published at the same time, also affect the number of upvotes.

- Not all projects are conducive to having a post written about them.

- The function from value to upvotes is concave (e.g., like a logarithm or like a square root), in that a project which results in a post with 100 upvotes is probably more than 5 times as valuable as 5 posts with 20 upvotes each. This is what you'd expect if the supply of upvotes was limited.

- Upvotes suffer from inflation as the EA Forum gets more populated, so that a post which would have gathered 50 upvotes two years ago might gather 100 upvotes now.

- Upvotes may not take into account the relationship
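To make the concavity point from the list above concrete, here is a minimal sketch that assumes upvotes are exactly the square root of value. The functional form, the units of "value", and the function names are all assumptions for illustration, not anything measured.

```python
import math

def upvotes_from_value(value: float) -> float:
    """Assumed concave map from value to upvotes (square root)."""
    return math.sqrt(value)

def value_from_upvotes(upvotes: float) -> float:
    """Inverse of the assumed map: value grows with the square of upvotes."""
    return upvotes ** 2

one_big_post = value_from_upvotes(100)          # one post with 100 upvotes
five_small_posts = 5 * value_from_upvotes(20)   # five posts with 20 upvotes each

# Same total upvotes (100), very different total value under this assumption.
ratio = one_big_post / five_small_posts
print(one_big_post, five_small_posts, ratio)
```

Under the square-root assumption the single 100-upvote post is worth five times the five 20-upvote posts combined; a logarithmic map would make the gap far larger.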

Open Philanthropy’s allocation by cause area

Open Philanthropy’s grants so far, roughly:

This only includes the top 8 areas. “Other areas” refers to grants tagged “Other areas” in OpenPhil’s database. So there are around $47M in known donations missing from that graph. There is also one (I presume fairly large) donation amount missing from OP’s database, to Impossible Foods.

See also as a tweet and on my blog. Thanks to @tmkadamcz for suggesting I use a bar chart.

Estimates of how long various evaluations took me (in FTEs)

| Title | How long it took me |
|---|---|
| Shallow evaluations of longtermist organizations | Around a month, or around three days for each organization. |
| External Evaluation of the EA Wiki | Around three weeks |
| 2018-2019 Long-Term Future Fund Grantees: How did they do? | Around two weeks |
| Relative Impact of the First 10 EA Forum Prize Winners | Around a week |
| An experiment to evaluate the value of one researcher's work | Around a week |
| An estimate of the value of Metaculus questions | Around three days |

The recent EA Forum switch to a Creative Commons license (see here) has brought into relief for me that I am fairly dependent on the EA forum as a distribution medium for my writing.

Partly as a result, I've invested a bit in my blog: <https://nunosempere.com/blog/>, adding comments, an RSS feed, and a newsletter.

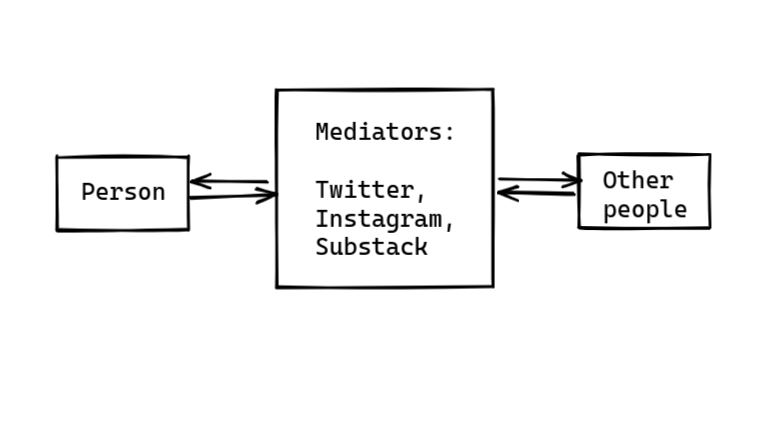

Arguably I should have done this years ago. I also see this dependence with social media, where a few people I know depend on Twitter, Instagram &co for the distribution of their content & ideas.

(Edit: added some more thoughts here)

Prizes in the EA Forum and LW.

I was looking at things other people had tried before.

EA Forum

How should we run the EA Forum Prize?

Cause-specific Effectiveness Prize (Project Plan)

Announcing Li Wenliang Prize for forecasting the COVID-19 outbreak

$100 Prize to Best Argument Against Donating to the EA Hotel

Cash prizes for the best arguments against psychedelics being an EA cause area

Debrief: "cash prizes for the best arguments against psychedelics"

AI alignment prize winners and next round

$500 prize for anybody who can change our current top choice of intervention

The Most Good - promotional prizes for EA chapters from Peter Singer, CEA, and 80,000 Hours

LW (on the last 5000 posts)

Over $1,000,000 in prizes for COVID-19 work from Emergent Ventures

The Dualist Predict-O-Matic ($100 prize)

Seeking suggestions for EA cash-prize contest

Announcement: AI alignment prize round 4 winners

A Gwern comment on...

What happened in forecasting in March 2020

Epistemic status: Experiment. Somewhat parochial.

Prediction platforms.

- Foretold has two communities on Active Coronavirus Infections and general questions on COVID.

- Metaculus brings us the Li Wenliang prize series for forecasting the COVID-19 outbreak, as well as the Lockdown series and many other pandemic questions.

- PredictIt: The odds of Trump winning the 2020 elections remain at a pretty constant 50%, oscillating between 45% and 57%.

- The Good Judgment Project has a selection of interesting questions, which aren't available unless one is a participant. A sample below (crowd forecast in parentheses):

  - Will the UN declare that a famine exists in any part of Ethiopia, Kenya, Somalia, Tanzania, or Uganda in 2020? (60%)

  - In its January 2021 World Economic Outlook report, by how much will the International Monetary Fund (IMF) estimate the global economy grew in 2020? (Less than 1.5%: 94%, Between 1.5% and 2.0%, inclusive: 4%)

  - Before 1 July 2020, will SpaceX launch its first crewed mission into orbit? (22%)

  - Before 1 January 2021, will the Council of the European Union request the consent of the European Parliament to conclude a European Uni

Brief thoughts on my personal research strategy

Sharing a post from my blog: <https://nunosempere.com/blog/2022/10/31/brief-thoughts-personal-strategy/>; I prefer comments there.

Here are a few estimation related things that I can be doing:

- In-house longtermist estimation: I estimate the value of speculative projects, organizations, etc.

- Improving marginal efficiency: I advise groups making specific decisions on how to better maximize expected value.

- Building up estimation capacity: I train more people, popularize or create tooling, create templates and a

The Stanford Social Innovation Review makes the case (archive link) that new, promising interventions are almost never scaled up by already established, big NGOs.

Infinite Ethics 101: Stochastic and Statewise Dominance as a Backup Decision Theory when Expected Values Fail

First posted on nunosempere.com/blog/2022/05/20/infinite-ethics-101, and written after one too many times encountering someone who didn't know what to do when encountering infinite expected values.

In Exceeding expectations: stochastic dominance as a general decision theory, Christian Tarsney presents stochastic dominance (to be defined) as a total replacement for expected value as a decision theory. He wants to argue that one decision is only ratio...

I've been blogging on nunosempere.com/blog/ for the past few months. If you are reading this shortform, you might want to check out my posts there.

Here is an excerpt from a draft that didn't really fit in the main body.

Getting closer to expected value calculations seems worth it even if we can't reach them

Because there are many steps between quantification and impact, quantifying the value of quantification might be particularly hard. That said, each step towards getting closer to expected value calculations seems valuable even if we never arrive at expected value calculations. For example:

- Quantifying the value of one organization on one unit might be valuable, if the organization aims to do better e

Quality Adjusted Research Papers

Taken from here, but I want to be able to refer to the idea by itself.

- ~0.1-1 mQ: A particularly thoughtful blog post comment, like this one

- ~10 mQ: A particularly good blog post, like Extinguishing or preventing coal seam fires is a potential cause area

- ~100 mQ: A fairly valuable paper, like Categorizing Variants of Goodhart's Law.

- ~1Q: A particularly valuable paper, like The Vulnerable World Hypothesis

- ~10-100 Q: The Global Priorities Institute's Research Agenda.

- ~100-1000+ Q: A New York Times Bestseller on a valuable topi

The Tragedy of Calisto and Melibea

https://nunosempere.com/blog/2022/06/14/the-tragedy-of-calisto-and-melibea/

Enter CALISTO, a young nobleman who, in the course of his adventures, finds MELIBEA, a young noblewoman, and is bewitched by her appearance.

CALISTO: Your presence, Melibea, exceeds my 99.9% percentile prediction.

MELIBEA: How so, Calisto?

CALISTO: In that the grace of your form, its presentation and concealment, its motions and ornamentation are to me so unforeseen that they make me doubt my eyes, my sanity, and my forecasting prowess. In that if beau...

A comment I left on Knightian Uncertainty here:

The way this finally clicked for me was: Sure, Bayesian probability theory is the one true way to do probability. But you can't actually implement it.

In particular, problems I've experienced are:

- I'm sometimes not sure about my calibration in new domains

- Sometimes something happens that I couldn't have predicted beforehand (particularly if it's very specific), and it's not clear what the Bayesian update should be. Note that I'm talking about "something took me completely by surprise" rather than "something ...

How much would I have to run to lose 20 kilograms?

Originally posted on my blog, @ <https://nunosempere.com/blog/2022/07/27/how-much-to-run-to-lose-20-kilograms/>

In short, from my estimates, I would have to run 70-ish to 280-ish 5km runs, which would take me between half a year and a bit over two years. But my gut feeling is telling me that it would take me twice as long, say, between a year and four.

I came up with that estimate because I was recently doing some exercise and I didn’t like the machine’s calorie loss calculations, so I rolled some calcula...

CoronaVirus and Famine

The Good Judgment Open forecasting tournament gives a 66% chance for the answer to "Will the UN declare that a famine exists in any part of Ethiopia, Kenya, Somalia, Tanzania, or Uganda in 2020?"

I think that the 66% is a slight overestimate. But nonetheless, if a famine does hit, it would be terrible, as other countries might not be able to spare enough attention due to the current pandem

Here is a CSS snippet to make the forum a bit cleaner: <https://gist.github.com/NunoSempere/3062bc92531be5024587473e64bb2984>. I also like ea.greaterwrong.com under the brutalist setting and with maximum width.

Excerpt from "Chapter 7: Safeguarding Humanity" of Toby Ord's The Precipice, copied here for later reference. h/t Michael A.

SECURITY AMONG THE STARS?

Many of those who have written about the risks of human extinction suggest that if we could just survive long enough to spread out through space, we would be safe—that we currently have all of our eggs in one basket, but if we became an interplanetary species, this period of vulnerability would end. Is this right? Would settling other planets bring us existential security?

The idea is based on an important stat...

Here is a more cleaned up — yet still very experimental — version of a rubric I'm using for the value of research:

Expected

- Probabilistic

- % of producing an output which reaches goals

- Past successes in area

- Quality of feedback loops

- Personal motivation

- % of being counterfactually useful

- Novelty

- Neglectedness

- % of producing an output which reaches goals

- Existential

- Robustness: Is this project robust under different models?

- Reliability: If this is a research project, how much can we trust the results?

Impact

- Overall promisingness (intuition)

- Scale: How many people affected

- Importance: How

Better scoring rules

From SamotsvetyForecasting/optimal-scoring:

This git repository outlines three scoring rules that I believe might serve current forecasting platforms better than current alternatives. The motivation behind it is my frustration with scoring rules as used in current forecasting platforms, like Metaculus, Good Judgment Open, Manifold Markets, INFER, and others. In Sempere and Lawsen, we outlined and categorized how current scoring rules go wrong, and I think that the three new scoring rules I propose avoid the pitfalls outlined in that pape
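For readers who haven't met scoring rules before, here is a minimal sketch of two standard ones for binary questions, the Brier score and the logarithmic score. These are shown only as background; they are not the three rules proposed in the repository, and the function names and example forecasts are illustrative assumptions.

```python
import math

def brier_score(p: float, outcome: int) -> float:
    """Squared error between the forecast p and the outcome (0 or 1).
    Lower is better; 0 is a perfect score."""
    return (p - outcome) ** 2

def log_score(p: float, outcome: int) -> float:
    """Natural log of the probability assigned to what actually happened.
    Higher (closer to 0) is better. Requires 0 < p < 1."""
    return math.log(p if outcome == 1 else 1.0 - p)

# Two hypothetical forecasts on a question that resolved positively.
confident, hedged = 0.9, 0.6
for p in (confident, hedged):
    print(p, brier_score(p, 1), log_score(p, 1))
```

Both rules reward the more confident forecast when it turns out right, and both punish it more heavily when it turns out wrong; the log score does so without bound as p approaches 0 or 1.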

Testing embedding arbitrary markets in the EA Forum

Will a Democrat (Warnock) or Republican (Walker) win in Georgia?

How to get into forecasting, and why?

Taken from this answer, written quickly, might iterate.

As another answer mentioned, I have a forecasting newsletter which might be of interest; going through back-issues and following the links that catch your interest could give you some amount of background information.

For reference works, the Superforecasting book is a good introduction. For the background behind the practice, personally, I would also recommend E.T. Jaynes' Probability Theory: The Logic of Science (find a well-formatted edition, some of t...

Some thoughts on Turing.jl

Originally published on my blog @ <https://nunosempere.com/blog/2022/07/23/thoughts-on-turing-julia/>

Turing is a cool new probabilistic programming language written on top of Julia. Mostly I just wanted to play around with a different probabilistic programming language, and discard the low-probability hypothesis that things that I am currently doing in Squiggle could be better implemented in it.

My thoughts after downloading it and playing with it a tiny bit are as follows:

1. Installation is annoying: The program is pre...

Notes on: A Sequence Against Strong Longtermism

Summary for myself. Note: Pretty stream-of-thought.

Proving too much

- The set of all possible futures is infinite which somehow breaks some important assumptions longtermists are apparently making.

- Somehow this fails to actually bother me

- ...the methodological error of equating made up numbers with real data

- This seems like a cheap/unjustified shot. In the world where we can calculate the expected values, it would seem fine to compare (wide, uncertain) speculative interventions with hardcore GiveWell data (note that

If one takes Toby Ord's x-risk estimates (from here), but adds some uncertainty, one gets: this Guesstimate. X-risk ranges from 0.1 to 0.3, with a point estimate of 0.19, or 1 in 5 (vs 1 in 6 in the book).

2020 U.S. Presidential election to be most expensive in history, expected to cost $14 billion - The Hindu https://www.thehindu.com/news/international/2020-us-presidential-election-to-be-most-expensive-in-history-expected-to-cost-14-billion/article32969375.ece Thu, 29 Oct 2020 03:17:43 GMT

Here is a more cleaned up — yet still very experimental — version of a rubric I'm using for the value of research:

Expected

Impact

Per Unit of Resources

See also: Charity Entrepreneurship's rubric, geared towards choosing which charity to start.

Sure. So I'm thinking that for impact, you'd have sort of causal factors (Scale, importance, relation to other work, etc.) But then you'd also have proxies of impact, things that you intuit correlate well with having an impact even if the relationship isn't causal. For example, having lots of comments praising some project doesn't normally cause the project to have more impact. See here for the kind of thing I'm going for.