(Update: Not sure why I didn't just make two separate posts of this. The first part is about the Occlumency sorting algorithm, and the second part is about how to adjust for information cascades. They don't really relate to each other.)

(Update 2: Something equivalent to the first part of this post has already been implemented (yay!), and you can access it here.)

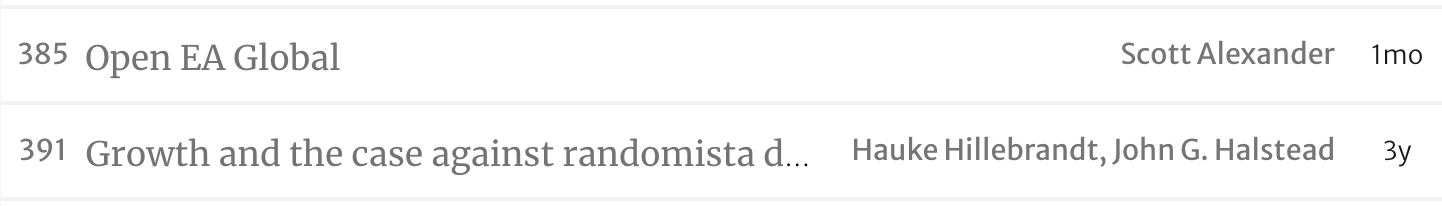

32/50 of the highest karma posts on the forum are less than a year old. The top 3 posts are less than a month old. Forum activity seems to have grown a lot lately, and there could be reasons to expect it to climb even higher.

Make no mistake, this could be really good news! Maybe more people have become interested in figuring out how to do good. I'm going to add it to my bag of reasons to be optimistic about the future. But it does mean we should reflect on what it could mean for us and how we should adapt to it.

1) It means that posts from this year are much more likely to have more karma compared to older posts of similar quality.

2) It also means that older posts are more valuable to promote on the forum since we have more fresh eyes here who haven't already read them.

3) And finally it means that it's slightly harder to find some of the historically best posts on the forum since sorting by karma will heavily bias it towards this year.

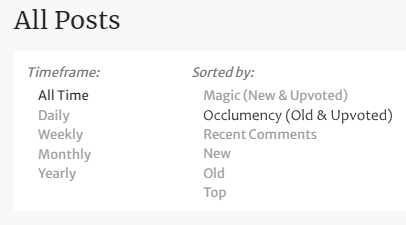

For these reasons, maybe someone should introduce another sorting category where instead of sorting by Magic (New & Upvoted), we can sort by Occlumency (Old & Upvoted).

Slice-of-pie weighting by monthly forum activity to approximate the conversion rate of readers->karma

Note: This was edited to give a clearer view of how the system could look, and take into account karma power inflation as mentioned by Arepo in the comments.

If two posts have the same number of readers, but the latter post has double the karma, that is some evidence that speaks to how likely it is to be usefwl to you.[1] Let's call the efficiency with which a post converts readers to karma its conversion rate.

Unfortunately, directly weighting a post's karma by the reader count is potentially gameable. So you may wish to weight by a hard-to-game proxy for how many potential voters read it.

Assuming the data is available, one way to do this would be to break down a post's karma by month and weight the karma for each month based on the absolute value of the total karma assigned that month. What this could mean in practice is that each month has a fixed amount of karma that's allocated towards posts based on the proportion of monthly karma those posts received.[2]

This would mean that Occlumency sorts posts by enduring value. The top-sorted posts will be the ones that have received the most and biggest slices of pie over time. If a post has been extremely popular for one month but hasn't seen much relevancy since then, it's going to be buried by posts that have been relevant for a longer time.
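As a rough illustration of the slice-of-pie idea, here is a minimal Python sketch. It assumes votes are available as `(post_id, month, karma)` records, and the function name `occlumency_scores`, the fixed `pool_per_month` of 100 and the example numbers are all made up for illustration. They are not how the forum stores or computes anything.

```python
from collections import defaultdict

def occlumency_scores(votes, pool_per_month=100.0):
    """votes: iterable of (post_id, month, karma) tuples; karma may be negative.

    Returns {post_id: adjusted_score}. Hypothetical helper, not a forum API.
    """
    # Size of each month's "pie": total absolute karma assigned that month.
    monthly_total = defaultdict(float)
    for _post, month, karma in votes:
        monthly_total[month] += abs(karma)

    # Each post gets a share of the month's fixed pool, in proportion
    # to its share of the karma assigned that month.
    scores = defaultdict(float)
    for post, month, karma in votes:
        if monthly_total[month] == 0:
            continue
        scores[post] += pool_per_month * karma / monthly_total[month]
    return dict(scores)

# Made-up example: a quiet month in 2019 and a busy month in 2022.
votes = [
    ("old_post", "2019-01", 30), ("other_old", "2019-01", 10),
    ("old_post", "2022-05", 60), ("new_post", "2022-05", 340),
]
scores = occlumency_scores(votes)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

In this toy data `old_post` has much less raw karma than `new_post` (90 vs 340) but still ranks first, because it took a large slice of a quiet month plus a smaller slice of a busy one.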

This also controls for karma inflation due to the accumulation of karma power for users with more karma. On Occlumency, users with more karma control a larger slice of the pie but don't add to the total amount of karma going around that month.

That being said, some bias in favor of more recent posts could be good if information and wisdom increased over time. And you want to be wary of accidentally promoting outdated posts. But if voter demographics have proportionately shifted towards people who haven't spent as much time thinking about the many nuances of having a positive impact, some bias towards older votes could be good. You could balance these considerations by adjusting the monthly pool of karma to have a non-linear positive correlation to the total unadjusted karma, but some experimental fine-tuning might be in order.
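One possible shape for such a non-linear pool is a power law with an exponent between 0 and 1. This is only a sketch: the function name `monthly_pool` and the default exponent of 0.5 are my own assumptions, and the right curve would need the experimental fine-tuning mentioned above.

```python
def monthly_pool(total_karma, exponent=0.5):
    """Pool of adjusted karma for a month, given its total (absolute) raw karma.

    exponent=0 gives every month the same pool (full adjustment);
    exponent=1 leaves raw totals unchanged (no adjustment).
    Assumes total_karma is non-negative.
    """
    return float(total_karma) ** exponent

quiet_month = monthly_pool(1000)
busy_month = monthly_pool(4000)
print(busy_month / quiet_month)
```

With an exponent of 0.5, a month with four times the activity gets only twice the pool, so recent busy months still count for more, but not proportionally more.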

Information cascades

The expected amount of marginal karma a post will get within a slice of time depends on a) the expected number of potential voters reading it, and b) the expected amount of karma they will give it given that they read it.

But a) further depends on the karma it already has, since 1) upvoted posts are more visible, and 2) we often read things because we want to stay "in the loop" and we use karma to infer what the loop actually is.

And b) could also depend on karma because we may subconsciously use popularity to partially infer quality and may insufficiently adjust downwards.

This has all the prerequisites for information cascades that depend on slight variations in initial conditions.

An information cascade occurs when people update on other people's beliefs, which may individually be a rational decision but may still result in a self-reinforcing community opinion that does not necessarily reflect reality.

Two posts published simultaneously may be of equal quality, but if the former starts out with 100 karma compared to the latter's 1, we are likely to see the former gain a lot more karma compared to the latter before the frontpage time window is over.

In summary, karma information cascades pose two problems:

- They amplify small variations in initial conditions. This means that small initial differences between posts may persist over time, and this increases the extent to which the top karma posts depend on luck to get there.

- They amplify the relative differences between posts. If all you know about two posts is that the former has five times the karma, then you probably shouldn't infer that its conversion rate (or quality) is five times higher.
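To make the first problem concrete, here is a toy expected-value model in Python. It assumes, purely for illustration, that readers split their attention in proportion to each post's current karma and that every post converts readers to karma at the same rate. The function name and all numbers are invented, and real forum dynamics are surely messier.

```python
def simulate_cascade(initial_karma, conversion_rates, readers_per_step=100, steps=10):
    """Expected karma after `steps` rounds when attention follows current karma.

    Assumes all karma values are positive so attention shares are well defined.
    """
    karma = list(initial_karma)
    for _ in range(steps):
        total = sum(karma)
        karma = [
            k + readers_per_step * (k / total) * rate
            for k, rate in zip(karma, conversion_rates)
        ]
    return karma

# Two posts of equal quality (same conversion rate), one starting at 100 karma.
final = simulate_cascade([100, 1], [0.1, 0.1])
print(final, final[0] / final[1])
```

Under these assumptions the 100:1 ratio never shrinks and the absolute gap keeps growing, even though the two posts are equally good at converting readers into karma.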

Adjust for information cascades in real-time by hiding post authorship and karma on the first day of publication

You could imagine adjusting for information cascades by making karma follow a log curve, and doing this for all posts historically. This could ameliorate the second problem mentioned above, so maybe it's worth investigating and perhaps running simple computer simulations to decide on a fair curve. But I'm tentatively pessimistic about the value of that since it wouldn't change the ordinal ranking of posts, and that's what matters for visibility and sorting algorithms.
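A quick way to see why a log curve leaves the ordering untouched is the Python check below. The karma values are made up, and `log(1 + karma)` is just one candidate curve.

```python
import math

# Hypothetical karma totals for four posts.
karma = {"post_a": 500, "post_b": 120, "post_c": 15, "post_d": 3}

by_raw = sorted(karma, key=lambda p: karma[p], reverse=True)
by_log = sorted(karma, key=lambda p: math.log(1 + karma[p]), reverse=True)

raw_ratio = karma["post_a"] / karma["post_d"]
log_ratio = math.log(1 + karma["post_a"]) / math.log(1 + karma["post_d"])
print(by_raw == by_log, raw_ratio, log_ratio)
```

Because the logarithm is monotonic, the two orderings are identical. Only the relative gaps are compressed (the top post goes from over a hundred times the bottom post's score to under five times).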

So to affect ordinal rankings, you need to deal with information cascades in real-time.

One way to do this could be to hide authorship and karma for 24 hours (or something) after the post has been published. Readers are now spread more evenly across day-one posts, which means that on day two, their relative karmas are more indicative of true conversion rates and less influenced by randomness and author popularity. So even if you let everything run as normal thenceforth, the information cascades would start on initial conditions that better reflect true conversion rates.
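The sketch below illustrates that intuition with the same kind of toy expected-value model. It assumes readers spread evenly across posts while karma is hidden and follow karma once it is visible. The post with the lucky head start has the lower conversion rate. Every name and number here is an assumption chosen for illustration, not a measurement.

```python
def run_window(initial_karma, conversion_rates, hidden_steps=0, steps=5, readers_per_step=100):
    """Expected karma at the end of a frontpage window of `steps` rounds.

    Assumes initial karma is positive for every post.
    """
    karma = list(initial_karma)
    n = len(karma)
    for step in range(steps):
        total = sum(karma)
        if step < hidden_steps:
            shares = [1 / n] * n                 # karma hidden: readers spread evenly
        else:
            shares = [k / total for k in karma]  # karma visible: attention follows karma
        karma = [
            k + readers_per_step * s * r
            for k, s, r in zip(karma, shares, conversion_rates)
        ]
    return karma

lucky_start = [5, 1]   # post 0 got a few early upvotes by chance
rates = [0.1, 0.2]     # post 1 converts readers to karma twice as well

visible = run_window(lucky_start, rates, hidden_steps=0)
hidden = run_window(lucky_start, rates, hidden_steps=2)
print(visible, hidden)
```

In this toy setup the lucky post is still ahead when the window closes if karma is visible throughout, while hiding karma for the first two rounds lets the post with the better conversion rate take the lead before the cascade starts.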

And importantly, this doesn't put new posts at a disadvantage compared to old posts. You're probably not changing the expected total karma over time by much. Instead, you're just changing how sensitive they are to randomness in initial conditions.

But, of course, there are trade-offs involved. To some of you, this might sound dumb but my intuition is that information cascades aren't that big of a problem in EA—for reasons. And delaying information about karma and authorship has a cost insofar as readers benefit from those as indicators of Value of Information.

To unequivocally recommend for or against it, I would want to do more investigation, but I think it's worth testing in order to get feedback on how it works in practice. People who wish to look into it might find relevant insights in the field known as "veritistic social epistemology".

[1] Assuming 1) people vote based on usefwlness-to-them, and 2) they're similar enough to you that usefwlness-to-them correlates strongly with usefwlness-to-you. Both are questionable assumptions, but you're already aware that karma only partially correlates with usefwlness-to-you.

[2] FJehn had a somewhat related project last year, where they sorted based on a weighting of karma by the average karma for posts in the month of that post's publication.

A quick guess is that a good way to implement this (once a definition for “old” posts is given) is to track instances of people upvoting an old post (or just karma accumulation of old posts).

Then some score based on this (which itself can decay) can be blended into the regular “hotness” mix, so the people can see some oldie goldies.

This might be better than “naively” scaling up posts by compensating for traffic, because:

in this idea, older posts will tend to be promoted by relevance (e.g some day EA can solve “really hard to find an EA job” and it doesn’t need to be as visible compared to an ongoing issue).

It also gives a new channel for low karma old posts (e.g. brilliant treatise ahead of its time) to reappear.

This also gives an incentive to actually upvote old posts, further helping to solve the problem.
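A very rough sketch of the blending idea above, in Python. The half-life, the blending weight and both function names are guesses of mine, and `base_hotness` stands in for whatever the existing "hotness" score is.

```python
def decayed_score(upvote_ages_days, half_life_days=30.0):
    """Sum of recent upvotes on an old post, each halved every `half_life_days`."""
    return sum(0.5 ** (age / half_life_days) for age in upvote_ages_days)

def blended_hotness(base_hotness, upvote_ages_days, weight=0.2, half_life_days=30.0):
    """Add a weighted 'oldie goldie' bonus on top of the regular hotness score."""
    return base_hotness + weight * decayed_score(upvote_ages_days, half_life_days)

# An old post with no regular hotness, upvoted today and 30 days ago.
bonus = blended_hotness(0.0, [0, 30])
print(bonus)
```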

Oh, this is wonderfwl. But to be clear, Occlumency wouldn't be the front page. It would be one of several ways to sort posts when you go to /all posts. Oldie goldies is a great idea for the frontpage, though!

Hmm, maybe we are talking about different things, but I think the /all page already breaks down posts by year.

So that seems to mitigate a lot of the problem I think you are writing about (less if within year inflation is high)?

I also think your post is really thoughtful, deep and helpful.

Oh. It does mitigate most of the problem as far as I can tell. Good point Oo

Your idea is still viable and useful!

There’s also valuable discussion and ideas that came up. IMO you deserve at least 80% of the credit for these, as they arose from your post.

I like that idea about information cascades. We could test how big this effect is on EA Forum, by having some bot who randomly upvotes or downvotes new posts, and measuring the final karma after some time.

There was a similar experiment with reddit (maybe you already know this).

Why do you think information cascades aren't significant on EA Forum? (I hope that's true)

I just meant that I think info cascades aren't too important of a problem in EA more generally, not just the forum. I did not mean that I think they didn't have large effects. Here are some reasons to expect info cascades to have limited damage in EA. Although I no longer fully endorse my earlier statements--I'd encourage people to be more worried.

The linked study is really cool! I hadn't seen it, so thanks a lot for bringing it to my attention. I would've very much liked for them to ask the question "for the top 10% rated comments, what proportion of them were up-treated vs control group?" That would show whether the initial effect washes out for the top posts, or whether the amplification compounds with no upper bound.

The type of experiment you suggest and link to seems much more feasible for the EA forum than anything I've thought of, so if you're not going to suggest it in the EA forum suggestion thread (please do, and I'll strong upvote it), I will.

Great!

I posted it in that thread: link

Feel free to add something there.

Also, the main thing we need is synthesis. How do we take many old articles and turn them into one good summary given all we now know?

I would also like some old articles to be rereleased on the front page, maybe you get votes from a new generation.

Another concern is karma inflation from strong upvotes. As time goes by, the strength of new strong upvotes increases (details here), which means more recent posts will naturally tend to be higher rated even given a consistent number of users.

Maybe we should automatically update upvotes to track people's current karma?

Pretty sure that would be computationally intractable. Every time someone was upvoted beyond a threshold you'd need to check the data of every comment and post on the forum.

Someone I know has worked with databases of varying sizes, sometimes in a low-level, mechanical sense. From my understanding, to update all of a person’s votes, the database operation is pretty simple, simply scanning the voting table for that ID and then doing a little arithmetic for each upvote and calling another table or two.

You would only need to do the above operation for each “newly promoted” user, which is like maybe a few dozen users a day at the worst.

Many personal projects involve heavier operations. I’m not sure but a google search might be 100x more complicated.

But in the process you might also promote other users - so you'd have to check for each recipient of strong upvotes if that was so, and then repeat the process for each promoted user, and so on.

That’s a really good point. There are many consequent issues beyond the initial update, including the iterative issue of multiple induced “rounds of updating” mentioned in your comment.

After some thought, I think I am confident the issue you mentioned is small.

To be confident, I guess that these second round and after computations are probably <<50% of the initial first round computational cost.

Finally, if the above wasn’t true and the increased costs were ridiculous (1000x or something) you could just batch this, say, every day, and defer updates in advanced rounds to later batches. This isn’t the same result, as you permanently have this sort of queue, but I guess it’s a 90% good solution.

I’m confident but at the same time LARPing here and would be happy if an actual CS person corrected me.

[I'm not a computer scientist, but] Charles is right. The backend engineering for this won't be trivial, but it isn't hard either.

The algorithmic difficulty seems roughly on the level of what I'd expect a typical software engineering interview in a Silicon Valley tech company to look like, maybe a little easier, though of course the practical implementation might be much more difficult if you want to account for all of the edge cases in the actual code and database.

The computational cost is likely trivial in comparison. Like it's mathematically equivalent to if every newly promoted user just unupvoted and reupvoted again. On net you're looking at an at-most 2x increase in the load on the upvote system, which I expect to be a tiny increase in total computational costs, assuming that the codebase has an okay design.

To be clear, I'm looking at the computational costs, not algorithmic complexity which I agree isn't huge.

Where are you getting 2x from for computations? If User A has cast strong upvotes to up to N different people, each of whom has cast strong upvotes to up to N different people, and so on up to depth D, then naively a promotion for A seems to have O(N^D) operations, as opposed to O(1) for the current algorithm. (though maybe D is a function of N?)

In practice as Charles says big O is probably giving a very pessimistic view here since there's a large gap between most ranks, so maybe it's workable - though if a lot of the forum's users are new (eg if forum use grows exponentially for a while, or if users cycle over time) then you could have a large proportion of users in the first three ranks, ie being relatively likely to be promoted by a given karma increase.

I retract the <2x claim. I think it's still basically correct, but I can't prove it so there may well be edge cases I'm missing.

My new claim is <=16x.

We currently have a total of U upvotes. The maximal karma threshold is 16 karma per strong upvote at 500k karma (and there are no fractional karma). So the "worst case" scenario is if all current users are at the lowest threshold (<10 karma) and you top out at making all users >500k karma, with 16 loops across all upvotes. This involves ~16U updates, which is bounded at 16x.

If you do all the changes at once you might crash a server, but presumably it's not very hard to queue and amortize.

I think you're mixing up updates and operations. If I understand you right, you're saying each user on the forum can get promoted at most 16 times, so each strong upvote gets incremented at most 16 times.

But you have to count the operations of the algorithm that does that. My naive effort is something like this: Each time a user's rank updates (1 operation), you have to find and update all the posts and users that received their strong upvotes (~N operations where N is either their number of strong upvotes, or their number of votes depending on how the data is stored). For each of those posts' users, you now need to potentially do the same again (N^2 operations in the worst case) and so on.
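For concreteness, here is one way that naive procedure could be written as a worklist in Python. It is only a sketch: the threshold table is hypothetical (not the forum's real one), the in-memory dictionaries stand in for whatever the database actually looks like, and `propagate_promotion` is a name I made up.

```python
from collections import deque

# Hypothetical table of (minimum karma, strong-upvote strength). Not the real values.
THRESHOLDS = [(0, 1), (10, 2), (100, 3)]

def strength(karma):
    """Strong-upvote strength for a user with the given karma."""
    return max((s for minimum, s in THRESHOLDS if karma >= minimum), default=1)

def propagate_promotion(karma, votes_cast, applied_strength, start_user):
    """Re-weight strong upvotes after start_user is promoted; return vote updates done.

    karma: {user: karma}, mutated in place.
    votes_cast: {voter: [recipient, ...]}, one entry per strong upvote.
    applied_strength: {user: strength currently reflected in their cast votes}.
    """
    queue = deque([start_user])
    updates = 0
    while queue:
        voter = queue.popleft()
        delta = strength(karma[voter]) - applied_strength[voter]
        if delta <= 0:
            continue  # already up to date (e.g. queued twice)
        applied_strength[voter] += delta
        for recipient in votes_cast.get(voter, []):
            karma[recipient] += delta
            updates += 1
            # The extra karma may promote the recipient, who then needs a pass too.
            if strength(karma[recipient]) > applied_strength[recipient]:
                queue.append(recipient)
    return updates

karma = {"a": 10, "b": 9, "c": 5}          # "a" has just reached 10 karma
applied = {"a": 1, "b": 1, "c": 1}
votes_cast = {"a": ["b", "c"], "b": ["c"]}
updates = propagate_promotion(karma, votes_cast, applied, "a")
print(updates, karma)
```

In this sketch each user's votes are re-weighted only when their strength actually rises, so a user is processed at most once per rank step, and the total number of vote updates is at most (number of rank steps) times (number of strong upvotes).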

(Using big O approach of worst case after ignoring constants)

The exponent probably couldn't get that high - eg maybe you could prove no cascade would cause a user to be promoted more than once in practice (eg each karma threshold is >2x the previous, so if a user was one karma short of it, and all their karma was in strong upvotes, then at most their karma could double unless someone else was somehow multiply promoted), so I was probably wrong that it's computationally intractable. I do think it could plausibly impose a substantial computational burden on a tiny operation like CEA though, so someone would need to do the calculations carefully before trying to implement it.

There's also the philosophical question of whether it's a good idea - if we think increasing karma is a proxy for revealing good judgement, then we might want to retroactively reward users for upvotes from higher ranked people. If we think it's more like a proxy for developing good judgement, then maybe the promotee's earlier upvotes shouldn't carry any increased weight, or at least not as much.

I agree, upvotes do seem a bit inflated. It creates an imbalance between new and old users that continually grows as existing users rack up more upvotes over time. This can be good for preserving culture and norms, but as time goes on, the difference between new and old users only grows. Some recalibration could help make the site more welcoming to new users.

In general, I think it would be nice if each upvote counted for roughly 1 karma. Will MacAskill’s most recent post received over 500 karma from only 250 voters, which might exaggerate the reach of the post to someone who doesn’t understand the karma system. On a smaller scale, I would expect a comment with 10 karma from 3 votes to be less useful than a comment with 10 karma from 5 - 8 votes. These are just my personal intuitions, would be curious how other people perceive it.

The users with the highest karma come from a range of different years, and the two highest joined in 2017 and 2019. I don't think it's too much of a problem.

Good point! Edited the post to mention this.

Looks like they updated to add something similar to this. ^^

I thought I had already written this, but FYI this post was counterfactually responsible for the feature being implemented.

(The idea had occurred to me already, but the timing was good to suggest this soon before a slew of work trials.)

This is great! But what is going on here?

I'd use this feature if added!

I wonder if the algorithm (if it is done algorithmically?) that selects the posts to put in "Recommendations"/"Forum Favourites" should also be weighted for occlumency. It seems like the reasons outlined in this post would push in favour of this, though I have some concern that there'd be old posts that are now outdated, rather than foundational, which could get undue attention.

I share this "outdated, rather than foundational" concern. I think it is possible that what is really called for here is human editorial attention rather than algorithms and sorting tools. Someone or someones to read through tons of old stuff and make some Best Of collections.

Experimental fine-tuning might be in order. But even without it, Occlumency has a different set of problems to Magic (New & Upvoted), so the option value is probably good.

As for outdated posts, there could be an "outdated" tag that anyone can add to posts and vote down or up. And anyone who uses it should be encouraged to link to the reason the post is outdated in the comments. Do you have any posts in mind?

For LessWrong, we've thought about some kind of "karma over views" metrics for a while. We experimented a few years ago but it proved to be a hard UI design challenge to make it work well. Recently we've thought about having another crack at it.

I have no idea how feasible it is. But I made this post because I personally would like to search for posts like that to patch the most important missing holes in my EA Forum knowledge. Thanks for all the forum work you've done, the result is already amazing! <3

I would be in favor of this!