At EAGx Australia 2022, I spoke about the role of behavioural science in effective altruism. You can now watch the recording on YouTube. In the talk, I introduce the concept of behavioural science, discuss how it relates to effective altruism, and highlight some common mistakes we make when trying to change behaviour for societal good.

Want to skim a post instead of watching a 30-minute video? I don’t blame you. Here are the basics of my talk…

Imagine a world…

Imagine a world where…

- We know what the most effective charities are. We've got a list of all the charities that exist, we've got detailed information about each one, and we've ranked them on various criteria that we care about. There's no more uncertainty, GiveWell is out of business, and we know where to funnel our charitable donations.

- We have perfected our biosecurity risk standards. We understand all the potential risks. We know how we can prevent things like lab leaks from occurring. We’ve even developed safety protocols outlining everything we need to do.

- We just understand sentience. Turns out the hard problem of consciousness wasn't actually that hard to solve. We understand which beings are sentient – which beings feel pleasure and pain – and we know why.

Sounds pretty great, right? In this world, we seem to have made monumental strides.

Yet, perhaps this wouldn’t be that exciting: these strides say nothing about the impact we’re having.

Why?

- We may understand what the most effective charities are, but what happens if no one donates to them?

- We may develop biosecurity risk standards and protocols, but what does that mean if people don't comply with them?

- We may know which beings are sentient, but what impact does that have if we don't change our treatment of those beings?

These examples show that we can have knowledge, understanding, and even action, but if we don't understand how to change behaviour, we might not have the impact that we want.

What is behavioural science?

Behavioural science is the scientific study of human behaviour. Why do people do the things they do? Why do they make the decisions that they do? What needs to change for them to act differently?

Behavioural science considers many influences: conscious thoughts, habits, motivations, the social context, and more. It borrows from several disciplines, including economics, psychology, and sociology.

What is the role of behavioural science in effective altruism?

Here is a (very) basic theory of change for effective altruism.

We know how to do the most good. We act on that knowledge. And, as a result, we hopefully have an impact.

Examples of things we can do at the knowledge stage include understanding which charities are most effective, creating problem profiles, and predicting which existential risks are most consequential or likely. At the action stage, we could donate to those charities, make career changes based on what we think is most impactful, or actually work to prevent existential risks.

How does behavioural science come into this? It focuses on the action stage and it asks, ‘How?’. How do we get people to donate to effective charities? How do we encourage others to make career changes, or work to prevent existential risks? Behavioural science can inform how we act, or how we get others to act, in order to enhance our impact.

Note that there are different audiences we can target when we’re thinking about behaviour change. Some audience ‘levels’ include:

- Individuals / the population level: This often involves behaviours that many people can engage in. For instance, donating to charity or reducing animal-product consumption.

- Critical actors: This includes people who possess specific skills or are in an influential position. For example, they might play an important role in institutional decision making, or perhaps they’re in a unique position to shape AI governance.

- The community: Effective altruism is not just a philosophical concept; it's also a social movement. In this context, the community plays a significant role. We want to attract people to meetups, sustain motivation, maintain a great culture, etc.

Sounds simple, right?

Common mistakes we make when trying to change behaviour for societal good

Well, there are several common mistakes we make when trying to change behaviour. I’ll outline three of them here:

Mistake #1: “I know what the problem is”

Mistake #2: “I know what works”

Mistake #3: “I’ll just use one strategy”

Mistake #1: ‘I know what the problem is’

Summary of the mistake

Often, we jump to conclusions about what the problem is – what it is that’s causing the ‘bad’ behaviour or preventing the ‘good’ behaviour from occurring. For example, you might assume that people don’t donate to effective charities because they’re not aware that some charities are more effective than others. That is, you assume that the problem is a lack of awareness.

We overlook that problems are complex, can involve different actors with competing agendas, and that there might be many different drivers and barriers. On top of that, we bring our own biases and lenses to interpreting the issue.

How can we prevent it?

Instead of assuming what the problem is, we need to spend time unpacking it. Ask yourself what assumptions you’re making, and whether you might be lacking information.

Gather evidence to fill in the gaps. In more formal settings, this could involve conducting research like literature reviews, surveys, or interviews. For example, Lucius Caviola and colleagues (2020) did not assume that the obstacle to effective giving was lack of awareness: they conducted a literature review to investigate the barriers involved (and there are many). More informally, gathering evidence could involve just talking to people who are impacted by or invested in the problem.

Mistake #2: ‘I know what works’

Summary of the mistake

We sometimes assume we know what strategy will work to encourage the target behaviour. We don’t consider the specific context, the nuances of the target audience, or whether that strategy aligns with general behaviour change principles.

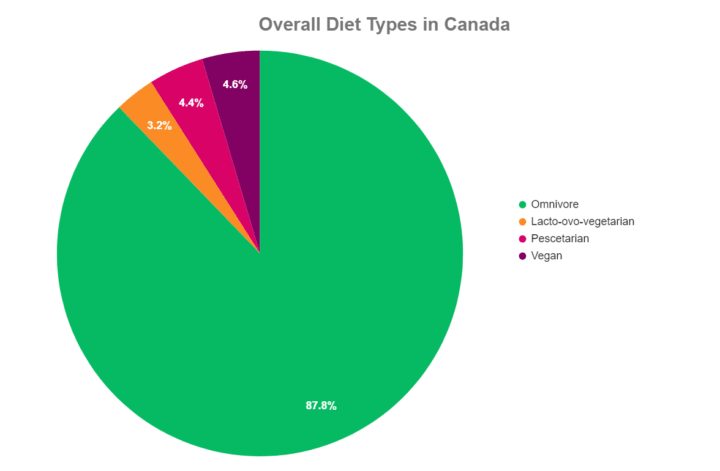

For example, in some animal advocacy materials you see charts depicting the percentage of a population that’s vegetarian or vegan (see Figure 3 for an example). Sometimes, this is intended to motivate others to make a dietary change. It might be assumed that presenting such a figure could encourage a positive social norm (after all, these figures show that not everyone is an omnivore).

When we assume what works, and skip over the stage of considering it more deeply, we may be making some mistakes. In this example, when we're talking about a behaviour that only a minority of the population engages in, research suggests that this kind of figure can actually backfire. People see that they’re in the majority (as omnivores) and don’t necessarily feel a pull to change.

How can we prevent it?

Instead of assuming what works…

- Consider your goal. If you’re trying to change behaviour, you should be able to articulate what behaviour change you actually want to occur. For example, if you want to encourage people to get more involved in effective altruism, reflect on what that means concretely. Do you want them to just be familiar with the concept? To come to meetups? To make a career change? This will help you evaluate whether a strategy actually works (because you understand what success would look like).

- Investigate what might work. Again, this could be more formal (e.g., literature reviews, interviews) or more informal (e.g., talking to people, reflecting on what worked for you if you have relevant experience).

- Test, reflect, and update. Test out different strategies, reflect on the result, and update your approach.

Mistake #3: ‘I’ll just use one strategy’

Summary of the mistake

Sometimes, we mistakenly rely on just one approach or strategy to change behaviour. We don’t consider that there may be multiple barriers or drivers we should address, or that different audiences may respond differently to a particular strategy.

For example, imagine that some people working in a lab aren’t complying with the safety protocols. Let’s say there are different reasons for this: some people don’t know what to do, some don’t remember, and others don’t care. Management becomes aware of this issue and decides that to increase compliance, they’ll create videos describing exactly what’s required by the protocol.

While implementing this one solution may help those who lack information, it might not do much for those who don’t remember it or don’t care. In this situation, multiple strategies may be preferable (e.g., videos, signs, discussing the importance of the protocols) to address the multiple audiences and barriers.

How can we prevent it?

- Consider your target audience. Is it possible that one strategy won’t work for everyone you’re trying to influence? Are people experiencing different drivers and barriers that you should address?

- Pilot test. If you’re not sure whether it will work for everyone, give it a go, reflect, and adapt.

- Implement multiple strategies. If you have the time and resources, implement a combination of strategies that address different aspects of the behaviour change.

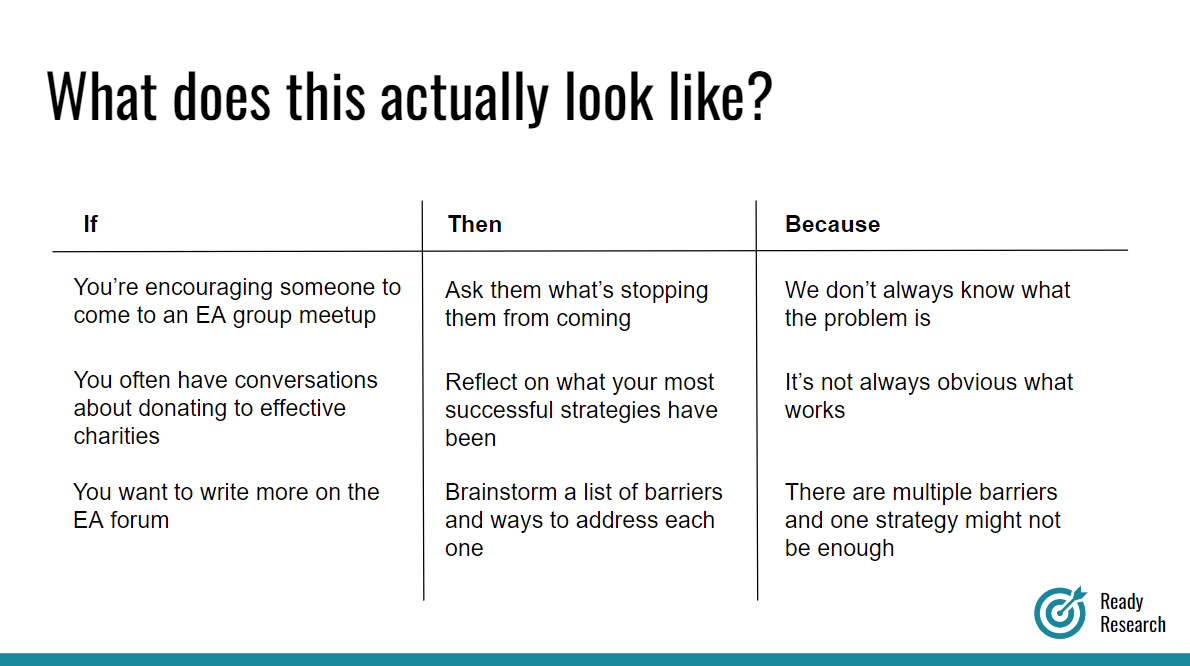

So what does this actually look like?

Here are a couple of examples of what addressing these mistakes can look like in relatable, everyday situations:

- If you’re encouraging someone to come to an effective altruism meetup -> Then you could ask them what’s stopping them from coming -> Because we don’t always know what the problem is.

- If you often have conversations about donating to more effective charities -> Then reflect on what your most successful strategies have been -> Because it’s not always obvious what works.

- If you want to write more on the EA Forum -> Then brainstorm a list of barriers and ways to address each one -> Because there are multiple barriers and implementing one strategy might not be enough.

The key takeaway

Behavioural science is a crucial tool in the effective altruism toolkit. It can inform how we act, or how we get others to act, in order to enhance impact. By avoiding common pitfalls, such as assuming we know what the problem or solution is, or just implementing one strategy, we can more effectively change behaviour for societal good.

Thanks, Emily:

I see some resonance between the behavioral science "Mistakes" that you think EAs might be making and differences that I find in my approach to EA work compared to what seems to be documented in the EA literature.

I was recently reading the works of Peter Singer more thoroughly (specifically The Life You Can Save and The Most Good You Can Do), and while I appreciated the arguments being made, I did not feel that they reflected or properly respected the real beliefs and motivations of the friends and family who donate to support my EA activities.

In this sense, I also see, from my particular individual perspective, a set of behavioral science mistakes that the EA movement seems to be making.

So, over my 30 years of doing Africa-focused, quantitatively oriented development projects, I have developed a different "Theory of Change" for how my personal EA activities can have impact.

This personal EA-like Theory of Change has three key elements: (1) Attracting non-EA donors for non-EA reasons to support EA Global Health and Welfare (GHW) causes, (2) Focusing on innovation to increase EA GHW impact leverage to 100:1, and (3) Cooperating with other EAs with the assumption that each member of the EA community has a different set of beliefs and an individual agenda. Cooperation serves aligned and community interests.

I would appreciate it if you might comment on whether some of my divergences from general EA practice might address some of the "behavioral science" issues that you have identified.

The first element of my Theory of Change is that for my EA causes to be successful, my projects have to be able to attract mostly non-EA donors. I recognize that my EA-type views are the views of a relatively tiny minority in our larger society. Therefore, I do not personally try to change people's moral philosophy, which seems to be Peter Singer's approach. When I do make arguments for people to modify their moral philosophy, I find that people usually see this as either threatening or offensive.

Element #1: While the vast majority of donors do not donate based on "maximum quantitative cost-effectiveness," they do respond to respectful arguments that a particular cause or charity that you are working on is more important and impactful than other causes and charities. When "maximum quantitative cost-effectiveness" is the reason someone they know and respect dedicates their life effort and money to a cause, many people will be willing to join and support that person's commitment. So while only a few people may be motivated by EA philosophical arguments, many more people can support the movement if people they like, know, and respect show a strong commitment to it.

This convinces people to support EA causes because they see that EAs are honest, dedicated, and committed people that they can trust. You do not have to convince people of EA philosophy to have them donate to EA causes and efforts. Most people who donate to EA causes could potentially have strong philosophical disagreements with the EA movement.

The second element of my Theory of Change is that EA projects need to have very large amounts of impact leverage, so it is important to constantly improve the impact leverage of EA projects. Statistics on charitable donations indicate that most people donate only a few percent of their income to charity, and may donate less than 1% of income to international charitable causes.

Element #2: If people are going to donate less than 1% of income to international charitable causes, then in order to try to address the consequences of international economic inequality, EA Global Health and Welfare charities should strive to have 100:1 leverage or impact. That is, $1 of charitable donation should produce $100 of benefit for people in need. In that way, it may be possible to create an egalitarian world over the long term in spite of the fact that people may be willing to give only 1% of their income on average to international charitable causes.

In my little efforts, I think I have gotten to 20:1 impact leverage. I hope I can demonstrate something closer to 50:1 impact leverage in a year or two.
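The leverage arithmetic in Element #2 may be easier to follow when worked through. One reading (an interpretation, not something the comment states explicitly) is that giving 1% of income at 100:1 leverage produces benefit roughly equal to the donor's entire income. The minimal Python sketch below assumes "leverage" means dollars of benefit per dollar donated; the function name `benefit_as_share_of_income` is hypothetical, and the 20:1, 50:1, and 100:1 ratios are the figures mentioned above.

```python
# Hypothetical illustration of the "impact leverage" arithmetic in Element #2.
# Assumption: leverage = dollars of benefit produced per dollar donated, and the
# donor gives a fixed share of income (1%, the figure used in the comment).

def benefit_as_share_of_income(giving_share: float, leverage: float) -> float:
    """Return the benefit produced, expressed as a fraction of the donor's income."""
    return giving_share * leverage


giving_share = 0.01  # 1% of income donated to international causes

# Compare the leverage ratios mentioned in the comment.
for leverage in (20, 50, 100):
    share = benefit_as_share_of_income(giving_share, leverage)
    print(f"{leverage}:1 leverage -> benefit worth {share:.0%} of the donor's income")
```

Under these assumptions, the design point is simply that leverage multiplies a small, fixed giving rate: at 20:1 the benefit is worth about a fifth of the donor's income, and only at around 100:1 does it match the income itself.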

The third element of my EA Theory of Change is that I assume that every EA has a different personal agenda that is set by their personal history and circumstances. It is my role to modify that agenda only if someone is open to change.

Element #3: Everyone in the EA movement has a different personal agenda and different needs and goals. Therefore, my goal in interacting with other EAs is to help them realize their full potential as EA community participants on their own terms. Now, since I have my own personal views and agenda, I will generally help the agendas of others when it is low cost to my work or when it also makes a contribution to my personal EA agenda (i.e., encouraging EA GHW projects to have 100:1 impact leverage). But if I can keep my EA agenda general enough, then there should be lots of alignment between my EA agenda and the agendas and interests of other EAs, and I can be part of a substantial circle of associates who are mutually supportive.

Now this Theory of Change or Theory of Impact is to some extent assuming fairly minimal behavior change. It assumes that most people support EA causes for their own reasons. And it also assumes that people will not change their charitable donation behavior very much. It puts most of the onus of change on a fairly small EA community that achieves the technical accomplishment of attaining 100:1 impact leverage.

Does this approach avoid the mistakes that you mention, while at the same time requiring only minimal behavior change?

Just curious. I hope this response to your presentation of EA behavior science "Mistakes" is useful to you.

Thanks so much for this comment, Robert - I appreciate the engagement.

It’s interesting to hear what mistakes you see, and what you’ve experienced as working better.

It sounds like you’re really considering who your audience is, which I think is crucial. For example, you don’t assume that people (especially those not involved in EA) will be sold by more philosophical arguments. These arguments can work for some, but definitely not everyone. I also agree that having a positive reputation (e.g., being seen as credible and honest) can attract people. ...