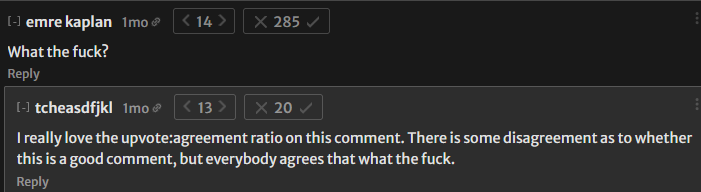

Someone recently shared with me a comment (which will appear in this post) that had strong agreement but a strong downvote. I thought comments like that might be interesting to look for. (It turns out they'd already found the best example, but I enjoyed searching for more, and I'm sharing them here to save anyone else the effort.)

There is a risk that simply surfacing these comments will change their karma, so I've stated their current numbers, as (karma, agreement karma), in this post in case they change in response.

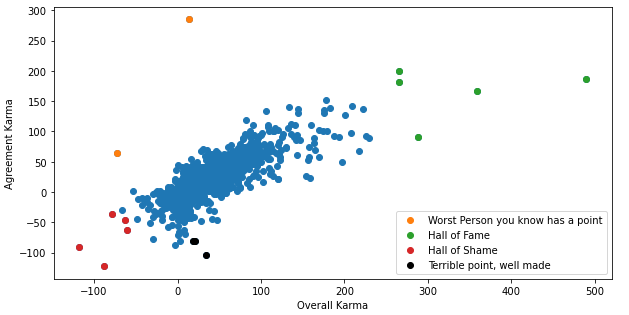

Since agreement karma is new this year, there isn't a large sample of posts to analyse. Unsurprisingly, there is a strong relationship between overall karma and agreement karma:

I see roughly 4 different types of outlier:

- "Hall of Fame" - abnormally high karma and agreement

- "Hall of Shame" - abnormally low karma and agreement

- "Terrible point, well made" - abnormally high karma compared to agreement

- "The worst person you know has a point" - abnormally low karma compared to agreement

Hall of Fame

- Owen Cotton-Barratt's explanation for buying Wytham Abbey (490, 187)

- Habryka's defence of the EA leadership (359, 166)

- Scott Alexander's explanation for why he denied Kathy Forth's accusations (266, 199)

- Milan_Griffes asking Will MacAskill to account for his involvement in the FTX saga (265, 182)

- ClaireZabel lifting the lid on where the $ came from for Wytham Abbey (288, 91)

The Worst Person You Know has a Point

Terrible point, well made

- RAB thought the FTX Future Fund team resigned too early (34, -104)

- MichaelPlant doesn't like the EA leadership discussing reputational issues in private (21, -81)

- EliezerYudkowsky makes his case for when fucking matters (18, -81)

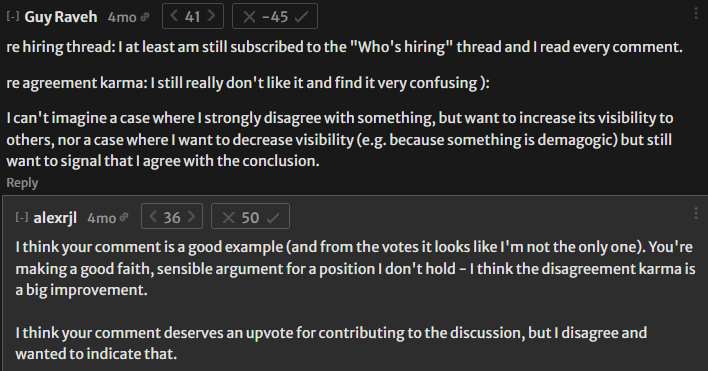

Honorable mention: Guy Raveh doesn't see the point in agreement karma... but people disagree

Hall of Shame

I'm a little bit disappointed with these - I wouldn't bother clicking on any of them. I was hoping some of them would be "so bad it's good", but really these are just bad. Don't waste your time. (But to save anyone searching, here they are.)

- michaelroberts is rude in a boring way (-118, -91)

- effectenator comes from Twitter to get some money back (-88, -123)

- [anonymous] has a meandering essay about why EA was doomed to fail (-60, -63)

- Yorad419 calls SBF & Caroline ugly (-78, -36)

- michaelroberts (again) is rude (-63, -47)

I did not imagine this would be the achievement that gets me recognition on the EA Forum, but I'm honoured still!

Trying to give you more fame here, but it looks like you won't quite reach the dizzying heights/depths of your previous comment :D.

I think most of these top scoring (good or bad) comments come in the context of high attention hot-button controversy-related posts. Maybe the awards should try to adjust for that?

It's interesting that all of the highest "agreement Karma" are defences against questioning or criticism, mostly levelled at either EA leadership or something EA leadership has done. That could be good in showing solidarity and support for leadership and the movement in general.

But while all of these comments are important, none of them are about core effective altruism stuff.

This is possibly because forums and social media get more traffic around drama and negativity than around positive ideas and intellectual discussion. Maybe this is unavoidable in online discourse. I even notice myself often gravitating to the "drama" posts rather than to good ideas and more interesting discussion.

I hope, though, that in future some of the most-agreed-with comments will be about the core business of effective altruism.

Are there stats on how many people upvoted (rather than the karma)? Some people have interacted with the forum more, so their votes may by default be worth, e.g., 5+ times as much as other people's. I'd be particularly interested to see how this looks for the defence posts.

I'd also be interested to see, using the number of people rather than karma, how much of a score came from strong votes rather than from more individual people agreeing/disagreeing.

Nice one, I agree that would be interesting. You are right that hardcore EAs are more likely to defend the movement, and that might be part of the reason the defence posts are so highly rated. Personally I'm uncomfortable with any system where some animals are more equal than others, but I understand the sentiment of giving senior forumers more weight. It has been discussed a couple of times, but I can't find the threads right now.

I think whether or not it makes sense to give senior forumers more weight, the fact that this is the case needs to be considered when drawing conclusions:

e.g. Higher agreement karma does not imply that more people agree with a comment. It may mean that, but it may also mean that a smaller number of people who have interacted with the forum more agree, or that a certain group of people agree more strongly.

You get some idea when you look at the number of votes as well as the karma, but in this post, for example, something like this could have been discussed and wasn't.

One possible modest change to the formula: No more than half of all upvotes (or downvotes) can count as strong. I think it's natural to read high karma count as expressing some breadth of support. So while I'm fine with, e.g., two strong upvotes (each worth +6 in this example) and nine regular upvotes (each worth +2 in this example) equalling 15 regulars, I'm less convinced that five strong upvotes should carry the same karma. That seems too open to a small group strong-upvoting their friends/allies and giving the appearance of broader support.
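To make the proposed cap concrete, here is a minimal Python sketch using the example weights above (+2 regular, +6 strong). The function name `karma`, rounding "half" down, and counting any excess strong votes at regular weight are all assumptions for illustration; the comment doesn't say how excess strong votes would be treated, and this is not the Forum's actual formula.

```python
def karma(regular: int, strong: int, regular_weight: int = 2,
          strong_weight: int = 6, cap_strong: bool = False) -> int:
    """Sum upvote karma, optionally capping strong votes at half of all upvotes.

    Hypothetical rule: strong votes beyond the cap count at regular weight.
    """
    if cap_strong:
        max_strong = (regular + strong) // 2  # "half" rounded down (assumption)
        excess = max(0, strong - max_strong)
        strong -= excess
        regular += excess
    return regular * regular_weight + strong * strong_weight


# The comment's example: without a cap, all three total the same.
print(karma(9, 2))   # two strong + nine regular -> 30
print(karma(15, 0))  # fifteen regular -> 30
print(karma(0, 5))   # five strong -> 30

# With the cap, five strong votes alone no longer match the others.
print(karma(0, 5, cap_strong=True))  # -> 18
print(karma(9, 2, cap_strong=True))  # -> 30
```

Under this sketch the 11-vote example is unaffected (2 strong votes is under half of 11), while five strong votes alone drop from 30 to 18, because only 2 of the 5 are allowed to count as strong.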

Another possibility would be that strong upvote/downvote costs the casting user one karma.

[Edited to clarify]

I find the way your example is written a bit unclear (perhaps a less confusing phrasing would be e.g. "two strong upvotes (each worth +6)"). I understood what you were trying to say, but I had to read it a few times. By my understanding, you are not convinced that just 5 strong votes should be worth the same as 11 votes that include some strong votes, or 15 regular votes.

To make things perhaps more confusing, I'm not sure everyone has the same multiplier between regular and strong votes. Initially a regular vote is worth 1 and a strong vote 2; now my regular votes are worth 1 and my strong votes 3. So at the very least, the 3x multiplier is not the same for newer users.

I can also see the potential for people to try to balance out the fact that their votes are worth less by using strong votes, or for people generally not being completely consistent about what they count as a strong vote versus a regular vote (e.g. between days, depending on their mood, or on the type of post). I also expect variation between people in how strongly they need to agree with something before they strong-vote it.

Adding a cost to strong votes would certainly make people less likely to strong-vote, but a 'fixed fine' of 1 would make no real difference if you have a lot of karma and more of one if you have less (e.g. I believe you need a certain amount of karma to add coauthors to posts). So newer users would both have votes that are worth less by comparison and pay a relatively higher cost for using strong votes, which I do not think is a good idea.

Thanks -- edited to try to clarify that the +2/+6 were example values. I believe you are correct that the ratio is not the same; the most recent chart I have seen is here, which suggests that the ratio can be up to 5:1.

I could see an argument for a scaled loss of karma for strongvotes depending on how many points one's strongvotes were worth. I would view this as a transfer of karma, at least where strongupvotes are concerned, rather than a "fine." I pulled the -1 suggestion from Stack Overflow, where all downvotes incur -1 to reputation.

I'd be interested in a look at the ratios of upvotes to agreements. Like, one of the hall of fame posts has three times as many upvotes as agreements, which seems to indicate that it's a controversial opinion at least.
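As a rough first pass at the ratio idea, here is a Python sketch over the Hall of Fame numbers listed in the post. Note that it divides karma by agreement karma rather than upvote counts by agreement counts; as discussed above, karma is weighted by voter and vote strength, so this only approximates the ratio being asked about.

```python
# (karma, agreement karma) as stated in the post's Hall of Fame section
hall_of_fame = {
    "Owen Cotton-Barratt": (490, 187),
    "Habryka": (359, 166),
    "Scott Alexander": (266, 199),
    "Milan_Griffes": (265, 182),
    "ClaireZabel": (288, 91),
}

# Ratio of overall karma to agreement karma for each comment
ratios = {name: k / a for name, (k, a) in hall_of_fame.items()}

# Print from most to least "karma-heavy" relative to agreement
for name, ratio in sorted(ratios.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {ratio:.2f}")
```

ClaireZabel's comment comes out highest at about 3.2, which matches the "three times as many" figure; Scott Alexander's is lowest at about 1.3.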

I'm not sure a strong correlation here is super unsurprising, and, even if it is, I can think of two rather different explanations:

One could view these two explanations as forming an axis, with (1) being at the "(EA Forum voting is) epistemically unvirtuous" end and (2) being at the "epistemically virtuous" end. I personally suspect voting is closer to virtuous than unvirtuous, but I wouldn't take this as given, and I also suspect that, in theory at least, there's room for improvement. (In practice, though, steering voting further in the epistemically virtuous direction may be difficult. I tried thinking for five minutes but couldn't come up with any potentially promising interventions.)

There's a third reason, which I expect is the biggest contributor: the number of readers of the post/comment.

I think this 3rd explanation can be decomposed into the first two (and possibly others that I haven't laid out)?

(For what it's worth, I agree with a "You don’t have to respond to every comment" norm. So, don't feel obligated to reply, especially if you think—as may well be the case—that I'm talking past you and/or that I'm missing something and the resulting inferential distance is large.)

I enjoyed the way this post was written -- nice work!