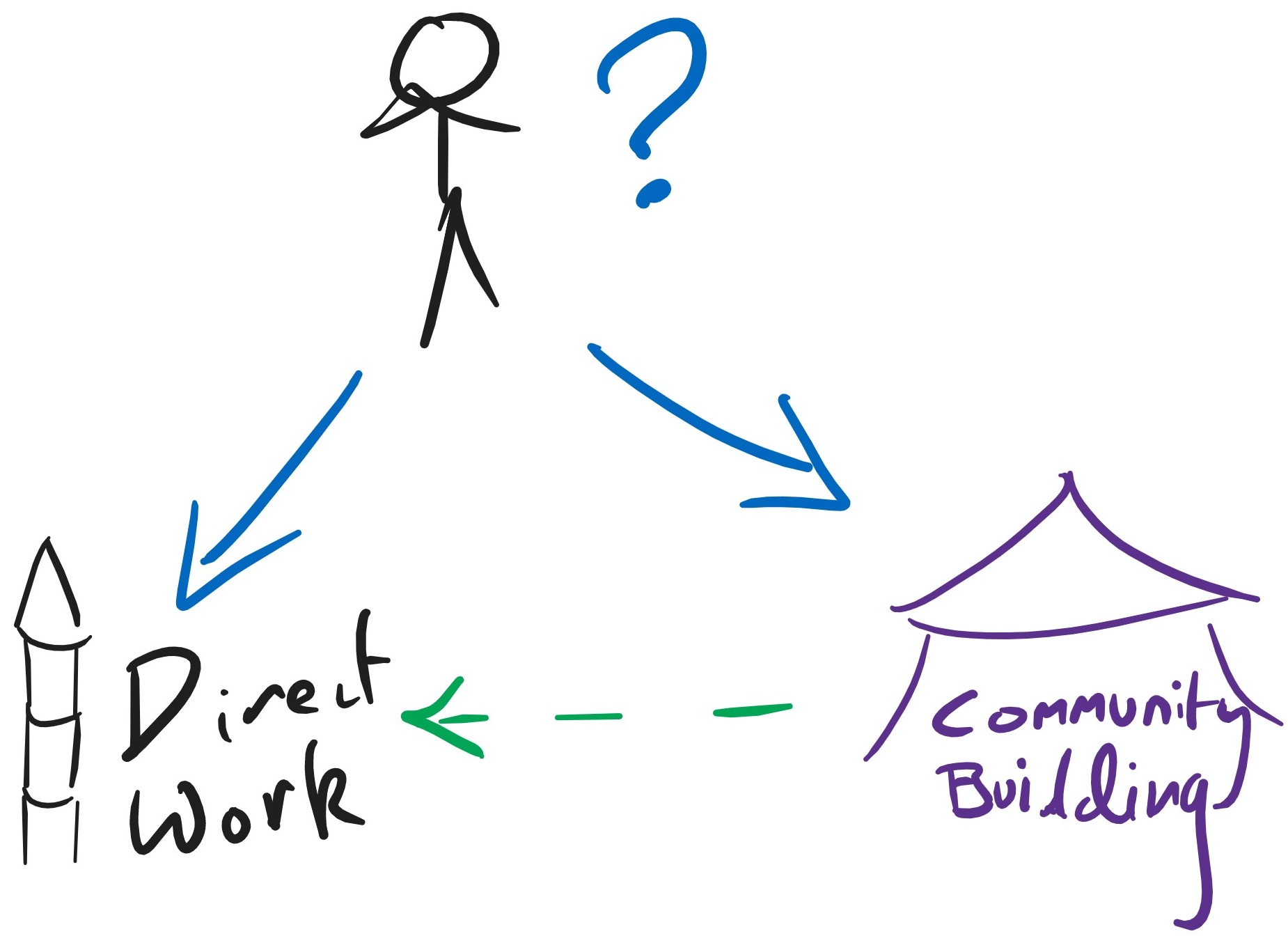

Here's a cartoon picture I think people sometimes have:

I think this is misguided and can be harmful.

The appeal of the picture is that specialization generally brings big benefits; since there are two large buckets of work, it's natural to think they should be pursued by specialists working in specialist orgs.

The reason the specialization argument doesn't just go through is that the things we're trying to build communities for are complicated. I think community-building work is often a lot better when it's high-context about what's being done and what's needed in direct work.[1] In some cases this could shift it from negative to positive; in others it merely provides a significant boost. By default in the "separate camps" model, the community-building camp simply doesn't have enough context on the direct work camp to achieve that.

Rather than separate camps, I think it's better to picture a single camp, oriented around "direct work". A good amount of community-building work goes on there, but it's all pretty well integrated with the direct work (often with heavy overlap in the people involved).

At an individual level, I think it's often correct for people to multi-class between direct work and community building. Once you have expertise in direct work and what it needs, there is low-hanging fruit in leveraging that expertise for community building (e.g. by giving talks or mentoring junior people). Or if you're mostly focused on community building, I think you'll often benefit from spending a fraction of your time really trying to get to the bottom of direct work agendas, and of exactly why people are pursuing different strategies. One way to get solid grounding for this is to actually spend a fraction of your time aiming to do high-value direct work, but that's certainly not the only approach; reading content and (especially) talking to people engaged in direct work about their bottlenecks can also help. As a very rough rule of thumb, I think it's good if people doing community-building work spend at least 20% of their time obsessing over the details of what's needed in direct work.[2]

Clarifications & caveats to these claims:

- 20% time doesn't need to mean a day every week. It could be ten weeks a year, or one year every five. (It probably shouldn't be stretched longer than that, because knowledge will go stale.)

- By community building, I mean efforts to build communities aimed at increasing direct work. I think it's fine to have separate specialist people/orgs for things like:

  - Education: e.g. if you had an org aimed at getting more people to intuitively understand scope-sensitivity

  - Building communities that aren't aimed at direct work: e.g. if you want to build a community of AI researchers who read science fiction together and think about the future of AI

- It's fine to have professional facilitators who help with community-building work without having detailed takes on object-level priorities, but they shouldn't be the ones making the calls about what kind of community-building work needs to happen

- The whole 20% figure is me plucking a number that feels reasonable out of the air. If someone wants to argue that it should be 12% or 30%, I'm like "that's plausible". If someone wants to argue that it should be 2% or 80%, I'm going to be pretty sceptical.

- I think a lot of people who are working professionally in community building understand all of this intuitively. But it doesn't seem to me to be uniformly understood/discussed, so I thought it was worth sharing the model.

Corollaries of this view:

- People who want to do community building work should be particularly interested in doing so in environments which give them high access to people doing direct work

- People doing direct work should be excited to look for high-leverage ways to apply their knowledge to help community building, like:

  - Spending time uploading their models to community builders (so long as they can find ones they're excited to work with)

  - Meeting directly with promising people, perhaps referred to them by community builders, even when it isn't immediately helpful to their goals

- More people should consider moving between community building and direct work roles through their careers

  - (For especially technical direct work roles it may be relatively hard to move into them later, but there are plenty of roles which aren't like this)

[1] A straightforward case is that it's often very valuable for people who might get involved to talk to someone with sophisticated models of what's being done in different areas, so that they have a chance to interrogate these models.

[2] And probably more than that early in their careers; cf. "Community Builders Spend Too Much Time Community Building".

I used to agree more with the thrust of this post than I do, and now I think this is somewhat overstated.

[Below written super fast, and while a bit sleep-deprived]

An overly crude summary of my current picture: if you do community building via spoken interactions, it's somewhere between "helpful" and "necessary" to have a substantially deeper understanding of the relevant direct work than the people you are trying to build community with, and also to be the kind of person they find impressive, worth listening to, and admirable. Additionally, being interested in direct work is correlated with a bunch of positive qualities that help with community building (like being intellectually curious and having interesting and informed things to say on many topics). But not a ton of direct-work engagement is actually needed for many kinds of extremely valuable community building, in my experience (which seems to differ from e.g. Oliver's). And I think people who emphasize the value of keeping up with direct work sometimes conflate the value of, e.g., knowing about new directions in AI safety research with the broader value-adds of becoming a more informed person and of the intellectual benefits that come from practice engaging with object-level rather than social problems.

Earlier on in my role at Open Phil, I found it very useful to spend a lot of time thinking through cause prioritization, getting a basic lay of the land on specific causes, thinking through which problems and potential interventions seemed most important, and becoming emotionally bought in to spending my time and effort on them. Additionally, I think the process of thinking through who you trust, and why, and doing early audits that can form the foundation for that trust, is challenging but very helpful for doing EA community-building (CB) work well. I'm wholly in favor of that, and would guess that most people who don't make this kind of upfront investment are making an important mistake.

But on the current margin, the time I spend keeping up with e.g. new directions in AI safety research feels substantially less important than time spent on implementing my core projects, and is almost never directly decision-relevant. There are some exceptions: I could imagine information that would update me a lot about AI timelines (as such information has in the past), and this would flow through to different decisions in concrete ways. When I examine why that is, it seems like most decisions I make as a community-building grantmaker are too crude to be affected much by additional information at the relevant level of intra-cause granularity, and the same seems true of lots of other community-building-related decisions I think about.

For example, if I ask a bunch of AI safety researchers what kinds of people they would like to join their teams, they often give pretty similar versions of "very smart, hardworking people who grok our goals, who are extremely gifted in a field like math or CS". And I'm like "wow, that's very intuitive, and has been true for years without changing". In my experience, subtle differences between alignment agendas don't translate into enough difference in people's views about what kinds of recruitment are good for digging into them to have been a good use of my time. This is especially true because where informed, intelligent people who have various markers of trustworthiness that matter to me disagree, I find it particularly difficult for an outsider to gain much justified confidence.

Another testbed: I spent a few years devoting a lot of time to Open Phil's biosecurity strategy, and I formed a lot of my own, pretty nuanced and intricate views about it. I've never gone as deep on AI. But I notice that I didn't find my own views about biosecurity that helpful for many broader community-building tradeoffs and questions, compared to the counterfactual of trusting the people in the space who seemed most trustworthy to me (whom I think I could have identified using a bunch of proxies that didn't involve forming my own models of biosecurity) and catching up with them or interviewing them every six months about what it seems helpful to know (which is closer to what I do with AI). Idk, this feels more like 5-10% of my time, though maybe I absorb additional context by osmosis from social proximity to people doing direct work, and maybe this is helpful in ways that aren't apparent to me.

Re. 2), I think the relevant figure will vary by activity. 30% is a not-super-well-considered figure chosen for 80k, and I think I was skewing conservative; really, I'm something like "more than +20% per doubling, less than +100%". Losing 90% of the impact would be more imaginable if we couldn't just point outlier people to different intros, and would be a stretch even then.