Survey

I think it would be interesting to know how confident EA Forum readers are in the Repugnant Conclusion and each of its premises (see next section). For that purpose, I encourage you to fill in this form, where you can assign a probability to each being true in principle. Feel free to explain your rationale in the comments below.

Please participate regardless of the extent to which you accept or reject the Repugnant Conclusion, so that the answers are as representative as possible. I recommend the following procedure:

- First, establish priors for your probabilities based solely on the description of the Repugnant Conclusion, its premises, and your intuition.

- Second, update your probabilities based on further investigation (e.g. reading this and this, and reading the comments below).

You can edit your submitted answers as many times as you want[1]. I plan to publish the results in 1 to 2 months.

Repugnant Conclusion and its premises

“The Repugnant Conclusion is the implication, generated by a number of theories in population ethics, that an outcome with sufficiently many people with lives just barely worth living is better than an outcome with arbitrarily many people each arbitrarily well off”. In Chapter 8 of What We Owe the Future (WWOF; see section “The Total View”), William MacAskill explains that it follows from three premises:

- Dominance addition. “If you make everyone in a given population better off while at the same time adding to the world people with positive wellbeing, then you have made the world better”.

- Non-anti-egalitarianism. “If we compare two populations with the same number of people, and the second population has both greater average and total wellbeing, and that wellbeing is perfectly equally distributed, then that second population is better than the first”.

- Transitivity. “If one world is better than a second world, which itself is better than a third, then the first world is better than the third”.

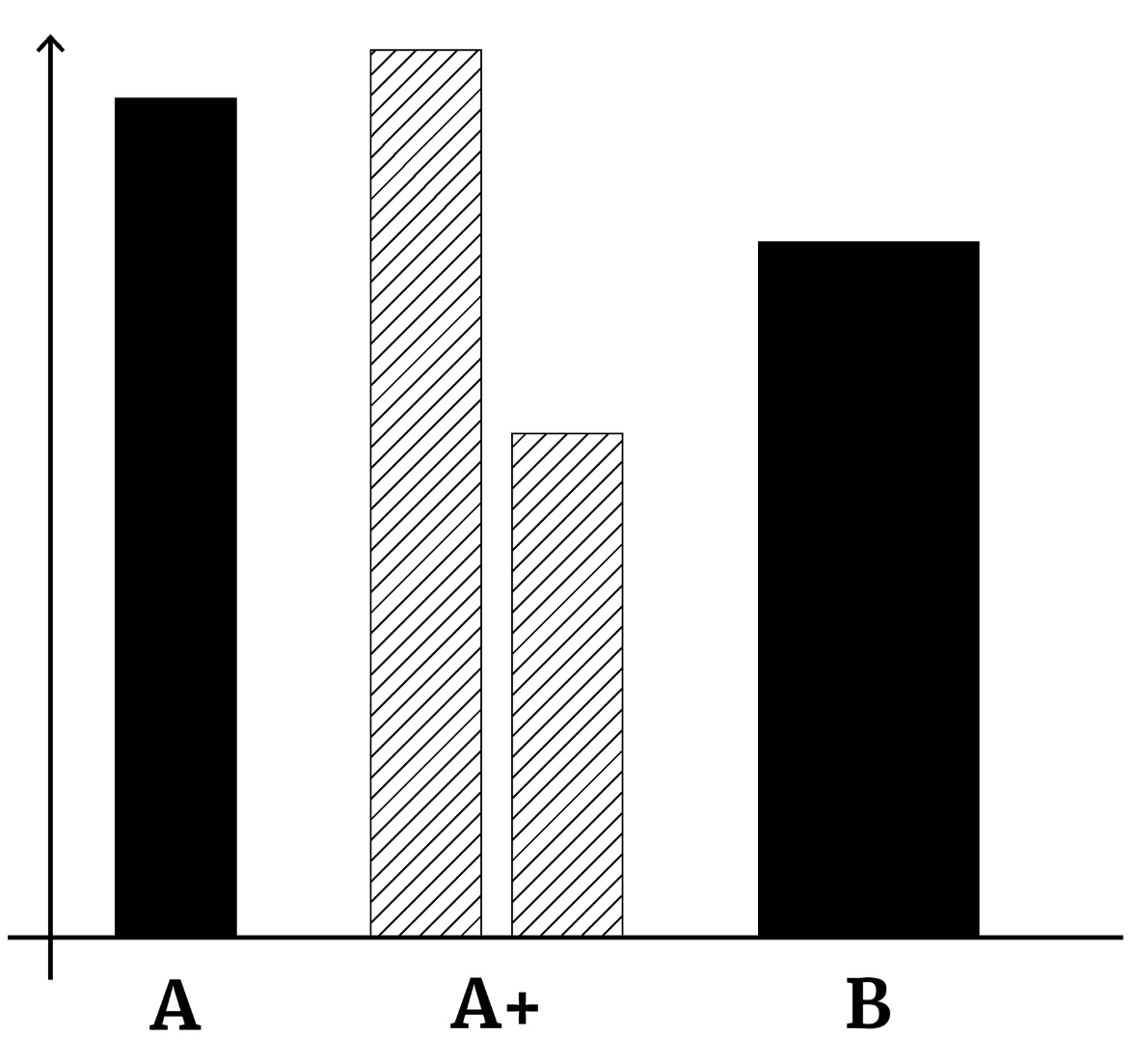

Figure 8.7 of WWOF helps visualise the effect of the first two premises via the following worlds:

- World A: “A world of ten billion people who all live wonderful lives of absolute bliss and flourishing”.

- World A+: “The ten billion people in A+ have even better lives than those in A, and the total population is larger: in A+ there are an additional ten billion people who have pretty good lives, though much less good than the other ten billion people’s”.

- World B: “In this world, there are the same number of people as in A+. But there is no longer any inequality; everyone has the same level of wellbeing. What’s more, in World B, the average and total wellbeing are greater than those of World A+”.

If dominance addition is true, A+ is better than A. If non-anti-egalitarianism is true, B is better than A+. Consequently, if transitivity is also true, B is better than A. This logic can be repeated indefinitely until arriving at a world Z where there is a very large population with very low positive wellbeing.
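To make the repeated step concrete, here is a minimal Python sketch under assumed numbers. The starting level of 100, the 1% improvement for existing people, the added people at a quarter of the original level, and the extra 1% for World B are all hypothetical choices, not taken from WWOF. Each round checks the conditions of dominance addition and non-anti-egalitarianism, and chaining the rounds is where transitivity does its work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class World:
    # Each group is (number_of_people, wellbeing_per_person).
    # Units and numbers are hypothetical, chosen only for illustration.
    groups: tuple[tuple[float, float], ...]

    @property
    def size(self) -> float:
        return sum(n for n, _ in self.groups)

    @property
    def total(self) -> float:
        return sum(n * w for n, w in self.groups)

    @property
    def average(self) -> float:
        return self.total / self.size


def dominance_addition(old: World, new: World) -> bool:
    """True if `new` = everyone in `old` made better off + added people with positive wellbeing."""
    k = len(old.groups)
    existing, added = new.groups[:k], new.groups[k:]
    if len(existing) != k or not added:
        return False
    same_people = all(n1 == n0 for (n1, _), (n0, _) in zip(existing, old.groups))
    better_off = all(w1 > w0 for (_, w1), (_, w0) in zip(existing, old.groups))
    positive = all(w > 0 for _, w in added)
    return same_people and better_off and positive


def non_anti_egalitarianism(old: World, new: World) -> bool:
    """True if `new` has the same size, perfect equality, and greater average and total."""
    perfectly_equal = len({w for _, w in new.groups}) == 1
    return (new.size == old.size and perfectly_equal
            and new.average > old.average and new.total > old.total)


def one_round(a: World) -> tuple[World, World]:
    """From a perfectly equal world A, build A+ and B (hypothetical step sizes)."""
    [(n, w)] = a.groups                     # A is assumed to be a single equal group
    a_plus = World(((n, 1.01 * w),          # everyone in A slightly better off
                    (n, 0.25 * w)))         # plus as many new people at a lower, positive level
    b_level = a_plus.average + 0.01 * w     # a bit above A+'s average
    b = World(((2 * n, b_level),))
    return a_plus, b


world = World(((10e9, 100.0),))             # "World A": ten billion people at level 100
start = world
for _ in range(20):
    a_plus, b = one_round(world)
    assert dominance_addition(world, a_plus)      # A+ better than A
    assert non_anti_egalitarianism(a_plus, b)     # B better than A+
    world = b                                     # by transitivity, B better than A

print(f"{world.size:.3g} people at wellbeing {world.average:.3g} each")
print(f"total wellbeing grew from {start.total:.3g} to {world.total:.3g}")
```

With these factors, each round doubles the population and multiplies everyone's wellbeing by roughly 0.64, so wellbeing per person shrinks towards zero (while staying positive) and total wellbeing keeps growing.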

Accepting all of the premises does not imply accepting the total view, which “regards one outcome as better than another if and only if it contains greater total wellbeing, regardless of whether it does so because people are better off or because there are more well-off people”. For example, the Very Repugnant Conclusion does not follow from the above premises.

However, as each of the above premises is implied by the total view, rejecting at least one implies rejecting the total view.

My strongest objections to the premises

I believe all of the premises are close to indisputable, but my strongest objections are:

- Dominance addition:

- Critical range theory (see here): “adding an individual makes an outcome better to the extent that their wellbeing exceeds the upper end of a critical range, and makes an outcome worse to the extent that their wellbeing falls below the lower limit of the critical range”. Under these conditions, adding to the world people with sufficiently low positive wellbeing does not change the goodness of the world.

- Non-anti-egalitarianism:

- Increasing the mean wellbeing per being only makes the world better if the final mean wellbeing per being is sufficiently high. Consequently, a world with one being with very high wellbeing and N - 1 beings with wellbeing just above zero may be better than a world with N beings who all have the same wellbeing, just above zero but slightly higher than the mean of the first world (so that the second world has greater average and total wellbeing).

- All (including transitivity):
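The first two objections above can be made concrete with a toy calculation. This Python sketch applies the quoted critical-range rule and checks the non-anti-egalitarianism case; every number (the ranges [0, 10] and [2, 10], the gains, N = 1,000,000, the levels 1000 and 0.01) is hypothetical, and counting gains to existing people one-for-one is a simplifying assumption, not part of the source.

```python
# Toy numbers for the two objections above; every number is hypothetical.

def added_person_value(wellbeing: float, lower: float, upper: float) -> float:
    """Contribution of one added person under the quoted critical-range rule."""
    if wellbeing > upper:
        return wellbeing - upper   # better to the extent wellbeing exceeds the upper end
    if wellbeing < lower:
        return wellbeing - lower   # worse (negative) to the extent it falls below the lower limit
    return 0.0                     # inside the critical range: no change


def dominance_addition_change(gain: float, n_existing: int,
                              added_wellbeing: float, n_added: int,
                              lower: float, upper: float) -> float:
    """Value change from raising n_existing people by `gain` and adding n_added people.
    Assumption: gains to existing people count one-for-one."""
    return gain * n_existing + n_added * added_person_value(added_wellbeing, lower, upper)


# Objection 1: people added inside the range [0, 10] contribute nothing...
neutral_case = dominance_addition_change(0.5, 10, 5.0, 10, lower=0.0, upper=10.0)
# ...and with a positive lower limit (2 here), a dominance-addition move can be net negative.
negative_case = dominance_addition_change(0.5, 10, 1.0, 10, lower=2.0, upper=10.0)

# Objection 2: one being at 1000 and N - 1 beings at 0.01, versus N equal beings
# slightly above the first world's mean.
N = 1_000_000
total_unequal = 1_000.0 + (N - 1) * 0.01
mean_unequal = total_unequal / N
equal_level = 1.001 * mean_unequal          # still just above zero
total_equal = N * equal_level
premise_applies = total_equal > total_unequal and equal_level > mean_unequal

print(neutral_case, negative_case)
print(f"equal level {equal_level:.4f}, premise applies: {premise_applies}")
```

In the second case the equal world meets every condition of non-anti-egalitarianism, so anyone who judges the unequal world better there is rejecting that premise rather than escaping it.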

Acknowledgements

Thanks to Michael St. Jules, Pablo Stafforini, and Ramiro.

[1] Signing in to a Google account is required to do this, which ensures only one set of answers per person. If you do not have a Google account, and do not want to create one, I am happy to receive a private message with your answers.

I am very strongly attached to both dominance addition and non-anti-egalitarianism. If I were to reject a premise, it would probably be transitivity, though I think there are very strong structural reasons to accept transitivity, as well as modestly strong principled ones. In all cases of intransitive values I am aware of, what you care about in a world depends on which other world it is being compared to. If this is necessarily the case for intransitive values, they require a sort of extrinsic valuation that I am very averse to, and this aversion is also crucial to my strong acceptance of dominance addition.

In practice, a more realistic way I reject the repugnant conclusion is on a non-ethical level. I don't think my aversion to the repugnant conclusion (which is actually not nearly as strong as my aversion to other principled results of my preferred views, like those of pure aggregation) is sensitive to strong moral reasons at all. For me, rejecting many of these implications is part of a different sort of project altogether from principled ethics. I don't believe even the best principled arguments could persuade me of these conclusions, and so I don't think I arrived at my rejection of them because of such principles on any level either.

I think many people find views like this to be a sort of cop-out, but I think arriving at moral rules in a way that more closely resembles running linear regressions on case-specific intuitions degrades an honest appreciation of both moral principles and my own psychology. I see no reason to expect a sufficiently comfortable convergence between the best versions of the two.