Survey

I think it would be interesting to know how confident EA Forum readers are in the Repugnant Conclusion and each of its premises (see next section). For that purpose, I encourage you to fill in this form, where you can assign a probability to each being true in principle. Feel free to share your rationale in the comments below.

Please participate regardless of the extent to which you accept/reject the Repugnant Conclusion, such that the answers are as representative as possible. I recommend the following procedure:

- Firstly, establish priors for your probabilities based solely on the description of the Repugnant Conclusion, its premises and your intuition.

- Secondly, update your probabilities based on further investigation (e.g. reading this and this, and looking into the comments below).

You can edit your submitted answers as many times as you want[1]. I plan to publish the results in 1 to 2 months.

Repugnant Conclusion and its premises

“The Repugnant Conclusion is the implication, generated by a number of theories in population ethics, that an outcome with sufficiently many people with lives just barely worth living is better than an outcome with arbitrarily many people each arbitrarily well off”. In Chapter 8 of What We Owe to the Future (WWOF; see section “The Total View”), William MacAskill explains that it follows from 3 premises:

- Dominance addition. “If you make everyone in a given population better off while at the same time adding to the world people with positive wellbeing, then you have made the world better”.

- Non-anti-egalitarianism. “If we compare two populations with the same number of people, and the second population has both greater average and total wellbeing, and that wellbeing is perfectly equally distributed, then that second population is better than the first”.

- Transitivity. “If one world is better than a second world, which itself is better than a third, then the first world is better than the third”.

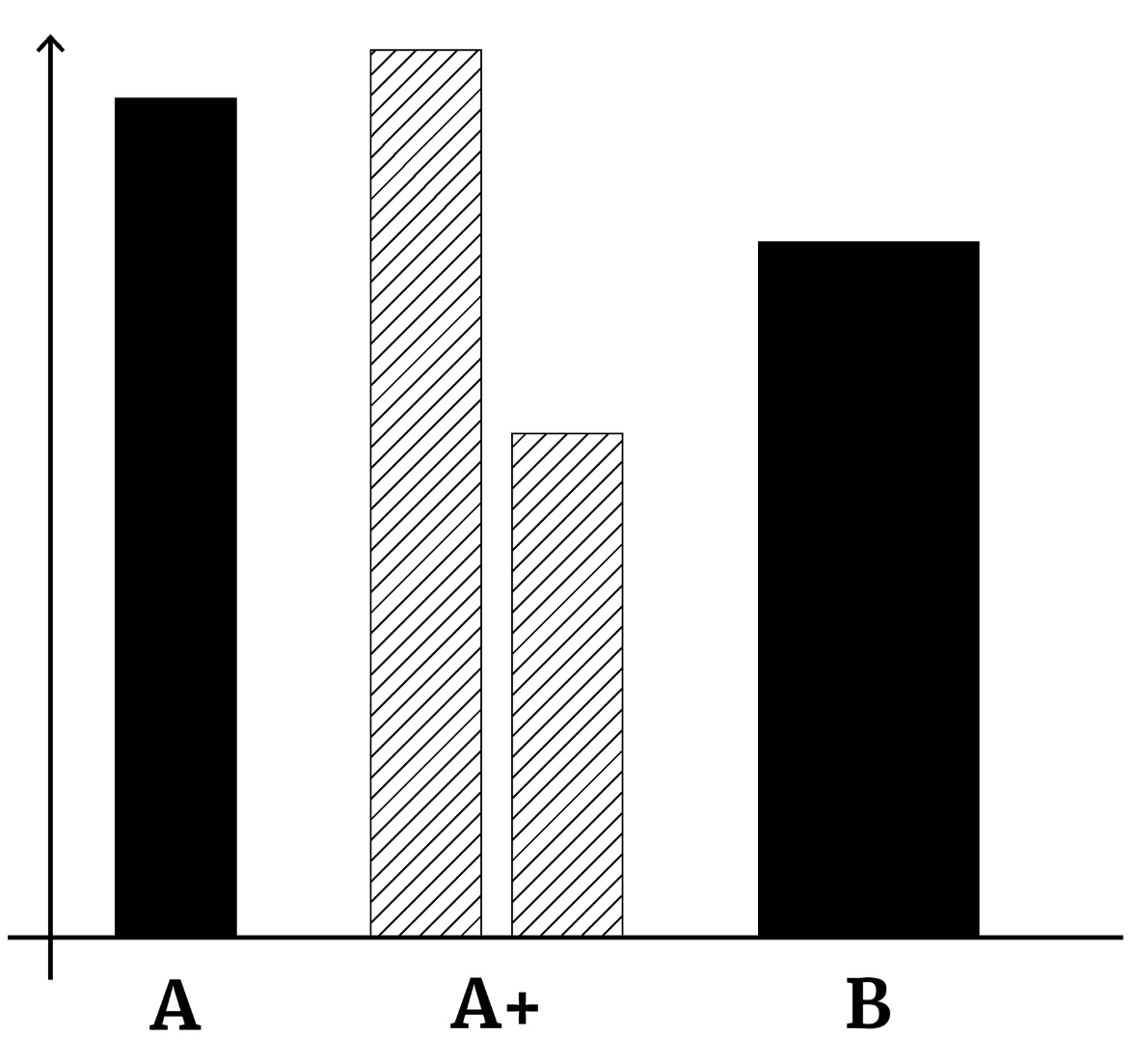

Figure 8.7 of WWOF helps visualise the effect of the first 2 premises via the following worlds:

- World A:

- “A world of ten billion people who all live wonderful lives of absolute bliss and flourishing”.

- World A+:

- “The ten billion people in A+ have even better lives than those in A, and the total population is larger: in A+ there are an additional ten billion people who have pretty good lives, though much less good than the other ten billion people’s”.

- World B:

- “In this world, there are the same number of people as in A+. But there is no longer any inequality; everyone has the same level of wellbeing. What’s more, in World B, the average and total wellbeing are greater than those of World A+”.

If dominance addition is true, A+ is better than A. If non-anti-egalitarianism is true, B is better than A+. Consequently, if transitivity is also true, B is better than A. This logic can be repeated indefinitely until arriving at a world Z where there is a very large population with very low positive wellbeing.
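The chain from A to Z can be sketched numerically. The snippet below is only an illustration under assumptions that are not in WWOF: wellbeing is treated as a real number on a common scale, World A starts at an arbitrary level of 100, each dominance-addition step raises the original people's wellbeing by 1% and adds an equally large group at half the original level, and each equalising step sets everyone to 0.5% above the previous average. All function names are hypothetical.

```python
# A world is a list of (population_in_billions, wellbeing) groups.
# All numbers are illustrative assumptions, not figures from WWOF.

def total(world):
    return sum(n * w for n, w in world)

def population(world):
    return sum(n for n, _ in world)

def average(world):
    return total(world) / population(world)

def dominance_addition_step(world):
    """From an equal world, build an 'A+'-style world: the existing people
    become 1% better off and an equally large group is added at half the
    original wellbeing level (still positive)."""
    (n, w), = world
    return [(n, 1.01 * w), (n, 0.5 * w)]

def equalising_step(world):
    """Build a 'B'-style world: same population, perfectly equal wellbeing,
    set 0.5% above the previous average (so the total is higher too)."""
    return [(population(world), 1.005 * average(world))]

def satisfies_dominance_addition(old, new):
    # Assumes `old` is a single group and the first group of `new`
    # contains the same people as `old`.
    (n_old, w_old), = old
    (n_kept, w_kept), *added = new
    return (n_kept == n_old and w_kept > w_old
            and len(added) > 0 and all(w > 0 for _, w in added))

def satisfies_non_anti_egalitarianism(old, new):
    return (population(new) == population(old)
            and len({w for _, w in new}) == 1
            and total(new) > total(old)
            and average(new) > average(old))

world_a = [(10, 100.0)]  # World A: 10 billion people at wellbeing 100
world = world_a
rounds = 0
while world[0][1] > 1.0:  # stop once wellbeing is "barely positive"
    plus = dominance_addition_step(world)
    assert satisfies_dominance_addition(world, plus)
    nxt = equalising_step(plus)
    assert satisfies_non_anti_egalitarianism(plus, nxt)
    world = nxt
    rounds += 1

print(rounds, population(world), world[0][1], total(world))
```

Under these assumed numbers, every step satisfies the condition of the relevant premise, the population doubles each round, and wellbeing per person shrinks towards zero while total wellbeing keeps growing. Transitivity is what links the individual steps into the claim that the final world is better than World A.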

Accepting all of the premises does not imply accepting the total view, which “regards one outcome as better than another if and only if it contains greater total wellbeing, regardless of whether it does so because people are better off or because there are more well-off people”. For example, the Very Repugnant Conclusion does not follow from the above premises.

However, as each of the above premises is implied by the total view, rejecting at least one implies rejecting the total view.

My strongest objections to the premises

I believe all of the premises are close to indisputable, but my strongest objections are:

- Dominance addition:

- Critical range theory (see here): “adding an individual makes an outcome better to the extent that their wellbeing exceeds the upper end of a critical range, and makes an outcome worse to the extent that their wellbeing falls below the lower limit of the critical range”. Under these conditions, adding to the world people with sufficiently low positive wellbeing does not change the goodness of the world.

- Non-anti-egalitarianism:

- Increasing the mean wellbeing per being only makes the world better if the final mean wellbeing per being is sufficiently high. Consequently, a world with one being with very high wellbeing and N - 1 beings with wellbeing just above zero may be better than a world with N beings with wellbeing just above zero.

- All (including transitivity):

Acknowledgements

Thanks to Michael St. Jules, Pablo Stafforini, and Ramiro.

[1] Signing in to a Google account is required to do this, which also ensures only one set of answers per person. If you do not have a Google account, and do not want to create one, I am happy to receive a private message with your answers.

My main objections are:

1. The Independence of Irrelevant Alternatives seems possibly false. Whether the move from A to A+ improves things depends on whether or not B is available. If B is available, then A>A+, and otherwise A<A+ (assuming no other options). The creation of the extra people in A+ creates further obligations if and only if there are options available in which they are better off. The extra people are owed more if and only if they could have been better off (possibly in a wide/nonidentity sense). This cuts against Dominance Addition and Transitivity, as stated, although note that rejecting IIA is compatible with transitivity within each option set, and DA in all option sets of size 2 and many others, just not all option sets.

2. The aggregation required in Non-anti-egalitarianism seems possibly false. It would mean it’s better to bring someone down from a very high welfare life to a marginal life for a barely noticeable improvement to arbitrarily many other individuals. Maybe this means killing or allowing to die earlier to marginally benefit a huge number of people. Even from a selfish POV, everyone may be willing to pay the tiny cost for even a tiny and almost negligible chance of getting out of the marginal range (although they would need to be "risk-loving" or there would need to be value lexicality). The aggregation also seems plausibly false within a life: suppose each moment of your very long life is marginally good, except for a small share of excellent moments. Would you replace your excellent moments with marginally good ones to marginally improve all of the marginal ones and marginally increase your overall average and total welfare?

3. The aggregation required in NAE depends on welfare being cardinally measurable on a common scale across all individuals so that we can take sums and averages, which seems possibly false.

4. It's assumed there is positive welfare and net positive lives, contrary to negative axiologies, like antifrustrationism or negative utilitarianism. Under negative axiologies, the extra lives are either already perfect, so the move from A+ to B doesn't make sense, or they are very bad to add in aggregate, and plausibly outweigh the benefits to the original population in A in moving to A+, so A>A+. (Of course, there may be other repugnant conclusions for negative axiologies.)

Also, I just remembered that the possibility of positive value lexicality means that these three axioms alone do not imply the RC, because Non-anti-egalitarianism wouldn't be applicable, as the unequal population with individuals past the lexical threshold has greater total and average welfare (in a sense, infinitely more). You need to separately rule out this kind of lexicality with another assumption (e.g. welfare is represented by (some subset of) the real numbers with the usual order and the usual operation of addition used to take the total and average), or replace NAE with something slightly different.

EDIT: Replaced "plausibly" with "possibly" to be clearer.

Re 2: Your objection to non-anti-egalitarianism can easily be chalked up to scope neglect.

World A - One person with an excellent life plus 999,999 people with neutral lives.

World B - 1,000,000 people with just above neutral lives.

Let's use the veil of ignorance.

Would you prefer a 100% chance of a just above neutral life or a 1 in a million chance of an excellent life with a 99.9999% chance of a neutral life? I would definitely prefer the former.
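As a rough sketch of this comparison, assuming wellbeing can be put on a single numerical scale: the values 100 for an excellent life, 0 for a neutral life and 0.001 for a just above neutral life are made up for illustration, and the variable names are hypothetical.

```python
from fractions import Fraction

N = 1_000_000                            # people in each world
excellent = Fraction(100)                # assumed value of an excellent life
neutral = Fraction(0)                    # neutral life
just_above_neutral = Fraction(1, 1000)   # assumed value, slightly positive

# Expected wellbeing behind the veil of ignorance, with an equal chance
# of being any one of the N people in the world.
expected_world_a = Fraction(1, N) * excellent + Fraction(N - 1, N) * neutral
expected_world_b = just_above_neutral

print(expected_world_a, expected_world_b)
```

With these assumed values, World B has the higher expected wellbeing, which is the same as saying it has the higher average and total. The comparison flips if an excellent life is worth more than N just above neutral lives, but in that case World B would no longer have greater total wellbeing, so non-anti-egalitarianism would not apply to the pair.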

Here is an alternative argument.

Surely, it would be moral to decrease the wellbeing of a happy person from ...