Abstract

Once AI systems can design and build even more capable AI systems, we could see an intelligence explosion, where AI capabilities rapidly increase to well past human performance.

The classic intelligence explosion scenario involves a feedback loop where AI improves AI software. But AI could also improve other inputs to AI development. This paper analyses three feedback loops in AI development: software, chip technology, and chip production. These could drive three types of intelligence explosion: a software intelligence explosion driven by software improvements alone; an AI-technology intelligence explosion driven by both software and chip technology improvements; and a full-stack intelligence explosion incorporating all three feedback loops.

Even if a software intelligence explosion never materializes or plateaus quickly, AI-technology and full-stack intelligence explosions remain possible. And, while these would start more gradually, they could accelerate to very fast rates of development. Our analysis suggests that each feedback loop by itself could drive accelerating AI progress, with effective compute potentially increasing by 20-30 orders of magnitude before hitting physical limits—enabling truly dramatic improvements in AI capabilities. The type of intelligence explosion also has implications for the distribution of power: a software intelligence explosion would by default concentrate power within one country or company, while a full-stack intelligence explosion would be spread across many countries and industries.

Summary

Once AI systems can themselves design and build even more capable AI systems, progress in AI might accelerate, leading to a rapid increase in AI capabilities. This is known as an intelligence explosion (“IE”).

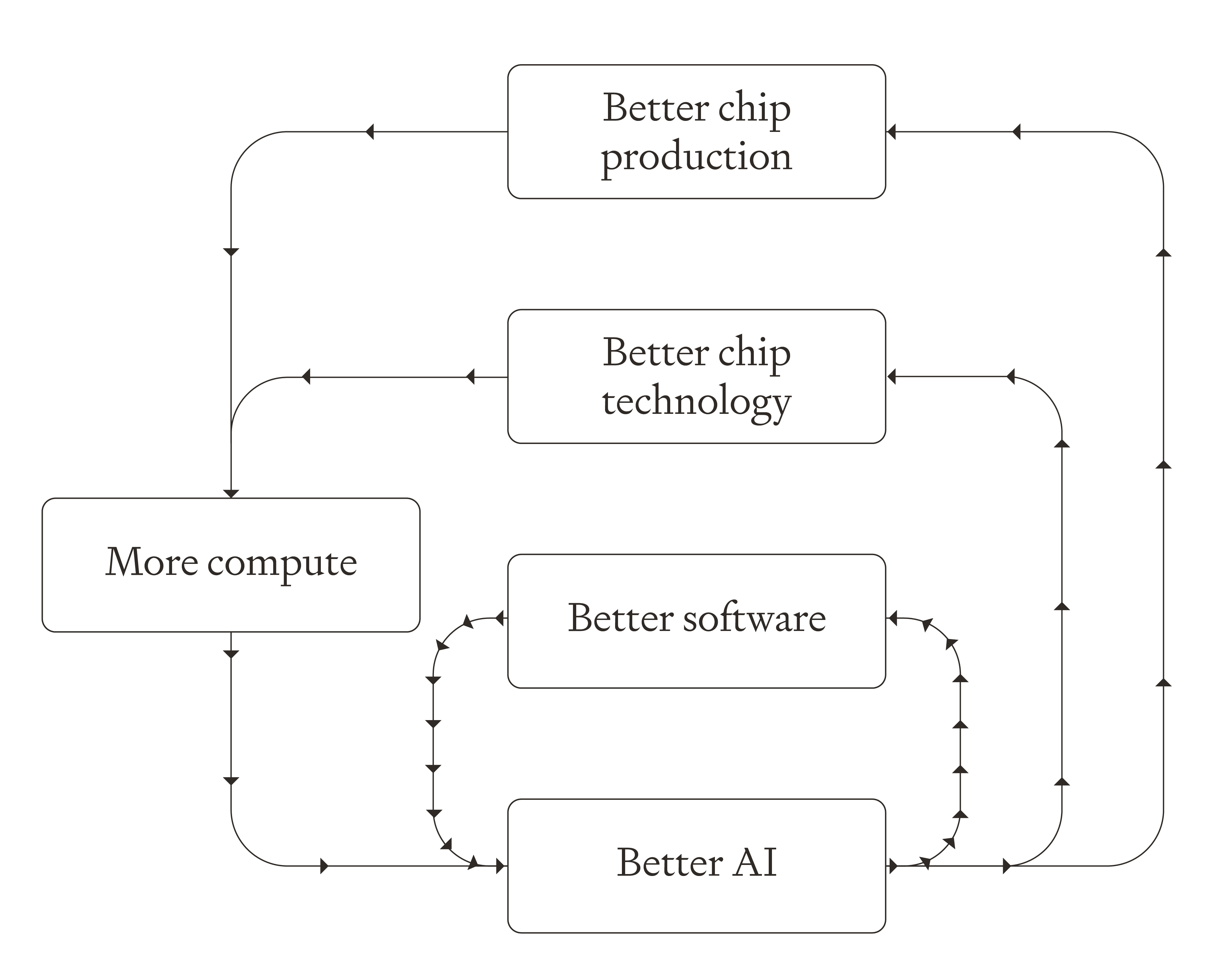

The classic IE scenario involves a feedback loop in AI software, with AI designing better software that enables more capable AI that designs even better software, and so on. But there are many parts of AI development which could lead to a positive feedback loop. We identify:

- A software feedback loop, where AI develops better software. Software includes AI training algorithms, post-training enhancements, ways to leverage runtime compute (like o3), synthetic data, and any other non-compute improvements.

- A chip technology feedback loop, where AI designs better computer chips. Chip technology includes all the cognitive research and design work done by NVIDIA, TSMC, ASML, and other semiconductor companies.

- A chip production feedback loop, where AI and robots build more computer chips.

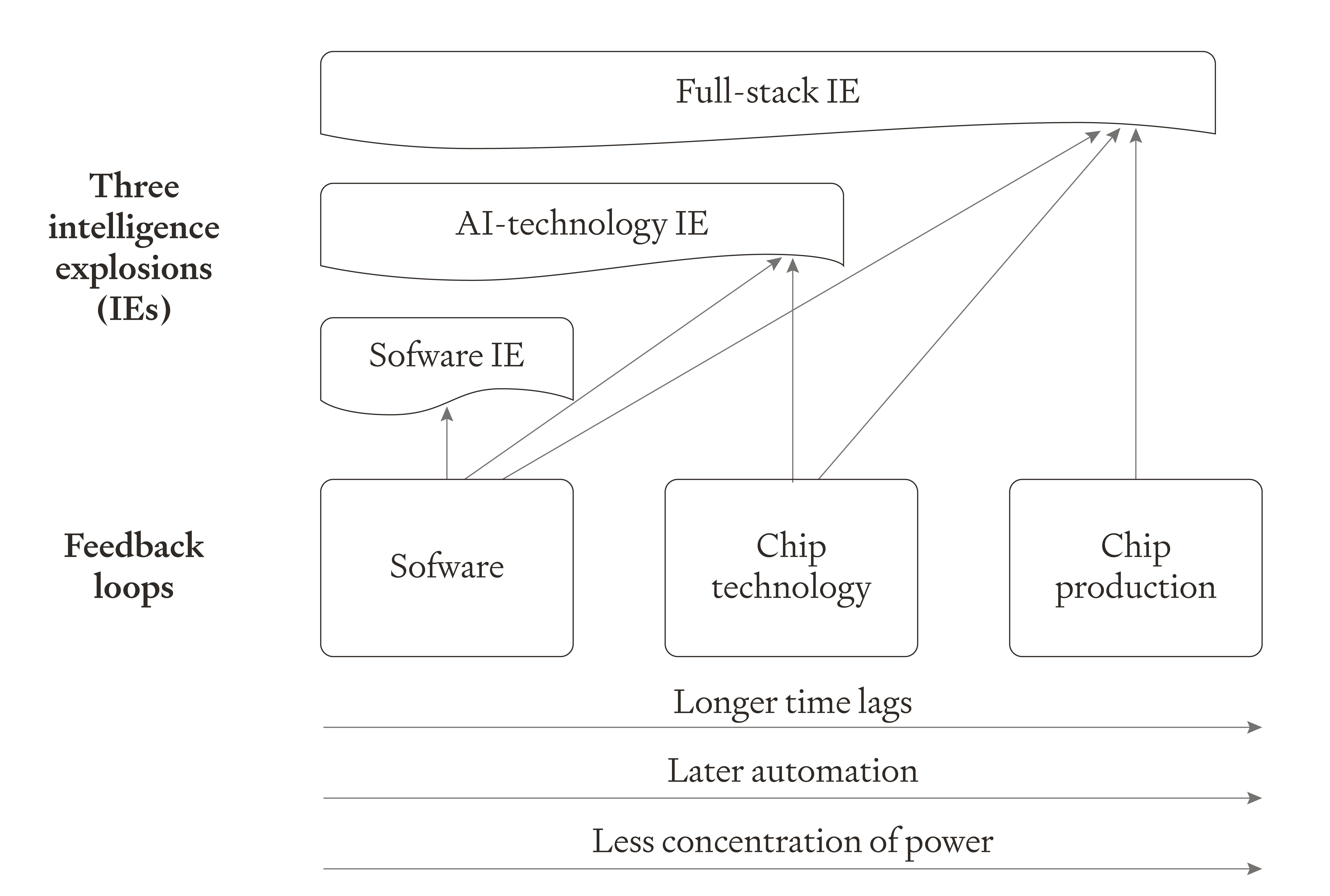

The software loop will likely be automated first and has the shortest time lags (training new AI models), while the chip production loop will likely be automated last and has the longest time lags (building new fabs). These feedback loops could drive three different types of IE:

- A software IE, where AI-driven software improvements alone are sufficient for rapid and accelerating AI progress.

- An AI-technology IE, where AI-driven improvements in both software and chip technology are needed, but AI-driven improvements in chip production are not.

- A full-stack IE, where AI-driven improvements in all of software, chip technology and chip production are needed.

Crucially, even if the software feedback loop is not powerful enough to drive a software IE, we could still see an AI-technology or full-stack IE.

An IE is more likely if progress accelerates after full automation. We think, based on empirical evidence about diminishing returns, that the software and AI-technology IEs are more likely to accelerate than not, and that a full-stack IE is very likely to accelerate eventually.

An IE will be bigger and faster if effective physical limits are further away. We estimate that, before hitting limits, the software feedback loop could increase effective compute by ~13 orders of magnitude (“OOMs”), the chip technology loop by a further ~6 OOMs, and the chip production loop by a further ~5 OOMs (and by another 9 OOMs if we capture all the sun’s energy from space).
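To make the stacking of these estimates concrete, here is a minimal sketch that adds up the quoted point estimates. It assumes the loops' gains compound multiplicatively in effective compute, so they add in OOMs; the stage labels and the function name `cumulative_ooms` are illustrative and not from the paper.

```python
# Point estimates of headroom per feedback loop, in orders of magnitude (OOMs)
# of effective compute, as quoted in the summary. Labels are illustrative.
HEADROOM_OOMS = [
    ("software", 13),
    ("chip technology", 6),
    ("chip production", 5),
    ("chip production with all solar energy captured from space", 9),
]


def cumulative_ooms(stages):
    """Return (label, running total of OOMs) for each stage in order.

    Assumes gains multiply in effective compute, i.e. add in log10 space.
    """
    total = 0
    running = []
    for label, ooms in stages:
        total += ooms
        running.append((label, total))
    return running


for label, total in cumulative_ooms(HEADROOM_OOMS):
    print(f"after {label}: ~{total} OOMs (a factor of about 1e{total})")
```

Under this additive assumption, the three loops sum to ~24 OOMs, and to ~33 OOMs if the space-based solar figure is included. These are sums of rough point estimates, so they should be read as order-of-magnitude guides only.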

If the recent relationship between increasing effective compute and increasing capabilities continues to hold, this would be equivalent to ~4 “GPT-sized” jumps in capabilities from software (i.e. 4 jumps as large as the jump from GPT-2 to GPT-3, or GPT-3 to GPT-4), a further ~2 GPTs from chip technology, and a further ~2-5 GPTs from chip production.[1]
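As a rough consistency check on these figures, the sketch below back-calculates how many OOMs of effective compute each quoted estimate implies per GPT-sized jump. It does not reproduce the paper's own conversion, which is not given in this summary. It also assumes that the "~2-5 GPTs" range for chip production pairs ~2 GPTs with 5 OOMs and ~5 GPTs with 5 + 9 = 14 OOMs; that pairing is an interpretation, not something the text states.

```python
# (label, OOMs of effective compute, GPT-sized jumps) as quoted in the summary.
# The last row is an assumed reading: ~5 GPTs paired with 5 + 9 = 14 OOMs.
ESTIMATES = [
    ("software", 13, 4),
    ("chip technology", 6, 2),
    ("chip production", 5, 2),
    ("chip production incl. space solar", 14, 5),
]


def implied_ooms_per_gpt(ooms, gpt_jumps):
    """OOMs of effective compute implied per GPT-sized capability jump."""
    return ooms / gpt_jumps


RATIOS = {label: implied_ooms_per_gpt(o, g) for label, o, g in ESTIMATES}

for label, ratio in RATIOS.items():
    print(f"{label}: ~{ratio:.2f} OOMs per GPT-sized jump")
```

The implied ratios fall between 2.5 and 3.25 OOMs per jump, so the rounded figures are mutually consistent with a conversion of roughly 3 OOMs per GPT-sized jump. The inputs are rounded, so small differences between rows should not be over-interpreted.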

These IEs differ in their strategic implications. A software IE would be most likely to occur first in the US, with power strongly concentrated in the hands of the owners of AI chips and algorithms. An AI-technology IE would most likely involve the US and some other countries in the semiconductor supply chain like Taiwan, South Korea, Japan, and the Netherlands, with power more broadly distributed among the owners of AI algorithms, AI chips and the semiconductor supply chain. Compared to the other two IEs, a full-stack IE may be more likely to heavily involve countries like China and the Gulf states, which have a strong industrial base and a more permissive regulatory environment. A full-stack IE would also distribute power more broadly across the industrial base.

Great set of posts (including the 'how far' and 'how sudden' related ones). I only skimmed the parts I had read drafts of, but still have a few comments, mostly minor:

1. Accelerating progress framing

I am a bit skeptical of this definition, both because it is underspecified and because I'm not sure it is pointing at the most important thing.

2. Max rate of change

It would be good to flag in the main text that the justification for this is in Appendix 2 (initially I thought it was a bare assertion). Also, it is interesting that in @kokotajlod's scenario the 'wildly superintelligent' AI maxes out at 1 million-fold AI R&D speedup; I commented to them on a draft that this seemed implausibly high to me. I have no particular take on whether 100x is too low or too high as the theoretical max, but it would be interesting to work out why there is this Forethought vs AI Futures difference.

3. Error in GPT OOMs calculations

4. Physical limits

Regarding the effective physical limits of each feedback loop, perhaps it is worth noting that your estimates are very well grounded and high-confidence for the chip production feedback loop, as we know more or less exactly the energy output of the sun. But the other two are super speculative. That is fine; they are just quite different types of estimates, so we should remember to rely far less on the software and chip technology ones.

5. End of the transition period

(Finally, Fn2 is missing a link.)

Interesting. Some thoughts: