The winners of the ‘Essays on Longtermism’ competition are:

First place:

- Aaron Bergman, for Utilitarians Should Accept that Some Suffering Cannot be “Offset” - $1000 prize

Joint second place[1]:

- Arepo, for Fruit-picking as an existential risk - $500 prize

- David Goodman, for Information Preservation as a Longtermist Intervention - $500 prize

We had 67 entries, many of which are underrated. You can read all of the entries here.

Summaries

Utilitarians Should Accept that Some Suffering Cannot be “Offset”

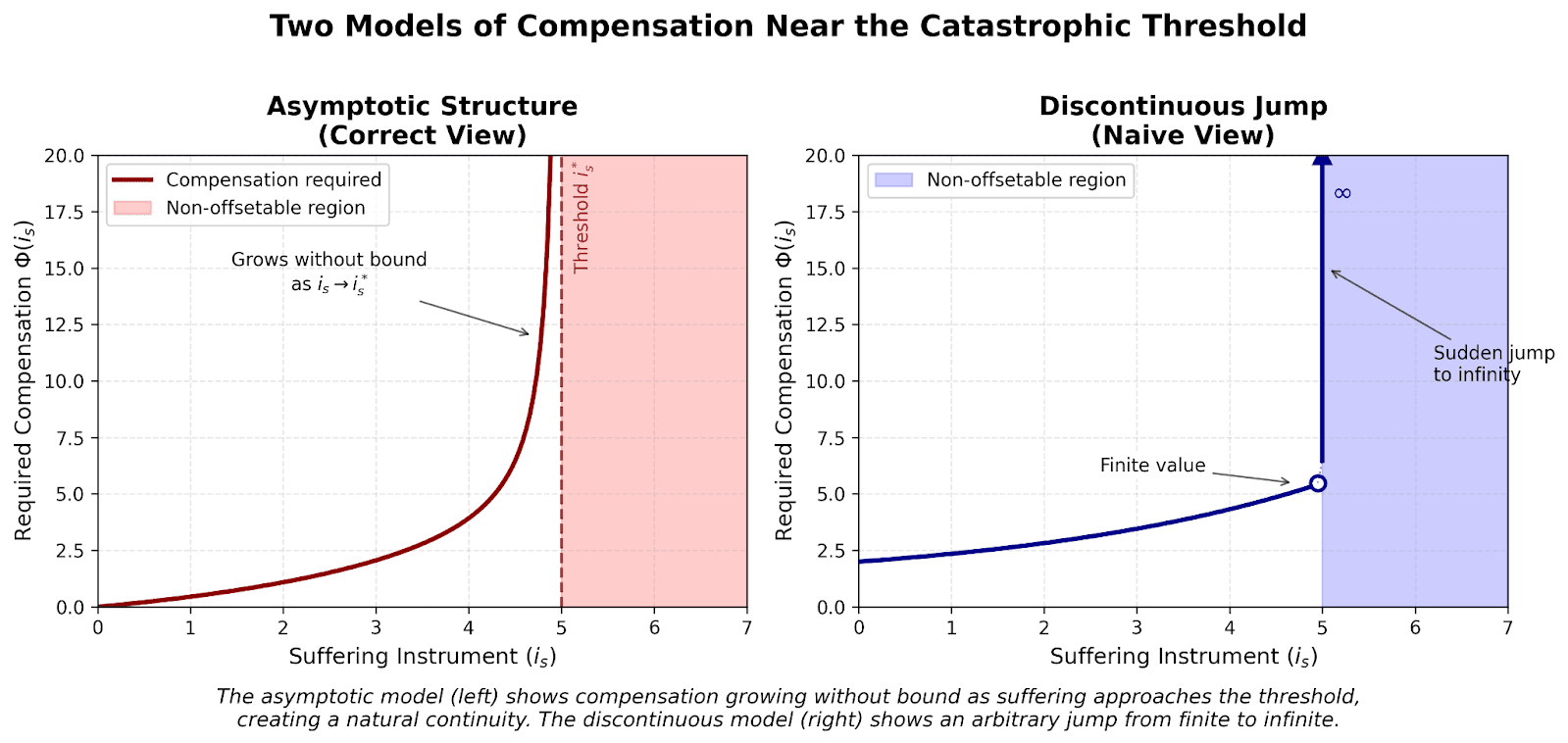

Read the post

Summary: @Aaron Bergman’s essay argues against the idea that welfare can be represented on a single real-number scale on which any amount of suffering can be traded off against enough pleasure.

The essay argues two points. First, standard utilitarian commitments do not logically require the view that any suffering can be compensated by enough happiness. Second, once that premise is questioned, it becomes plausible that some extreme suffering is morally non-offsetable — no amount of happiness elsewhere can justify creating it.

He suggests that as suffering approaches a threshold of extremity, the “compensation curve” (the amount of happiness needed to offset it) may rise without bound, making lexicality a natural limit rather than an arbitrary jump.

If so, longtermists should shift from maximizing future happiness to preventing lock-in of catastrophic suffering and s-risks.

Fruit-picking as an existential risk

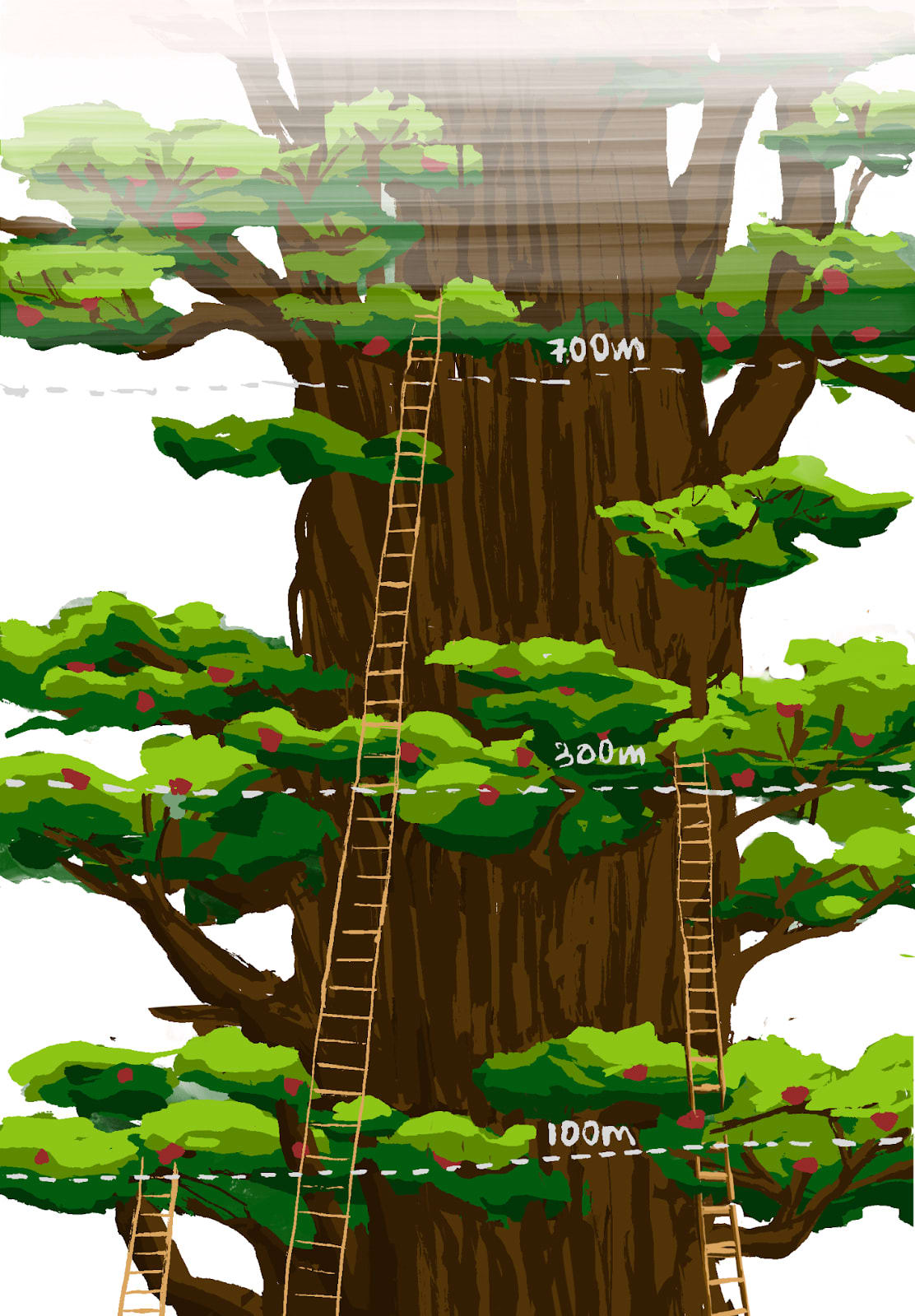

Read the post

Summary: @Arepo’s essay argues that longtermists should aim to secure much longer and much better futures rather than merely avoid extinction. He uses fruit-picking as a metaphor for consuming non-renewable resources, whose depletion could permanently reduce our chances of achieving the best futures.

With fewer resources to fuel growth, successor civilisations would have to climb the same technological ladder with worse inputs, lengthening their time in the danger zone and increasing their cumulative risk of collapse.

On plausible assumptions, a single civilisational collapse could cut humanity's potential almost as much as extinction itself.

This shifts priorities: focus more on preventing or softening sub-extinction catastrophes, aim explicitly at becoming stably interstellar rather than merely surviving on Earth, and value technological and economic acceleration that shortens the time of perils and conserves resources.

Information Preservation as a Longtermist Intervention

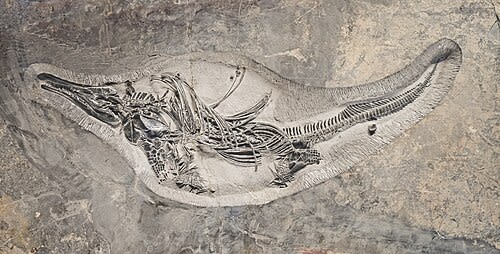

Read the post

Summary: @David Goodman's post argues that longtermists should invest in systematically recording information that would otherwise be unavailable in the future.

The basic argument is that some information, such as the genome of a species that will go extinct, is gone forever once lost. Any piece of information could prove intrinsically or instrumentally valuable over the long course of the future, so each piece of 'saved' information would provide a small but very long-lasting benefit.

Gratitude

Thanks to our winners, for working so hard on these original and well-reasoned blog posts!

Thanks to everyone else who entered, for all their hard work. You can read all of their essays here.

Thanks to our judges, Will MacAskill, Hilary Greaves, Jacob Barrett, David Thorstad, Kuhan Jeyapragasan and Eva Vivalt. They carefully reviewed every entry I sent them, and made the process very simple from my end. Also, they're all very busy people, and I'm massively grateful for the time they made to help this competition run so smoothly.

[1] After three judges had reviewed and scored the essays, the two essays had identical scores. I decided to award a joint second rather than hold another round of judging (the EV of rejudging was the same as getting $500).

Wow, this is super exciting and thanks so much to the judges! ☺️

An interesting dynamic around this competition was that the promise of the extremely cracked + influential judging team reading (and implicitly seriously considering) my essay was a much stronger incentive for me to write/improve it than the money (which is very nice, don’t get me wrong).[1]

I’m not sure what the implications of this are, if any, but it feels useful to note this explicitly as a type of incentive that could be used to elicit writing/research in the future.

[1] Insofar as I’m not totally deluding myself, I mean the altruistic impact of some chance of shaping the judges’ views, as opposed to the possibility of seeming mildly clever to some high-status figures.

Congrats Aaron!

Kudos to whoever wrote these summaries. They give a great sense of the contents and, at least with mine, capture the essence of it much more succinctly than I could!

Cheers! That was me + GPT

That illustration by Siao Si Looi is beautiful, just like the overall post by Arepo. Extinction automatically gets offset if we care more about securing better futures in the near term.