Introduction to the Digital Consciousness Model (DCM)

Artificially intelligent systems, especially large language models (LLMs) used by almost 50% of the adult US population, have become remarkably sophisticated. They hold conversations, write essays, and seem to understand context in ways that surprise even their creators. This raises a crucial question: Are we creating systems that are conscious?

The Digital Consciousness Model (DCM) is a first attempt to assess the evidence for consciousness in AI systems in a systematic, probabilistic way. It provides a shared framework for comparing different AIs and biological organisms, and for tracking how the evidence changes over time as AI develops. Instead of adopting a single theory of consciousness, it incorporates a range of leading theories and perspectives—acknowledging that experts disagree fundamentally about what consciousness is and what conditions are necessary for it.

Here, we present some of the key initial results of the DCM. The full report is now available here.

We will be hosting a webinar on February 10 to discuss our findings and answer audience questions. You can find more information and register for that event here.

Why this matters

It is important to assess whether AI systems might be conscious in a way that takes seriously both the many different views about what consciousness is and the specific details of these systems. Even though our conclusions remain uncertain, it's worth trying to estimate, as concretely as we can, the probability that AI systems are conscious. Here are the reasons why:

- As AI systems become increasingly complex and sophisticated, many people (experts and laypeople alike) find it increasingly plausible that these systems may be phenomenally conscious—that is, they have experiences, and there is something that it feels like to be them.

- If AIs are conscious, then they likely deserve moral consideration, and we risk harming them if we do not take precautions to ensure their welfare. If AIs are not conscious but are believed to be, then we risk giving unwarranted consideration to entities that don’t matter at the expense of individuals who do (e.g., humans or other animals).

- Having a probability estimate that honestly reflects our uncertainty can help us decide when to take precautions and how to manage risks as we develop and use AI systems.

- By tracking how these probabilities change over time, we can forecast what future AI systems will be like and when important thresholds may be crossed.

Why estimating consciousness is a challenging task

Assessing whether AI systems might be conscious is difficult for three main reasons:

- There is no scientific or philosophical consensus about the nature of consciousness and what gives rise to it. There is widespread disagreement over existing theories, and these theories make very different predictions about whether AI systems are or could be conscious.

- Existing theories of consciousness were developed to describe consciousness in humans. It is often unclear how to apply them to AI systems or even to other animals.

- Although we are learning more about how AI systems work, there is still much about their inner workings that we do not fully understand, and the technology is changing rapidly.

How the model works

Our model is designed to help us reason about AI consciousness in light of our significant uncertainties.

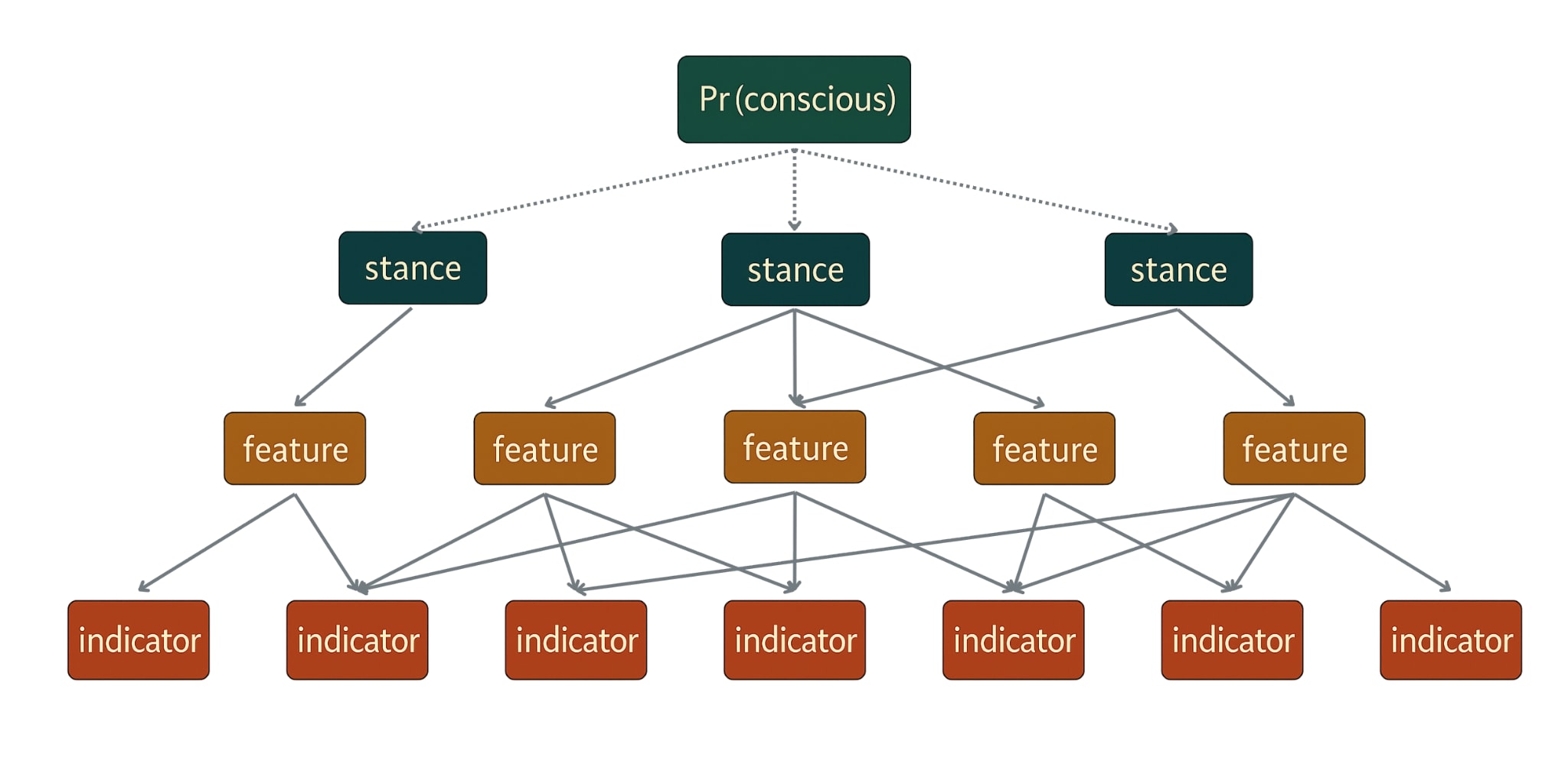

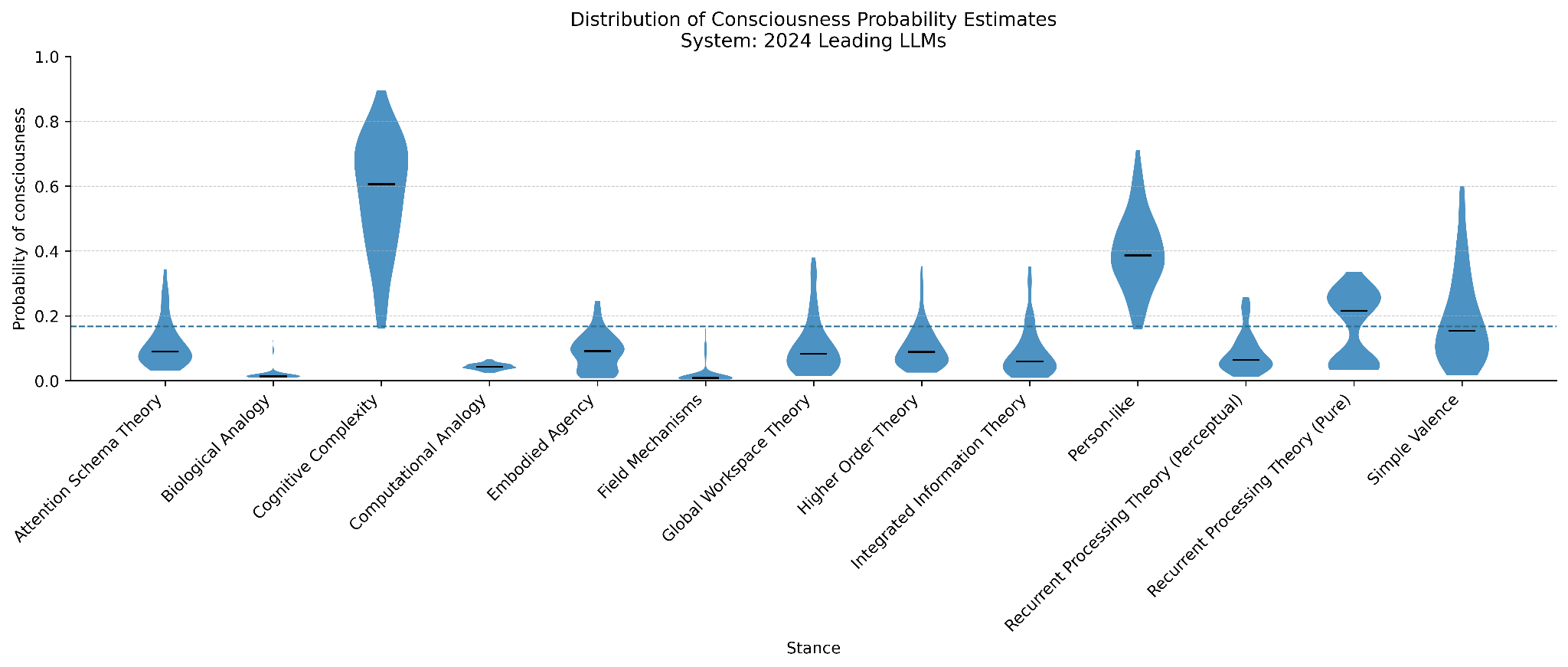

- We evaluate the evidence from the perspective of 13 diverse stances on consciousness, including the best scientific theories of consciousness as well as more informal perspectives on when we should attribute consciousness to a system. We report what each perspective concludes, then combine these conclusions based on how credible experts find each perspective.

- We identify a list of general features of systems that might matter for assessing AI consciousness (e.g., attention, complexity, or biological similarity to humans), which we use to characterize the general commitments of different stances on consciousness.

- We identify over 200 specific indicators: properties that a system could have that would give us evidence about whether it possesses features relevant to consciousness. These include facts about what systems are made of, what they can do, and how they learn.
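To make the aggregation step concrete, here is a minimal Python sketch of combining per-stance conclusions using expert-derived weights. It assumes a simple weighted average (a linear opinion pool); the report's actual aggregation may differ in detail. The stance names, probabilities, and weights are made up for illustration and are not results from the report.

```python
def pool_stances(probabilities, weights):
    """Weighted average of per-stance probabilities (a linear opinion pool)."""
    if probabilities.keys() != weights.keys():
        raise ValueError("each stance needs both a probability and a weight")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[s] * probabilities[s] for s in probabilities) / total_weight


# Hypothetical stances and numbers, for illustration only.
stance_probability = {"stance_a": 0.30, "stance_b": 0.05, "stance_c": 0.10}
expert_plausibility = {"stance_a": 6.0, "stance_b": 3.0, "stance_c": 1.0}

weighted = pool_stances(stance_probability, expert_plausibility)
unweighted = pool_stances(stance_probability, {s: 1.0 for s in stance_probability})

print(f"weighted pool:   {weighted:.3f}")
print(f"unweighted pool: {unweighted:.3f}")
```

Dividing by the total weight means the plausibility ratings do not need to be normalized first; only their relative sizes matter.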

We gathered evidence about what current AI systems and biological species are like and used the model to arrive at a comprehensive probabilistic evaluation of the evidence.

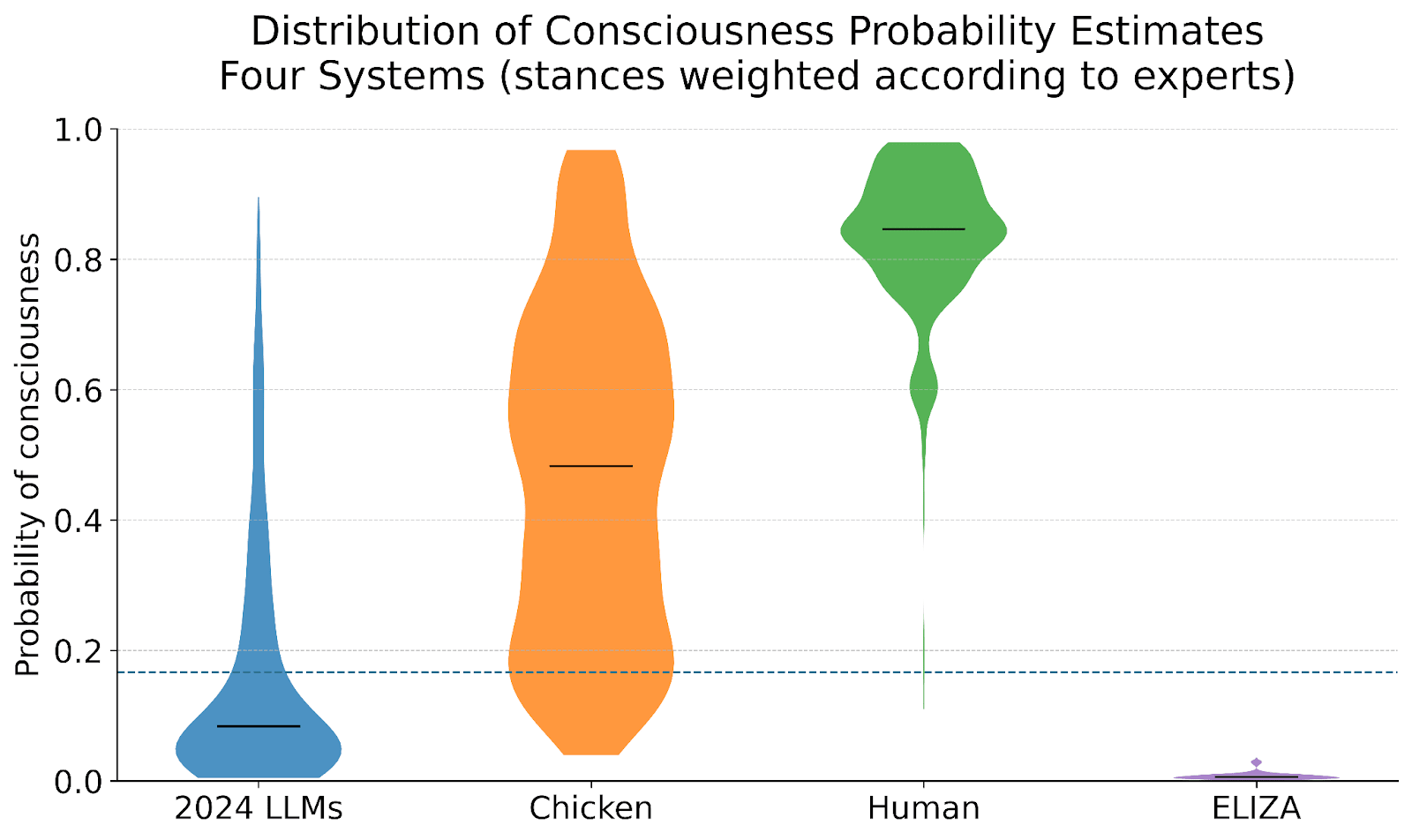

- We considered four systems: 2024 state-of-the-art LLMs (such as ChatGPT 4 or Claude 3 Opus); humans; chickens; and ELIZA (a very simple natural language processing program from the 1960s).

- We asked experts to assess whether these systems possess each of the 200+ indicator properties.

- We constructed a statistical model (specifically, a hierarchical Bayesian model) that uses indicator values to provide evidence for whether a system has consciousness-relevant features, and then uses these feature values to provide evidence for whether the system is conscious according to each of the 13 perspectives we included.
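The chain from indicators to features to stances can be illustrated with a deliberately simplified sketch. This is not the report's hierarchical Bayesian model. It is a toy log-odds propagation in which each level shifts the log-odds of the level above it. The function names, the `strength` and `support` parameters, the two features, and every number are assumptions made for illustration.

```python
import math


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1 - p))


def sigmoid(x):
    """Convert log-odds back to a probability."""
    return 1 / (1 + math.exp(-x))


def feature_probability(prior, indicator_answers, strength):
    """Indicator level: each answer is +1 (present), -1 (absent), or 0 (unknown).

    Every answer shifts the feature's log-odds by `strength` in its direction.
    """
    return sigmoid(logit(prior) + strength * sum(indicator_answers))


def stance_probability(prior, feature_probs, support):
    """Stance level: each feature shifts the log-odds of consciousness.

    A feature that is certainly present contributes +support[name], one that is
    certainly absent contributes -support[name], and p = 0.5 contributes nothing.
    """
    shift = sum(support[name] * (2 * p - 1) for name, p in feature_probs.items())
    return sigmoid(logit(prior) + shift)


# Hypothetical inputs, for illustration only.
PRIOR = 1 / 6
features = {
    "attention": feature_probability(0.5, [+1, +1, -1, 0], strength=0.8),
    "biological_similarity": feature_probability(0.5, [-1, -1, -1], strength=0.8),
}
# A made-up stance that weighs biology heavily and attention only a little.
support = {"attention": 0.5, "biological_similarity": 2.0}

print({name: round(p, 3) for name, p in features.items()})
print(round(stance_probability(PRIOR, features, support), 3))
```

A full hierarchical model would also carry uncertainty through each level rather than passing point values upward, so this sketch only conveys the direction in which evidence moves.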

How to interpret the results

The model produces probability estimates for consciousness in each system.

We want to be clear: we do not endorse these probabilities and think they should be interpreted with caution. We are much more confident about the comparisons the model allows us to make.

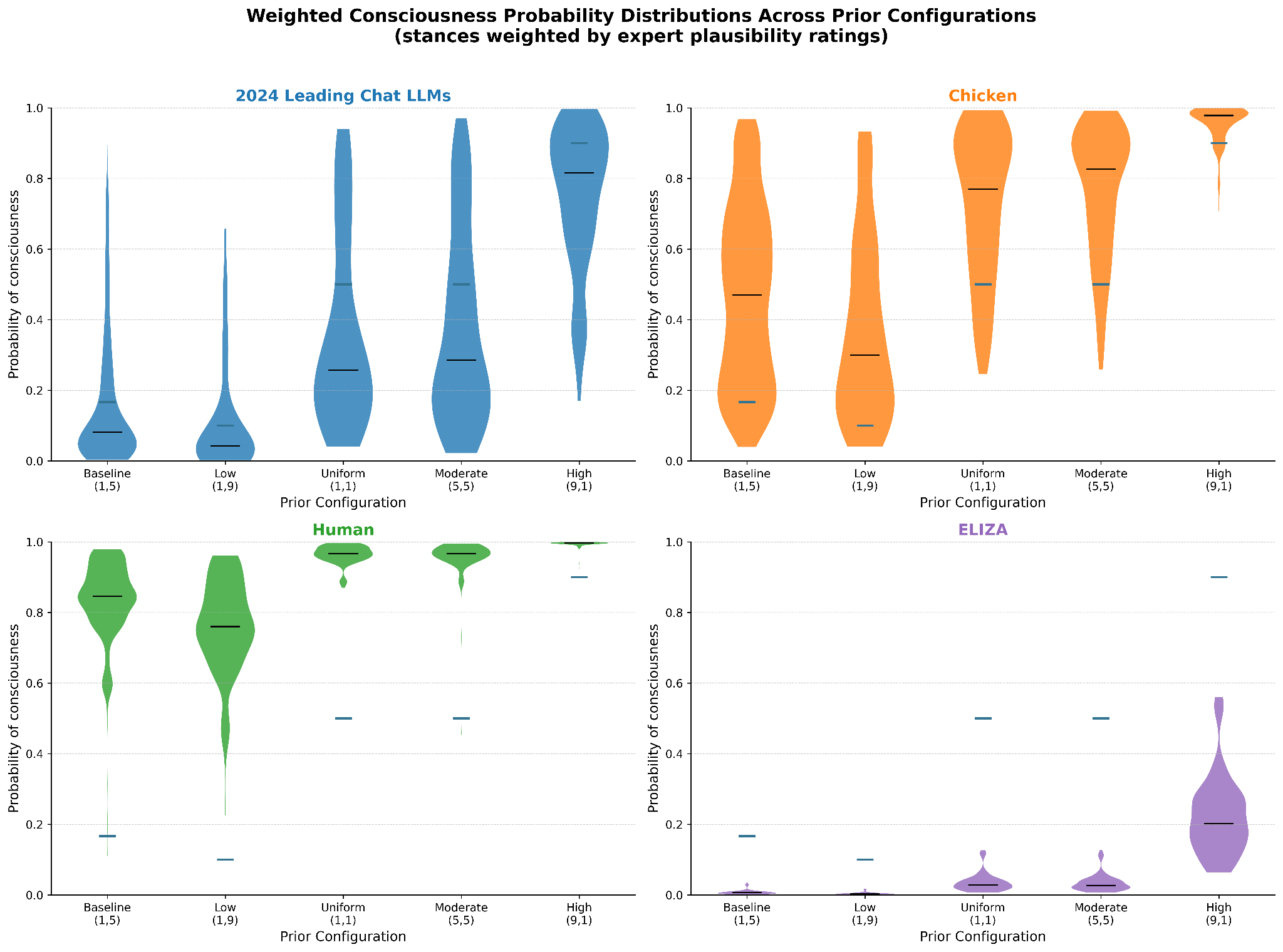

- Because the model is Bayesian, it requires a starting point—a "prior probability" that represents how likely we think consciousness is before looking at any evidence. The choice of a prior is often somewhat arbitrary and intended to reflect a state of ignorance about the details of the system. The final (posterior) probability the model generates can vary significantly depending on what we choose for the prior. Therefore, unless we are confident in our choices of priors, we shouldn’t be confident in the final probabilities.

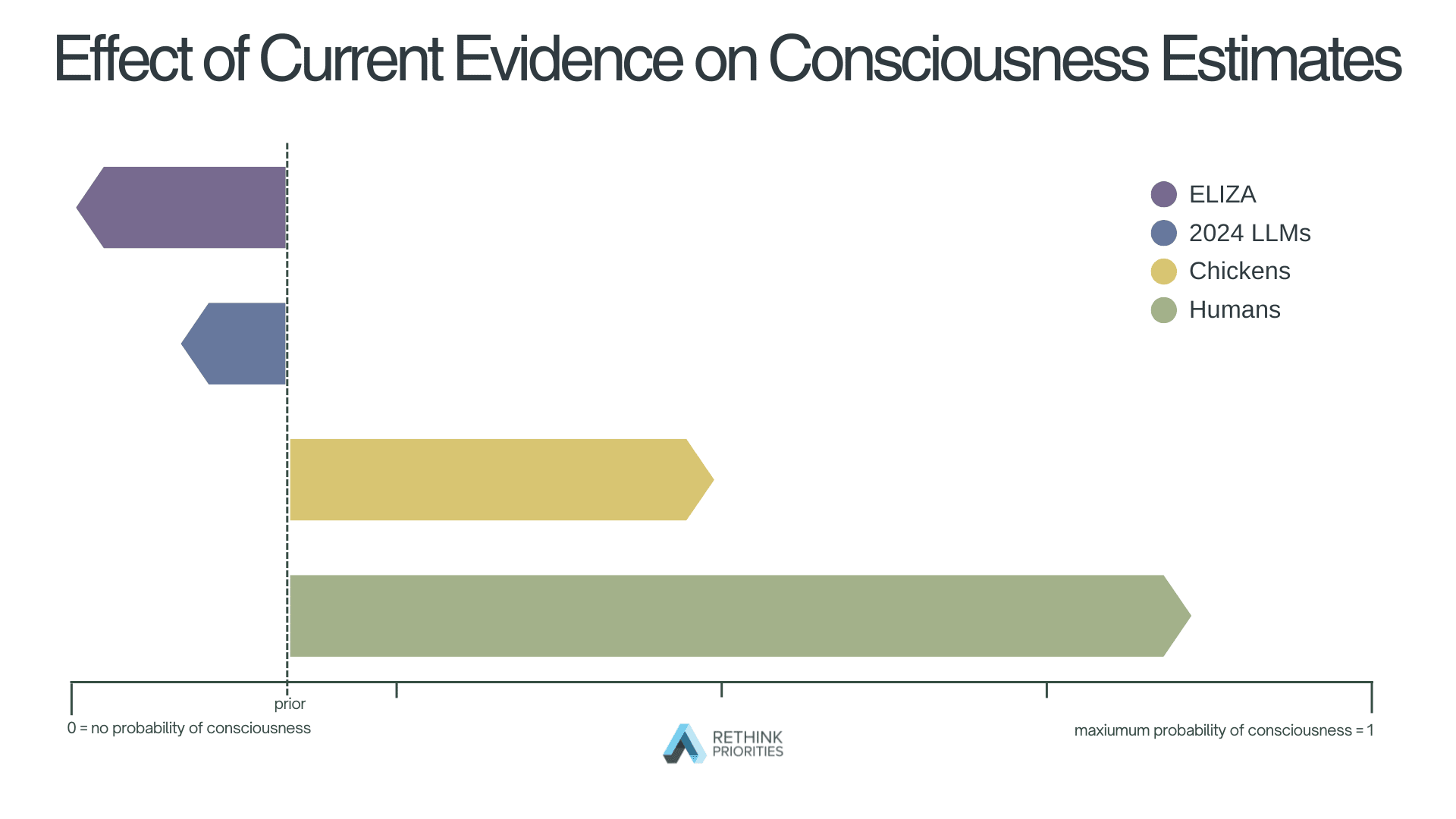

- What the model reliably tells us is how much the evidence should change our minds. We can assess how strong the evidence for or against consciousness is by seeing how much the model’s output differs from the prior probability.

- In order to avoid introducing subjective bias about which systems are conscious and to instead focus just on what the evidence says, we assigned the same prior probability of consciousness (⅙) to each system. By comparing the relative probabilities for different systems, we can evaluate how much stronger or weaker the evidence is for AI consciousness than for more familiar systems like humans or chickens.
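The point about priors can be seen with Bayes' rule in odds form. The sketch below holds a hypothetical Bayes factor fixed and varies only the prior, including the ⅙ prior mentioned above. The Bayes factor is an assumption chosen for illustration, not an output of the model.

```python
def posterior_probability(prior, bayes_factor):
    """Bayes' rule in odds form: posterior odds = Bayes factor * prior odds."""
    prior_odds = prior / (1 - prior)
    posterior_odds = bayes_factor * prior_odds
    return posterior_odds / (1 + posterior_odds)


# Hypothetical strength of evidence (values below 1 count against consciousness).
BAYES_FACTOR = 0.5

for prior in (0.01, 1 / 6, 0.5):
    post = posterior_probability(prior, BAYES_FACTOR)
    print(f"prior {prior:.3f} -> posterior {post:.3f}")
```

The posteriors differ widely across priors, but in every case the odds move by the same factor. That is why the size and direction of the update are more informative than the final number.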

Key findings

With these caveats in place, we can identify some key takeaways from the Digital Consciousness Model:

- The evidence is against 2024 LLMs being conscious. The aggregated evidence favors the hypothesis that 2024 LLMs are not conscious.

- The evidence against 2024 LLMs being conscious is not decisive. While the evidence led us to lower the estimated probability of consciousness in 2024 LLMs, the total strength of the evidence was not overwhelmingly against LLM consciousness. The evidence against LLM consciousness is much weaker than the evidence against consciousness in simpler AI systems.

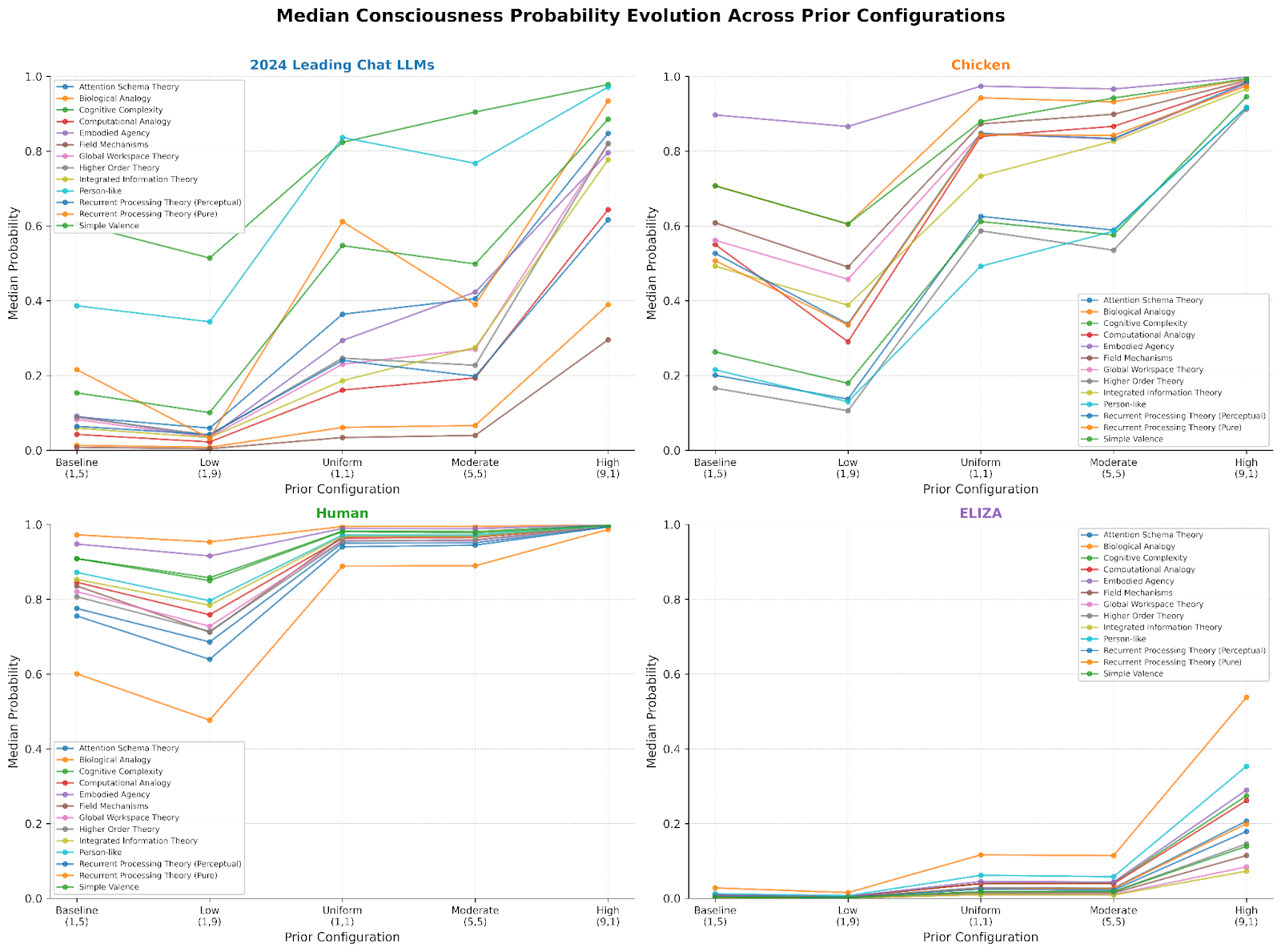

- Different stances (perspectives) make very different predictions about LLM consciousness. Perspectives that focus on cognitive complexity or human-like qualities found decent evidence for AI consciousness. Perspectives that focus on biology or having a body provide strong evidence against it.

- Which theory of consciousness is right matters a lot. Because different stances give strikingly different judgments about the probability of LLM consciousness, significant changes in the weights given to stances will yield significant differences in the results of the Digital Consciousness Model. It will be important to track how scientific and popular consensus about stances change over time and the consequences this will have on our judgments about the probability of consciousness.

- Overall, the evidence for consciousness in chickens was strong, though there was significant diversity across stances. The aggregated evidence strongly supported the conclusion that chickens are conscious. However, some stances that emphasize sophisticated cognitive abilities, like metacognition, assigned low scores to chicken consciousness.

What’s next

The Digital Consciousness Model provides a promising framework for systematically examining the evidence for consciousness in a diverse array of systems. We plan to develop and strengthen it in future work in the following ways:

- Gathering more expert assessments to strengthen our data

- Adding new types of evidence and new perspectives on consciousness

- Applying the model to newer AI systems so we can track changes over time and spot which systems are the strongest candidates for consciousness

- Applying the model to new biological species, allowing us to make more comparisons across systems

Acknowledgments

This report is a project of the AI Cognition Initiative and Rethink Priorities. The authors are Derek Shiller, Hayley Clatterbuck, Laura Duffy, Arvo Muñoz Morán, David Moss, Adrià Moret, and Chris Percy. We are grateful for discussions with and feedback from Jeff Sebo, Bob Fischer, Alex Rand, Oscar Horta, Joe Emerson, Luhan Mikaelson, and audiences at NYU Center for Mind, Ethics, and Policy and the Eleos Conference on AI Consciousness and Welfare. If you like our work, please consider subscribing to our newsletter. You can explore our completed public work here.

Thanks for this work. I find it valuable.

AIs could have negligible welfare (in expectation) even if they are conscious. They may not be sentient even if they are conscious, or have negligible welfare even if they are sentient. I would say the (expected) total welfare of a group (individual welfare times population) matters much more for its moral consideration than the probability of consciousness of its individuals. Do you have any plans to compare the individual (expected hedonistic) welfare of AIs, animals, and humans? You do not mention this in the section "What’s next".

Do you have any ideas for how to decide on the priors for the probability of sentience? I agree decisions about priors are often very arbitrary, and I worry they will have significantly different implications.

I like that you report the results for each perspective. People usually give weights that are at least 0.1/"number of models", which are not much smaller than the uniform weight of 1/"number of models", but this could easily lead to huge mistakes.

As a silly example, if I asked random 7-year-olds whether the gravitational force between 2 objects is proportional to "distance"^-2 (correct answer), "distance"^-20, or "distance"^-200, I imagine a significant fraction would pick the exponents of -20 and -200. Assuming 60 % picked -2, 20 % picked -20, and 20 % picked -200, a respondent may naively conclude the mean exponent of -45.2 (= 0.6*(-2) + 0.2*(-20) + 0.2*(-200)) is reasonable. Alternatively, a respondent may naively conclude an exponent of -9.19 (= 0.933*(-2) + 0.0333*(-20) + 0.0333*(-200)) is reasonable, giving a weight of 3.33 % (= 0.1/3) to each of the 2 wrong exponents, equal to 10 % of the uniform weight, and the remaining weight of 93.3 % (= 1 - 2*0.0333) to the correct exponent. Yet, there is lots of empirical evidence against the exponents of -45.2 and -9.19 which the respondents are not aware of. The right conclusion would be that the respondents have no idea about the right exponent, or how to weight the various models, because they would not be able to adequately justify their picks.

This is also why I am sceptical that the absolute value of the welfare per unit time of animals is bound to be relatively close to that of humans, as one may naively infer from the welfare ranges Rethink Priorities (RP) initially presented, or the ones in Bob Fischer's book about comparing welfare across species, where there seems to be only 1 line about the weights: "We assigned 30 percent credence to the neurophysiological model, 10 percent to the equality model, and 60 percent to the simple additive model".

Mistakes like the one illustrated above happen when the weights of models are guessed independently of their output. People are often sensitive to astronomical outputs, but not to the astronomically low weights they imply. How do you ensure the weights of the models to estimate the probability of consciousness are reasonable, and sensitive to their outputs? I would model the weights of the models as very wide distributions to represent very high model uncertainty.
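The arithmetic in the gravity example can be checked with a few lines of Python. This is only a sketch of the weighted means described in that example; the helper name `weighted_mean` is an assumption. Using the unrounded weight 0.1/3 gives about -9.20 rather than -9.19, because -9.19 comes from the rounded weights 0.933 and 0.0333.

```python
def weighted_mean(values, weights):
    """Mean of `values` weighted by `weights` (weights need not sum to 1)."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


exponents = [-2, -20, -200]

# Weights taken directly from the hypothetical survey shares.
survey_mean = weighted_mean(exponents, [0.6, 0.2, 0.2])

# Each wrong exponent gets 10 % of the uniform weight, i.e. 0.1/3.
floor_weight = 0.1 / 3
floored_mean = weighted_mean(
    exponents, [1 - 2 * floor_weight, floor_weight, floor_weight]
)

print(f"survey-weighted exponent: {survey_mean:.2f}")
print(f"floor-weighted exponent:  {floored_mean:.2f}")
```

Both results are far from the correct exponent of -2, because the extreme outputs dominate the mean even when they get small weights.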

This is an important caveat. While our motivation for looking at consciousness is largely from its relation to moral status, we don't think that establishing that AIs were conscious would entail that they have significant states that counted strongly one way or the other for our treatment of them, and establishing that they weren't conscious wouldn't entail that we should feel free to treat them however we like.

We think that estimates of consciousness still play an important practical role. Work on AI consciousness may help us to achieve consensus on reasonable precautionary measures and motivate future research directions with a more direct upshot. I don't think the results of this model can be directly plugged into any kind of BOTEC, and they should be treated with care.

We favored a 1/6 prior for consciousness relative to every stance and we chose that fairly early in the process. To some extent, you can check the prior against what you update to on the basis of your evidence. Given an assignment of evidence strength and an opinion about what it should say about something that satisfies all of the indicators, you can backwards infer the prior needed to update to the right posterior. That prior is basically implicit in your choices about evidential strength. We didn't explicitly set our prior this way, but we would probably have reconsidered our choice of 1/6 if it was giving really implausible results for humans, chickens, and ELIZA across the board.
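The backwards inference described here can be sketched by inverting Bayes' rule in odds form. The function name and both input numbers are hypothetical, chosen only to show the mechanics. They are not values from the report.

```python
def implied_prior(target_posterior, bayes_factor):
    """Prior that a given Bayes factor would update to the target posterior."""
    posterior_odds = target_posterior / (1 - target_posterior)
    prior_odds = posterior_odds / bayes_factor
    return prior_odds / (1 + prior_odds)


# Hypothetical: a system satisfying every indicator should end up at 0.99,
# and the combined evidence is judged to be worth a Bayes factor of 495.
print(round(implied_prior(0.99, 495), 4))
```

With these made-up inputs the implied prior odds are 99/495 = 0.2, which corresponds to a prior of 1/6. Different judgments about evidential strength would imply a different prior.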

There is a tension here between producing probabilities we think are right and producing probabilities which could reasonably act as a consensus conclusion. I have my own favorite stance, and I think I have good reason for it, but I didn't try to convince anyone to give it more weight in our aggregation. Insofar as we're aiming in the direction of something that could achieve broad agreement, we don't want to give too much weight to our own views (even if we think we're right). Unfortunately, among people with significant expertise in this area, there is broad and fairly fundamental disagreement. We think that it is still valuable to shoot for consensus, even if that means everyone will think it is flawed (by giving too much weight to different stances).

Thanks, Derek.

To clarify, I do not have a view about which models should get more weight. I just think that, when results differ a lot across models, the top priority should be further research to decrease the uncertainty instead of acting based on a consensus view represented by best guesses for the weights of the models.

In particular, I would model the weights of the stances as distributions instead of point estimates. As you note in the report, there was lots of variation across the 13 experts you surveyed.

I wonder what exactly you asked the experts. I think the above would underestimate uncertainty if you just asked them to rate plausibility from 0 to 10, and there were experts reporting 0. Have you considered having a range of possible responses on a logarithmic scale ranging from a weight/probability of e.g. 10^-6 to 1?

Thanks, Vasco. And thanks for helping us think through what we can do better. Some thoughts on this:

We considered several framings, scales, and options to give experts. Since they were evaluating a lot of stances and we wanted experts to really know what we meant, we prioritised giving them context and then asking them the simplified general question of plausibility, with an intuitive scale. The exact question was: 'how plausible do you find X stance?', asked just after fully describing X. We also asked them for general notes and comments, and they didn't seem to find that part of the survey particularly confusing (perhaps to your and my surprise). More broadly, I agree with you that sometimes perfectly defining terms and scales can help some people think through it, but not everyone, and the science on how much it helps is mixed.

We didn't find that people were responding with zero plausibility very much at all. As you can see from the results, almost all respondents found most, if not all, stances at least a little bit plausible. I agree that had we found a lot of concentration around the very high or very low plausibility, having some sort of logarithmic scale could help distinguish results.

I'm not sure what you have in mind in terms of modelling the stances' weights as distributions instead of point estimates. Perhaps you mean something like leveraging those distributions above via some sort of Monte Carlo where weights are drawn from these distributions and the process is repeated many times, then aggregated. That indeed sounds more sophisticated and could possibly help track uncertainty, but I suspect it would make very little difference. In particular, I think so because we observed that unweighted pooling of results across all stances is surprisingly similar to the pool when weighted by experts; the same if you squint.

Thanks for clarifying, Arvo.

I wonder how people decided between a plausibility of 0/10 and 1/10. It could be that people picked 0 for a plausibility lower than 0.5/10, or that they interpreted it as almost impossible, and therefore sometimes picked 1/10 even for a plausibility lower than 0.5/10. A logarithmic scale would allow experts to specify plausibilities much lower than 1/10 (e.g. 10^-6/10) without having to pick 0, although I do not know whether they would actually pick such values.

Yes, this is what I had in mind. Denoting by W_i and P_i the distributions for the weight and probability of consciousness for stance i, I would calculate the final distribution for the probability of consciousness from (W_1*P_1 + W_2*P_2 + ... + W_13*P_13)/(W_1 + W_2 + ... + W_13).

I think the mean of the final distribution for the probability of consciousness would be very similar. However, the final distribution would be more spread out. I do not know how much more spread out it would be, but I agree it would help track uncertainty better.
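A minimal Monte Carlo sketch of this idea, using only Python's standard library, might look like the following. The choice of uniform distributions for the weights and beta distributions for the per-stance probabilities is an assumption, as are the three example stances and all of their parameters. A real analysis would use the 13 stances and distributions fitted to the survey and model outputs.

```python
import random
import statistics


def simulate_pooled_probability(stances, draws=10_000, seed=0):
    """Sample (sum of W_i * P_i) / (sum of W_i) with W_i and P_i drawn at random.

    `stances` is a list of ((w_low, w_high), (alpha, beta)) pairs: the weight is
    drawn uniformly from [w_low, w_high] and the probability from Beta(alpha, beta).
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(draws):
        numerator = 0.0
        denominator = 0.0
        for (w_low, w_high), (alpha, beta) in stances:
            weight = rng.uniform(w_low, w_high)
            probability = rng.betavariate(alpha, beta)
            numerator += weight * probability
            denominator += weight
        samples.append(numerator / denominator)
    return samples


# Hypothetical stances; weight ranges start above 0 so the denominator is positive.
STANCES = [
    ((1.0, 9.0), (2.0, 8.0)),
    ((0.5, 6.0), (1.0, 20.0)),
    ((2.0, 7.0), (3.0, 12.0)),
]

samples = sorted(simulate_pooled_probability(STANCES))
print(f"mean: {statistics.mean(samples):.3f}")
print(f"5th to 95th percentile: {samples[len(samples) // 20]:.3f} "
      f"to {samples[-len(samples) // 20]:.3f}")
```

Comparing the spread of these samples with the spread obtained when the weights are fixed at point values would show how much extra uncertainty the weight distributions add.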

Nice! It strikes me that in figure 1, information is propagating upward, from indicator to feature to stance to overall probability, and so the arrows should also be pointing upward.

I think the view (stance?) I am most sympathetic to is that all our current theories of consciousness aren't much good, so we shouldn't update very far away from our prior, but that picking a prior is quite subjective, and so it is hard to make collective progress on this when different people might just have quite different priors for P(current AI consciousness).

Hi Oscar, thanks. Yes! Indicator evidence is inserted at the bottom and flows upward. In the diagram, priors flow down to set expectations, so it's a common convention to draw the arrows that way. Informally, you can see it as each node splitting into subnodes, but the arrows aren't meant to imply that information doesn't travel up.

On to your main point: there's no question that this is a preparadigmatic field where progress and consensus are difficult to find, and rightly so given the state of the evidence.

A few thoughts on why pursue this research now despite the uncertainty:

First, we want a framework in place that can help transition towards a more paradigmatic science of digital minds as the field progresses. Even if you're sceptical about current reliability, we think that having a model that can modularly incorporate new evidence and judgements could serve as valuable infrastructure for organising future findings.

Second, whilst which theory is true remains uncertain, we found that operationalising specific stances was often less fuzzy than expected. Many theories make fairly specific predictions about conscious versus non-conscious systems, giving us firmer ground for stance-by-stance analysis. (Though you might still disagree with any given stance, of course!)

Your concern about theory quality might itself be worth modelling as a stance -- we thought of something like "Stance X" but it could be "Theoretical Scepticism" -- where all features provide very weak support. That would yield small updates from the prior regardless of the system. Already, you can see the uncertainty playing out in the model: notice how wide the bands are for chickens vs humans (Figure 3). Several theories disagree substantially about animal consciousness, which arguably reflects concerns about theory quality.

That said, we're deliberately cautious about making pronouncements on consciousness probability or evidence strength. We see this as a promising way to start characterising these values rather than offering definitive answers.

(Oh, and regarding priors: setting them is hard, and robustness is important. It might be helpful to see Appendix E and Figures 9-11. The key finding is that while absolute posteriors are highly prior-dependent (as you'd expect), the comparative results and direction of updating are pretty robust across priors.)

Yep, that all makes sense, and I think this work can still tell us something; it just doesn't update me too much given the lack of compelling theories or much consensus in the scientific/philosophical community. This is harsher than what I actually think, but directionally, it has the feel of 'cargo cult science': it has a fancy Bayesian model and lots of numbers and so forth, but if it is all built on top of philosophical stances I don't trust, then it doesn't move me much. But that said, it is still interesting, e.g. how wide the range for chickens is.

Executive summary: This report presents the Digital Consciousness Model, a probabilistic framework combining multiple theories of consciousness, and concludes that current (2024) large language models are unlikely to be conscious, though the evidence against consciousness is limited and highly sensitive to theoretical assumptions.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

What might be interesting in future editions is to note the rate of change (assuming it exists and is >0) over each generation of LLMs, given the truism that their capabilities, and thus their cognitive complexity, which at least some models favor as relevant, are increasing quite rapidly. I find these questions quite important and I hope that we can continue to interrogate and refine these and other methodologies for answering them.

Hi Andrew, I definitely agree. Tracking how these systems evolve over time — both what the models reveal about their changes and how we can formulate projections based on those insights — has been one of our main motivations for this work. We're in fact hoping to gather data on newer systems and develop some more informed forecasts based on what we find.